Running head: SPEECH RECOGNITION: A LOOK BEHIND THE MECHANICS

Speech Recognition: A Look behind the Mechanics

Anthony Owen Francis Moon

Waxahachie Global High

Table of Contents

Abstract3

Introduction..4

How Speech Recognition is Used4

Small Vocabulary.4

Large Vocabulary.4

What Makes the Magic........................5

A Quick Lesson in Physics..5

Translating the Wave........5

Reading the Wave6

Ideal Circumstances for Use....7

Speech Recognition Training...7

Troubleshooting...8

References9

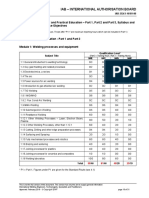

Appendix A ...10

Abstract

Speech recognition is a useful tool that few of its users understand. Many processes go into even the simplest command or answer. The word "yes" is analyzed millions of times, all within a few seconds, just to produce three letters and move on. Programmers have worked for decades to perfect these systems and to tailor programs to individuals' voices. Today, programs have vocabularies of over 60,000 words in English alone, and those words can be understood when spoken by almost anyone fluent in English. Programs have also been built to recognize the speech patterns that are shared among, or unique to, individuals.

Keywords: Speech Recognition Engine, Wave, Samples, Frequency, Noise, Amplitude, Phonemes, Plosive Consonant, Normalize, ADC, Statistical Modeling, Pitch

Speech Recognition: A Look behind the Mechanics

Introduction

In 1969, a program was created that could recognize and respond to human speech (Grabianowski, 2014). This was a huge mountain to climb, because all humans have different voices, accents, and tones. Since then, there have been many versions of speech recognition programs, each more complex and useful than the one before. Today, we can press a button and ask for directions, send a text message, or find an answer on the internet. For a more detailed description of the history of speech recognition, see Appendix A1.

How Speech Recognition is Used

There are two categories of speech recognition engines: those with a small vocabulary, which can be used by almost anyone, and those with a large vocabulary, which can be used by only a few people who sound similar.

Small Vocabulary

Engines with a small vocabulary are used today in systems that have very few functions, such as a customer service menu or a car. When a user calls customer service, a machine will sometimes ask which department the user wants to reach. For example, it will prompt, "Are you trying to reach the refund department?" The user then says either "yes" or "no." The engine doesn't need many words in its vocabulary other than "yes," "no," or "next." Because people have different accents and voices, the computer might hear something that sounds like "new" but calculate that the word is closer to "no" than to "yes." Some cars are equipped with speech recognition engines too. The driver might want the car to start playing music. That car still has a very small vocabulary, e.g., "next," "skip," "pause," "stop," and "lower." With a small vocabulary, the computer doesn't have to recognize specific words, only words close to its preset commands (Puthiyedath, 2014).
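The idea of picking the closest preset command can be sketched in a few lines of Python. This is only an illustration under loud assumptions: a real engine compares sounds, not spelling, so the text similarity score from Python's standard `difflib` module stands in for acoustic similarity here, and the function name `closest_command` is hypothetical.

```python
from difflib import SequenceMatcher


def closest_command(heard: str, commands: list[str]) -> str:
    """Return the preset command whose spelling is most similar to what was heard.

    Assumption: spelling similarity is a crude stand-in for how similar
    two words sound; a real engine would compare audio features.
    """
    return max(commands, key=lambda c: SequenceMatcher(None, heard, c).ratio())


# Customer service menu: the caller's "no" comes through sounding like "new".
print(closest_command("new", ["yes", "no"]))  # no

# Car stereo: a handful of preset commands.
print(closest_command("paws", ["next", "skip", "pause", "stop", "lower"]))
```

Because the engine only has to choose among a few commands, it never needs to decide whether "new" is a real word; it only needs to rank the presets.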

Large Vocabulary

There are also significantly more complicated engines with an enormous vocabulary. However, these engines need to be tailored to the user's unique voice. Siri, the iPhone's speech recognition engine, can write text messages, search for answers on the internet, and much more. To do so, Siri needs to recognize almost any word in the English language. That poses a problem: did the user say "car keys" or "khakis"? To tell the difference, the computer needs to be trained to the user's specific voice and accent. How does it do that? To answer this, we must dissect the process of speech recognition.

What Makes the Magic

A Quick Lesson in Physics

Every sound wave can be described by two properties: amplitude and wavelength. The best way to picture a sound wave is with an earthquake needle (the pen of a seismograph). Normally the needle sits almost dormant, moving slightly from side to side at a very slow pace. During an earthquake, the needle moves rapidly from one side of the page to the other. This is essentially what happens to the air particles when a person speaks. The amplitude is how far the wave moves; the earthquake needle shows amplitude by how far it swings left and right. For a sound wave, amplitude measures volume (Sound Waves and Music, 2014).

Translating the Wave

Wavelength determines frequency: the shorter the wavelength, the higher the frequency. On the earthquake needle, frequency is represented by how fast the needle moves back and forth. On a sound wave, frequency is the pitch of the voice. The closer together the waves, the higher the pitch of the sound. Men generally speak at lower frequencies (waves that are farther apart) than women because they have deeper voices.
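The link between pitch and wave spacing follows from the relation wavelength = speed of sound / frequency. The short sketch below works it out for two voices. The speed of sound (343 m/s, roughly dry air at room temperature) and the two example pitches are illustrative assumptions, not figures from the sources cited here.

```python
SPEED_OF_SOUND_M_PER_S = 343.0  # assumed: dry air at about 20 degrees Celsius


def wavelength_m(frequency_hz: float) -> float:
    """Wavelength in meters of a sound wave with the given frequency."""
    return SPEED_OF_SOUND_M_PER_S / frequency_hz


# Illustrative pitches only; real voices vary widely.
for label, frequency_hz in [("lower-pitched voice", 120.0),
                            ("higher-pitched voice", 210.0)]:
    print(f"{label}: {frequency_hz:.0f} Hz -> {wavelength_m(frequency_hz):.2f} m")
```

The lower pitch yields the longer wavelength, which is what "waves that are farther apart" means.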

When you speak, you disturb the air molecules around your mouth, sending a wave of energy in every direction. A microphone picks up the sound wave and sends the signal to the analog-to-digital converter (ADC). The ADC translates the sound wave, which arrives as an analog signal, into binary code (a digital signal). The computer can now read the frequencies of the sound wave.
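What an ADC does can be imitated in software: measure the wave at regular intervals (sampling) and round each measurement to one of a fixed number of levels (quantizing). The sketch below is a simplified model, not a description of any particular converter; the pure sine tone, the 8,000 samples per second, and the 8-bit depth are all assumed values.

```python
import math


def sample_and_quantize(frequency_hz: float, duration_s: float,
                        sample_rate_hz: int = 8000, bits: int = 8) -> list[int]:
    """Turn a pure tone into a list of integer codes, as a toy ADC would."""
    levels = 2 ** bits
    count = round(duration_s * sample_rate_hz)
    codes = []
    for n in range(count):
        t = n / sample_rate_hz
        # "Analog" value of the wave at time t, between -1.0 and 1.0.
        value = math.sin(2 * math.pi * frequency_hz * t)
        # Map the range [-1, 1] onto the integer codes 0 .. levels - 1.
        codes.append(round((value + 1) / 2 * (levels - 1)))
    return codes


codes = sample_and_quantize(200.0, 0.001)
# Each code printed as the binary number the computer would store.
print([format(code, "08b") for code in codes])
```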

When the ADC sends the digital signal to the computer's sound card, it sends exactly what the microphone heard: what you said plus all the other noise in the area. The sound card separates what you said from the excess noise. It does this by looking for waves that have low amplitudes and frequencies that do not match the frequencies of your voice. Your voice has a range of frequencies that make up what you say. The computer treats anything outside this range as excess noise and removes it from the sound file (H. V., 2014).
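As a rough sketch of this filtering step, suppose the recording has already been broken down into its component waves, each with a frequency and an amplitude. The voice band limits and the amplitude floor below are assumed values chosen for illustration, and treating either condition as enough to discard a component is one possible reading of the description above.

```python
from dataclasses import dataclass


@dataclass
class Component:
    frequency_hz: float
    amplitude: float


VOICE_BAND_HZ = (80.0, 4000.0)  # assumed range of the speaker's voice
MIN_AMPLITUDE = 0.05            # assumed floor; quieter components count as noise


def remove_noise(components: list[Component]) -> list[Component]:
    """Keep only components that are loud enough and inside the voice band."""
    low, high = VOICE_BAND_HZ
    return [c for c in components
            if low <= c.frequency_hz <= high and c.amplitude >= MIN_AMPLITUDE]


recording = [
    Component(200.0, 0.80),    # voice
    Component(1500.0, 0.40),   # voice
    Component(50.0, 0.30),     # low hum, below the voice band
    Component(9000.0, 0.20),   # hiss, above the voice band
    Component(600.0, 0.01),    # in band, but too quiet
]
print(remove_noise(recording))
```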

The computer then normalizes the sound file. This means taking what is left of the sound file and making all the amplitudes the same. The computer analyzes the sound file, finds the average amplitude, and adjusts each part of the wave to match that average. When a person asks a question aloud, he or she often places emphasis on the last word. Normalizing reduces this kind of emphasis, which would otherwise make the sound file harder for the computer to understand (H. V., 2014).
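Normalizing to the average amplitude can be sketched as follows. The sketch assumes the sound file has already been cut into short, non-empty pieces ("frames"), measures each frame's loudness by its peak value, and scales every frame to the average peak. Real systems may measure loudness differently; the function name and the sample values are hypothetical.

```python
def normalize(frames: list[list[float]]) -> list[list[float]]:
    """Scale every non-silent frame so its peak equals the average peak."""
    peaks = [max(abs(sample) for sample in frame) for frame in frames]
    voiced_peaks = [peak for peak in peaks if peak > 0]
    if not voiced_peaks:
        return [list(frame) for frame in frames]  # nothing but silence
    target = sum(voiced_peaks) / len(voiced_peaks)
    normalized = []
    for frame, peak in zip(frames, peaks):
        gain = target / peak if peak > 0 else 1.0  # leave silent frames alone
        normalized.append([sample * gain for sample in frame])
    return normalized


# A quiet frame, an emphasized frame, and a silent frame.
frames = [[0.1, -0.2], [0.5, -1.0], [0.0, 0.0]]
for frame in normalize(frames):
    print([round(sample, 3) for sample in frame])
```

After this step the quiet frame and the emphasized frame reach the same peak, so emphasis no longer changes how the wave looks to the later stages.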

Reading the Wave

After the sound file is normalized, it is broken up into pieces less than a hundredth of a second long. The segments are matched to phonemes. Phonemes are very slight differences in sound that can change the entire meaning of a word, such as the difference between the word "pub" and the word "pug." The two words are pronounced almost the same way ("p-uh-buh" and "p-uh-guh"), so the segments are kept small to make sure the sound card can capture the difference properly (How speech recognition works, 2001).
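Cutting a recording into such short pieces is simple to sketch. The 8-millisecond frame length below is an assumed value picked only because it is under a hundredth of a second, and the 16,000 samples per second is likewise an assumption.

```python
def split_into_frames(samples: list[float], sample_rate_hz: int,
                      frame_ms: int = 8) -> list[list[float]]:
    """Cut a list of samples into consecutive frames of frame_ms milliseconds."""
    frame_length = max(1, sample_rate_hz * frame_ms // 1000)
    return [samples[start:start + frame_length]
            for start in range(0, len(samples), frame_length)]


one_second = [0.0] * 16000  # one second of (silent) audio at 16,000 samples/s
frames = split_into_frames(one_second, sample_rate_hz=16000)
print(len(frames), "frames of", len(frames[0]), "samples each")
```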

After the phonemes are processed, they are put together to form plosive consonant sounds. These are essentially the pronunciations of individual letters, and they are what the sound card matches to the right letter or letters. For example, the sound "ch" needs to be matched to two letters, "c" and "h" (How speech recognition works, 2001).

After the plosive consonants are matched, the computer uses a system called statistical modeling. It puts all the sounds together and tries to work out mathematically what you are saying based on the sounds and on the spaces between them. Since the sound file is broken into thousands and thousands of parts, the computer can see where your speech breaks. If there are many intervals in a row with no sound, the computer concludes that you have ended your word or sentence.
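Spotting breaks in speech can be sketched by scanning the loudness of each short segment and looking for long enough runs of near-silence. The silence threshold and the minimum run length below are assumed values; a real engine would tune them and would likely use a statistical model rather than fixed cutoffs.

```python
def find_breaks(frame_levels: list[float], silence_level: float = 0.02,
                min_silent_frames: int = 3) -> list[tuple[int, int]]:
    """Return (start, end) index pairs for each run of quiet frames long
    enough to count as a break; end is one past the last quiet frame."""
    breaks = []
    run_start = None
    for index, level in enumerate(frame_levels):
        if level < silence_level:
            if run_start is None:
                run_start = index  # a quiet run begins here
        else:
            if run_start is not None and index - run_start >= min_silent_frames:
                breaks.append((run_start, index))
            run_start = None
    # A quiet run that lasts until the end of the recording also counts.
    if run_start is not None and len(frame_levels) - run_start >= min_silent_frames:
        breaks.append((run_start, len(frame_levels)))
    return breaks


levels = [0.5, 0.4, 0.0, 0.0, 0.0, 0.6, 0.0, 0.3, 0.0, 0.0, 0.0, 0.0]
print(find_breaks(levels))  # [(2, 5), (8, 12)]
```

The single quiet frame at index 6 is ignored because it is too short to be a pause between words.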

By taking the plosive consonant sounds in chronological order, the computer can figure out what word you are saying by process of elimination. It takes the first sound, finds all the words in its vocabulary that start with that sound, and eliminates all the words that don't. It then repeats this step with each following sound until it is left with either one word or no sounds remaining in the word. This is by far the most complicated part of the speech recognition process, and it is also where most of the errors come from.
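The process of elimination described above can be sketched with a tiny, made-up vocabulary in which each word is stored as its sequence of sounds. The four words and the sound labels ("p", "uh", "b", and so on) are hypothetical, and a real engine would weigh probabilities rather than demand exact matches.

```python
# Hypothetical vocabulary: each word mapped to the sounds that make it up.
VOCABULARY = {
    "pub": ["p", "uh", "b"],
    "pug": ["p", "uh", "g"],
    "pun": ["p", "uh", "n"],
    "bug": ["b", "uh", "g"],
}


def recognize(sounds: list[str],
              vocabulary: dict[str, list[str]] = VOCABULARY) -> list[str]:
    """Eliminate words sound by sound; return the words still possible."""
    candidates = set(vocabulary)
    for position, sound in enumerate(sounds):
        candidates = {
            word for word in candidates
            if len(vocabulary[word]) > position
            and vocabulary[word][position] == sound
        }
        if len(candidates) <= 1:
            break  # one word (or none) is left, so stop early
    return sorted(candidates)


print(recognize(["p", "uh", "g"]))  # ['pug']
print(recognize(["p", "uh"]))       # ['pub', 'pug', 'pun']
```

The second call shows why errors arise: if the final sound is missed, three words remain equally possible.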

Why is this so complicated? If a program has a vocabulary of 60,000 words (common in today's programs), a sequence of three words could be any of 216 trillion possibilities. Obviously, even the most powerful computers can't search through all of them without help (Grofolo, personal communication).
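The 216 trillion figure is simply the vocabulary size multiplied by itself once for each word in the sequence, which is quick to check. The function name below is hypothetical.

```python
def count_sequences(vocabulary_size: int, sequence_length: int) -> int:
    """Number of possible word sequences when any word can fill any slot."""
    return vocabulary_size ** sequence_length


possibilities = count_sequences(60_000, 3)
print(f"{possibilities:,}")  # 216,000,000,000,000
```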

Ideal Circumstances for Use

Speech Recognition Training

With large-vocabulary speech engines, the program needs to be able to recognize your voice specifically. Many programmers spend hours upon hours simply talking to their program. This is a kind of training that helps the program better understand the sounds and which letters go where. Sometimes a program will ask the user to do some preliminary talking. This simply means saying a few words so the program can recognize the user's voice more accurately.

Troubleshooting

When a program does not recognize the user's voice accurately, there could be one or more of several problems. If the microphone is of low quality, it might not be picking up phonemes properly, or at all. If the computer's sound card is corrupted or dirty, it will add extra noise to the sound file, leaving the noise-cancelling system unable to separate the user's voice from the noise. If there is a lot of noise around the user, his or her voice might be too drowned out for the noise-cancelling system to isolate. Finally, if the program is not accustomed to the user's voice, it might be searching for patterns that his or her voice doesn't have.

References

Grabianowski, E. (n.d.). Speech recognition and statistical modeling: How stuff works. Retrieved October 28, 2014, from http://electronics.howstuffworks.com/gadgets/high-techgadgets/speech-recognition2.htm

How speech recognition works. (2001, July 6). Retrieved November 12, 2014, from http://project.uet.itgo.com/speech.htm

H., V. (2014). The secret of Google's amazing voice recognition revealed: It works like a brain. Retrieved November 12, 2014, from http://www.phonearena.com/news/The-secret-ofGoogles-amazing-voice-recognition-revealed-it-works-like-a0brain_id39938

Puthiyedath, S. (2014). How speech recognition works. Retrieved November 12, 2014, from http://www.codeguru.com/cpp/g-m/multimedia/audio/article.php/c12363/How-SpeechRecognition-Works.htm

Sound Waves and Music. (2014). Retrieved November 12, 2014, from http://www.physicsclassroom.com/class/sound

Woodford, C. (2006, January 1). Voice recognition software. Retrieved November 12, 2014, from http://www.explainthatstuff.com/voicerecognition.htm
