This full text paper was peer reviewed at the direction of IEEE Communications Society subject matter experts for publication in the IEEE ICC 2009 proceedings

Concurrent Heap-based Network Sort Engine

-Toward enabling massive and high speed per-flow queuing

Muneyoshi Suzuki and Katsuya Minami
NTT Access Network Service Systems Laboratories, NTT Corporation
{suzuki.muneyoshi, minami.katsuya}@lab.ntt.co.jp

Abstract: A Network Sort Engine (NSE) that rapidly identifies the highest priority among numerous priorities is indispensable for per-flow queuing that supports a massive number of queues on high-speed communications lines. This is because the bottleneck in per-flow queuing is the process of selecting, from the queues that are ready to emit frames, the single queue that emits the next frame; this process reduces to the sorting problem of identifying the highest priority. A Concurrent Heap that parallelizes each layer of a binary tree has therefore been developed as a method of implementing a massive and high-speed NSE. Since it does not essentially modify the conventional Heap algorithms, it can work at high speed owing to its lightweight memory management, and it also ensures the worst-case runtime. The results of implementing the Concurrent Heap on an FPGA indicate that the required resources are very small, so it could be implemented, in practice, in a small FPGA, and the warranted runtime indicates that over 8,000 per-flow queues for a 10GbE LAN-PHY could work at the wire rate with successive minimum-length frames.

I. INTRODUCTION

Queuing is the most fundamental mechanism in communication networks. This is because per-flow queuing in communications lines enables the quality of services to be controlled based on a service discipline, with a segregation process that stores an incoming frame in a queue based on the flow* information in the frame and a selection process that identifies the single queue that emits a frame. However, supporting per-flow queuing with a massive number of queues or on high-speed communications lines is impractical; thus, its scalability is limited. This applies to shaping queues and timestamp-tagging queues (e.g., WFQ, Virtual Clock, and Jitter-EDD), which assign a timing tag to an incoming frame and then extract frames from the queues in timing order, as well as to basic queues such as priority queues and round-robin scheduled queues.

The cause of the scalability problem is the process of selecting the single queue that emits a frame from the queues that are ready to emit frames. A priority queue needs to identify the highest priority among the prioritized queues that are ready to emit frames. In a round-robin scheduled queue, the queue next to the latest queue that emitted a frame has to be selected from the queues that are ready to emit frames. Shaping queues and timestamp-tagging queues must identify the earliest timing tag. For example, in a 10 Gbps Ethernet (10GbE) LAN-PHY, the time to transmit the minimum-length frame is just 67.2 ns, and per-flow queuing that works at the wire rate must identify the single queue that emits a frame within this short interval.
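The 67.2 ns figure can be checked with a short calculation. The sketch below assumes the usual Ethernet accounting for a minimum-length frame on the wire, namely a 64-octet frame, an 8-octet preamble with start frame delimiter, and a 12-octet inter-frame gap, at a data rate of 10 Gbps. These overheads are not stated in the text and are assumptions of this example, and the function name is illustrative.

```python
from fractions import Fraction

# Assumed per-frame octet counts on the wire (not given in the text).
MIN_FRAME_OCTETS = 64
PREAMBLE_SFD_OCTETS = 8
INTER_FRAME_GAP_OCTETS = 12


def min_frame_time_ns(data_rate_bps):
    """Time slot occupied by one minimum-length frame, in nanoseconds."""
    octets = MIN_FRAME_OCTETS + PREAMBLE_SFD_OCTETS + INTER_FRAME_GAP_OCTETS
    bits = 8 * octets
    # Exact rational arithmetic avoids floating-point rounding in the check.
    return Fraction(bits * 10**9, data_rate_bps)


slot = min_frame_time_ns(10 * 10**9)
print(float(slot))  # 67.2
```

Under these assumptions, one queue selection per 67.2 ns corresponds to roughly 14.88 million selections per second.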

Therefore, the difficulty of implementing per-flow queuing grows in proportion to the number of queues and the communications line speed. Since the sequence of a round-robin scheduled queue and timing tags can both be regarded as priorities, all these problems can be considered as a sorting issue in the broad sense, where the highest priority is identified from numerous priorities. That is, to enable massive and high-speed per-flow queuing, a function that rapidly sorts numerous priorities is indispensable.

The Network Sort Engine (NSE) is a mechanism that identifies the highest priority from given priorities. Two operations are defined for it: an insertion operation that inputs a priority and a deletion operation that outputs the highest priority. That is, a priority is deleted from the NSE based on a highest-in-first-out discipline. The difference between an NSE and a conventional sorter is that the former identifies only the highest priority, while the latter sorts all priorities into order.

Although there are various hardware-based NSE implementations, limited support for massive priorities is achieved with tree-structure-based implementations such as the binary-tree sorter and the Heap. However, as the processing of a binary-tree sorter is essentially sequential, because it consists of priorities and comparators that correspond to the leaves and nodes of a tree, it is difficult to produce a parallelized or pipelined binary-tree sorter for high-speed implementations. Although the Pipelined Heap was proposed to enable queuing for high-speed networks [3], [4], these proposals essentially modified the conventional Heap algorithms for pipeline operation, so complicated memory management was required. The additional resources needed for this memory management lengthen the signal-delay time in logic circuits after place and route; thus, this approach does not meet current requirements for high-speed logic implementations.

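The highest-in-first-out behaviour of an NSE can be illustrated in software. The following is a minimal sketch and not the hardware mechanism discussed in this paper: it uses Python's standard heapq module as a stand-in sorter, assumes that a smaller value means a higher priority, and uses hypothetical names (SoftwareNSE, insert, delete) and made-up timing tags.

```python
import heapq


class SoftwareNSE:
    """Software stand-in for an NSE; a smaller value has precedence."""

    def __init__(self):
        self._entries = []

    def insert(self, priority, queue_id):
        # Insertion operation: input a priority (here tagged with its queue).
        heapq.heappush(self._entries, (priority, queue_id))

    def delete(self):
        # Deletion operation: output the highest priority currently held.
        return heapq.heappop(self._entries)

    def __len__(self):
        return len(self._entries)


nse = SoftwareNSE()
for timing_tag, queue_id in [(30, "q2"), (10, "q7"), (20, "q1")]:
    nse.insert(timing_tag, queue_id)

order = [nse.delete() for _ in range(len(nse))]
print(order)  # [(10, 'q7'), (20, 'q1'), (30, 'q2')]
```

Each deletion names the queue that should emit the next frame, which is the selection process that per-flow queuing has to complete within one frame time.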
To enable massive and high-speed per-flow queuing, this paper clarifies the principles, method of implementation, and evaluation results for the Concurrent Heap we developed that parallelizes the conventional Heap to support NSE. Section II explains the principles underlying the conventional Heap while Section III clarifies the principles underlying the developed Concurrent Heap. Section IV describes an implementation of the Concurrent Heap and Section V presents the evaluation results.

In the field of algorithmic theory, these kinds of data structures and procedures are termed priority queues, event queues, or dictionary machines [2]. However, in communications technology, a queuing mechanism that outputs a frame from the highest prioritized queue is termed a priority queue. To avoid confusion, the former is termed NSE in this paper.

Although it is technically possible to implement a massive NSE with a specially structured CAM, this has not yet appeared on the market.

* Connectivity or connection in a network, such as a VLAN, session, and association, identified with a combination of addresses and identifiers in a frame.

978-1-4244-3435-0/09/$25.00 2009 IEEE


II. PRINCIPLES UNDERLYING CONVENTIONAL HEAP [1]

A. Data Structure for Heap

A Heap consists of a Heap structure, which is a kind of binary-tree data structure, and algorithms that input and output priorities to/from it. An example of a Heap structure is shown in Fig. 2-1, where a smaller priority value has precedence.

Fig. 2-1. Example Heap structure.

In the figure, the nodes indicate priorities and the links indicate the parent-child relationships between priorities. As shown in the figure, each priority has at most two children, which are below it. Each priority in a Heap structure is placed so that a parent is prior to each of its children. Therefore, the uppermost node has the highest priority. Note that if a parent has two children, the order of priorities between the children is undefined.

If a Heap structure is implemented with an array, a priority is identified with an address. As shown in Fig. 2-1, the address of the uppermost priority in a Heap structure is assigned 1, and the remaining addresses are sequentially assigned top-to-bottom and left-to-right. Here, address 12 identifies the rightmost priority and 13 identifies the leftmost empty entry. As shown in Fig. 2-1, priorities are densely packed from the left in an array-based Heap structure. The insertion operation that inputs a priority to an array-based Heap structure and the deletion operation that outputs the highest priority both replace priorities while retaining the constraints that a parent is prior to each child and that priorities are densely packed from the left.

B. Insertion Operation to Heap

The insertion operation to a Heap is achieved by searching for an insertion address (position) for the priority to be inserted. The basic algorithm is given below.

a) Assign the leftmost empty entry to the initial insertion position.
b) If the priority to be inserted is not prior to the priority in the parent of the insertion position, or if the insertion position is the uppermost priority, move the priority to be inserted to the insertion position, then stop.
c) Otherwise, move the priority in the parent of the insertion position to the insertion position, then update the insertion position to the parent.
d) Repeat b) and c) until they stop.

Fig. 2-2 outlines an example of an insertion operation that inputs priority 7 to the Heap structure given in Fig. 2-1.

Fig. 2-2. Example insertion operation.

C. Deletion Operation from Heap

The deletion operation from a Heap is achieved by removing the uppermost and rightmost priorities, then searching for an insertion address (position) for the latter. The basic algorithm is given below.

a) Remove the uppermost priority; if the number of priorities in the Heap is decreased to 0, then stop.
b) Remove the rightmost priority, then assign it to the priority to be inserted.
c) Assign the position of the uppermost priority to the initial insertion position.
d) If the priority to be inserted is prior to all the children of the insertion position, or the insertion position has no children, move the priority to be inserted to the insertion position, then stop.
e) Otherwise, move the child (the prior child if there are two children) to the insertion position, then update the insertion position to the child.
f) Repeat d) and e) until they stop.

Fig. 2-3 shows an example of a deletion operation that outputs the highest priority from the Heap structure given in Fig. 2-1.

Fig. 2-3. Example deletion operation.
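The two sequential algorithms above can be sketched as follows. This is an illustrative software model under the stated conventions (addresses start at 1, a smaller value is prior); the class name ArrayHeap and the sample values are assumptions of this example and are not taken from the figures.

```python
class ArrayHeap:
    """Array-based Heap; address 1 is the uppermost priority."""

    def __init__(self):
        self.a = [None]  # index 0 is unused so that addresses start at 1

    def insert(self, x):
        self.a.append(None)          # a) start at the leftmost empty entry
        pos = len(self.a) - 1
        # b) stop at the uppermost position or when x is not prior to the parent
        while pos > 1 and x < self.a[pos // 2]:
            self.a[pos] = self.a[pos // 2]   # c) move the parent down
            pos //= 2
        self.a[pos] = x

    def delete(self):
        if len(self.a) == 1:
            raise IndexError("delete from an empty Heap")
        top = self.a[1]              # a) remove the uppermost priority
        x = self.a.pop()             # b) remove the rightmost priority
        n = len(self.a) - 1
        if n == 0:
            return top               # the Heap is now empty
        pos = 1                      # c) start at the uppermost position
        while 2 * pos <= n:
            child = 2 * pos
            if child + 1 <= n and self.a[child + 1] < self.a[child]:
                child += 1           # choose the prior child
            if x < self.a[child]:    # d) x is prior to all children
                break
            self.a[pos] = self.a[child]   # e) move the prior child up
            pos = child
        self.a[pos] = x
        return top


heap = ArrayHeap()
for value in [5, 3, 8, 1, 9, 2, 7]:
    heap.insert(value)

drained = [heap.delete() for _ in range(7)]
print(drained)  # [1, 2, 3, 5, 7, 8, 9]
```

Both loops walk a single root-to-leaf path, so the number of comparisons and movements is bounded by the depth of the tree.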

D. Features of Conventional Heap

The major feature of the conventional array-based Heap explained above is its lightweight memory management, which results from the priorities being densely packed from the left; thus, it enables high-speed implementation. A further feature is that the maximum number of comparisons and movements of priorities in an insertion or deletion operation is of the order of log (Heap capacity); thus, the worst-case runtime is ensured.

Footnote to step b) of the deletion operation: If successive operations of deletion then insertion are required, then, instead of the rightmost priority, assign the priority given to the insertion operation to the priority that is to be inserted in this step; this is consequently faster than the sequential operation of deletion and insertion.

III. PRINCIPLES OF CONCURRENT HEAP

The conventional Heap algorithms mentioned in Section II are described as sequential procedures for software implementations**. The principles underlying the Concurrent Heap we developed, which assumes hardware implementations, are described in this section.

A. Data Structure of Concurrent Heap

The data structure for the Concurrent Heap is given in Fig. 3-1. As shown in the figure, it consists of layers identified by their distance from the uppermost priority, which is in layer 0. Each layer has a memory that holds priorities; layer N holds 2^N priorities. The memory address of the leftmost priority in a layer is 0, and the remaining addresses are assigned sequentially from the left. Therefore, the addresses of the children of a parent identified with address A in the layer above are 2A and 2A+1. Each layer also holds the number of priorities in its memory. To distinguish empty from full, the word length for the number of priorities in layer N is N+1 bits.
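The relationship between the array addresses of Section II and the per-layer addresses introduced here can be sketched as follows. The function names are illustrative assumptions; the arithmetic follows directly from the address rules stated above.

```python
def layer_and_offset(address):
    """Map a 1-based array-Heap address to (layer, address within the layer)."""
    layer = address.bit_length() - 1
    return layer, address - (1 << layer)


def children(layer, a):
    """Per-layer addresses of the two children of address a in the given layer."""
    return (layer + 1, 2 * a), (layer + 1, 2 * a + 1)


def layer_capacity(layer):
    """Layer N holds 2^N priorities."""
    return 1 << layer


def counter_bits(layer):
    """Bits needed to count 0..2^N priorities, so empty and full are distinct."""
    return layer_capacity(layer).bit_length()


print(layer_and_offset(12))  # (3, 4)
print(layer_and_offset(13))  # (3, 5)
print(children(1, 1))        # ((2, 2), (2, 3))
print(counter_bits(3))       # 4
```

For instance, array addresses 12 and 13 (the rightmost priority and the leftmost empty entry in Fig. 2-1) both fall in layer 3, at per-layer addresses 4 and 5.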

Fig. 3-1. Data structure of Concurrent Heap.

Each layer except for layer 0 also has a memory in which address A holds the result of comparing the two priorities that share the same parent, i.e., those identified with addresses 2A and 2A+1 in the priorities memory; layer N holds 2^(N-1) results. The representation of comparison results is given below.

0: The left side has precedence, or only the left-side priority exists.
1: The right side has precedence.
NA: The priorities are the same, or neither priority exists.

** There has been considerable research on parallelized Heaps for shared-memory multiprocessors, where the Heap structure is in the shared memory and each processor is assigned to an insertion or deletion operation (e.g., [5]) or the processors execute an insertion or deletion operation in parallel (e.g., [6]). However, in terms of hardware-based implementations, these studies are not particularly informative, because the essence of hardware-based parallelization is the simultaneous execution of distributed finite state machines, where a state is local to a machine.

B. Parallelized Insertion Operation

The insertion operation in the Concurrent Heap is parallelized based on the following steps. The figures that follow illustrate the parallelized version of the insertion operation outlined in Fig. 2-2.

Insertion step 1: Select the ancestor priorities on the path from the leftmost empty entry to the uppermost priority and simultaneously compare them with the priority to be inserted, then determine an insertion position.

Fig. 3-2. Example insertion step 1.

Insertion step 2: Move each selected priority at the insertion position and below to the selected child, and simultaneously move the priority to be inserted to the insertion position.

Fig. 3-3. Example insertion step 2.

Insertion step 3: For each moved priority, if its parent has the other child, compare the two priorities and update the related entry in the comparison-result memory; otherwise, clear the entry. This step is processed simultaneously among layers, because at most one priority is moved in a layer by an insertion or deletion operation.

Fig. 3-4. Example insertion step 3.
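The three insertion steps can be modeled in software as follows. This is a sequential sketch of what the hardware does in parallel, not the VHDL implementation: the names LayeredHeap and compare, the use of None for empty entries and for the NA result, and the sample values are all assumptions of this example. Where only the left-side priority exists, the sketch stores 0, following the representation of comparison results given above.

```python
def compare(left, right):
    """0 = left prior (or only left exists), 1 = right prior, None = NA."""
    if left is None:
        return None
    if right is None:
        return 0
    if left == right:
        return None
    return 0 if left < right else 1


class LayeredHeap:
    def __init__(self, num_layers):
        # Layer n holds 2^n priorities and, for n >= 1, 2^(n-1) comparison results.
        self.prio = [[None] * (1 << n) for n in range(num_layers)]
        self.count = [0] * num_layers
        self.cmp = [[None] * ((1 << n) >> 1) for n in range(num_layers)]

    def insert(self, x):
        # The leftmost empty entry is in the first layer that is not full.
        n = next((k for k, c in enumerate(self.count) if c < (1 << k)), None)
        if n is None:
            raise OverflowError("Heap is full")
        a = self.count[n]
        # Step 1: select one ancestor per layer and compare them all with x.
        path = [a >> (n - k) for k in range(n + 1)]
        old = [self.prio[k][path[k]] for k in range(n)]
        prior = [x < p for p in old]
        pos = prior.index(True) if True in prior else n
        # Step 2: shift the selected priorities from layer pos downward by one
        # layer along the path, and place x at the insertion position.
        for k in range(pos, n):
            self.prio[k + 1][path[k + 1]] = old[k]
        self.prio[pos][path[pos]] = x
        self.count[n] += 1
        # Step 3: refresh the comparison result of every entry that was written.
        for k in range(max(pos, 1), n + 1):
            pair = path[k] >> 1
            self.cmp[k][pair] = compare(self.prio[k][2 * pair],
                                        self.prio[k][2 * pair + 1])


h = LayeredHeap(3)
for value in [5, 3, 8, 1, 9, 2, 7]:
    h.insert(value)

print(h.prio)  # [[1], [3, 2], [5, 9, 8, 7]]
print(h.cmp)   # [[], [1], [0, 1]]
```

Because a parent is prior to each child, the comparison results along the path change from "not prior" to "prior" at most once from top to bottom, which is why a single insertion position can be read off from comparisons made at the same time.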

C. Parallelized Deletion Operation

The deletion operation in the Concurrent Heap is parallelized based on the steps that follow. The following figures illustrate the parallelized version of the deletion operation outlined in Fig. 2-3.

Deletion step 1: Remove the uppermost priority, then output it as the highest priority. If the number of priorities in the Heap is decreased to 0, then stop. Remove the rightmost priority, then assign it to the priority to be inserted, and clear the related entry in the comparison-result memory. Then, select a series of prior descendants (recursively select the prior child of a selected priority) from the uppermost priority (the details are described in Section IV) and simultaneously compare them with the priority to be inserted; then, determine an insertion position.

Fig. 3-5. Example deletion step 1.

Deletion step 2: Move each selected priority at the insertion position and above to its parent, and simultaneously move the priority to be inserted to the insertion position.

Fig. 3-6. Example deletion step 2.

Footnote to deletion step 1: If successive operations of deletion then insertion are required, the same modification as that for the conventional Heap can be applied.

Fig. 4-1. Block diagram of Concurrent Heap. [Figure: layers 0 to 4 (layer 4 is the bottom layer), labeled with the signals data_in, data_out, push, pop, full, empty, push_data, pop_data, pop_address, and push_pop_address_bus, with bus widths of 1 to 4 bits.]
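The deletion steps, followed by the same comparison-result update as insertion step 3, can be modeled in software as follows. This is a sequential sketch of the parallel behaviour under the same assumptions as before: the function names, the use of None for empty entries and for NA, and the starting state are illustrative, and an NA result between equal priorities is resolved to the left child as an arbitrary choice of this example.

```python
def compare(left, right):
    """0 = left prior (or only left exists), 1 = right prior, None = NA."""
    if left is None:
        return None
    if right is None:
        return 0
    if left == right:
        return None
    return 0 if left < right else 1


def delete(prio, count, cmp):
    if count[0] == 0:
        raise IndexError("delete from an empty Heap")
    # Step 1: output the uppermost priority and remove the rightmost one.
    top = prio[0][0]
    n = max(k for k, c in enumerate(count) if c > 0)
    a = count[n] - 1
    x, prio[n][a] = prio[n][a], None
    count[n] -= 1
    if n == 0:
        return top  # the Heap is now empty
    cmp[n][a >> 1] = compare(prio[n][a & ~1], prio[n][a | 1])
    # Select the series of prior descendants using only comparison results.
    path = [0]
    for k in range(1, len(prio)):
        left = 2 * path[-1]
        if left >= count[k]:
            break  # the selected priority has no children
        path.append(left + (cmp[k][path[-1]] or 0))
    selected = [prio[k][path[k]] for k in range(1, len(path))]
    # A priority moves up when x is not prior to it; all comparisons are
    # independent, and the results change from True to False at most once.
    pop = [not x < s for s in selected]
    j = sum(pop)  # layer of the insertion position
    # Step 2: move the selected priorities up and place x.
    for k in range(1, j + 1):
        prio[k - 1][path[k - 1]] = selected[k - 1]
    prio[j][path[j]] = x
    # Step 3: refresh the comparison results of the entries that were written.
    for k in range(1, j + 1):
        pair = path[k - 1]
        cmp[k][pair] = compare(prio[k][2 * pair], prio[k][2 * pair + 1])
    return top


# Assumed starting state: a valid three-layer Heap with its comparison results.
prio = [[1], [3, 2], [5, 9, 8, 7]]
count = [1, 2, 4]
cmp = [[], [1], [0, 1]]

out = [delete(prio, count, cmp) for _ in range(7)]
print(out)  # [1, 2, 3, 5, 7, 8, 9]
```

The path of prior descendants is found without comparing any priorities during the deletion itself, because the child-versus-child comparisons were stored in advance by step 3 of earlier operations.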

Deletion step 3: This is the same as insertion step 3.

D. Features of Concurrent Heap

One feature of the Concurrent Heap is that the priority memory of the conventional array-based Heap is segmented into the layers of a binary tree; the insertion- and deletion-operation algorithms are then parallelized without the need for essential modifications. Therefore, all the features of the conventional Heap also apply to the Concurrent Heap. To parallelize the deletion-operation algorithm, the comparison between children and the comparison between the priority to be inserted and the prior child are separated; the former is executed simultaneously among layers after the movement of priorities, and the latter is also processed simultaneously based on the comparison results.

IV. IMPLEMENTATION OF CONCURRENT HEAP

A. Block diagram

A block diagram of the Concurrent Heap is shown in Fig. 4-1, which gives an example of a Heap with five layers. The signals between layers are as follows.

data_in: The input of the priority to be inserted.
data_out: The output of the highest priority.
comparison_data_bus: The broadcast bus for the priority to be inserted.
push_pop_address_bus: The cuneiform (wedge-shaped) bus for the address that selects the priorities compared with the priority to be inserted.
pop_address: The output of the comparison-result memories.
full: This indicates that the priorities in the layer are full.
empty: This indicates that the priorities in the layer are empty.
push and pop: These indicate the source of the priority to be moved.
push_data and pop_data: These indicate the priority to be moved.

B. Identification of layer that holds leftmost empty entry or rightmost priority

The insertion and deletion operations of the Concurrent Heap replace priorities while retaining the constraint that the priorities are densely packed from the left. Therefore, if the priorities in a layer are full, all the layers above are also full, because priorities are stored sequentially from the upper to the lower layers. If the priorities in a layer are empty, all the layers below are also empty.

Therefore, the layer that holds the leftmost empty entry is the layer whose priorities are not full while the layer above it is full; i.e., the layer where (full output = false AND full input = true). However, layer 0 holds the leftmost empty entry if (full output = false) is asserted, and the bottom layer holds it if (priorities are not full AND full input = true) is asserted.

Similarly, the layer that holds the rightmost priority is the layer whose priorities are not empty while the layer below it is empty; i.e., the layer where (empty output = false AND empty input = true). However, layer 0 holds the rightmost priority if (priorities are full AND empty input = true) is asserted, and the bottom layer holds it if (empty output = false) is asserted.

C. Identification of layer that holds insertion position

The insertion and deletion operations of the Concurrent Heap replace priorities while retaining the constraint that a parent is prior to each child. Therefore, if the priority to be inserted is prior to the selected priority in a layer, it is also prior to the selected priorities in all the layers below. If the priority to be inserted is not prior to the selected priority in a layer, it is also not prior to those in any of the layers above.

Therefore, in the insertion operation, the identification of the layer that holds the insertion position is facilitated with each push output, which is asserted to indicate that the layer is a source of a priority to be moved if the priority to be inserted is prior to the selected priority in the layer. Thus, the layer that holds the insertion position is the layer whose push input from the layer above is not asserted and (whose push output to the layer below is asserted OR which is holding the leftmost empty entry). In layer 0, however, the push input from the layer above is assumed not to be asserted.

In the deletion operation, a pop output is asserted to indicate that the layer is a source of a priority to be moved if the priority to be inserted is not prior to the selected priority in the layer. Thus, the layer that holds the insertion position is the layer whose pop input from the layer below is not asserted and whose pop output to the layer above is asserted. In layer 0, however, the pop output to the layer above is assumed to be asserted, and in the bottom layer, the pop input from the layer below is assumed not to be asserted.

D. Selection of priorities that are compared with priority to be inserted

The parent-child priorities in the Concurrent Heap that are compared with the priority to be inserted are selected simultaneously with the cuneiform push_pop_address_bus, which addresses each priorities memory segmented into the layers. The relationships between each address input of the priorities memories, the inputs and outputs of the comparison-result memories, and the number of priorities are shown in Fig. 4-2.

In the insertion operation, layer N (N > 0) that holds the leftmost empty entry is never full; thus, the lower N bits of the number of priorities identify the leftmost empty entry in the priorities memory of layer N. Therefore, the priorities that are compared with the priority to be inserted are selected with the input from the cuneiform push_pop_address_bus, which is driven by the lower N bits of the number of priorities of layer N that holds the leftmost empty entry.

In the deletion operation, a series of prior descendants from the uppermost priority is selected. This is implemented with the comparison-result memories in the Concurrent Heap. More concretely, as shown in Fig. 4-2, the address of a comparison-result memory in layer N (N > 1) is driven by the concatenated outputs of the comparison-result memories in layers 1 to N-1, where the MSB of the address is driven from layer 1 and the remaining address bits are driven sequentially from the other layers. Since each bit of the push_pop_address_bus is driven from an output of a comparison-result memory, it recursively selects the prior child of a selected priority. The processing time for this selection is the total sum of the access times of the comparison-result memories. Thus, the comparison-result memories in the Concurrent Heap are implemented with asynchronous-read memories to enable sequential memory access within a single clock cycle for high-speed implementations.

Fig. 4-2. Circuit diagram for priorities memories, comparison-result memories, and number of priorities.

V. EVALUATION OF CONCURRENT HEAP

To investigate the feasibility of the Concurrent Heap we developed, it was implemented with a Xilinx Virtex-II Pro XC2VP70-6 FPGA (high-capacity and middle-speed grade). The specifications for the implementation were a 16- or 32-bit word length for priority and a Heap capacity between 15 and 8,191 priorities (4 to 13 layers); ISE (Ver. 8.2.03i) was used as the VHDL synthesis tool.

As an evaluation of the required FPGA resources, the relationships between Heap capacity and the number of required slices are shown in Fig. 5-1. As shown in the figure, the number of required slices is of the order of log (Heap capacity), and in the maximum case, which is a 32-bit word length and 8,191 priorities, 1,740 slices are required. This value is approximately 5% of the XC2VP70-6 slice capacity, which is 33,088 slices; thus, it indicates that a small FPGA could support the Concurrent Heap in practice.
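The figures quoted above can be cross-checked with a few lines of arithmetic. The sketch assumes that every layer is fully populated at capacity, so that a Heap with L layers holds 2^L - 1 priorities; the function name is illustrative.

```python
def heap_capacity(num_layers):
    """Number of priorities in a Heap whose layers 0..L-1 are all full."""
    return (1 << num_layers) - 1


for layers in (4, 13):
    print(layers, heap_capacity(layers))  # 4 15, then 13 8191

slices_used = 1_740        # 32-bit word length, 8,191 priorities
slices_available = 33_088  # XC2VP70-6 slice capacity quoted in the text
utilization = slices_used / slices_available
print(f"{utilization:.1%}")  # 5.3%
```

The capacities of 15 and 8,191 priorities therefore correspond to 4 and 13 layers, and the largest configuration uses about one twentieth of the device.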

Fig. 5-1. Relationships between Heap capacity and FPGA resources. [Plot: FPGA resources (number of slices) versus Heap capacity (number of priorities, axis ticks at 10, 100, 1,000, and 10,000) for 16-bit and 32-bit word lengths.]

The elapsed time for insertion and deletion operations was derived from the clock speed to obtain a benchmark for runtime. This is because, in implementations of per-flow queuing, a timing tag is assigned to an incoming frame and an insertion operation for the tag is executed, and when a frame is emitted, a deletion operation is executed; thus, if the total sum of the elapsed times for these operations does not exceed the transmission time for the minimum-length frame on a communications line, it can work at the wire rate with successive minimum-length frames.

The relationships between Heap capacity and elapsed time for insertion and deletion operations are shown in Fig. 5-2. Each elapsed time was derived from the maximum frequency that successfully met the clock constraints of place and route for VHDL synthesis (i.e., the warranted frequency from FPGA specifications). Where the Heap capacity was less than 512 priorities, the bottleneck in the elapsed time was the time to compare priorities; in the other cases, it was the time to select priorities with the comparison-result memories. The maximum warranted elapsed time was 67.1 ns, which is 0.1 ns shorter than the transmission time for the minimum-length frame of a 10GbE LAN-PHY.

Fig. 5-2. Relationships between Heap capacity and elapsed time of insertion and deletion operations.

The bit length that can identify the number of queues is sufficient for the priority word length of an NSE in priority queues and round-robin scheduled queues. The bit length that can identify the maximum interval of scheduled frames is required for the word length in shaper and timestamp-tagging queues. The details depend on the implementation, but it typically does not exceed 30 bits. For example, in a shaper for a 10GbE LAN-PHY where the frame information rate is any integral multiple of 500 kbps and the maximum frame length is 10,000 octets, the required word length for frame-emission timing, i.e., the word length for priority, would be 26 bits [7]. Therefore, the implementation results show that the Concurrent Heap-based NSE we developed could support over 8,000 per-flow queues for a 10GbE LAN-PHY that could work at the wire rate with successive minimum-length frames.

Footnote on the runtime benchmark: If a timing tag is assigned when a frame has reached the head of a queue, an insertion operation is executed when an empty queue receives a frame, a deletion operation is executed when a frame is emitted from a queue that is consequently empty, and successive operations of deletion then insertion are executed when a frame is emitted from a queue that is consequently not empty. In the Concurrent Heap, the elapsed times for a deletion operation and for successive operations of deletion then insertion are the same; thus, the total sum of the elapsed times for insertion and deletion is still eligible as a benchmark of runtime.

VI. CONCLUSIONS

The Concurrent Heap was developed to enable massive and high-speed per-flow queuing as a method of implementing an NSE that can rapidly identify the highest priority from numerous other priorities. Since it parallelizes the conventional Heap by segmenting the priority memory into the layers of a binary tree and does not essentially modify the conventional Heap algorithms, it can work at high speed owing to its lightweight memory management, and it also ensures the worst-case runtime. The results of the FPGA implementation indicated that the required resources were very small, so it could be implemented, in practice, in a small FPGA, and the warranted runtime indicated that over 8,000 per-flow queues for a 10GbE LAN-PHY could work at the wire rate with successive minimum-length frames.

REFERENCES

[1] J. W. J. Williams, "Heapsort," CACM, Vol. 7, No. 6, June, 1964.
[2] D. E. Knuth, "The Art of Computer Programming, 2nd Ed., Vol. 3, Sorting and Searching," Addison-Wesley, 1998.
[3] T. Ha-duong and S. S. Moreira, "The Heap-sort Based ATM Cell Spacer," IEEE ICATM '99, June, 1999.
[4] R. Bhagwan and B. Lin, "Fast and Scalable Priority Queue Architecture for High-Speed Network Switches," IEEE INFOCOM, 2000.
[5] V. N. Rao and V. Kumar, "Concurrent Access of Priority Queues," IEEE Trans. on Computers, Vol. 37, No. 12, December, 1988.
[6] W. Zhang and R. E. Korf, "Parallel Heap Operations on EREW PRAM: Summary of Results," IEEE IPPS 1992, March, 1992.
[7] M. Suzuki, S. Yoshihara, and K. Minami, "Shaping Algorithm Based on Bresenham's Quantization -Enabling high-speed and scalable communications quality control-," IEICE Technical Report, NS2007-202, March, 2008.


- Rethinking The Service Model Scaling Ethernet To A Million Nodes2004 PDFDocument6 pagesRethinking The Service Model Scaling Ethernet To A Million Nodes2004 PDFChuang JamesNo ratings yet

- Core Techniques On Designing Network For Data Centers: A Comparative AnalysisDocument4 pagesCore Techniques On Designing Network For Data Centers: A Comparative AnalysisBeliz CapitangoNo ratings yet

- Computer Generation of Streaming Sorting NetworksDocument9 pagesComputer Generation of Streaming Sorting Networksdang2327No ratings yet

- Coordinated Network Scheduling: A Framework For End-to-End ServicesDocument11 pagesCoordinated Network Scheduling: A Framework For End-to-End ServiceseugeneNo ratings yet

- Basis For Comparison Synchronous Transmission Asynchronous TransmissionDocument31 pagesBasis For Comparison Synchronous Transmission Asynchronous TransmissionDev SharmaNo ratings yet

- Cost-Effective Resource Allocation of Overlay Routing Relay NodesDocument8 pagesCost-Effective Resource Allocation of Overlay Routing Relay NodesJAYAPRAKASHNo ratings yet

- High-Speed and Energy-Efficient Carry Skip Adder Operating Under A Wide Range of Supply Voltage LevelsDocument8 pagesHigh-Speed and Energy-Efficient Carry Skip Adder Operating Under A Wide Range of Supply Voltage LevelsTechnos_IncNo ratings yet

- Modelling and Simulation of Load Balancing in Computer NetworkDocument7 pagesModelling and Simulation of Load Balancing in Computer NetworkAJER JOURNALNo ratings yet

- Cisco Spine LeafDocument5 pagesCisco Spine LeafMasih FavaNo ratings yet

- 10 1 1 70Document14 pages10 1 1 70Sanjeev Naik RNo ratings yet

- Energy-And Performance-Aware Mapping For Regular Noc ArchitecturesDocument12 pagesEnergy-And Performance-Aware Mapping For Regular Noc ArchitecturespnrgoudNo ratings yet

- Joint Scheduling and Resource Allocation in Uplink OFDM Systems For Broadband Wireless Access NetworksDocument9 pagesJoint Scheduling and Resource Allocation in Uplink OFDM Systems For Broadband Wireless Access NetworksAnnalakshmi Gajendran100% (1)

- Run PP07 02Document11 pagesRun PP07 02MangoNo ratings yet

- Design of A Reconfigurable Switch Architecture For Next Generation Communication NetworksDocument6 pagesDesign of A Reconfigurable Switch Architecture For Next Generation Communication Networkshema_iitbbsNo ratings yet

- A Novel Distributed Call Admission Control Solution Based On Machine Learning Approach - PRESENTATIONDocument11 pagesA Novel Distributed Call Admission Control Solution Based On Machine Learning Approach - PRESENTATIONAli Hasan KhanNo ratings yet

- How To Choose A Motion BusDocument7 pagesHow To Choose A Motion BussybaritzNo ratings yet

- The Research of Load Balancing Technology in Server Colony: - Gao Yan, Zhang Zhibin, and Du WeifengDocument9 pagesThe Research of Load Balancing Technology in Server Colony: - Gao Yan, Zhang Zhibin, and Du WeifengNguyen Ba Thanh TungNo ratings yet

- Applying Time-Division Multiplexing in Star-Based Optical NetworksDocument4 pagesApplying Time-Division Multiplexing in Star-Based Optical NetworksJuliana CantilloNo ratings yet

- Khoaluan HoangTuanHungDocument6 pagesKhoaluan HoangTuanHungHoàng Tuấn HùngNo ratings yet

- On The Interaction of Multiple Routing AlgorithmsDocument12 pagesOn The Interaction of Multiple Routing AlgorithmsLee HeaverNo ratings yet

- What Is A Network Operating SystemDocument8 pagesWhat Is A Network Operating SystemJay-r MatibagNo ratings yet

- Jecet: Journal of Electronics and Communication Engineering & Technology (JECET)Document6 pagesJecet: Journal of Electronics and Communication Engineering & Technology (JECET)IAEME PublicationNo ratings yet

- Abstract: Due To Significant Advances in Wireless Modulation Technologies, Some MAC Standards Such As 802.11aDocument6 pagesAbstract: Due To Significant Advances in Wireless Modulation Technologies, Some MAC Standards Such As 802.11aVidvek InfoTechNo ratings yet

- Core Selection TechniquesDocument5 pagesCore Selection TechniquesNabil Ben MazouzNo ratings yet

- 603 PaperDocument6 pages603 PaperMaarij RaheemNo ratings yet

- Very High-Level Synthesis of Datapath and Control Structures For Reconfigurable Logic DevicesDocument5 pagesVery High-Level Synthesis of Datapath and Control Structures For Reconfigurable Logic Devices8148593856No ratings yet

- Improving Delivery Ratio For Application - Layer Multicast Using IrpDocument5 pagesImproving Delivery Ratio For Application - Layer Multicast Using Irpsurendiran123No ratings yet

- Cisco Data Center Spine-And-Leaf Architecture - Design Overview White Paper - CiscoDocument32 pagesCisco Data Center Spine-And-Leaf Architecture - Design Overview White Paper - CiscoSalman AlfarisiNo ratings yet

- 7Document101 pages7Navindra JaggernauthNo ratings yet

- Question Paper: Hygiene, Health and SafetyDocument2 pagesQuestion Paper: Hygiene, Health and Safetywf4sr4rNo ratings yet

- Prevalence of Peptic Ulcer in Patients Attending Kampala International University Teaching Hospital in Ishaka Bushenyi Municipality, UgandaDocument10 pagesPrevalence of Peptic Ulcer in Patients Attending Kampala International University Teaching Hospital in Ishaka Bushenyi Municipality, UgandaKIU PUBLICATION AND EXTENSIONNo ratings yet

- Syed Hamid Kazmi - CVDocument2 pagesSyed Hamid Kazmi - CVHamid KazmiNo ratings yet

- Go Ask Alice EssayDocument6 pagesGo Ask Alice Essayafhbexrci100% (2)

- EASY DMS ConfigurationDocument6 pagesEASY DMS ConfigurationRahul KumarNo ratings yet

- Understanding Consumer and Business Buyer BehaviorDocument47 pagesUnderstanding Consumer and Business Buyer BehaviorJia LeNo ratings yet

- Donation Drive List of Donations and BlocksDocument3 pagesDonation Drive List of Donations and BlocksElijah PunzalanNo ratings yet

- Economies and Diseconomies of ScaleDocument7 pagesEconomies and Diseconomies of Scale2154 taibakhatunNo ratings yet

- BPL-DF 2617aedrDocument3 pagesBPL-DF 2617aedrBiomedical Incharge SRM TrichyNo ratings yet

- Design of Flyback Transformers and Filter Inductor by Lioyd H.dixon, Jr. Slup076Document11 pagesDesign of Flyback Transformers and Filter Inductor by Lioyd H.dixon, Jr. Slup076Burlacu AndreiNo ratings yet

- LT1256X1 - Revg - FB1300, FB1400 Series - EnglishDocument58 pagesLT1256X1 - Revg - FB1300, FB1400 Series - EnglishRahma NaharinNo ratings yet

- STM Series Solar ControllerDocument2 pagesSTM Series Solar ControllerFaris KedirNo ratings yet

- Sacmi Vol 2 Inglese - II EdizioneDocument416 pagesSacmi Vol 2 Inglese - II Edizionecuibaprau100% (21)

- Assessment 21GES1475Document4 pagesAssessment 21GES1475kavindupunsara02No ratings yet

- SPC Abc Security Agrmnt PDFDocument6 pagesSPC Abc Security Agrmnt PDFChristian Comunity100% (3)

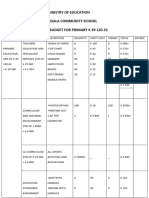

- Ministry of Education Musala SCHDocument5 pagesMinistry of Education Musala SCHlaonimosesNo ratings yet

- Home Guaranty Corp. v. Manlapaz - PunzalanDocument3 pagesHome Guaranty Corp. v. Manlapaz - PunzalanPrincess Aliyah Punzalan100% (1)

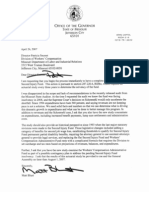

- Historical DocumentsDocument82 pagesHistorical Documentsmanavjha29No ratings yet

- Engagement Letter TrustDocument4 pagesEngagement Letter Trustxetay24207No ratings yet

- Outage Analysis of Wireless CommunicationDocument28 pagesOutage Analysis of Wireless CommunicationTarunav SahaNo ratings yet

- Department of Labor: 2nd Injury FundDocument140 pagesDepartment of Labor: 2nd Injury FundUSA_DepartmentOfLabor100% (1)

- Writing Task The Strategy of Regional Economic DevelopementDocument4 pagesWriting Task The Strategy of Regional Economic DevelopementyosiNo ratings yet

- YeetDocument8 pagesYeetBeLoopersNo ratings yet

- Chapter 3 Searching and PlanningDocument104 pagesChapter 3 Searching and PlanningTemesgenNo ratings yet

- Kicks: This Brochure Reflects The Product Information For The 2020 Kicks. 2021 Kicks Brochure Coming SoonDocument8 pagesKicks: This Brochure Reflects The Product Information For The 2020 Kicks. 2021 Kicks Brochure Coming SoonYudyChenNo ratings yet

- Review of Accounting Process 1Document2 pagesReview of Accounting Process 1Stacy SmithNo ratings yet