© 2002 Giga Information Group

September 13, 2002

Market Overview 2002: Information Quality

Lou Agosta

Contributing Analysts: Keith Gile, Erin Kinikin, Philip Russom

Giga Position

Giga last reported on the data quality market in August 2000. Since then, the total market has grown from

$250 million to an estimated $580 million, including both software and services. This represents average

annual growth of 66 percent (a compound annual growth rate, or CAGR, of roughly 52 percent), which is no small accomplishment in the past two years, albeit for

a market of modest size. That growth consists of two parts — software and services — which have grown at

rates of 40 percent and nearly 55 percent (respectively) during that period. Our prediction is that the data and

information quality market will slow, with the respective growth rates reversed to 25 percent for software and

20 percent for services during the next year. The economy is too uncertain to predict beyond that at this time.

At that rate, the market will hit $1 billion in 2005.
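
The growth arithmetic in this section can be checked with a short sketch. It uses the 2000 and 2002 market sizes stated above. For the projection it assumes a single blended growth rate of 20 percent, which is an illustrative assumption only: the report gives separate software and services rates but not the split between them. The function names are hypothetical.

```python
def cagr(start: float, end: float, years: float) -> float:
    """Compound annual growth rate between a start and an end value."""
    return (end / start) ** (1.0 / years) - 1.0


def project(start: float, rate: float, years: int) -> list:
    """Market size for each year, compounding at a constant rate."""
    return [start * (1.0 + rate) ** n for n in range(years + 1)]


# Market sizes in $ millions, as stated in the report.
market_2000, market_2002 = 250.0, 580.0

total_growth = market_2002 / market_2000 - 1.0     # growth over the whole period
simple_average = total_growth / 2                  # arithmetic average per year
compound_rate = cagr(market_2000, market_2002, 2)  # compounded rate per year

# Assumption: a blended 20 percent rate, the lower of the two forecast rates.
forecast = project(market_2002, 0.20, 3)           # 2002 through 2005

print(f"simple average: {simple_average:.0%}, compound: {compound_rate:.1%}")
for year, size in zip(range(2002, 2006), forecast):
    print(year, f"${size:,.0f}M")
```

Under these assumptions, the simple average comes to 66 percent per year, the compounded rate to a little over 52 percent, and the projected market first exceeds $1 billion in 2005.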

The reason for this reversal of emphasis of software and services is a growing appreciation from IT managers

that the information quality process requires software to automate, leverage productivity and manage what is

otherwise a labor-intensive task. If data quality software vendors are able to help enterprises move beyond

defect inspection to root cause analysis and intelligent information integration, then the huge potential of this

market in improving the quality of enterprise information may indeed be realized much sooner than 2005.

Proof/Notes

Information quality initiatives and the market for supporting technology are being driven by:

1. Customer relationship management (CRM) implementations

2. Public sector initiatives

3. Mergers and acquisitions

4. Data and information aggregation and validation

5. Service bureaus/application service providers (ASPs)

6. Data profiling

7. Data warehousing and extraction, transformation and loading (ETL) operations

8. Run-to-run balancing and control

9. Profitable postal niches

10. Information quality future: reducing uncertainty via quality at no extra charge

End-user enterprises will not be able to “plug into” data and information quality as easily as buying a

software connector or adapter for an ETL tool or a CRM application. Therefore, enterprises should plan on

completing design work to understand and reconcile the diverse schemas that represent customers, products

and other key data dimensions in their firms. A design for a consistent and unified view of customers,

products and related entities is on the critical path of successful intelligent information integration (see table

at end of document).

Planning Assumption ♦ Market Overview 2002: Information Quality

RPA-092002-00008

All rights reserved. Reproduction or redistribution in any form without the prior permission of Giga Information Group is expressly prohibited. This information

is provided on an “as is” basis and without express or implied warranties. Although this information is believed to be accurate at the time of publication, Giga

Information Group cannot and does not warrant the accuracy, completeness or suitability of this information or that the information is correct.

CRM implementations: Information quality issues have turned out to be the weak underbelly of CRM

implementations. The need to address the data quality risk to CRM systems will continue to stimulate the

acquisition of information quality software and solutions by end-user enterprises. Without information

quality, the risk is that the client will implement CRM but miss the customer. For example, knowing the

lifetime value of a customer still requires aggregating a lifetime of customer transactions across multiple

systems and datasets, some of which are not available. Such intelligent information integration is something

no mere user interface, no matter how elegant, can provide. Best-of-breed metadata administration and

mechanisms to share designs, as well as the data standardization tools from the data quality vendors, will be

essential in helping technology catch up with the much-hyped 360-degree view of the customer. Given the

many valid and legitimate definitions of customer, this entity often ends up being a point on the horizon

toward which service, delivery and marketing processes converge. There is no easy substitute for a piece of

design work for data integration that optimizes transactional systems by closing the loop back from decision-

support systems, preserving the business context of the organization in question.

CRM has brought to the fore the task of identifying and deduplicating individual customers across multiple

datasets, touchpoints and user interfaces. Much of the work in the data quality arena goes into accurately

identifying customers as individuals, then building the business rules that warrant the outcome of a match or

not. This requires data standardization, diagnosis of data defects, duplicate detection, error correction,

resolution recommendations, approvals and notifications and ongoing monitoring. As Giga research has

pointed out, enterprises should establish an information quality plan as part of any new CRM initiative and

press CRM vendors to articulate a consistent, end-to-end strategy to identify and address customer data

quality issues as an integral part of the customer process (see References).
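
The standardize-then-match sequence described above can be sketched in a few lines. This is a minimal illustration, not any vendor's method: the abbreviation table, the field names, the 0.85 threshold and the rule "same postal code, similar name and address" are all hypothetical business rules chosen for the example, and the similarity score comes from Python's standard `difflib` module.

```python
import re
from difflib import SequenceMatcher

# Hypothetical standardization table; a real one would be far larger.
ABBREVIATIONS = {"st": "street", "ave": "avenue", "rd": "road"}


def standardize(text: str) -> str:
    """Lowercase, strip punctuation and expand known abbreviations."""
    tokens = re.sub(r"[^a-z0-9 ]", " ", text.lower()).split()
    return " ".join(ABBREVIATIONS.get(token, token) for token in tokens)


def similarity(a: str, b: str) -> float:
    """Similarity of two standardized strings, from 0.0 to 1.0."""
    return SequenceMatcher(None, standardize(a), standardize(b)).ratio()


def is_match(a: dict, b: dict, threshold: float = 0.85) -> bool:
    """Hypothetical business rule: same postal code, similar name and address."""
    if standardize(a["postcode"]) != standardize(b["postcode"]):
        return False
    return (similarity(a["name"], b["name"]) >= threshold
            and similarity(a["address"], b["address"]) >= threshold)


def find_duplicates(records: list) -> list:
    """Compare every pair of records and return the ids of likely duplicates."""
    pairs = []
    for i in range(len(records)):
        for j in range(i + 1, len(records)):
            if is_match(records[i], records[j]):
                pairs.append((records[i]["id"], records[j]["id"]))
    return pairs


customers = [
    {"id": 1, "name": "John A. Smith", "address": "12 Main St.", "postcode": "60601"},
    {"id": 2, "name": "john a smith", "address": "12 Main Street", "postcode": "60601"},
    {"id": 3, "name": "Joan Smythe", "address": "98 Oak Ave", "postcode": "60601"},
]
print(find_duplicates(customers))
```

The pairwise comparison is quadratic in the number of records, so it only suits small samples. The sketch also stops at detection; the approval, notification and monitoring steps listed above are left out.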

All of the best-of-breed data quality vendors in the table at the end of the document — Ascential, DataFlux,

Firstlogic, Innovative Systems, Group 1 Software and Trillium Software — provide standardization,

identification, matching and deduplication software that can be applied to a wide variety of data types, not

just customer data. However, with the amount of activity surrounding CRM systems, vendors have been

attracted to the market and positioned software solutions to address the real needs (see Planning Assumption,

Market Overview 2002: Customer Relationship Management, Erin Kinikin) — it’s where the data is. For

example, several vendors have adapters or connectors that specifically target Siebel:

• Ascential INTEGRITY for Siebel eBusiness Applications

• Group 1 Data Quality Connector for Siebel

• Innovative i/Lytics for Siebel

• Firstlogic Siebel Connector

• Trillium Connector for Siebel (both versions 6 and 7)

Other data quality providers such as DataFlux and Sagent (GeoStan) do not yet have a separately predefined

adapter for Siebel but support a software development kit (SDK) that can be used to jump-start the custom

software development effort. Without exception, the challenge is to move beyond name and address

standardization and attain intelligent information integration, something no single-system plug-in can

provide.

The installation of a new CRM system often acts as a lightning rod for the constellation of data quality issues

as the system is loaded with data from other back-end systems in the enterprise. Often, the piece of

design work needed to identify the customer of record is the responsibility of the IT department at the end-user

firm. Assuming that that department has been diligent, it is useful to have technology with which to

implement the design rather than reinvent the wheel. This is where a prepackaged adapter or connector can

provide a boost to developer productivity, provided that the inputs are understood and qualified.

Public sector initiatives: Giga has recently heard from several information quality software vendors with

special capabilities in immigration and law enforcement. For example, in 1986 Australia was trying to

determine how many illegal immigrants were in the country. Since Australia is an island continent, the

government figured it would match the names of people who arrived for vacations, etc. with those who left,

and the difference would be the number of illegal immigrants. However, out of a population of about 12

million, the number that was derived (400,000) was too high. Tourists with no knowledge of English or

the Latin alphabet (for example, some tourists from Asia) did not necessarily know how to write their names in it and

might transliterate their names differently after they had been in the country for several weeks or months.

Thus, out of the crucible of an international melting pot, Search Software (renamed Search Software

America) was created. The actual number of illegal immigrants was 100,000.

Innovative Systems has a timely offering to the burgeoning security and law enforcement markets: i/Lytics

Secure. This offering enables public and private organizations to compare client records against lists of

criminal suspects and fugitives published by the US Treasury Department’s Office of Foreign Assets Control

(OFAC compliance) and other leading US and international government agencies, such as the FBI’s most

wanted fugitives list, the US Marshals’ most wanted fugitives, the US Drug Enforcement Administration’s

fugitives list and Interpol’s wanted notices.

Trillium Software reportedly offered to its government and financial customers a free service engagement to

develop a process to identify and screen for OFAC compliance. Some took advantage of the offer. Others

reportedly used the tunable matching technology already available within the Trillium Software System to

identify and link suspect names and addresses.

Developers and policy makers are advised to be skeptical about the appropriateness of high-risk CRM

technology applications designed to manage consumer or business PC sales (see IdeaByte, CRM Takes on

Terrorism, but Only Offers a Partial Solution, John Ragsdale). However, sophisticated matching technology,

especially from the data quality vendors, properly tuned and backed up with the right databases and

management policies, is likely to be an important part of the solution.

The market for addressing information quality defects is being extended to improving aging database

infrastructure and outmoded data management practices in the public sector. Thus, all types of databases in

the public sector (for example, voter registration, driver’s licenses, hazardous waste haulers, pilot’s licenses,

immigration, education and health records) will be enhanced and interconnected where appropriate policies

and protections warrant it. From a public policy point of view, something such as national identity cards is

far less significant than operational excellence and information quality work in the trenches, for instance,

getting driver’s license and voter registration data stores accurately aligned and synchronized.

Mergers and acquisitions: Mergers and acquisitions continue apace, and as soon as enterprises formalize a

merger, the issue of compatibility between IT systems arises. In defining the new technology infrastructure,

identifying, assessing and managing the overlap between customers, products and other essential data

dimensions become a priority. The consolidation of product and customer dimensions enables cross-selling

and upselling in CRM, presenting a single product catalog to partners and clients over the Web, as well as

substituting information for inventory in demand planning and supply chain applications. A profile of the

data and of the respective workloads surfaces a whole hierarchy of information quality issues. No reason

exists why systems from completely different enterprises should be consistent or aligned or should satisfy a

unified design. As a result of the merger, the two or more firms that are now, as a matter of definition, part of

a single business enterprise risk an information quality meltdown unless the data is inventoried, evaluated and

managed proactively as an enterprise asset. For example, client references from Firstlogic, Group 1, Trillium

and Vality have reported the use of searching and matching technologies to produce customer-focused

consolidated systems where previously heterogeneous, multi-divisional line of business or product-focused

organizations had existed.

Data and information aggregation and validation: The technical and business processes for data (and

information) integration are so similar to those for data validation that it is hard to justify excluding such

matters from the information quality market. Credit bureaus and information aggregators have found that

customer data integration and information quality is a growth industry. After having built up databases of

individuals and households in the 100 million record range as a result of consumer credit and credit reporting,

firms such as Equifax, Experian and TransUnion now find they are in the business of verifying, identifying

and integrating customer data. Database marketing and demographic firms such as Acxiom, CACI, Claritas,

Harte-Hanks and Polk see similar opportunities. For example, Acxiom reportedly has more information on

Americans than the Internal Revenue Service (IRS) does. Acxiom acquired DataQuick, one of the largest

compilers and resellers of real estate information in the country, and it can identify home ownership. By

using Acxiom’s AbiliTec customer data integration technology, the end users of customer loyalty (and

related) applications can link contact information captured in real time across multiple customer interfaces

with legacy customer data stores. Experian claims that its Truevue CDI solution adds a significant lift to the

matching and deduplication results by checking a reference database with some 205 million entries for US

residents. This market segment is highly competitive and alternative vendors are discussed in previous

research (see IdeaByte, Information Infomediaries for Demographics, Lou Agosta). In most instances,

enterprises must use data quality functionality narrowly defined to profile, standardize and link their customer

data before matching to any of the above cited external databases.

Service bureaus/ASPs: The ASP market has not fared well since August 2000 when Giga reported that

several data quality vendors were extending their solutions in the direction of the Web, with an emphasis on

eCRM ASPs. At the time, Firstlogic, Group 1, Innovative Systems, Trillium and Vality (whose INTEGRITY

is now part of Ascential’s enterprise data integration solution as well as a stand-alone) were all working on

separate versions of an ASP offering to allow clients to process individual records on a transaction-by-

transaction basis (which yields real-time data quality). The revenue model is a vendor’s dream and can be

cost-effective for clients with high-value, low-volume transactions. The problem has been forecasting

revenue using a “by the transaction” approach. The service bureau has a similar tradition, without the

emphasis on specific application functionality, and that is the direction this submarket has taken. For

example, Firstlogic has exposed its data assessment functions via a service offering with reporting over the

Web via IQ Insight; in addition, Group 1 has Hot Data, Sagent has Centrus Record Processing and Acxiom

has Acxiom Data Network (see IdeaByte, Uses and Limitations of Real-Time Data Quality Functions, Philip

Russom).

Data profiling: Data profiling products and services include Ascential MetaRecon (available either as a

stand-alone or as part of a data integration suite), Avellino Discovery (also available from Innovative

Systems), Evoke Axio, Firstlogic IQ Insight, and Trillium Software’s Data Quality Analytics. Data quality

should be checked every time the data is touched, at the front end, middle and back end of the system. This

applies first to the operational transactional systems. Data entry screens, user interfaces or points of data

capture should include validation and editing logic. Of course, this implies the rules of validation and the

relations between data elements are known and can be coded. As indicated, this guideline extends to any and

all systems, operational or decision support (data warehouse). The days are gone when Evoke was the only

technology in the data profiling market and its prices reflected it. The competition between Ascential

MetaRecon, Avellino Discovery (also available from Innovative Systems) and Evoke Axio will cause prices

to fall further, which is good news for buyers.
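
What a data profiling pass reports can be shown with a minimal sketch. It is not modeled on any of the products named above; the function names, the pattern notation (9 for a digit, A for a letter) and the sample phone numbers are illustrative assumptions.

```python
from collections import Counter


def pattern(value: str) -> str:
    """Reduce a value to its shape: digits become 9, letters become A."""
    shape = []
    for ch in value:
        if ch.isdigit():
            shape.append("9")
        elif ch.isalpha():
            shape.append("A")
        else:
            shape.append(ch)  # keep punctuation and spaces as they are
    return "".join(shape)


def profile_column(values: list) -> dict:
    """Summarize one column: row count, nulls, distinct values and value shapes."""
    non_null = [v for v in values if v not in (None, "")]
    return {
        "count": len(values),
        "nulls": len(values) - len(non_null),
        "distinct": len(set(non_null)),
        "patterns": Counter(pattern(v) for v in non_null),
    }


# Hypothetical sample column with mixed formats, blanks and a repeated value.
phones = ["312-555-0101", "312-555-0102", "3125550103", None, "", "312-555-0101"]
report = profile_column(phones)
print(report)
```

A profile like this is where unknown validation rules tend to surface: a minority pattern, an unexpected null rate or a suspiciously low distinct count each point to a rule that can then be coded at the point of data capture.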

Data warehousing and ETL operations: The architectural point at which upstream transactional systems are

mapped to the data warehouse is a natural choke point through which all data must flow in the

process of extraction and transformation on its way from the operational to the decision-support system. It is

a natural and convenient place to perform a number of essential functions, such as checking and correcting

information quality and capturing metadata statistics about information quality. The process is often

automated by means of an ETL tool, so it makes sense to look for connectors from the ETL to the data

quality technologies. The available options are:

• Firstlogic Information Quality Suite can be invoked by ETL technology from Ascential (DataStage)

and Informatica (PowerCenter).

• Ascential INTEGRITY can be invoked by Ascential (DataStage) and, at least prior to its acquisition

by Ascential, by Informatica (PowerCenter). Of course, Ascential is promoting the advantages of

getting both data quality and ETL technology from the same provider — in this case, Ascential.

• Trillium Software System can be invoked by Ascential (DataStage), Informatica (PowerCenter),

Microsoft DTS, Ab-Initio and, at least prior to its acquisition by Business Objects, by Acta.

• DataFlux Blue Fusion is integrated with SAS Warehouse Administrator.

• In November 1999, Oracle purchased Carleton, whose Pure Integrate product contains data standardization

technology. However, according to Oracle, Pure Integrate is being desupported as a stand-alone tool.

Oracle Warehouse Builder (OWB) 9i includes the Name/Address operator of Pure Integrate and full

support of the product functionality should be available by 2003. Meanwhile, Oracle reports that

technology from the Trillium Software System is being integrated into OWB.

• Group 1 DataSight is able to invoke Informatica and iWay, thus turning the tables on the ETL tool

and subordinating the ETL process to the data quality one.

Note that, for once, the promise of a “seamless interface” is not mere marketing hype. In demonstrations

provided to Giga, the integration between Ascential and Informatica and the respective data quality

technologies was such that the data quality features appear as subfunctions of the ETL process and not as

part of a separate window or tool. According to DataFlux, a similar consideration applies to it and SAS

Warehouse Administrator.
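
The idea of checking quality at the ETL choke point and capturing metadata statistics along the way can be sketched as a single transformation step. This is a generic illustration and does not represent the interface of any ETL or data quality product named above; the rule names, field names and sample rows are hypothetical.

```python
from collections import Counter


def quality_gate(rows: list, rules: dict):
    """Split rows into clean and rejected, counting failures per rule."""
    stats = Counter()
    clean, rejected = [], []
    for row in rows:
        stats["rows_in"] += 1
        failed = [name for name, check in rules.items() if not check(row)]
        for name in failed:
            stats[f"failed:{name}"] += 1
        (rejected if failed else clean).append(row)
    stats["rows_clean"] = len(clean)
    stats["rows_rejected"] = len(rejected)
    return clean, rejected, stats


# Hypothetical rules; real rules come from the design work on the source schemas.
RULES = {
    "customer_id_present": lambda row: bool(row.get("customer_id")),
    "amount_non_negative": lambda row: (
        isinstance(row.get("amount"), (int, float)) and row["amount"] >= 0
    ),
}

rows = [
    {"customer_id": "C1", "amount": 19.99},
    {"customer_id": "", "amount": 5.00},
    {"customer_id": "C3", "amount": -2.50},
]
clean, rejected, stats = quality_gate(rows, RULES)
print(dict(stats))
```

Only the clean rows would continue to the warehouse load. The counts in `stats` are the kind of quality metadata that can be stored with each load and tracked over time.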

Run-to-run balancing and control: Data center operations are highly complex in diverse ways. The output of

one process is the input to another. Many-to-many relationships between jobs and processes occur in intricate

patterns. Mounting the wrong input or mounting a duplicate input (whether tape or other media) can wreak

havoc with downstream jobs. Even if the proper input is used, the record counts can be inaccurate. Software

to perform run-to-run balancing and control based on the particulars of the application has been available on

the mainframe for many years and has now migrated to alternative platforms, including Unix in its many

forms and NT/W2K. Vendors such as Unitech Systems have been doing business since the mid-1980s and

have kept pace with the technology innovations in platforms and processes. This is definitely a problem space

of the utmost importance; it is deeply embedded in the operational matrix and addresses risks to data quality

from the perspective of operations. Vendors that compete with Unitech and offer competing run-to-run

balancing solutions include Accurate Software, Smart Stream and Checkfree.
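
The two risks named above, duplicate inputs and inaccurate record counts, are what control totals are meant to catch. The sketch below is a simplified illustration and not the method of any vendor mentioned; the function names, the record layout and the use of a SHA-256 fingerprint to spot a repeated input are assumptions made for the example.

```python
import hashlib
from decimal import Decimal


def control_totals(records: list) -> dict:
    """Record count and amount total that the producing job publishes."""
    amounts = (Decimal(r["amount"]) for r in records)
    return {"count": len(records), "amount": sum(amounts, Decimal("0"))}


def fingerprint(records: list) -> str:
    """Order-sensitive digest of a batch, used to spot a re-mounted input."""
    digest = hashlib.sha256()
    for record in records:
        digest.update(repr(sorted(record.items())).encode("utf-8"))
    return digest.hexdigest()


def check_run(upstream_totals: dict, batch: list, seen: set) -> list:
    """Return a list of balancing problems; an empty list means the run balances."""
    problems = []
    batch_id = fingerprint(batch)
    if batch_id in seen:
        problems.append("duplicate input")
    seen.add(batch_id)
    totals = control_totals(batch)
    for key, expected in upstream_totals.items():
        if totals[key] != expected:
            problems.append(
                f"{key} out of balance: expected {expected}, got {totals[key]}"
            )
    return problems


batch = [{"id": 1, "amount": "10.50"}, {"id": 2, "amount": "4.25"}]
published = control_totals(batch)   # totals reported by the upstream job
seen_batches = set()

first_run = check_run(published, batch, seen_batches)
repeat_run = check_run(published, batch, seen_batches)
short_run = check_run(published, batch[:1], seen_batches)
print(first_run, repeat_run, short_run, sep="\n")
```

Amounts are held as `Decimal` so that totals compare exactly. In practice the downstream job would be held until the problem list is empty.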

Profitable postal niches: For firms requiring direct mail, the deliverable is a standard name and address that

can be printed on an envelope. Firstlogic offers PostalSoft, Group 1 offers a name and address information

system (CODE-1 Plus) and Ascential (Vality) offers INTEGRITY CASS Appended Information Modules

(AIM). CASS is the coding accuracy support system, certified by the US Postal Service to ensure accuracy,

completeness, consistency and validity of US address information in the corporate database. According to

DataFlux, with the dfPower Address Verification module, addresses can be verified directly within the

database. This is a competitive market with significant barriers to entry due to the complex rules for postal

name and address formatting and the diversity of foreign and local names and noise words, such as “Dr.,”

“For Benefit of” or “In Trust for.” Companies such as Quick Address (QAS.com), AND Group and Human

Inference offer solutions for international names and addresses in the European Union (EU). Group 1 claims

it understands the postal formats of some 220 countries, works with the Universal Postal Union (UPU) to

keep the address lists current and lines up with catalog merchants in using address-based identifier data as

the way to isolate households. Group 1 is able to append demographic data in any legally permitted detail and

offer a service bureau for address checking on a transaction basis. This eliminates the need for end-user firms

to update the 220 databases of country addresses. Name and address preparation for the US Postal Service (or

any national postal system) is a dull application that costs a small fortune if a firm makes an error. Thus, a

specialized application is important here.

Information quality future — reducing uncertainty via quality at no extra charge: Information defects

generate uncertainty in simple ways, such as when the address is wrong and the client’s package is lost. They

also cause uncertainty in complex ways, such as when what counts as a “sale” is ambiguous between

receiving a customer order vs. receiving a customer payment. Thus, the need for business enterprises to

manage and reduce uncertainty drives forward the information quality imperative. This imperative addresses

and, if successful, reduces the risk of surprises such as schedule slips, disappointed customers, solving the

wrong problem or budget overruns due to information quality defects. This, in turn, enables implementation

of state-of-the-art data warehouses that support high-impact business applications in CRM, supply chain

management and business intelligence. Due to a wide diversity of heterogeneous data sources in the

enterprise, both design and implementation skills will be needed to transform dumb data into useful

information. When the costs of defective data are added up — operational costs such as job failures, rework

and duplicate data, as well as missed business opportunities due to uncertainty — information quality is

available at no extra charge. Giga predicts that technology for deduplication, customer identification and

entry-level data standardization functions will ship “at no extra charge” with the underlying relational

database by the end of 2003 [.7p]. This is analogous to the trend whereby ETL technology, data mining and

online analytical processing (OLAP) have been driven into the relational database.

Alternative View

Two factors will limit the growth of the data quality market to single digits: The first relates to vendors, the

second to end-user firms (customers). First, a review of the software features and functions indicates many

data quality software providers are technology driven, not market oriented. The business application is, by

definition, where the business value shows up. But most of the data quality firms seem constitutionally

lacking in the ability or experience to market data quality as an enabler at the application level. Another

constraint that will significantly limit the growth of the data quality market is the similarity between the

corporate reaction to data defects and the discovery that a firm has been the victim of fraud. Firms do not

want the events discussed publicly. It seems to imply lack of diligence, skill or intelligence on the part of the

victim. Whether data quality is the weak underbelly of CRM (and diverse other applications) is a question whose answer

will remain a well-kept secret, because lack of data quality opens up potential liabilities that firms have an

incentive to overlook and not mention. Absent an information quality safe harbor policy to drive out the fear

of reporting data quality issues, such issues will continue to be swept under the rug. Thus, the prospective end

users of data quality products will continue to be addicted to data and drowning in it, all the while living in

denial of data quality issues.

Findings

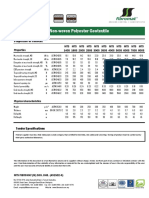

Since August 2000, the total market for data and information quality has grown from $250 million to an

estimated $580 million, including both software and services. This represents average annual growth of 66 percent (a CAGR of roughly 52 percent), which is

no small accomplishment during the past two years, albeit for a market of modest size. That growth consists

of two parts — software and services — that have grown at rates of 40 percent and nearly 55 percent

(respectively) over that period (see table below).

All of the best-of-breed data quality vendors on the table of market shares — Ascential, DataFlux, Firstlogic,

Innovative Systems, Group 1 and Trillium — provide standardization, identification, matching and

deduplication software that can be applied to a wide variety of data types, not just customer data. However,

with the amount of activity surrounding CRM, these software providers have been attracted to the market and

have positioned products and solutions to address the real needs — it’s where the data is.

The market for addressing information quality defects is being extended to improving aging database

infrastructure and outmoded data management practices in the public sector. Thus, all sorts of databases in

the public sector (voter registration, driver’s licenses, hazardous waste haulers, pilot’s licenses,

immigration, education and health records) will be enhanced and interconnected where appropriate policies

and protections warrant it. From a public policy point of view, something such as national identity cards is

far less significant than operational excellence and information quality work in the trenches, for instance,

getting driver’s license and voter registration data stores accurately aligned and synchronized.

Stand-alone data aggregators have found that customer data integration and information quality is a growth

industry. After having built up databases of individuals and households in the 100 million record range as a

result of direct marketing and consumer credit and credit reporting, firms such as Acxiom, CACI, Claritas,

Equifax, Experian, Harte-Hanks, Polk and TransUnion now find themselves in the business of verifying,

matching and integrating customer data.

The point at which the ETL tool is invoked is a good time to check data and information quality. Data quality

vendors that can be invoked from ETL tools are cited under Recommendations.

When the costs of defective data are added up — operational costs such as job failures, rework and duplicate

data, as well as missed business opportunities due to uncertainty — information quality is available at no

extra charge. The prediction is that technology for deduplication, customer identification and entry-level data

standardization functions will ship “at no extra charge” with the underlying relational database by the end of

2003. This is analogous to the trend whereby ETL technology, data mining and OLAP capabilities have been

driven into the relational database.

Recommendations

End-user enterprises will not be able to “plug into” data and information quality as easily as buying a

software connector or adapter for an ETL tool or CRM application. Therefore, enterprises should plan on

completing design work to understand and reconcile the diverse schemas that represent customers, products

and other key data dimensions in their firms. A design for a consistent and unified view of customers,

products and related entities is on the critical path of intelligent information integration.

Certified data quality interfaces that specifically target Siebel are available — Ascential INTEGRITY for

Siebel eBusiness Applications, Group 1 Data Quality Connector for Siebel, Innovative i/Lytics for Siebel,

Firstlogic Siebel Connector and Trillium Connector for Siebel. Be prepared to perform additional design

work, since no single-system plug-in can provide integration for multiple upstream data sources.

End-user clients in the public sector will find that the data and information quality vendors are increasingly

responsive to their special needs and willing to go the extra mile to accommodate procurement and bidding

requirements. Innovative Systems, Search Software America (SSA) and Trillium have solutions targeting

law enforcement and immigration applications. In particular, SSA has a long history of being able to tune its

technology for “high-risk” applications where it is unacceptable to get a false negative (e.g., a criminal is

allowed entry into the country). Group 1, SAS, Trillium and Ascential also have separate groups to handle

government contracts.

The competition between Ascential MetaRecon, Avellino Discovery and Evoke Axio will cause prices to fall

further, which is good news for buyers. Organizations in need of data profiling and analysis tools should plan

on exploiting these competitive market dynamics.

Firstlogic IQ Insight exposes data assessment functions via a service bureau offering with reporting over the

Web. Comparable service offerings are Group 1 Hot Data, Sagent Centrus Record Processing and Acxiom Data

Network.

Trillium Software System, INTEGRITY and Firstlogic can be invoked by ETL tools from Ascential and

Informatica in a way that makes them look and behave like subfunctions of the ETL tool. So “seamless” is

not hype — the integration is truly seamless. Likewise, SAS Warehouse Administrator is fully integrated

with DataFlux in a similar way through the Blue Fusion Client-Server implementation. Group 1 is able to

invoke Informatica and iWay ETL technologies.

For organizations requiring data quality processing using the mainframe, Trillium, Innovative Systems,

Group 1, DataFlux (SAS) and Ascential (INTEGRITY) are first choices. SDKs that enable mainframe

processing are also available from SSA and Innovative Systems.

End-user clients with data quality issues that cannot be adequately addressed by standardization and parsing,

high-performance requirements where standardization presents unacceptable overhead, high-risk applications

(e.g., law enforcement or immigration), or multi-language or global naming requirements (including

double-byte character sets) will want to consider a combination of stabilization algorithms (e.g., Soundex, NYSIIS)

and context-based rules as exemplified in SSA’s and DataFlux’s approaches. Trillium Software is Unicode-enabled

and has reference customers that are cleansing double-byte character sets. It reportedly has more than 40

customers using the product on Japanese character sets in Kana, Kanji, Katakana and Romaji.
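
To make the idea of a stabilization algorithm concrete, the following is a minimal sketch of the classic American Soundex code in Python. It is a generic textbook version offered for illustration only; it is not the proprietary matching logic of SSA, DataFlux or any other vendor named here, and the function name `soundex` is an assumption of this sketch. It handles Latin letters only, which is one reason such algorithms need to be combined with context-based rules for global or double-byte naming data.

```python
def soundex(name: str) -> str:
    """Return the four-character American Soundex code for a name.

    Illustrative sketch only: letters outside A-Z are treated like vowels.
    """
    codes = {}
    for group, digit in (("BFPV", "1"), ("CGJKQSXZ", "2"), ("DT", "3"),
                         ("L", "4"), ("MN", "5"), ("R", "6")):
        for ch in group:
            codes[ch] = digit

    letters = [ch for ch in name.upper() if ch.isalpha()]
    if not letters:
        return ""

    first = letters[0]
    result = [first]
    prev = codes.get(first, "")
    for ch in letters[1:]:
        if ch in "HW":
            continue  # H and W do not separate letters that share a code
        digit = codes.get(ch, "")
        if digit and digit != prev:
            result.append(digit)
        prev = digit  # a vowel resets prev, so a repeated code after it counts again
    # Pad with zeros or truncate so the code is always four characters long
    return ("".join(result) + "000")[:4]


# Differently spelled names stabilize to the same key
print(soundex("Robert"), soundex("Rupert"))  # R163 R163
print(soundex("Smith"), soundex("Smyth"))    # S530 S530
```

Two records whose names produce the same code become candidates for a match; a context-based rule (for example, comparing address or date of birth) would then decide whether they really refer to the same party.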

Data quality should be checked every time the data is touched, at the front end, middle and back end of the

system. It is not a one-time event but a continuous process. This applies first to the operational transactional

systems. Data entry screens, user interfaces or points of data capture should include validation and editing

logic. Of course, this implies the rules of validation and the relations between data elements are known and

can be coded. If not, an additional data engineering and profiling task is implied. As indicated, this guideline

extends to any and all systems, operational or decision support (data warehouse).
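
As a rough illustration of validation and editing logic at the point of data capture, the sketch below checks a single customer record in Python. The field names (`name`, `postal_code`, `opened`, `closed`), the US ZIP code format and the function name `validate_customer` are all hypothetical choices made for this example; real rules would come from the data engineering and profiling work described above.

```python
import re
from datetime import date
from typing import List


def validate_customer(record: dict) -> List[str]:
    """Return a list of validation errors; an empty list means the record passed."""
    errors = []

    # Single-field rule: a required value must be present
    name = (record.get("name") or "").strip()
    if not name:
        errors.append("name is required")

    # Single-field rule: the value must match a known format (US ZIP assumed here)
    postal = (record.get("postal_code") or "").strip()
    if not re.fullmatch(r"\d{5}(-\d{4})?", postal):
        errors.append("postal_code must be a 5-digit or ZIP+4 code")

    # Cross-field rule: a relation between two data elements
    opened, closed = record.get("opened"), record.get("closed")
    if opened and closed and closed < opened:
        errors.append("closed date precedes opened date")

    return errors


good = {"name": "Ann Lee", "postal_code": "60601", "opened": date(2002, 1, 15)}
bad = {"name": " ", "postal_code": "6060",
       "opened": date(2002, 1, 15), "closed": date(2001, 12, 31)}
print(validate_customer(good))  # []
print(validate_customer(bad))
```

The same function could be called from a data entry screen, from an ETL job in the middle of the pipeline and from a warehouse load, which is one way to check the data every time it is touched.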

References

Related Giga Research

Planning Assumptions

Data Quality Methodologies and Technologies, Lou Agosta

Developing an Integrated Customer Information System — Approaches and Trade-Offs, Richard Peynot and

Henry Peyret

IdeaBytes

Customer Data Quality: The Newest CRM Application, Erin Kinikin

CRM Is Not a Substitute for Good Customer Data Quality Processes, Erin Kinikin

Information Quality Market Drivers, Lou Agosta

The Public Sector Gets Serious About Data Infrastructure Improvement, Lou Agosta

Data Quality Breakdown in Election Spells Opportunity for Technology Upgrades, Lou Agosta

IT Trends 2002: Data Warehousing, Lou Agosta

Search Software America Has Novel Approach to Overcoming Poor Data Quality, Lou Agosta

Data Quality — It’s Not Just For Data Warehouses, Lou Agosta

Look at Evoke’s Heritage to Grasp Its Special Capabilities, Lou Agosta

Information Infomediaries for Demographics, Lou Agosta

IT Projects Accessing Legacy Databases Benefit From Data Profiling During Planning, Philip Russom

Uses and Limitations of Real-Time Data Quality Functions, Philip Russom

The Data Quality Market 2001 (in millions of dollars)

Vendor (product)                                         Software  Service   Total SW &  Share of  Share of       Share of Total
                                                         Revenue   Revenue   Service     Total SW  Total Service  Revenue (SW
                                                                             Revenue     Revenue   Revenue        & Service)
Group 1 (DataSight)                                      $36       $42       $78         14%       13%            13%
Firstlogic (Information Quality Suite)                   $35       $22       $57         14%       7%             10%
Ascential (INTEGRITY, Metarecon, and DataCleanse DS XE)  $33       $15       $48         13%       5%             8%
Trillium Software System                                 $31       $4        $35         12%       1%             6%
QAS                                                      $30       $3        $33         12%       1%             6%
Innovative Systems (i/Lytics)                            $20       $5        $25         8%        2%             4%
Evoke                                                    $8        $2        $10         3%        1%             2%
DataFlux (BlueFusion)                                    $6        $1        $7          2%        0.3%           1%
Avellino (Discovery)                                     $4        $3        $7          2%        1%             1%
Data aggregators/integrators                             $0        $210      $210        0%        63%            36%
Other                                                    $46       $24       $70         18%       7%             12%
Total                                                    $249      $331      $580        100%      100%           100%

Source: Giga Information Group
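
The share columns in the table follow directly from the revenue columns. The short Python sketch below recomputes them from the software and service figures copied from the table; the names `REVENUE`, `totals` and `market_shares` are assumptions of this example, not part of the original report.

```python
# (software revenue, service revenue) in millions of dollars, copied from the table
REVENUE = {
    "Group 1": (36, 42),
    "Firstlogic": (35, 22),
    "Ascential": (33, 15),
    "Trillium": (31, 4),
    "QAS": (30, 3),
    "Innovative Systems": (20, 5),
    "Evoke": (8, 2),
    "DataFlux": (6, 1),
    "Avellino": (4, 3),
    "Data aggregators/integrators": (0, 210),
    "Other": (46, 24),
}


def totals(revenue):
    """Return (total software, total service, grand total) revenue."""
    sw = sum(s for s, _ in revenue.values())
    svc = sum(v for _, v in revenue.values())
    return sw, svc, sw + svc


def market_shares(revenue):
    """Return each vendor's percentage share of software, service and total revenue."""
    sw_total, svc_total, grand_total = totals(revenue)
    return {
        vendor: (100 * sw / sw_total,
                 100 * svc / svc_total,
                 100 * (sw + svc) / grand_total)
        for vendor, (sw, svc) in revenue.items()
    }


print(totals(REVENUE))  # (249, 331, 580)
for vendor, (sw_pct, svc_pct, total_pct) in market_shares(REVENUE).items():
    print(f"{vendor}: {sw_pct:.1f}% / {svc_pct:.1f}% / {total_pct:.1f}%")
```

Rounded to whole percentages, the computed shares agree with the published columns; for example, Group 1 comes out at roughly 14 percent of software revenue and 13 percent of total revenue.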
