Computer Organization and Architecture Module 3

Uploaded by

Assini Hussain

08.503 Computer Organization and Architecture

Module 3

Memory System Hierarchy

An ideal memory system would have unlimited capacity and be as fast as possible. In practice this cannot be built, but it can be approximated by exploiting the principle of locality:

1. Temporal locality: If an item is referenced, it will tend to be referenced again soon.
2. Spatial locality: If an item is referenced, items whose addresses are close by will tend to be referenced soon.

Programs exhibit both kinds of locality. Most programs contain loops, so instructions and data are likely to be accessed repeatedly, which gives temporal locality. Instructions are also normally accessed sequentially, which gives high spatial locality.

The memory of a computer is therefore implemented as a memory hierarchy. It consists of multiple levels of memory with different speeds and sizes. Faster memory is more expensive per bit than slower memory and is therefore smaller. Three primary technologies are used for building memory hierarchies:

1) Main memory is built from DRAM, which is less costly per bit than SRAM but slower.
2) Cache memory is built from SRAM, which is faster and sits at the levels closer to the CPU, but costs more per bit than DRAM.
3) Secondary memory is built from hard disk (magnetic disk), which is the slowest and largest level.

The three technologies and their typical performance for the year 2004 are shown below:

Based on these differences, the basic structure of a memory hierarchy is shown below:

Department of ECE, VKCET


The level closest to the processor is the fastest and the smallest. Levels farther from the processor take progressively longer to access and have larger capacity. All data is stored at the lowest level (the one farthest from the processor), and each level closer to the processor holds a subset of it. Memory close to the processor is costly per bit, while memory farther away is more economical. Another diagram for the structure of the memory hierarchy is shown below:

Cache Memories

The cache is a small and very fast memory placed between the processor and the main memory. Its purpose is to make the main memory appear to the processor to be much faster than it actually is. This approach relies on the two properties of temporal locality and spatial locality. Temporal locality means that whenever an item is first needed, it should be brought into the cache, because it is likely to be needed again soon. Spatial locality means that instead of fetching just one item from the main memory to the cache, it is useful to fetch several items located at adjacent addresses as well. Consider the two-level memory hierarchy shown below:

The minimum unit of information that can be either present or not present in the cache is called a block or a line.


Cache Hits

The processor issues Read and Write requests using addresses that refer to locations in the main memory. The cache control circuitry determines whether the requested word currently exists in the cache. If it does, the Read or Write operation is performed on the appropriate cache location, and a Read hit or Write hit is said to have occurred.

For a Read operation, the main memory is not involved. For a Write operation, the system can proceed in one of two ways. In the first technique, called the write-through protocol, both the cache location and the main memory location are updated. The second technique is to update only the cache location and to mark the block containing it with an associated flag bit, often called the dirty or modified bit. The main memory location of the word is updated later, when the block containing this marked word is removed from the cache to make room for a new block. This technique is known as the write-back, or copy-back, protocol.

The write-through protocol is simpler than the write-back protocol, but it results in unnecessary Write operations in the main memory when a given cache word is updated several times during its cache residency. The write-back protocol also involves unnecessary Write operations, because all words of the block are eventually written back, even if only a single word has been changed while the block was in the cache. The write-back protocol is used most often, to take advantage of its high speed.

Cache Misses

A Read operation for a word that is not in the cache constitutes a Read miss. When it occurs, the block of words containing the requested word is copied from the main memory into the cache. After the entire block is loaded into the cache, the particular word requested is forwarded to the processor. Alternatively, this word may be sent to the processor as soon as it is read from the main memory. This approach is called load-through, or early restart. It reduces the processor's waiting time, but at the expense of more complex circuitry.

When a Write miss occurs in a computer that uses the write-through protocol, the information is written directly into the main memory.
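The trade-off between the two write protocols can be illustrated with a small count of main memory writes. The following is a minimal sketch, not a model of any real cache: it assumes a toy cache that holds a single 16-word block, assumes that a write miss under write-back first loads the block into the cache, and counts a final flush of the dirty block at the end. The function name `memory_word_writes` and the constant `BLOCK_WORDS` are made up for this illustration.

```python
BLOCK_WORDS = 16  # words per block (assumed size for this sketch)


def memory_word_writes(write_addresses, policy):
    """Count words written to main memory for a sequence of word writes.

    The toy cache holds one block at a time.
    policy is "write-through" or "write-back".
    """
    resident = None    # number of the block currently held in the cache
    dirty = False      # dirty (modified) bit of the resident block
    words_written = 0

    for address in write_addresses:
        block = address // BLOCK_WORDS
        if policy == "write-through":
            # Every write updates main memory as well as the cache.
            words_written += 1
            continue
        # Write-back: only the cache is updated; memory is updated on eviction.
        if block != resident:
            if dirty:
                words_written += BLOCK_WORDS  # whole block is copied back
            resident = block                  # assumed: block loaded on a write miss
        dirty = True

    if policy == "write-back" and dirty:
        words_written += BLOCK_WORDS          # flush the last dirty block
    return words_written


# 100 writes to the same word: write-through repeats the memory write each time.
print(memory_word_writes([0] * 100, "write-through"))
print(memory_word_writes([0] * 100, "write-back"))
# One write to each of two blocks: write-back copies back two whole blocks.
print(memory_word_writes([0, 16], "write-through"))
print(memory_word_writes([0, 16], "write-back"))
```

Repeated writes to one word favor write-back, while isolated writes scattered over many blocks favor write-through, which matches the two kinds of unnecessary Write operations described above.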


Mapping Functions

Mapping functions are the methods for determining where memory blocks are placed in the cache. Consider a cache consisting of 128 blocks of 16 words each, for a total of 2048 (2K) words, and assume that the main memory is addressable by a 16-bit address. The main memory then has 64K words, which we will view as 4K blocks of 16 words each. For simplicity, we assume that consecutive addresses refer to consecutive words.

Direct Mapping

Direct mapping is the simplest way to determine cache locations. In this technique, block j of the main memory maps onto block (j modulo 128) of the cache described above. Thus, whenever one of the main memory blocks 0, 128, 256, ... is loaded into the cache, it is stored in cache block 0. Blocks 1, 129, 257, ... are stored in cache block 1, and so on. Since more than one memory block is mapped onto a given cache block position, contention may arise for that position even when the cache is not full. For example, instructions of a program may start in block 1 and continue in block 129, possibly after a branch. This contention is resolved by allowing the new block to overwrite the currently resident block.


The memory address in direct mapping is divided as shown below:

The fields of the main memory address are determined as follows:

number of lines = cache size / line size
bits for the word (offset) field = log2(line size)
bits for the cache block (index) field = log2(number of lines)
tag bits = the remaining upper bits

For the 2048-word cache with 16 words per line:

No. of lines = 2048 / 16 = 128
Word field = log2(16) = 4 bits (the low-order 4 bits)
Block field = log2(128) = 7 bits
Tag field = the remaining high-order 5 bits

The tag bits identify which of the main memory blocks mapped into this cache position is currently resident in the cache. The high-order 5 bits of the main memory address are compared with the tag bits associated with that cache location. If they match, the desired word is in that block of the cache. If there is no match, the block containing the required word must first be read from the main memory and loaded into the cache. The direct-mapping technique is easy to implement, but it is not very flexible.
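The field split and the tag comparison can be sketched in a few lines of Python for the 16-bit address and the 128-block, 16-words-per-block cache used in these notes. The names `split_direct` and `DirectMappedCache` are hypothetical, and the sketch tracks only tags, not the stored data.

```python
WORD_BITS = 4    # log2(16 words per block)
BLOCK_BITS = 7   # log2(128 cache blocks)
# The remaining 5 high-order bits of the 16-bit address are the tag.


def split_direct(address):
    """Split a 16-bit word address into (tag, cache block, word) fields."""
    word = address & ((1 << WORD_BITS) - 1)
    block = (address >> WORD_BITS) & ((1 << BLOCK_BITS) - 1)
    tag = address >> (WORD_BITS + BLOCK_BITS)
    return tag, block, word


class DirectMappedCache:
    """Tag store of a direct-mapped cache (data storage is omitted)."""

    def __init__(self):
        # None means that no memory block has been loaded into the position.
        self.tags = [None] * (1 << BLOCK_BITS)

    def access(self, address):
        """Return True on a hit; on a miss, load the block and return False."""
        tag, block, _ = split_direct(address)
        if self.tags[block] == tag:
            return True
        self.tags[block] = tag   # the new block overwrites the resident block
        return False


cache = DirectMappedCache()
first_word_of_block_1 = 1 * 16
first_word_of_block_129 = 129 * 16
print(split_direct(first_word_of_block_1))     # tag 0, cache block 1
print(split_direct(first_word_of_block_129))   # tag 1, same cache block 1
print(cache.access(first_word_of_block_1))     # miss: block 1 is loaded
print(cache.access(first_word_of_block_129))   # miss: block 129 replaces block 1
print(cache.access(first_word_of_block_1))     # miss again, although the cache is almost empty
```

Memory blocks 1 and 129 differ only in their tag, so they compete for cache block 1 even though the other 127 positions are unused.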


Associative Mapping

Associative mapping is the most flexible mapping method. A main memory block can be placed into any cache block position, as shown below.

Here 12 tag bits (the 16 address bits minus the 4-bit word field) are required to identify a memory block when it is resident in the cache. The tag bits of an address received from the processor are compared to the tag bits of each block of the cache to see if the desired block is present. This method gives complete freedom in choosing the cache location in which to place the memory block, resulting in a more efficient use of the space in the cache. When a new block is brought into the cache, it replaces (ejects) an existing block only if the cache is full. In this case, a replacement algorithm is required to select the block to be replaced. The complexity of an associative cache is higher than that of a direct-mapped cache, because all 128 tag patterns must be searched to determine whether a given block is in the cache. To avoid a long delay, the tags are searched in parallel. A search of this kind is called an associative search.


Set-Associative Mapping

Set-associative mapping combines the direct-mapping and associative-mapping techniques. The blocks of the cache are grouped into sets, and the mapping allows a block of the main memory to reside in any block of a specific set. The contention problem of the direct method is eased by having a few choices for block placement. At the same time, the hardware cost is reduced by decreasing the size of the associative search. An example of the set-associative-mapping technique is shown below for a cache with two blocks per set.

In this case, memory blocks 0, 64, 128, ..., 4032 map into cache set 0, and they can occupy either of the two block positions within this set. With 64 sets, the 6-bit set field of the address determines which set of the cache might contain the desired block. The 6-bit tag field of the address is then associatively compared to the tags of the two blocks of the set to check if the desired block is present. This two-way associative search is simple to implement.
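The three mapping methods differ only in how many blocks form a set, so the field widths quoted in these notes can be checked with one small function. This is an illustrative sketch; `address_fields` and `cache_set` are hypothetical helper names, and all sizes are assumed to be powers of two.

```python
def address_fields(address_bits, cache_blocks, words_per_block, blocks_per_set):
    """Return (tag_bits, set_bits, word_bits) for a cache whose sizes are powers of two."""
    word_bits = words_per_block.bit_length() - 1     # log2 of a power of two
    sets = cache_blocks // blocks_per_set
    set_bits = sets.bit_length() - 1
    tag_bits = address_bits - set_bits - word_bits   # whatever is left over
    return tag_bits, set_bits, word_bits


def cache_set(memory_block, sets):
    """Set into which a main memory block is mapped."""
    return memory_block % sets


# 16-bit addresses, 128 cache blocks, 16 words per block
print(address_fields(16, 128, 16, 1))     # direct mapping: one block per set
print(address_fields(16, 128, 16, 2))     # two-way set-associative
print(address_fields(16, 128, 16, 128))   # fully associative: a single set
print([cache_set(j, 64) for j in (0, 64, 128, 4032)])
```

The results are (5, 7, 4), (6, 6, 4) and (12, 0, 4), matching the 5-bit, 6-bit and 12-bit tags given for the three methods, and memory blocks 0, 64, 128 and 4032 all fall into set 0.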


Valid Bit and Stale Data

Initially the cache contains no valid data. This is recorded with a control bit, called the valid bit, which must be provided for each cache block to indicate whether the data in that block are valid. The valid bits of all cache blocks are set to 0 when power is initially applied to the system. During program execution, the valid bit of a given cache block is set to 1 when a memory block is loaded into that location. The processor fetches data from a cache block only if its valid bit is equal to 1.

Valid bits are also set to 0 when new programs or data are loaded from disk into main memory, because such transfers use the DMA mechanism and bypass the cache. If the memory blocks being updated are currently in the cache, the valid bits of the corresponding cache blocks are set to 0. The valid bit thus ensures that the processor will not fetch stale data from the cache.

A similar precaution is needed with the write-back protocol. Here the main memory copy may be stale, because changes may exist only in the cached copy. Before such data are transferred out of main memory, the cache is flushed, which forces the modified blocks to be written back to main memory.

Replacement Algorithms

In a direct-mapped cache, the position of each block is predetermined by its address, so there is no choice to make when a block is replaced. In associative and set-associative caches there is some flexibility, so both require a replacement algorithm that decides which block to remove from the cache to make room for the referenced block from main memory.

The property of locality of reference means that program execution usually stays in localized areas for reasonable periods of time. So there is a high probability that blocks that have been referenced recently will be referenced again soon. Therefore, when a block is to be overwritten, it is sensible to overwrite the one that has gone the longest time without being referenced. This block is called the least recently used (LRU) block, and the technique is called the LRU replacement algorithm. For the LRU algorithm, the cache controller must track references to all blocks as computation proceeds.

Suppose the LRU block of a 4-block set in a set-associative cache must be tracked. A 2-bit counter can be used for each block. When a hit occurs, the counter of the referenced block is set to 0. Counters with values originally lower than that of the referenced block are incremented by one, and all others remain unchanged. When a miss occurs and the set is not full, the counter associated with the new block loaded from the main memory is set to 0, and the values of all other counters are increased by one. When a miss occurs and the set is full, the block with the counter value 3 is removed, the new block is put in its place, and its counter is set to 0. The other three block counters are incremented by one. It can be easily verified that the counter values of occupied blocks are always distinct.

The LRU algorithm performs well for many access patterns, but it can lead to poor performance in some cases, such as accessing sequential elements of an array that is slightly too large to fit into the cache.
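The counter rules for a 4-block set can be followed step by step in a short simulation. The sketch below assumes that blocks are identified by arbitrary tags (single letters here) and uses a Python dictionary in place of the per-block 2-bit hardware counters; the class name `LRUSet` is made up for this illustration.

```python
class LRUSet:
    """One set of a set-associative cache with counter-based LRU tracking."""

    def __init__(self, ways=4):
        self.ways = ways
        self.counter = {}   # tag of resident block -> counter (0 = most recent)

    def access(self, tag):
        """Reference a block; return the tag of the evicted block, or None."""
        evicted = None
        if tag in self.counter:
            # Hit: only counters lower than the referenced one are incremented.
            old = self.counter[tag]
            for other in self.counter:
                if self.counter[other] < old:
                    self.counter[other] += 1
        else:
            if len(self.counter) == self.ways:
                # Miss with a full set: remove the block whose counter is 3.
                evicted = next(t for t, c in self.counter.items()
                               if c == self.ways - 1)
                del self.counter[evicted]
            # Miss: every block that stays in the set ages by one.
            for other in self.counter:
                self.counter[other] += 1
        self.counter[tag] = 0   # the referenced block is now the most recent
        return evicted


lru = LRUSet()
for tag in "ABCD":          # four misses fill the set
    lru.access(tag)
print(lru.counter)          # A is the oldest block (counter 3)
lru.access("B")             # hit on B: C and D age, A is unchanged
print(lru.counter)
print(lru.access("E"))      # miss with a full set: A is evicted
print(sorted(lru.counter.values()))   # counters stay distinct: 0, 1, 2, 3
```

After the hit on B the counters are A=3, B=0, C=2, D=1, so the miss on E removes A, and the counter values of the occupied blocks remain distinct throughout.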


The performance of the LRU algorithm can be improved by introducing a small amount of randomness in deciding which block to replace.

Performance Considerations

One key factor in the commercial success of a computer is its performance, which depends on how fast machine instructions can be brought into the processor and how fast they can be executed. Another key factor is the cost. A common measure of success is therefore the price/performance ratio.

The main purpose of the memory hierarchy is to create a memory that the processor sees as having a short access time and a large capacity. With a cache, the processor can access instructions and data at cache speed whenever they are present in the cache. So the performance of the computer depends on how frequently the requested instructions and data are found in the cache.

Hit Rate and Miss Penalty

An indicator of the effectiveness of a memory hierarchy is the success rate in accessing information at its various levels. A successful access to data in a cache is called a hit. The number of hits stated as a fraction of all attempted accesses is called the hit rate, and the miss rate is the number of misses stated as a fraction of attempted accesses.

Ideally, the entire memory would appear to the processor as a single memory unit that has the access time of the cache on the processor chip and the size of the magnetic disk. How closely this ideal is approached depends largely on the hit rate at the different levels of the memory hierarchy. High-performance computers need hit rates well over 0.9.

A performance penalty is incurred because of the extra time needed to bring a block of data from a slower unit in the memory hierarchy to a faster unit. During that period, the processor is stalled waiting for instructions or data. The total access time seen by the processor when a miss occurs is called the miss penalty. Consider a system with only one level of cache. Here the miss penalty consists almost entirely of the time to access a block of data in the main memory.

Let h be the hit rate, M the miss penalty, and C the time to access information in the cache. The average access time experienced by the processor is

$$t_{avg} = hC + (1 - h)M$$

An example: Consider a computer with a cache access time of $\tau$ and a main memory access time of $10\tau$. When a cache miss occurs, a block of 8 words is transferred from the main memory to the cache. It takes $10\tau$ to transfer the first word of the block, and the remaining 7 words are transferred at the rate of one word every $\tau$ seconds. The miss penalty also includes a delay of $\tau$ for the initial access to the cache, which misses, and another delay of $\tau$ to transfer the word to the processor after the block is loaded into the cache. Thus, the miss penalty in this computer is given by:

$$M = \tau + 10\tau + 7\tau + \tau = 19\tau$$

Assume that 30 percent of the instructions in a typical program perform a Read or a Write operation, which means that there are 130 memory accesses for every 100 instructions executed. Assume that the hit rates in the cache are 0.95 for instructions and 0.9 for data. Assume further that the miss penalty is the same for both read and write accesses.
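The figures in this example can be checked numerically. The sketch below expresses every time in units of the cache access time, which is an assumption made here for convenience, and the function and variable names are chosen for this illustration only.

```python
def average_access_time(h, C, M):
    """t_avg = h*C + (1 - h)*M, with all times in the same unit."""
    return h * C + (1 - h) * M


TAU = 1.0   # cache access time, used as the unit of time
C = TAU
# initial cache access + first word from memory + 7 further words + forwarding
M = TAU + 10 * TAU + 7 * TAU + TAU

t_instr = average_access_time(0.95, C, M)   # instruction fetches
t_data = average_access_time(0.90, C, M)    # data reads and writes
# 100 instruction fetches plus 30 data accesses per 100 instructions
total_with_cache = 100 * t_instr + 30 * t_data

print(f"miss penalty M         = {M:g} tau")
print(f"t_avg for instructions = {t_instr:.2f} tau")
print(f"t_avg for data         = {t_data:.2f} tau")
print(f"130 accesses take      = {total_with_cache:.0f} tau")
```

With these parameters an instruction fetch costs about 1.9 and a data access about 2.8 cache access times on average, so the 130 accesses take about 274 cache access times in total.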


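Then, a rough estimate of the improvement in memory performance that results from using the cache can be obtained as follows, where $\tau$ is the cache access time and $10\tau$ is the main memory access time:

$$\frac{\text{Time without cache}}{\text{Time with cache}} = \frac{130 \times 10\tau}{100(0.95\tau + 0.05 \times 19\tau) + 30(0.9\tau + 0.1 \times 19\tau)} = \frac{1300\tau}{274\tau} \approx 4.7$$

This result shows that the cache makes the memory appear almost five times faster than without it. Also,

$$\frac{\text{Time for real cache}}{\text{Time for ideal cache}} = \frac{100(0.95\tau + 0.05 \times 19\tau) + 30(0.9\tau + 0.1 \times 19\tau)}{130\tau} = \frac{274\tau}{130\tau} \approx 2.1$$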
This shows that a 100% hit rate in the cache would make the memory appear twice as fast as when realistic hit rates are used.

The hit rate can be improved by making the cache larger, but this increases the cost. Another possibility is to increase the cache block size while keeping the total cache size constant, to take advantage of spatial locality. If all items in a larger block are needed in a computation, then it is better to load these items into the cache in a single miss, rather than loading several smaller blocks as a result of several misses. But larger blocks are effective only up to a certain size. Beyond this, the hit rate is limited by the fact that some items may not be referenced before the block is ejected (replaced). Also, larger blocks take longer to transfer, and hence increase the miss penalty. Since the performance of a computer is affected positively by increased hit rate and negatively by increased miss penalty, block size should be neither too small nor too large. In practice, block sizes in the range of 16 to 128 bytes are the most popular choices.

Interleaved Memory

Cache misses are satisfied from main memory, which is constructed from DRAM. Such memories are designed with the primary emphasis on capacity rather than access time. It is difficult to reduce the memory access time, but the miss penalty can be decreased if the bandwidth from the memory to the cache is increased. Higher bandwidth also allows larger blocks to be used while keeping the miss penalty low. Three methods for designing memory systems are:

1) One-word-wide organization
2) Wide memory organization
3) Interleaved memory organization

One-word-wide memory: Here the memory is one word wide and all accesses are made sequentially.


Wide memory: Here the bandwidth is increased by widening the memory and the buses between the processor and memory. This allows parallel access to all words of the block.

Interleaved memory: Here the bandwidth is increased by widening the memory but not the interconnection bus.

Instead of making the entire datapath between memory and cache wider, the memory chips can be organized in banks so that multiple words are read or written in one access time, rather than a single word each time. Each bank is one word wide, so the width of the bus and the cache need not change. Sending an address to several banks permits them all to read simultaneously. This scheme is called interleaving. Its main advantage is that the full memory latency is incurred only once per block. For example, with four banks, a single access time is enough to read one word from each of the four banks.

Virtual Memory

In most computer systems, the physical main memory is not as large as the address space of the processor. A processor that issues 32-bit addresses to a byte-addressable memory has an address space of 4G bytes ($2^{32} \times 8$ bits). The size of the main memory in a typical computer with a 32-bit processor may range from 1G to 4G bytes.


If a program does not completely fit into the main memory, the parts of it not currently being executed are stored on a secondary storage device (magnetic disk). When these parts are needed for execution, they must first be brought into the main memory, possibly replacing other parts that are already in the memory. These actions are performed automatically by the operating system, using a scheme known as virtual memory.

In a virtual memory system, the processor references instructions and data in an address space that is independent of the available physical main memory space. The binary addresses that the processor issues for either instructions or data are called virtual or logical addresses. These addresses are translated into physical addresses by a combination of hardware and software actions. If a virtual address refers to a part of the program or data space that is currently in the physical memory, then the contents of the appropriate location in the main memory are accessed immediately. Otherwise, the contents of the referenced address must be brought into a suitable location in the memory before they can be used. A typical organization that implements virtual memory is shown below:

A special hardware unit, called the Memory Management Unit (MMU), keeps track of which parts of the virtual address space are in the physical memory. When the desired data or instructions are in the main memory, the MMU translates the virtual address into the corresponding physical address. Then, the requested memory access proceeds in the usual manner.


If the data are not in the main memory, the MMU causes the operating system to transfer the data from the disk to the memory. Such transfers are performed using the DMA (Direct Memory Access) scheme.

Address Translation

Paging is a simple method for translating virtual addresses into physical addresses. All programs and data are assumed to be composed of fixed-length units called pages, each of which consists of a block of words that occupy contiguous locations in the main memory. Pages commonly range from 2K to 16K bytes in length. Pages should not be too small, because the access time of a magnetic disk is much longer than the access time of the main memory, and this long delay is better spent on a reasonably large transfer. On the other hand, if pages are too large, a significant portion of a page may not be used, yet this unnecessary data will occupy valuable space in the main memory.

Cache techniques and virtual-memory techniques are very similar. They differ mainly in the details of their implementation. The cache bridges the speed gap between the processor and the main memory and is implemented in hardware. The virtual-memory mechanism bridges the size and speed gaps between the main memory and secondary storage and is usually implemented in part by software techniques. A virtual-memory address-translation method based on the concept of fixed-length pages is shown below:
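As a rough sketch of paged translation, the high-order bits of a virtual address select an entry in a table that records where each page resides in main memory, and the low-order bits are kept unchanged as the offset within the page. The code assumes 4K-byte pages (a value within the 2K to 16K range mentioned above) and represents the table as a Python dictionary. The names `translate` and `PageFault`, and the use of `None` for a page that is not in main memory, are assumptions for illustration only.

```python
PAGE_SIZE = 4096    # bytes per page (assumed)
OFFSET_BITS = 12    # log2(PAGE_SIZE)


class PageFault(Exception):
    """Raised when the referenced page is not in main memory."""


def translate(virtual_address, page_table):
    """Translate a virtual address into a physical address.

    page_table maps a virtual page number to the number of the main memory
    page frame that holds the page, or to None if the page is not resident.
    """
    page_number = virtual_address >> OFFSET_BITS    # high-order bits
    offset = virtual_address & (PAGE_SIZE - 1)      # low-order bits
    frame = page_table.get(page_number)
    if frame is None:
        # The operating system would now bring the page in from the disk.
        raise PageFault(page_number)
    return (frame << OFFSET_BITS) | offset


page_table = {0: 5, 1: None, 2: 9}
print(hex(translate(0x2ABC, page_table)))   # page 2 is in frame 9
print(hex(translate(0x0010, page_table)))   # page 0 is in frame 5
try:
    translate(0x1000, page_table)           # page 1 is not in main memory
except PageFault as fault:
    print("page fault on virtual page", fault.args[0])
```

Only the page number is translated; the offset passes through unchanged, so virtual address 0x2ABC becomes physical address 0x9ABC.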


The virtual address generated by the processor is interpreted as a virtual page number (high-order bits) followed by an offset (low-order bits) that specifies the location of a particular byte (or word) within a page. Information about the main memory location of each page is kept in a page table. This information includes the main memory address where the page is stored and the current status of the page. An area in the main memory that can hold one page is called a page frame. The starting address of the page table is kept in a page table base register. By adding the virtual page number to the contents of this register, the address of the corresponding entry in the page table is obtained. The contents of this location give the starting address of the page if that page currently resides in the main memory.

Each entry in the page table also includes some control bits that describe the status of the page while it is in the main memory. One of the control bits indicates the validity of the page, that is, whether the page is actually loaded in the main memory. This bit allows the operating system to invalidate the page without actually removing it. Another bit indicates whether the page has been modified during its residency in the memory. As in cache memories, this information is needed to determine whether the page should be written back to the disk before it is removed from the main memory to make room for another page. Other control bits indicate various restrictions on accessing the page. For example, a program may be given full read and write permission, or it may be restricted to read accesses only.

Interfacing I/O to Processor

A basic feature of a computer is its ability to exchange data with other devices, such as I/O devices. An example is the use of a keyboard and a display screen to process text and graphics. The components of a computer system communicate with each other through an interconnection network, as shown below:

This interconnection network consists of circuits needed to transfer data between the processor, the memory unit, and a number of I/O devices. Each I/O device has an address, so the processor can access it just like the memory.


Some addresses in the address space of the processor are assigned to I/O locations. These locations are usually implemented as bit storage circuits (flip-flops) organized in the form of registers, which are referred to as I/O registers. If the I/O devices and the memory share the same address space, the arrangement is called memory-mapped I/O. Most computers use this method. With memory-mapped I/O, any machine instruction that can access memory can also be used to access I/O devices.

An I/O device is connected to the interconnection network using a circuit called the device interface. This interface provides the means for data transfer and for the exchange of status and control information needed to carry out the data transfers and govern the operation of the device. The interface includes three types of registers that can be accessed by the processor. One register is used for data transfers, another holds information about the current status of the I/O device, and a third stores the information that controls the operational behavior of the device. The data, status, and control registers are accessed by program instructions as if they were memory locations. The following illustration shows how the keyboard and display devices are connected to the processor from the software point of view.

The device interface is usually operated with program-controlled I/O, in which a program executing in the processor directs all the control and data transfer between the processor and the I/O device.

Interrupts

Interrupts are an efficient method for interfacing I/O devices with the processor. In program-controlled I/O the processor must wait for an I/O device to become ready, yet there are many situations where it could perform other tasks during this time. To avoid this waste, the I/O device sends a signal called an interrupt request to the processor when it is ready. The processor is then no longer required to continuously poll the status of I/O devices, and it can use the waiting period to perform other useful tasks. By using interrupts, the waiting periods of the processor can ideally be eliminated.


An example which illustrates the concept of interrupts is shown below.

The program executed in response to an interrupt request is called the interrupt-service routine (ISR); here it is the DISPLAY routine. An interrupt resembles a subroutine call, and the ISR can be regarded as the subroutine. Assume that an interrupt request arrives during execution of instruction i of program 1, the COMPUTE routine. The processor first completes execution of instruction i and then loads the PC with the address of the first instruction of the interrupt-service routine. After execution of the interrupt-service routine, the processor returns to instruction i+1. Therefore, when an interrupt occurs, the current contents of the PC, which point to instruction i+1, must be put in temporary storage in a known location. A Return-from-interrupt instruction at the end of the interrupt-service routine reloads the PC from that temporary storage location, causing execution to resume at instruction i+1.

Using a signal named interrupt acknowledge, the processor informs the device that its request has been recognized, so that the device may remove its interrupt-request signal.

Before execution of the interrupt-service routine starts, status information and the contents of processor registers that may be altered during the execution of that routine must be saved. This saved information must be restored before execution of the interrupted program is resumed. The task of saving and restoring information can be done automatically by the processor or by program instructions. Most modern processors save only the minimum amount of information. This is because saving and restoring registers involves memory transfers that increase the total execution time. Saving registers also increases the delay between the time an interrupt request is received and the start of execution of the interrupt-service routine. This delay is called interrupt latency.

Enabling and Disabling Interrupts: The programmer must have complete control over the events that take place during program execution. The arrival of an interrupt request from an external device causes the processor to suspend the execution of one program and start the execution of another.


An interrupt can arrive at any time; they may alter the sequence of events. Hence, the interruption of program execution must be carefully controlled. A fundamental facility found in all computers is the ability to enable and disable such interruptions as desired. Typically processor has a status register (PS), which contains information about its current state of operation. Let one bit, IE (Interrupt Enable), of this register be assigned for enabling/disabling interrupts. Then, the programmer can set or clear IE to cause the desired action. When IE=1, interrupt requests from I/O devices are accepted and serviced by the processor. When IE =0, the processor simply ignores all interrupt requests from I/O devices. Assuming that interrupts are enabled in both the processor and the device, the following is a typical scenario: 1. The I/O device raises an interrupt request. 1. The processor interrupts the program currently being executed and saves the contents of the PC and PS registers. 2. Interrupts are disabled by clearing the IE bit in the PS to 0. 3. The action requested by the interrupt is performed by the interrupt-service routine, during which time the device is informed that its request has been recognized, and in response, it deactivates the interrupt-request signal. 4. Upon completion of the interrupt-service routine, the saved contents of the PC and PS registers are restored (enabling interrupts by setting the IE bit to 1), and execution of the interrupted program is resumed. Handling Multiple Devices Consider a number of devices capable of initiating interrupts are connected to the processor. The devices are operationally independent and there is no definite order in which they will generate interrupts. For example, device X may request an interrupt while an interrupt caused by device Y is being serviced, or several devices may request interrupts at exactly the same time. One method to handle this situation is by polling. 
In this method, a device that raises an interrupt request sets a bit in its status register, called the IRQ bit, to 1. The devices are checked one after another, and the first device encountered with its IRQ bit set to 1 is the device that is serviced. An appropriate subroutine is then called to provide the requested service. The polling scheme is easy to implement. Its main disadvantage is the time spent checking the IRQ bits of devices that may not be requesting any service.

Another method is vectored interrupts. To reduce the time involved in the polling process, a device requesting an interrupt identifies itself directly to the processor. The processor can then immediately start executing the corresponding interrupt-service routine. A device requesting an interrupt can identify itself if it has its own interrupt-request signal, or it can send a special code to the processor through the device interface. The processor's circuits determine the memory address of the required interrupt-service routine. A common method is to allocate a permanent area in the memory to hold the addresses of interrupt-service routines. These addresses are usually referred to as interrupt vectors, and they are said to constitute the interrupt-vector table. Typically, the interrupt-vector table is in the lowest-address range. The interrupt-service routines may be located anywhere in the memory.
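
The difference between the two schemes can be sketched in a few lines of Python. This is only an illustrative model: the Device class, the device names, the vector codes and the service routines are all hypothetical and are not taken from any particular machine.

```python
# Hypothetical device model: each device has a name and an IRQ status bit.
class Device:
    def __init__(self, name):
        self.name = name
        self.irq = 0  # IRQ bit in the device status register


def poll(devices):
    """Polling: check IRQ bits in order; return the first requesting device
    and the number of status registers that had to be read."""
    checks = 0
    for dev in devices:
        checks += 1
        if dev.irq == 1:
            return dev, checks
    return None, checks


def vectored_dispatch(vector_table, code):
    """Vectored interrupt: the code sent by the device selects the
    service routine directly, with no search over the devices."""
    return vector_table[code]


def service_keyboard():
    return "keyboard serviced"


def service_disk():
    return "disk serviced"


devices = [Device("printer"), Device("keyboard"), Device("disk")]
devices[2].irq = 1                      # only the disk requests service

found, checks = poll(devices)           # three status reads before the disk is found

vector_table = {0: service_keyboard, 1: service_disk}   # assumed vector codes
result = vectored_dispatch(vector_table, 1)()           # one table lookup
```

In the polling case the cost grows with the number of devices checked before the requesting one; in the vectored case the lookup cost does not depend on how many devices are attached.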

Direct Memory Access (DMA)
The DMA controller performs the functions that would normally be carried out by the processor when accessing the main memory. For transferring each word, it provides the memory address and generates all the control signals needed. It increments the memory address for successive words and keeps track of the number of transfers.

DMA controller operation is under the control of a program executed by the processor, usually an operating system routine. To initiate the transfer of a block of words, the processor sends the starting address, the number of words in the block, and the direction of the transfer to the DMA controller. The DMA controller then performs the requested operation. When the entire block is transferred, it informs the processor by raising an interrupt. An example of the DMA controller registers that are accessed by the processor to initiate data transfer operations is shown below:

Two registers are used for storing the starting address and the word count. The third register contains status and control flags. The R/W bit determines the direction of the transfer. When R/W is set to 1 by a program instruction, the controller performs a Read operation, that is, it transfers data from the memory to the I/O device. Otherwise, it performs a Write operation. When the DMA controller has completed transferring a block of data, it sets the Done flag to 1. Bit 30 is the interrupt-enable flag, IE. When this flag is set to 1, the controller raises an interrupt after it has completed transferring a block of data. Another bit, IRQ, is set to 1 by the controller when it has requested an interrupt. The use of DMA controllers in a computer system is shown below:

One DMA controller connects a high-speed Ethernet interface to the computer's I/O bus. The disk controller, which controls two disks, also has DMA capability and provides two DMA channels.
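
The register set described above can be modelled as a small Python class. This is a sketch under stated assumptions: only the position of IE (bit 30) comes from the text, while the positions chosen for Done, R/W and IRQ, the class name and the method names are assumptions made for illustration.

```python
# Status/control register bits. IE = bit 30 is from the text;
# the other bit positions are assumed for this sketch.
DONE = 1 << 0    # assumed position
RW = 1 << 1      # assumed position; 1 = Read (memory -> I/O device)
IE = 1 << 30
IRQ = 1 << 31    # assumed position


class DMAController:
    def __init__(self, memory):
        self.memory = memory
        self.start_address = 0
        self.word_count = 0
        self.status = 0

    def program(self, start_address, word_count, read, interrupt_enable):
        """What the processor does: load the three registers."""
        self.start_address = start_address
        self.word_count = word_count
        self.status = (RW if read else 0) | (IE if interrupt_enable else 0)

    def run(self, device_buffer):
        """What the controller does: move the block one word at a time."""
        address = self.start_address
        remaining = self.word_count
        while remaining > 0:
            if self.status & RW:
                device_buffer.append(self.memory[address])   # memory -> device
            else:
                self.memory[address] = device_buffer.pop(0)  # device -> memory
            address += 1          # next word
            remaining -= 1        # keep track of the number of transfers
        self.status |= DONE
        if self.status & IE:
            self.status |= IRQ    # request an interrupt when the block is done


memory = list(range(16))
dma = DMAController(memory)
dma.program(start_address=4, word_count=3, read=True, interrupt_enable=True)
buffer = []
dma.run(buffer)
```

After the call, buffer holds the three words starting at address 4, and both the Done and IRQ bits are set because IE was enabled.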

CISC Microprocessor:
CISC stands for Complex Instruction Set Computer. CISC takes its name from the very large number of instructions (typically hundreds) and addressing modes in its ISA. The first PC microprocessors developed were CISC chips, because all the instructions the processor could execute were built into the chip. Examples of CISC processors are:
1. PDP-11
2. Motorola 68000 family
3. Intel x86/Pentium CPUs

Architecture of Intel 8086
Features of 8086 Microprocessor:
The Intel 8086 was launched in 1978. It was Intel's first 16-bit microprocessor and offered a major improvement in execution speed over the 8085. It is available as a 40-pin Dual-Inline Package (DIP) in three versions: 8086 (5 MHz), 8086-2 (8 MHz) and 8086-1 (10 MHz).

The 8086 architecture can be broadly divided into two units:
(i) Execution Unit (EU)
(ii) Bus Interface Unit (BIU)
The EU and the BIU operate asynchronously.

Bus Interface Unit (BIU)
The functions of the BIU are to fetch instructions or data from memory, write data to memory, write data to a port, and read data from a port. It contains an instruction queue, an instruction pointer (IP), segment registers, and bus interface logic.

Execution Unit (EU)
The functions of the execution unit are to tell the BIU where to fetch instructions or data from, to decode the instructions, and to execute the instructions. It contains the general-purpose registers, the ALU, the control unit, and the flag register.

Instruction Queue
To increase the execution speed, the BIU fetches as many as six instruction bytes ahead of time from memory. These bytes are held in a 6-byte first-in first-out (FIFO) register called the instruction queue, and are passed to the EU one by one.

This prefetching operation of the BIU may proceed in parallel with the execution operation of the EU, which improves the speed of instruction execution.

General Purpose Registers
The 8086 has four 16-bit general-purpose registers: AX, BX, CX and DX. Each can be used as a single 16-bit register or as two separate 8-bit registers (AH/AL, BH/BL, CH/CL and DH/DL).
AX Register: The AX register is also known as the accumulator. It stores operands for arithmetic operations such as add, divide and rotate.
BX Register: This register is mainly used as a base register. It holds the starting base location of a memory region within a data segment.
CX Register: It is defined as a counter. It is primarily used in loop instructions to store the loop count.
DX Register: The DX register is used to hold the I/O port address for I/O instructions.

The pointer and index register file consists of the Stack Pointer (SP), the Base Pointer (BP), the Source Index (SI) and the Destination Index (DI) registers, all of which are 16 bits wide. They can also be used in most ALU operations. These registers are usually used to hold offset addresses for addressing within a segment. The pointer registers are used to access the current stack segment. The index registers are used to access the current data segment.
SP register: It is used like the stack pointer in other machines (for pointing to subroutine and interrupt return addresses).
BP register: It is used to hold an old SP value.
SI and DI: Both are 16 bits wide and are used by string manipulation instructions and in building some of the more powerful data structures and addressing modes. Both the SI and the DI registers have auto-incrementing and auto-decrementing capabilities.

Segment Registers
These are additional registers used to generate a memory address when combined with other registers such as the pointer and index registers. In the 8086 microprocessor, memory is divided into 4 segments as follows:

Code Segment (CS): The CS register is used for addressing a memory location in the code segment of the memory, where the executable program is stored.

Data Segment (DS): The data segment contains most of the data used by the program. Data in the data segment are accessed by an offset address or by the content of another register that holds the offset address.
Stack Segment (SS): The SS register defines the area of memory used for the stack.
Extra Segment (ES): ES is an additional data segment that is used by some of the string instructions to hold the destination data.

Instruction Pointer (IP)
The instruction pointer is a 16-bit register. It always holds the effective memory address (offset) of the next instruction, and this offset is added to the contents of CS, shifted left by four bits, to obtain the physical address of the opcode. The code segment register cannot be changed by the MOV instruction. The instruction pointer is incremented after each opcode fetch to point to the next instruction.

The 20-bit physical address is generated by shifting the contents of the selected segment register left by 4 bits (i.e., multiplying by 16) and then adding the effective memory address.
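
The address generation described above can be written as a short Python function. This is a minimal sketch: the function name and the sample register values are chosen for illustration, and the final mask assumes that the sum wraps around to 20 bits because the 8086 has only 20 address lines.

```python
def physical_address(segment, offset):
    """Shift the 16-bit segment value left by 4 bits (multiply by 16),
    add the 16-bit offset, and keep the low 20 bits."""
    return ((segment << 4) + offset) & 0xFFFFF


# Sample values, chosen only for illustration.
example = physical_address(0x1234, 0x0010)    # 0x12340 + 0x0010
top_of_memory = physical_address(0xFFFF, 0x0000)

print(f"{example:05X}")         # 12350
print(f"{top_of_memory:05X}")   # FFFF0
```

Because the segment value is multiplied by 16, every segment starts on a 16-byte boundary, and many different segment:offset pairs map to the same physical address.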

Flag Register: The flag register in the EU is 16 bits wide and is shown below:

The flag register indicates the current state of the processor. The flags are modified automatically by the CPU after arithmetic and logic operations. This makes it possible to determine the type of the result and to test conditions for transferring control to other parts of the program. There are 9 flags, divided into two categories:
1. Conditional Flags
2. Control Flags

Conditional Flags
Conditional flags represent the result of the last ALU instruction executed. The flags are:
Carry Flag (CF): This flag indicates an overflow condition for unsigned integer arithmetic.
Auxiliary Flag (AF): This flag is set if an operation performed in the ALU generates a carry/borrow from the lower nibble to the upper nibble (i.e., from bit D3 to bit D4). It is not a general-purpose flag; it is used internally by the processor for BCD arithmetic.
Parity Flag (PF): This flag indicates the parity of the result. If the lower-order 8 bits of the result contain an even number of 1s, PF is set; for an odd number of 1s, PF is reset.
Zero Flag (ZF): It is set if the result of an ALU operation is zero; otherwise it is reset.

Sign Flag (SF): In signed (two's complement) representation, the sign of a number is indicated by the MSB. If the result of an operation is negative, the sign flag is set.
Overflow Flag (OF): Overflow occurs when signed numbers are added or subtracted. When set, OF indicates that the result has exceeded the capacity of the machine.

Control Flags
Control flags are set or reset to control the operations of the execution unit. The control flags are as follows:
Trap Flag (TF): It is used for single-step control. It allows the user to execute one instruction of a program at a time for debugging. When the trap flag is set, the program can be run in single-step mode.
Interrupt Flag (IF): It is an interrupt enable/disable flag. If it is set, the maskable interrupt of the 8086 is enabled, and if it is reset, the interrupt is disabled. It can be set by executing the STI instruction and cleared by executing the CLI instruction.
Direction Flag (DF): It is used in string operations. If it is set, string bytes are accessed from higher memory addresses to lower memory addresses. When it is reset, the string bytes are accessed from lower memory addresses to higher memory addresses.

8086 pin functions

The 8086 operates in single-processor (minimum mode) or multiprocessor (maximum mode) configurations to achieve high performance. The 8086 signals can be categorized into 3 groups:
1. Signals having common functions in minimum as well as maximum mode.
2. Signals which have special functions in minimum mode.
3. Signals which have special functions in maximum mode.
Pins and functions:

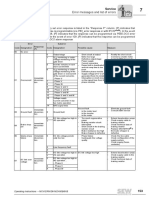

Signals common to both minimum and maximum mode:
1. AD15-AD0: Address/Data Bus
These are the time-multiplexed memory/I/O address and data lines. The address remains on the lines during the T1 state, while the data is available on the data bus during the T2, T3, TW and T4 states.
2. A19/S6, A18/S5, A17/S4, A16/S3: Address/Status lines
These are the time-multiplexed address and status lines. During T1, these are the most significant address lines for memory operations. During I/O operations these lines are low. The status of the Interrupt Enable Flag (IF) bit (displayed on S5) is updated at the beginning of each clock cycle. A17/S4 and A16/S3 are encoded as shown:

3. BHE/S7: Bus High Enable/Status
The bus high enable signal is used to indicate the transfer of data over the higher-order (D15-D8) data bus. If BHE is low, D15-D8 are used to transfer data.

4. RD: Read
The read signal, when low, indicates to the peripherals that the processor is performing a memory or I/O read operation. RD is active low and is asserted during T2, T3 and TW of any read cycle. The signal remains tristated during hold acknowledge.

5. READY: This is the acknowledgement from slow devices or memory that they have completed the data transfer.
6. INTR: Interrupt Request
This signal is sampled during the last clock cycle of each instruction to determine whether a request is pending. If any interrupt request is pending, the processor enters the interrupt acknowledge cycle.
7. TEST: This input is examined by the WAIT instruction. If TEST = 0, execution continues; otherwise the processor remains in an idle state. The input is synchronized with the clock.
8. NMI: Non-Maskable Interrupt
The NMI is not maskable internally by software. A transition from low to high initiates the interrupt response at the end of the current instruction.
9. RESET: This input causes the processor to terminate the current activity and start execution from FFFF0H of program memory, i.e., it reinitializes the system.
10. CLK: Clock Input
The clock input provides the basic timing for processor operation and bus control activity.
11. Vcc: +5 V power supply for the operation of the internal circuit.
12. GND: Ground for the internal circuit.
13. MN/MX: This pin decides whether the processor operates in minimum or maximum mode.
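
As a small illustration of the BHE signal in item 3, the sketch below shows how BHE is commonly combined with address line A0 to select which half of the 16-bit data bus takes part in a transfer. The role of A0 is not stated in these notes; it is added here as the usual 8086 convention, and the function name and return strings are hypothetical.

```python
def selected_byte_lanes(bhe_n, a0):
    """bhe_n and a0 are logic levels (0 or 1); BHE is active low.
    BHE low enables the upper byte D15-D8, A0 low enables the lower byte D7-D0."""
    upper = (bhe_n == 0)
    lower = (a0 == 0)
    if upper and lower:
        return "whole word on D15-D0"
    if upper:
        return "upper byte on D15-D8"
    if lower:
        return "lower byte on D7-D0"
    return "no transfer"


for bhe_n in (0, 1):
    for a0 in (0, 1):
        print(bhe_n, a0, selected_byte_lanes(bhe_n, a0))
```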

Signals for minimum mode and their operation
1. M/IO: Status line
It is used to differentiate a memory access from an I/O access. M/IO becomes valid in the T4 preceding a bus cycle and remains valid until the final T4 of the cycle (M = HIGH, IO = LOW). M/IO floats to 3-state OFF during local bus "hold acknowledge".
2. WR: Write
It indicates that the processor is performing a memory write or I/O write cycle, depending on the state of the M/IO signal. WR is active for T2, T3 and TW of any write cycle. It is active LOW, and floats to 3-state OFF during local bus "hold acknowledge".

3. INTA: Interrupt Acknowledge
It is used as a read strobe for interrupt acknowledge cycles. It is active LOW during T2, T3 and TW of each interrupt acknowledge cycle.
4. ALE: Address Latch Enable
This output signal indicates the availability of a valid address on the address/data lines and is connected to the latch enable input of the address latches. This signal is active high and is never tristated.
5. DT/R: Data Transmit/Receive
This output is used to decide the direction of data flow through the transceivers (i.e., bidirectional buffers).
DT/R = 1: Processor sends out data
DT/R = 0: Processor receives data
In maximum mode this pin carries S1.
6. DEN: Data Enable
This signal indicates the availability of valid data on the address/data lines. It is used to separate the data from the multiplexed address/data signals. It is active from the middle of T2 to the middle of T4.
7. HOLD & HLDA: Hold/Hold Acknowledge
When HOLD is high, it indicates to the processor that another master is requesting bus access. After receiving the HOLD request, the processor issues the hold acknowledge signal on the HLDA pin in the middle of the next clock cycle after completing the current bus cycle. HOLD is an asynchronous input and should be externally synchronized.

Signals for maximum mode and their operation:
1. S2, S1, S0: Status lines
These lines become active during T4 of the previous cycle and remain active during T1 and T2 of the current bus cycle. This status is used by the 8288 Bus Controller to generate all memory and I/O access control signals. These status lines are encoded as shown:

2. LOCK: This output pin indicates that other system bus masters will be prevented from gaining control of the system bus while LOCK = 0. The LOCK signal is activated by the LOCK prefix instruction and remains active until the completion of the next instruction. It floats to tri-state OFF during hold acknowledge.

3. QS1, QS0: Queue status
These lines give information about the status of the code-prefetch queue. They are valid during the CLK cycle that follows the one in which the queue operation is performed.

4. RQ/GT0, RQ/GT1: Request/Grant
These pins are used by other local bus masters, in maximum mode, to force the processor to release the local bus at the end of the processor's current bus cycle. Each of the pins is bidirectional, with RQ/GT0 having higher priority than RQ/GT1.
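
The way a bus controller interprets the status lines S2, S1, S0 (item 1 above) can be sketched as a lookup table. The encoding used here is the one commonly given in the Intel 8086 data sheet rather than something stated in these notes, so it should be checked against the data sheet; the names BUS_CYCLE and decode_status are hypothetical.

```python
# (S2, S1, S0) -> bus cycle type, as commonly listed in the 8086 data sheet.
BUS_CYCLE = {
    (0, 0, 0): "Interrupt acknowledge",
    (0, 0, 1): "Read I/O port",
    (0, 1, 0): "Write I/O port",
    (0, 1, 1): "Halt",
    (1, 0, 0): "Code access",
    (1, 0, 1): "Read memory",
    (1, 1, 0): "Write memory",
    (1, 1, 1): "Passive",
}


def decode_status(s2, s1, s0):
    """Return the bus cycle type indicated by the three status lines."""
    return BUS_CYCLE[(s2, s1, s0)]


print(decode_status(1, 0, 1))   # Read memory
```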

Addressing modes
The addressing modes of any processor can be classified as: 1) data addressing modes, 2) program memory addressing modes and 3) stack memory addressing modes. The basic addressing modes available in the Intel 8086 are:
1. Register Addressing
2. Immediate Addressing
3. Direct Addressing
4. Register Indirect Addressing
5. Based Relative Addressing
6. Indexed Relative Addressing
7. Based Indexed Relative Addressing

Register Addressing Mode: The operand to be accessed is specified as residing in an internal register of the 8086.
Example: MOV AX, BX
This stands for move the contents of BX (the source operand) to AX (the destination operand).

Immediate Addressing Mode: If a source operand is part of the instruction instead of the contents of a register or memory location, it is called an immediate operand and is accessed using the immediate addressing mode. Typically, an immediate operand represents constant data.
Example: MOV AL, 015H
In this instruction the operand 015H is an example of a byte-wide immediate source operand. The destination operand, which consists of the contents of AL, uses register addressing.

Direct Addressing Mode: The locations following the opcode hold an effective memory address (EA). This effective address is a 16-bit offset of the storage location of the operand from the current value in the data segment (DS) register.

EA is combined with the contents of DS in the BIU to produce the physical address of the operand.
Example: MOV CX, BETA
This stands for move the contents of the memory location, which is offset by BETA from the current value in DS, into internal register CX. If DS = 4909H and the offset value BETA = 235AH, then the physical address = 49090H + 235AH = 4B3EAH, and the content of location 4B3EAH is moved to CX.

Register Indirect Addressing Mode: This mode is similar to direct addressing in that an effective address is combined with the contents of DS to obtain a physical address. It differs in the way the offset is specified. Here EA resides in either a pointer register or an index register within the 8086. The pointer register can be either the base register BX or the base pointer register BP, and the index register can be the source index register SI or the destination index register DI.
Example: MOV AX, [SI]
This instruction moves the contents of the memory location offset by the value of EA in SI from the current value in DS to the AX register. If DS = 4909H and SI = 230AH, then the physical address = 49090H + 230AH = 4B39AH, and the content of location 4B39AH is moved to AX.

Based Addressing Mode: The physical address of the operand is obtained by adding a direct or indirect displacement to the contents of either the base register BX or the base pointer register BP, and to the current value in DS or SS respectively.
Example: MOV [BX].BETA, AL
This instruction uses the base register BX and the direct displacement BETA to derive the EA of the destination operand. The based addressing mode is specified by writing the base register in brackets, followed by a period and the direct displacement. The source operand is located in the byte accumulator AL.

Indexed Addressing Mode: Indexed addressing works identically to based addressing, but it uses the contents of one of the index registers instead of BX or BP in the generation of the physical address.
Example: MOV AL, ARRAY[SI]
The source operand has been specified using direct indexed addressing. The notation ARRAY, which is a direct displacement, prefixes the selected index register, SI.

Based Indexed Addressing Mode: Combining the based addressing mode and the indexed addressing mode results in a new, more powerful mode known as based indexed addressing.
Example: MOV AH, [BX].BETA[SI]
Here the source operand is accessed using the based indexed addressing mode. The effective address of the source operand is obtained as EA = (BX) + BETA + (SI).
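
The memory addressing modes above differ only in which terms contribute to the effective address, which the following Python sketch makes explicit. The function name and all register and displacement values are hypothetical and chosen only for illustration; the 16-bit mask assumes that the EA wraps within a segment.

```python
def effective_address(base=0, index=0, displacement=0):
    """16-bit EA: sum of the optional base register, index register
    and displacement, wrapped to 16 bits."""
    return (base + index + displacement) & 0xFFFF


# Hypothetical register contents and displacement.
BX, SI, BETA = 0x1000, 0x0200, 0x0034

direct = effective_address(displacement=BETA)                # MOV CX, BETA
register_indirect = effective_address(index=SI)              # MOV AX, [SI]
based = effective_address(base=BX, displacement=BETA)        # MOV [BX].BETA, AL
indexed = effective_address(index=SI, displacement=BETA)     # MOV AL, ARRAY[SI]
based_indexed = effective_address(base=BX, index=SI,
                                  displacement=BETA)         # MOV AH, [BX].BETA[SI]

for name, ea in [("direct", direct), ("register indirect", register_indirect),
                 ("based", based), ("indexed", indexed),
                 ("based indexed", based_indexed)]:
    print(f"{name}: EA = {ea:04X}H")
```

In every case the EA is then combined with the selected segment register (shifted left by 4 bits) to form the physical address.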

VLIW Architecture
VLIW stands for Very Long Instruction Word architecture. A related term is EPIC: Explicitly Parallel Instruction Computing.

In a VLIW architecture, the compiler arranges the operations of a program into very long instruction words so that they can run without stalls in the pipeline. This requires that programs be recompiled for the VLIW architecture. There is no need for the hardware to examine the instruction stream to determine which instructions may be executed in parallel. VLIW thus takes a different approach to instruction-level parallelism: the compiler determines which instructions may be executed in parallel and provides that information to the hardware. Each instruction (a very long instruction word) specifies several independent operations that are executed in parallel by the hardware. The compiler determines the operations in each VLIW, which operations can run in parallel, and which execution unit each operation is assigned to. The instruction format of a VLIW processor is:

Instructions are hundreds of bits in length. An example:

VLIW processors achieve very good performance on programs written in sequential languages such as C or FORTRAN when these programs are recompiled for a VLIW processor. The difference in execution between scalar, superscalar and VLIW processors is shown below:
Scalar processor:

Superscalar processor:

VLIW processor:

Instruction parallelism of VLIW processor is shown below:

Each operation in a VLIW instruction is equivalent to one instruction in a superscalar or purely sequential processor. The number of operations in a VLIW instruction is equal to the number of execution units in the processor. Each operation specifies the instruction that will be executed on the corresponding execution unit in the cycle in which the VLIW instruction is issued.

The TMS320C6X CPU is an example of a VLIW processor. It has 8 independent execution units, split into two identical datapaths, each containing the same four units (L, S, D, M). The execution unit types are:
L: Integer adder, logical, bit counting, FP adder, FP conversion
S: Integer adder, logical, bit manipulation, shifting, constant, branch/control, FP compare, FP conversion, FP seed generation (for software division algorithm)
D: Integer adder, load-store
M: Integer multiplier, FP multiplier
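
The compiler's job of placing operations into VLIW instructions can be sketched with a simple greedy packer in Python. This is a toy model, not the scheduling algorithm of any real compiler: the operation format, the register names and the function name pack are hypothetical, only one datapath with the four units L, S, D and M is modelled, every operation is assumed to take one cycle, and each register is assumed to be written only once so that only read-after-write dependences need to be tracked.

```python
# Hypothetical operation format: (name, unit, source registers, destination register)
def pack(ops):
    """Greedily place each operation, in program order, into the earliest
    VLIW instruction where its unit is free and its sources are available."""
    bundles = []        # each bundle maps unit -> operation name
    ready_after = {}    # register -> index of the bundle that writes it
    for name, unit, sources, dest in ops:
        # A source written in bundle k can first be read in bundle k + 1.
        i = max((ready_after[s] + 1 for s in sources if s in ready_after),
                default=0)
        while True:
            if i == len(bundles):
                bundles.append({})      # start a new VLIW instruction
            if unit not in bundles[i]:
                bundles[i][unit] = name
                break
            i += 1                      # unit busy, try the next instruction
        ready_after[dest] = i
    return bundles


ops = [
    ("load a", "D", [], "r1"),
    ("load b", "D", [], "r2"),
    ("mul", "M", ["r1", "r2"], "r3"),
    ("add", "L", ["r1"], "r4"),
    ("shift", "S", ["r3"], "r5"),
]
bundles = pack(ops)
for cycle, bundle in enumerate(bundles):
    print(cycle, bundle)
```

Here five operations fit into four VLIW instructions; unit slots left empty in an instruction correspond to no-operation fields in the long instruction word.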


- Class: T.E. E &TC Subject: DSP Expt. No.: Date: Title: Implementation of Convolution Using DSP Processor ObjectiveDocument9 pagesClass: T.E. E &TC Subject: DSP Expt. No.: Date: Title: Implementation of Convolution Using DSP Processor ObjectiveMahadevNo ratings yet

- SEW Drive Error List PDFDocument15 pagesSEW Drive Error List PDFkarangoyals03100% (3)

- Evolution of MicroprocessorsDocument22 pagesEvolution of MicroprocessorsVishal ChetalNo ratings yet

- 8th Semester SyllabusDocument2 pages8th Semester Syllabuschill_ashwinNo ratings yet

- Computer Notes Class 9 Chapter TitlesDocument106 pagesComputer Notes Class 9 Chapter Titlesmochinkhan33% (6)

- ECE 513 Microprocessor / Microcontroller Systems: By: Engr. Junard P. KaquilalaDocument34 pagesECE 513 Microprocessor / Microcontroller Systems: By: Engr. Junard P. KaquilalaGenesis AlquizarNo ratings yet

- GEARS January-February 2017Document124 pagesGEARS January-February 2017Rodger Bland100% (2)

- Architecture of TMS320C50 DSP ProcessorDocument8 pagesArchitecture of TMS320C50 DSP ProcessorNayab Rasool SKNo ratings yet

- PowerMac G5 Developer NoteDocument94 pagesPowerMac G5 Developer NotesobarNo ratings yet

- Manual Intel D815EEA2-D815EPEA2 P3 Socket370Document146 pagesManual Intel D815EEA2-D815EPEA2 P3 Socket370Raul MejicanoNo ratings yet