Professional Documents

Culture Documents

Berkeley - Notes On Tensor Product

Uploaded by

IonutgmailOriginal Title

Copyright

Available Formats

Share this document

Did you find this document useful?

Is this content inappropriate?

Report this DocumentCopyright:

Available Formats

Berkeley - Notes On Tensor Product

Uploaded by

IonutgmailCopyright:

Available Formats

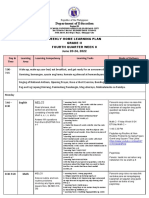

221A Lecture Notes

Notes on Tensor Product

1 What is Tensor?

After discussing the tensor product in class, I received many questions about what it means. I've also talked to Daniel, and he felt this is a subject he had learned here and there along the way, never in a course or a book. I myself don't remember where and when I learned about it. It may be worth solving this problem once and for all.

Apparently, Tensor is a manufacturer of fancy floor lamps. See http://growinglifestyle.shop.com/cc.class/cc?pcd=7878065&ccsyn=13549.

For us, the word "tensor" refers to objects that have multiple indices. In comparison, a scalar has no index, and a vector has one index. The word appears in many different contexts, but this point is always the same.

2 Direct Sum

Before getting into the subject of the tensor product, let me first discuss the direct sum. This is a way of getting a new, bigger vector space from two (or more) smaller vector spaces in the simplest way one can imagine: you just line them up.

2.1 Space

You start with two vector spaces, $V$ that is $n$-dimensional, and $W$ that is $m$-dimensional. The direct sum of these two vector spaces is $(n+m)$-dimensional. Here is how it works.

Let $\{\vec{e}_1, \vec{e}_2, \cdots, \vec{e}_n\}$ be the basis system of $V$, and similarly $\{\vec{f}_1, \vec{f}_2, \cdots, \vec{f}_m\}$ that of $W$. We now define $n+m$ basis vectors $\{\vec{e}_1, \cdots, \vec{e}_n, \vec{f}_1, \cdots, \vec{f}_m\}$. The vector space spanned by these basis vectors is $V \oplus W$, and is called the direct sum of $V$ and $W$.

2.2 Vectors

Any vector $\vec{v} \in V$ can be written as a linear combination of the basis vectors, $\vec{v} = v_1 \vec{e}_1 + v_2 \vec{e}_2 + \cdots + v_n \vec{e}_n$. We normally express this fact in the form of a column vector,

$$\vec{v} = \left.\begin{pmatrix} v_1 \\ v_2 \\ \vdots \\ v_n \end{pmatrix}_V \right\}\, n. \tag{1}$$

Here, it is emphasized that this is an element of the vector space $V$, and the column vector has $n$ components. In particular, the basis vectors themselves can be written as

$$\vec{e}_1 = \begin{pmatrix} 1 \\ 0 \\ \vdots \\ 0 \end{pmatrix}_V, \quad \vec{e}_2 = \begin{pmatrix} 0 \\ 1 \\ \vdots \\ 0 \end{pmatrix}_V, \quad \cdots, \quad \vec{e}_n = \begin{pmatrix} 0 \\ 0 \\ \vdots \\ 1 \end{pmatrix}_V. \tag{2}$$

If the basis system is orthonormal, i.e., $\vec{e}_i \cdot \vec{e}_j = \delta_{ij}$, then $v_i = \vec{e}_i \cdot \vec{v}$. Similarly, for any vector $\vec{w} \in W$, we can write

$$\vec{w} = \left.\begin{pmatrix} w_1 \\ w_2 \\ \vdots \\ w_m \end{pmatrix}_W \right\}\, m. \tag{3}$$

The basis vectors are

$$\vec{f}_1 = \begin{pmatrix} 1 \\ 0 \\ \vdots \\ 0 \end{pmatrix}_W, \quad \vec{f}_2 = \begin{pmatrix} 0 \\ 1 \\ \vdots \\ 0 \end{pmatrix}_W, \quad \cdots, \quad \vec{f}_m = \begin{pmatrix} 0 \\ 0 \\ \vdots \\ 1 \end{pmatrix}_W. \tag{4}$$

The vectors are naturally elements of the direct sum, just by filling zeros into the unused entries,

$$\vec{v} = \left.\begin{pmatrix} v_1 \\ \vdots \\ v_n \\ 0 \\ \vdots \\ 0 \end{pmatrix} \right\}\, n+m, \qquad \vec{w} = \left.\begin{pmatrix} 0 \\ \vdots \\ 0 \\ w_1 \\ \vdots \\ w_m \end{pmatrix} \right\}\, n+m. \tag{5}$$

One can also define a direct sum of two non-zero vectors,

$$\vec{v} \oplus \vec{w} = \begin{pmatrix} v_1 \\ \vdots \\ v_n \\ w_1 \\ \vdots \\ w_m \end{pmatrix} = \left.\begin{pmatrix} \vec{v} \\ \vec{w} \end{pmatrix} \right\}\, n+m, \tag{6}$$

where the last expression is used to save space and it is understood that $\vec{v}$ and $\vec{w}$ are each a column vector.
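As a concrete check of Eqs. (5) and (6), the direct sum of two column vectors is nothing more than concatenation of their components. The following is a minimal Python sketch; the helper names `direct_sum`, `embed_v`, and `embed_w` are illustrative choices and do not appear in the notes.

```python
def direct_sum(v, w):
    """Stack an n-component vector on top of an m-component vector, as in Eq. (6)."""
    return list(v) + list(w)

def embed_v(v, m):
    """View v as an element of the direct sum by padding m zeros below it, as in Eq. (5)."""
    return list(v) + [0] * m

def embed_w(w, n):
    """View w as an element of the direct sum by padding n zeros above it, as in Eq. (5)."""
    return [0] * n + list(w)

v = [1, 2]       # n = 2
w = [3, 4, 5]    # m = 3

vw = direct_sum(v, w)

# The direct sum of v and w is the ordinary sum of the two zero-padded vectors.
padded_sum = [a + b for a, b in zip(embed_v(v, len(w)), embed_w(w, len(v)))]

print(vw)          # [1, 2, 3, 4, 5]
print(padded_sum)  # [1, 2, 3, 4, 5]
```

The number of components adds, n + m = 5, which is the dimension of the direct sum space.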

2.3 Matrices

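A matrix is mathematically a linear map from a vector space to another vector space. Here, we specialize to the maps from a vector space to the same one because of our interest in applications to quantum mechanics, $A : V \to V$, e.g.,

$$\vec{v} \mapsto A\vec{v}, \tag{7}$$

or

$$\begin{pmatrix} v_1 \\ v_2 \\ \vdots \\ v_n \end{pmatrix}_V \mapsto \begin{pmatrix} a_{11} & a_{12} & \cdots & a_{1n} \\ a_{21} & a_{22} & \cdots & a_{2n} \\ \vdots & \vdots & \ddots & \vdots \\ a_{n1} & a_{n2} & \cdots & a_{nn} \end{pmatrix}_V \begin{pmatrix} v_1 \\ v_2 \\ \vdots \\ v_n \end{pmatrix}_V. \tag{8}$$

Similarly, $B : W \to W$, and $B : \vec{w} \mapsto B\vec{w}$.

On the direct sum space, the same matrices can still act on the vectors, so that $\vec{v} \mapsto A\vec{v}$, and $\vec{w} \mapsto B\vec{w}$. This matrix is written as $A \oplus B$. This can be achieved by lining up all matrix elements in a block-diagonal form,

$$A \oplus B = \begin{pmatrix} A & 0_{n \times m} \\ 0_{m \times n} & B \end{pmatrix}. \tag{9}$$

For instance, if $n = 2$ and $m = 3$, and

$$A = \begin{pmatrix} a_{11} & a_{12} \\ a_{21} & a_{22} \end{pmatrix}_V, \qquad B = \begin{pmatrix} b_{11} & b_{12} & b_{13} \\ b_{21} & b_{22} & b_{23} \\ b_{31} & b_{32} & b_{33} \end{pmatrix}_W, \tag{10}$$

where it is emphasized that $A$ and $B$ act on different spaces. Their direct sum is

$$A \oplus B = \begin{pmatrix} a_{11} & a_{12} & 0 & 0 & 0 \\ a_{21} & a_{22} & 0 & 0 & 0 \\ 0 & 0 & b_{11} & b_{12} & b_{13} \\ 0 & 0 & b_{21} & b_{22} & b_{23} \\ 0 & 0 & b_{31} & b_{32} & b_{33} \end{pmatrix}. \tag{11}$$

The reason why the block-diagonal form is appropriate is clear once you act it on $\vec{v} \oplus \vec{w}$,

$$(A \oplus B)(\vec{v} \oplus \vec{w}) = \begin{pmatrix} A & 0_{n \times m} \\ 0_{m \times n} & B \end{pmatrix} \begin{pmatrix} \vec{v} \\ \vec{w} \end{pmatrix} = \begin{pmatrix} A\vec{v} \\ B\vec{w} \end{pmatrix} = (A\vec{v}) \oplus (B\vec{w}). \tag{12}$$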

If you have two matrices, their multiplications are done on each vector space separately,

$$(A_1 \oplus B_1)(A_2 \oplus B_2) = (A_1 A_2) \oplus (B_1 B_2). \tag{13}$$

Note that not every matrix on $V \oplus W$ can be written as a direct sum of a matrix on $V$ and another on $W$. There are $(n+m)^2$ independent matrices on $V \oplus W$, while there are only $n^2$ and $m^2$ matrices on $V$ and $W$, respectively. The remaining $(n+m)^2 - n^2 - m^2 = 2nm$ matrices cannot be written as a direct sum.

Other useful formulae are

$$\det(A \oplus B) = (\det A)(\det B), \tag{14}$$

$$\mathrm{Tr}(A \oplus B) = (\mathrm{Tr} A) + (\mathrm{Tr} B). \tag{15}$$
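The block-diagonal construction of Eq. (9) and the formulae (12), (14), and (15) can be checked on a small numerical example. The sketch below uses plain Python lists; the function names and the particular integer matrices are arbitrary choices made for illustration, and the determinant is computed by a simple cofactor expansion that is only sensible for small matrices.

```python
def direct_sum_matrix(A, B):
    """Block-diagonal matrix with A in the upper-left and B in the lower-right block."""
    n, m = len(A), len(B)
    top = [list(row) + [0] * m for row in A]
    bottom = [[0] * n + list(row) for row in B]
    return top + bottom

def matvec(M, x):
    """Matrix times column vector."""
    return [sum(row[k] * x[k] for k in range(len(x))) for row in M]

def trace(M):
    return sum(M[i][i] for i in range(len(M)))

def det(M):
    """Determinant by cofactor expansion along the first row."""
    if len(M) == 1:
        return M[0][0]
    total = 0
    for j in range(len(M)):
        minor = [row[:j] + row[j + 1:] for row in M[1:]]
        total += (-1) ** j * M[0][j] * det(minor)
    return total

A = [[1, 2], [3, 4]]                    # acts on V, n = 2
B = [[2, 0, 1], [1, 3, 0], [0, 1, 1]]   # acts on W, m = 3
v = [1, -1]
w = [2, 0, 1]

AB = direct_sum_matrix(A, B)

lhs = matvec(AB, v + w)               # (A (+) B) acting on v (+) w
rhs = matvec(A, v) + matvec(B, w)     # (A v) (+) (B w), Eq. (12)

print(lhs == rhs)                         # True
print(trace(AB) == trace(A) + trace(B))   # True, Eq. (15)
print(det(AB) == det(A) * det(B))         # True, Eq. (14)
```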


3 Tensor Product

The term "tensor product" refers to another way of constructing a big vector space out of two (or more) smaller vector spaces. You can see that the spirit of the word "tensor" is there. It is also called the Kronecker product or direct product.

3.1 Space

You start with two vector spaces, $V$ that is $n$-dimensional, and $W$ that is $m$-dimensional. The tensor product of these two vector spaces is $nm$-dimensional. Here is how it works.

Let $\{\vec{e}_1, \vec{e}_2, \cdots, \vec{e}_n\}$ be the basis system of $V$, and similarly $\{\vec{f}_1, \vec{f}_2, \cdots, \vec{f}_m\}$ that of $W$. We now define $nm$ basis vectors $\vec{e}_i \otimes \vec{f}_j$, where $i = 1, \cdots, n$ and $j = 1, \cdots, m$. Never mind what $\otimes$ means at this moment. We will be more explicit later. Just regard it as a symbol that defines a new set of basis vectors. This is why the word "tensor" is used for this: the basis vectors have two indices.

3.2 Vectors

We use the same notation for the column vectors as in Section 2.2.

The tensor product is bilinear, namely linear in $V$ and also linear in $W$. (If there are more than two vector spaces, it is multilinear.) What it implies is that

$$\vec{v} \otimes \vec{w} = \Big(\sum_i^n v_i \vec{e}_i\Big) \otimes \Big(\sum_j^m w_j \vec{f}_j\Big) = \sum_i^n \sum_j^m v_i w_j \,(\vec{e}_i \otimes \vec{f}_j).$$

In other words, it is a vector in $V \otimes W$, spanned by the basis vectors $\vec{e}_i \otimes \vec{f}_j$, and the coefficients are given by $v_i w_j$ for each basis vector.

One way to make it very explicit is to literally write it out. For example, let us take $n = 2$ and $m = 3$. The tensor product space is $nm = 6$ dimensional. The new basis vectors are $\vec{e}_1 \otimes \vec{f}_1$, $\vec{e}_1 \otimes \vec{f}_2$, $\vec{e}_1 \otimes \vec{f}_3$, $\vec{e}_2 \otimes \vec{f}_1$, $\vec{e}_2 \otimes \vec{f}_2$, and $\vec{e}_2 \otimes \vec{f}_3$. Let us write them as six-component column vectors,

$$\vec{e}_1 \otimes \vec{f}_1 = \left(\begin{array}{c} 1 \\ 0 \\ 0 \\ \hline 0 \\ 0 \\ 0 \end{array}\right), \quad \vec{e}_1 \otimes \vec{f}_2 = \left(\begin{array}{c} 0 \\ 1 \\ 0 \\ \hline 0 \\ 0 \\ 0 \end{array}\right), \quad \vec{e}_1 \otimes \vec{f}_3 = \left(\begin{array}{c} 0 \\ 0 \\ 1 \\ \hline 0 \\ 0 \\ 0 \end{array}\right),$$

$$\vec{e}_2 \otimes \vec{f}_1 = \left(\begin{array}{c} 0 \\ 0 \\ 0 \\ \hline 1 \\ 0 \\ 0 \end{array}\right), \quad \vec{e}_2 \otimes \vec{f}_2 = \left(\begin{array}{c} 0 \\ 0 \\ 0 \\ \hline 0 \\ 1 \\ 0 \end{array}\right), \quad \vec{e}_2 \otimes \vec{f}_3 = \left(\begin{array}{c} 0 \\ 0 \\ 0 \\ \hline 0 \\ 0 \\ 1 \end{array}\right). \tag{16}$$

Here, I drew a horizontal line to separate the two sets of basis vectors, the first set that is made of $\vec{e}_1$, and the second made of $\vec{e}_2$. For general vectors $\vec{v}$ and $\vec{w}$, the tensor product is

$$\vec{v} \otimes \vec{w} = \left(\begin{array}{c} v_1 w_1 \\ v_1 w_2 \\ v_1 w_3 \\ \hline v_2 w_1 \\ v_2 w_2 \\ v_2 w_3 \end{array}\right). \tag{17}$$
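The ordering used in Eqs. (16) and (17) treats the index of $V$ as the slow index and the index of $W$ as the fast one. A short Python sketch of that convention follows; `tensor_vec` and `flat_index` are names made up for this illustration, not anything defined in the notes.

```python
def tensor_vec(v, w):
    """Components v_i * w_j with i as the slow index and j as the fast index, as in Eq. (17)."""
    return [vi * wj for vi in v for wj in w]

def flat_index(i, j, m):
    """0-based position of the basis vector e_i (x) f_j, for 1-based i and j."""
    return (i - 1) * m + (j - 1)

v = [2, 3]        # n = 2
w = [5, 7, 11]    # m = 3

vw = tensor_vec(v, w)
print(vw)  # [10, 14, 22, 15, 21, 33]

# The basis vector e_2 (x) f_1 has a single 1 in the fourth slot, as in Eq. (16).
e2 = [0, 1]
f1 = [1, 0, 0]
basis_vec = tensor_vec(e2, f1)
print(basis_vec)  # [0, 0, 0, 1, 0, 0]

# Bilinearity: scaling v by 3 scales every component of the product by 3.
scaled = tensor_vec([3 * x for x in v], w)
```

Note the contrast with the direct sum: here the number of components multiplies (2 × 3 = 6) instead of adding.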

3.3 Matrices

A matrix is mathematically a linear map from a vector space to another vector space. Here, we specialize to the maps from a vector space to the same one because of our interest in applications to quantum mechanics, $A : V \to V$, e.g.,

$$\vec{v} \mapsto A\vec{v}, \tag{18}$$

or

$$\begin{pmatrix} v_1 \\ v_2 \\ \vdots \\ v_n \end{pmatrix}_V \mapsto \begin{pmatrix} a_{11} & a_{12} & \cdots & a_{1n} \\ a_{21} & a_{22} & \cdots & a_{2n} \\ \vdots & \vdots & \ddots & \vdots \\ a_{n1} & a_{n2} & \cdots & a_{nn} \end{pmatrix}_V \begin{pmatrix} v_1 \\ v_2 \\ \vdots \\ v_n \end{pmatrix}_V. \tag{19}$$

On the tensor product space, the same matrix can still act on the vectors, so that $\vec{v} \mapsto A\vec{v}$, but $\vec{w} \mapsto \vec{w}$ is left untouched. This matrix is written as $A \otimes I$, where $I$ is the identity matrix. In the previous example of $n = 2$ and $m = 3$, the matrix $A$ is two-by-two, while $A \otimes I$ is six-by-six,

$$A \otimes I = \begin{pmatrix} a_{11} & 0 & 0 & a_{12} & 0 & 0 \\ 0 & a_{11} & 0 & 0 & a_{12} & 0 \\ 0 & 0 & a_{11} & 0 & 0 & a_{12} \\ a_{21} & 0 & 0 & a_{22} & 0 & 0 \\ 0 & a_{21} & 0 & 0 & a_{22} & 0 \\ 0 & 0 & a_{21} & 0 & 0 & a_{22} \end{pmatrix}. \tag{20}$$

The reason why this expression is appropriate is clear once you act it on $\vec{v} \otimes \vec{w}$,

$$(A \otimes I)(\vec{v} \otimes \vec{w}) = \begin{pmatrix} a_{11} & 0 & 0 & a_{12} & 0 & 0 \\ 0 & a_{11} & 0 & 0 & a_{12} & 0 \\ 0 & 0 & a_{11} & 0 & 0 & a_{12} \\ a_{21} & 0 & 0 & a_{22} & 0 & 0 \\ 0 & a_{21} & 0 & 0 & a_{22} & 0 \\ 0 & 0 & a_{21} & 0 & 0 & a_{22} \end{pmatrix} \begin{pmatrix} v_1 w_1 \\ v_1 w_2 \\ v_1 w_3 \\ v_2 w_1 \\ v_2 w_2 \\ v_2 w_3 \end{pmatrix} = \begin{pmatrix} (a_{11} v_1 + a_{12} v_2) w_1 \\ (a_{11} v_1 + a_{12} v_2) w_2 \\ (a_{11} v_1 + a_{12} v_2) w_3 \\ (a_{21} v_1 + a_{22} v_2) w_1 \\ (a_{21} v_1 + a_{22} v_2) w_2 \\ (a_{21} v_1 + a_{22} v_2) w_3 \end{pmatrix} = (A\vec{v}) \otimes \vec{w}. \tag{21}$$

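Clearly the matrix $A$ acts only on $\vec{v} \in V$ and leaves $\vec{w} \in W$ untouched. Similarly, the matrix $B : W \to W$ maps $\vec{w} \mapsto B\vec{w}$. It can also act on $V \otimes W$ as $I \otimes B$, where

$$I \otimes B = \begin{pmatrix} b_{11} & b_{12} & b_{13} & 0 & 0 & 0 \\ b_{21} & b_{22} & b_{23} & 0 & 0 & 0 \\ b_{31} & b_{32} & b_{33} & 0 & 0 & 0 \\ 0 & 0 & 0 & b_{11} & b_{12} & b_{13} \\ 0 & 0 & 0 & b_{21} & b_{22} & b_{23} \\ 0 & 0 & 0 & b_{31} & b_{32} & b_{33} \end{pmatrix}. \tag{22}$$

It acts on $\vec{v} \otimes \vec{w}$ as

$$(I \otimes B)(\vec{v} \otimes \vec{w}) = \begin{pmatrix} b_{11} & b_{12} & b_{13} & 0 & 0 & 0 \\ b_{21} & b_{22} & b_{23} & 0 & 0 & 0 \\ b_{31} & b_{32} & b_{33} & 0 & 0 & 0 \\ 0 & 0 & 0 & b_{11} & b_{12} & b_{13} \\ 0 & 0 & 0 & b_{21} & b_{22} & b_{23} \\ 0 & 0 & 0 & b_{31} & b_{32} & b_{33} \end{pmatrix} \begin{pmatrix} v_1 w_1 \\ v_1 w_2 \\ v_1 w_3 \\ v_2 w_1 \\ v_2 w_2 \\ v_2 w_3 \end{pmatrix} = \begin{pmatrix} v_1 (b_{11} w_1 + b_{12} w_2 + b_{13} w_3) \\ v_1 (b_{21} w_1 + b_{22} w_2 + b_{23} w_3) \\ v_1 (b_{31} w_1 + b_{32} w_2 + b_{33} w_3) \\ v_2 (b_{11} w_1 + b_{12} w_2 + b_{13} w_3) \\ v_2 (b_{21} w_1 + b_{22} w_2 + b_{23} w_3) \\ v_2 (b_{31} w_1 + b_{32} w_2 + b_{33} w_3) \end{pmatrix} = \vec{v} \otimes (B\vec{w}). \tag{23}$$

In general, $(A \otimes I)(\vec{v} \otimes \vec{w}) = (A\vec{v}) \otimes \vec{w}$, and $(I \otimes B)(\vec{v} \otimes \vec{w}) = \vec{v} \otimes (B\vec{w})$.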

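If you have two matrices, their multiplications are done on each vector space separately,

$$\begin{aligned} (A_1 \otimes I)(A_2 \otimes I) &= (A_1 A_2) \otimes I, \\ (I \otimes B_1)(I \otimes B_2) &= I \otimes (B_1 B_2), \\ (A \otimes I)(I \otimes B) &= (I \otimes B)(A \otimes I) = (A \otimes B). \end{aligned} \tag{24}$$

The last expression allows us to write out $A \otimes B$ explicitly,

$$A \otimes B = \begin{pmatrix} a_{11} b_{11} & a_{11} b_{12} & a_{11} b_{13} & a_{12} b_{11} & a_{12} b_{12} & a_{12} b_{13} \\ a_{11} b_{21} & a_{11} b_{22} & a_{11} b_{23} & a_{12} b_{21} & a_{12} b_{22} & a_{12} b_{23} \\ a_{11} b_{31} & a_{11} b_{32} & a_{11} b_{33} & a_{12} b_{31} & a_{12} b_{32} & a_{12} b_{33} \\ a_{21} b_{11} & a_{21} b_{12} & a_{21} b_{13} & a_{22} b_{11} & a_{22} b_{12} & a_{22} b_{13} \\ a_{21} b_{21} & a_{21} b_{22} & a_{21} b_{23} & a_{22} b_{21} & a_{22} b_{22} & a_{22} b_{23} \\ a_{21} b_{31} & a_{21} b_{32} & a_{21} b_{33} & a_{22} b_{31} & a_{22} b_{32} & a_{22} b_{33} \end{pmatrix}. \tag{25}$$

It is easy to verify that $(A \otimes B)(\vec{v} \otimes \vec{w}) = (A\vec{v}) \otimes (B\vec{w})$.

Note that not every matrix on $V \otimes W$ can be written as a tensor product of a matrix on $V$ and another on $W$.

Other useful formulae are

$$\det(A \otimes B) = (\det A)^m (\det B)^n, \tag{26}$$

$$\mathrm{Tr}(A \otimes B) = (\mathrm{Tr} A)(\mathrm{Tr} B). \tag{27}$$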
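The identities in Eqs. (24) to (27) lend themselves to a quick numerical check. The following Python sketch builds the Kronecker product with the same index ordering as Eq. (25); the helper names and the sample integer matrices are illustrative assumptions, and the cofactor-expansion determinant is meant only for matrices this small.

```python
def kron(A, B):
    """Kronecker product: block (i, j) of the result is A[i][j] times B, as in Eq. (25)."""
    return [[a * b for a in row_a for b in row_b]
            for row_a in A for row_b in B]

def identity(n):
    return [[1 if i == j else 0 for j in range(n)] for i in range(n)]

def matmul(X, Y):
    return [[sum(X[i][k] * Y[k][j] for k in range(len(Y)))
             for j in range(len(Y[0]))] for i in range(len(X))]

def matvec(M, x):
    return [sum(row[k] * x[k] for k in range(len(x))) for row in M]

def tensor_vec(v, w):
    return [vi * wj for vi in v for wj in w]

def trace(M):
    return sum(M[i][i] for i in range(len(M)))

def det(M):
    """Determinant by cofactor expansion along the first row."""
    if len(M) == 1:
        return M[0][0]
    return sum((-1) ** j * M[0][j] * det([row[:j] + row[j + 1:] for row in M[1:]])
               for j in range(len(M)))

A = [[1, 2], [3, 4]]                    # n = 2
B = [[2, 0, 1], [1, 3, 0], [0, 1, 1]]   # m = 3
v, w = [1, -1], [2, 0, 1]

AB = kron(A, B)
A_I = kron(A, identity(3))
I_B = kron(identity(2), B)

print(matmul(A_I, I_B) == AB and matmul(I_B, A_I) == AB)   # last line of Eq. (24)
print(matvec(AB, tensor_vec(v, w)) == tensor_vec(matvec(A, v), matvec(B, w)))
print(trace(AB) == trace(A) * trace(B))                    # Eq. (27)
print(det(AB) == det(A) ** 3 * det(B) ** 2)                # Eq. (26) with m = 3, n = 2
```

All four lines should print `True` for any choice of integer matrices of these sizes, since the arithmetic is exact.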


In Mathematica, you can define the tensor product of two square matrices by

    Needs["LinearAlgebra`MatrixManipulation`"];
    (* In Mathematica 6 and later, KroneckerProduct is built in and this definition is not needed. *)
    KroneckerProduct[a_?SquareMatrixQ, b_?SquareMatrixQ] :=
      BlockMatrix[Outer[Times, a, b]]

and then

    KroneckerProduct[A, B]

3.4 Tensor Product in Quantum Mechanics

In quantum mechanics, we associate a Hilbert space with each dynamical degree of freedom. For example, a free particle in three dimensions has three dynamical degrees of freedom, $p_x$, $p_y$, and $p_z$. Note that we can specify only $p_x$ or $x$, but not both, and hence each dimension gives only one degree of freedom, unlike in classical mechanics where you have two. Therefore, momentum eigenkets $P_x |p_x\rangle = p_x |p_x\rangle$ form a basis system for the Hilbert space $\mathcal{H}_x$, etc.

The eigenstate of the full Hamiltonian is obtained by the tensor product of momentum eigenstates in each direction,

$$|p_x, p_y, p_z\rangle = |p_x\rangle \otimes |p_y\rangle \otimes |p_z\rangle. \tag{28}$$

We normally don't bother writing it this way, but it is nothing but a tensor product.

The operator $P_x$ is then $P_x \otimes I \otimes I$, and acts as

$$(P_x \otimes I \otimes I)(|p_x\rangle \otimes |p_y\rangle \otimes |p_z\rangle) = (P_x |p_x\rangle) \otimes (I |p_y\rangle) \otimes (I |p_z\rangle) = p_x\, |p_x\rangle \otimes |p_y\rangle \otimes |p_z\rangle, \tag{29}$$

and similarly for $P_y$ and $P_z$.

The Hamiltonian is actually

$$H = \frac{1}{2m} \left( (P_x \otimes I \otimes I)^2 + (I \otimes P_y \otimes I)^2 + (I \otimes I \otimes P_z)^2 \right). \tag{30}$$

Clearly we don't want to write the tensor products explicitly each time!

3.5 Addition of Angular Momenta

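Using the example of $n = 2$ and $m = 3$, one can discuss the addition of $s = 1/2$ and $l = 1$. $V$ is two-dimensional, representing the spin 1/2, while $W$ is three-dimensional, representing the orbital angular momentum one. As usual, the basis vectors are

$$\left|\tfrac{1}{2}, \tfrac{1}{2}\right\rangle = \begin{pmatrix} 1 \\ 0 \end{pmatrix}, \qquad \left|\tfrac{1}{2}, -\tfrac{1}{2}\right\rangle = \begin{pmatrix} 0 \\ 1 \end{pmatrix}, \tag{31}$$

for $s = 1/2$, and

$$|1, 1\rangle = \begin{pmatrix} 1 \\ 0 \\ 0 \end{pmatrix}, \qquad |1, 0\rangle = \begin{pmatrix} 0 \\ 1 \\ 0 \end{pmatrix}, \qquad |1, -1\rangle = \begin{pmatrix} 0 \\ 0 \\ 1 \end{pmatrix}, \tag{32}$$

for $l = 1$. The matrix representations of the angular momentum operators are

$$S_+ = \hbar \begin{pmatrix} 0 & 1 \\ 0 & 0 \end{pmatrix}, \qquad S_- = \hbar \begin{pmatrix} 0 & 0 \\ 1 & 0 \end{pmatrix}, \qquad S_z = \frac{\hbar}{2} \begin{pmatrix} 1 & 0 \\ 0 & -1 \end{pmatrix}, \tag{33}$$

while

$$L_+ = \hbar \begin{pmatrix} 0 & \sqrt{2} & 0 \\ 0 & 0 & \sqrt{2} \\ 0 & 0 & 0 \end{pmatrix}, \qquad L_- = \hbar \begin{pmatrix} 0 & 0 & 0 \\ \sqrt{2} & 0 & 0 \\ 0 & \sqrt{2} & 0 \end{pmatrix}, \qquad L_z = \hbar \begin{pmatrix} 1 & 0 & 0 \\ 0 & 0 & 0 \\ 0 & 0 & -1 \end{pmatrix}. \tag{34}$$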

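On the tensor product space $V \otimes W$, they become

$$S_+ \otimes I = \hbar \begin{pmatrix} 0 & 0 & 0 & 1 & 0 & 0 \\ 0 & 0 & 0 & 0 & 1 & 0 \\ 0 & 0 & 0 & 0 & 0 & 1 \\ 0 & 0 & 0 & 0 & 0 & 0 \\ 0 & 0 & 0 & 0 & 0 & 0 \\ 0 & 0 & 0 & 0 & 0 & 0 \end{pmatrix}, \qquad I \otimes L_+ = \hbar \begin{pmatrix} 0 & \sqrt{2} & 0 & 0 & 0 & 0 \\ 0 & 0 & \sqrt{2} & 0 & 0 & 0 \\ 0 & 0 & 0 & 0 & 0 & 0 \\ 0 & 0 & 0 & 0 & \sqrt{2} & 0 \\ 0 & 0 & 0 & 0 & 0 & \sqrt{2} \\ 0 & 0 & 0 & 0 & 0 & 0 \end{pmatrix},$$

$$S_- \otimes I = \hbar \begin{pmatrix} 0 & 0 & 0 & 0 & 0 & 0 \\ 0 & 0 & 0 & 0 & 0 & 0 \\ 0 & 0 & 0 & 0 & 0 & 0 \\ 1 & 0 & 0 & 0 & 0 & 0 \\ 0 & 1 & 0 & 0 & 0 & 0 \\ 0 & 0 & 1 & 0 & 0 & 0 \end{pmatrix}, \qquad I \otimes L_- = \hbar \begin{pmatrix} 0 & 0 & 0 & 0 & 0 & 0 \\ \sqrt{2} & 0 & 0 & 0 & 0 & 0 \\ 0 & \sqrt{2} & 0 & 0 & 0 & 0 \\ 0 & 0 & 0 & 0 & 0 & 0 \\ 0 & 0 & 0 & \sqrt{2} & 0 & 0 \\ 0 & 0 & 0 & 0 & \sqrt{2} & 0 \end{pmatrix},$$

$$S_z \otimes I = \frac{\hbar}{2} \begin{pmatrix} 1 & 0 & 0 & 0 & 0 & 0 \\ 0 & 1 & 0 & 0 & 0 & 0 \\ 0 & 0 & 1 & 0 & 0 & 0 \\ 0 & 0 & 0 & -1 & 0 & 0 \\ 0 & 0 & 0 & 0 & -1 & 0 \\ 0 & 0 & 0 & 0 & 0 & -1 \end{pmatrix}, \qquad I \otimes L_z = \hbar \begin{pmatrix} 1 & 0 & 0 & 0 & 0 & 0 \\ 0 & 0 & 0 & 0 & 0 & 0 \\ 0 & 0 & -1 & 0 & 0 & 0 \\ 0 & 0 & 0 & 1 & 0 & 0 \\ 0 & 0 & 0 & 0 & 0 & 0 \\ 0 & 0 & 0 & 0 & 0 & -1 \end{pmatrix}. \tag{35}$$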

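The addition of angular momenta is done simply by adding these matrices, $J_{\pm,z} = S_{\pm,z} \otimes I + I \otimes L_{\pm,z}$,

$$J_+ = \hbar \begin{pmatrix} 0 & \sqrt{2} & 0 & 1 & 0 & 0 \\ 0 & 0 & \sqrt{2} & 0 & 1 & 0 \\ 0 & 0 & 0 & 0 & 0 & 1 \\ 0 & 0 & 0 & 0 & \sqrt{2} & 0 \\ 0 & 0 & 0 & 0 & 0 & \sqrt{2} \\ 0 & 0 & 0 & 0 & 0 & 0 \end{pmatrix},$$

$$J_- = \hbar \begin{pmatrix} 0 & 0 & 0 & 0 & 0 & 0 \\ \sqrt{2} & 0 & 0 & 0 & 0 & 0 \\ 0 & \sqrt{2} & 0 & 0 & 0 & 0 \\ 1 & 0 & 0 & 0 & 0 & 0 \\ 0 & 1 & 0 & \sqrt{2} & 0 & 0 \\ 0 & 0 & 1 & 0 & \sqrt{2} & 0 \end{pmatrix},$$

$$J_z = \hbar \begin{pmatrix} \frac{3}{2} & 0 & 0 & 0 & 0 & 0 \\ 0 & \frac{1}{2} & 0 & 0 & 0 & 0 \\ 0 & 0 & -\frac{1}{2} & 0 & 0 & 0 \\ 0 & 0 & 0 & \frac{1}{2} & 0 & 0 \\ 0 & 0 & 0 & 0 & -\frac{1}{2} & 0 \\ 0 & 0 & 0 & 0 & 0 & -\frac{3}{2} \end{pmatrix}. \tag{36}$$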
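The matrices in Eq. (36) can be rebuilt mechanically from the small ones in Eqs. (33) and (34). The sketch below does so in plain Python, working in units where $\hbar = 1$ (an assumption made here to keep the numbers simple); the helper names `kron`, `add`, and `total` are hypothetical. It also checks the commutation relation $[J_+, J_-] = 2\hbar J_z$, a standard property of angular momentum operators that is not stated in the notes.

```python
from math import sqrt, isclose

def kron(A, B):
    """Kronecker product with the index of the first factor as the slow index."""
    return [[a * b for a in row_a for b in row_b]
            for row_a in A for row_b in B]

def identity(n):
    return [[1 if i == j else 0 for j in range(n)] for i in range(n)]

def add(X, Y):
    return [[x + y for x, y in zip(rx, ry)] for rx, ry in zip(X, Y)]

def matmul(X, Y):
    return [[sum(X[i][k] * Y[k][j] for k in range(len(Y)))
             for j in range(len(Y[0]))] for i in range(len(X))]

def transpose(M):
    return [list(col) for col in zip(*M)]

r2 = sqrt(2)

# Spin-1/2 and l = 1 matrices from Eqs. (33) and (34), with hbar = 1.
S_plus = [[0, 1], [0, 0]]
S_z = [[0.5, 0], [0, -0.5]]
L_plus = [[0, r2, 0], [0, 0, r2], [0, 0, 0]]
L_z = [[1, 0, 0], [0, 0, 0], [0, 0, -1]]
S_minus = transpose(S_plus)   # these matrices are real, so the adjoint is the transpose
L_minus = transpose(L_plus)

def total(S, L):
    """S (x) I + I (x) L on the six-dimensional tensor product space."""
    return add(kron(S, identity(3)), kron(identity(2), L))

J_plus = total(S_plus, L_plus)
J_minus = total(S_minus, L_minus)
J_z = total(S_z, L_z)

print([J_z[i][i] for i in range(6)])  # [1.5, 0.5, -0.5, 0.5, -0.5, -1.5]

# Commutator [J_+, J_-] compared entry by entry with 2 J_z.
PM, MP = matmul(J_plus, J_minus), matmul(J_minus, J_plus)
commutator_ok = all(isclose(PM[i][j] - MP[i][j], 2 * J_z[i][j], abs_tol=1e-12)
                    for i in range(6) for j in range(6))
print(commutator_ok)  # True
```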

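It is clear that

$$\left|\tfrac{3}{2}, \tfrac{3}{2}\right\rangle = \begin{pmatrix} 1 \\ 0 \\ 0 \\ 0 \\ 0 \\ 0 \end{pmatrix}, \qquad \left|\tfrac{3}{2}, -\tfrac{3}{2}\right\rangle = \begin{pmatrix} 0 \\ 0 \\ 0 \\ 0 \\ 0 \\ 1 \end{pmatrix}. \tag{37}$$

They satisfy $J_+ |\tfrac{3}{2}, \tfrac{3}{2}\rangle = J_- |\tfrac{3}{2}, -\tfrac{3}{2}\rangle = 0$. By using the general formula $J_\pm |j, m\rangle = \hbar \sqrt{j(j+1) - m(m \pm 1)}\, |j, m \pm 1\rangle$, we find

$$J_- \left|\tfrac{3}{2}, \tfrac{3}{2}\right\rangle = \hbar \begin{pmatrix} 0 \\ \sqrt{2} \\ 0 \\ 1 \\ 0 \\ 0 \end{pmatrix} = \sqrt{3}\,\hbar \left|\tfrac{3}{2}, \tfrac{1}{2}\right\rangle, \qquad J_+ \left|\tfrac{3}{2}, -\tfrac{3}{2}\right\rangle = \hbar \begin{pmatrix} 0 \\ 0 \\ 1 \\ 0 \\ \sqrt{2} \\ 0 \end{pmatrix} = \sqrt{3}\,\hbar \left|\tfrac{3}{2}, -\tfrac{1}{2}\right\rangle. \tag{38}$$

We normally write them without using column vectors,

$$\begin{aligned} J_- \left|\tfrac{3}{2}, \tfrac{3}{2}\right\rangle &= J_- \left|\tfrac{1}{2}, \tfrac{1}{2}\right\rangle \otimes |1, 1\rangle \\ &= \hbar \left|\tfrac{1}{2}, -\tfrac{1}{2}\right\rangle \otimes |1, 1\rangle + \sqrt{2}\,\hbar \left|\tfrac{1}{2}, \tfrac{1}{2}\right\rangle \otimes |1, 0\rangle = \sqrt{3}\,\hbar \left|\tfrac{3}{2}, \tfrac{1}{2}\right\rangle, \end{aligned} \tag{39}$$

$$\begin{aligned} J_+ \left|\tfrac{3}{2}, -\tfrac{3}{2}\right\rangle &= J_+ \left|\tfrac{1}{2}, -\tfrac{1}{2}\right\rangle \otimes |1, -1\rangle \\ &= \hbar \left|\tfrac{1}{2}, \tfrac{1}{2}\right\rangle \otimes |1, -1\rangle + \sqrt{2}\,\hbar \left|\tfrac{1}{2}, -\tfrac{1}{2}\right\rangle \otimes |1, 0\rangle = \sqrt{3}\,\hbar \left|\tfrac{3}{2}, -\tfrac{1}{2}\right\rangle. \end{aligned} \tag{40}$$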

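Their orthogonal linear combinations belong to the $j = 1/2$ representation,

$$\left|\tfrac{1}{2}, \tfrac{1}{2}\right\rangle = \frac{1}{\sqrt{3}} \begin{pmatrix} 0 \\ -1 \\ 0 \\ \sqrt{2} \\ 0 \\ 0 \end{pmatrix}, \qquad \left|\tfrac{1}{2}, -\tfrac{1}{2}\right\rangle = \frac{1}{\sqrt{3}} \begin{pmatrix} 0 \\ 0 \\ -\sqrt{2} \\ 0 \\ 1 \\ 0 \end{pmatrix}. \tag{41}$$

It is easy to verify $J_+ |\tfrac{1}{2}, \tfrac{1}{2}\rangle = J_- |\tfrac{1}{2}, -\tfrac{1}{2}\rangle = 0$, and $J_- |\tfrac{1}{2}, \tfrac{1}{2}\rangle = \hbar\, |\tfrac{1}{2}, -\tfrac{1}{2}\rangle$.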

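Now we can go to a new basis system by a unitary rotation

$$U = \begin{pmatrix} 1 & 0 & 0 & 0 & 0 & 0 \\ 0 & \sqrt{\frac{2}{3}} & 0 & \sqrt{\frac{1}{3}} & 0 & 0 \\ 0 & 0 & \sqrt{\frac{1}{3}} & 0 & \sqrt{\frac{2}{3}} & 0 \\ 0 & 0 & 0 & 0 & 0 & 1 \\ 0 & -\sqrt{\frac{1}{3}} & 0 & \sqrt{\frac{2}{3}} & 0 & 0 \\ 0 & 0 & -\sqrt{\frac{2}{3}} & 0 & \sqrt{\frac{1}{3}} & 0 \end{pmatrix}. \tag{42}$$

This matrix puts the transposes of the vectors in successive rows, so that each vector of definite $j$ and $m$ is mapped to a single component of the column vector,

$$U \left|\tfrac{3}{2}, \tfrac{3}{2}\right\rangle = \begin{pmatrix} 1 \\ 0 \\ 0 \\ 0 \\ 0 \\ 0 \end{pmatrix}, \quad U \left|\tfrac{3}{2}, \tfrac{1}{2}\right\rangle = \begin{pmatrix} 0 \\ 1 \\ 0 \\ 0 \\ 0 \\ 0 \end{pmatrix}, \quad U \left|\tfrac{3}{2}, -\tfrac{1}{2}\right\rangle = \begin{pmatrix} 0 \\ 0 \\ 1 \\ 0 \\ 0 \\ 0 \end{pmatrix}, \quad U \left|\tfrac{3}{2}, -\tfrac{3}{2}\right\rangle = \begin{pmatrix} 0 \\ 0 \\ 0 \\ 1 \\ 0 \\ 0 \end{pmatrix},$$

$$U \left|\tfrac{1}{2}, \tfrac{1}{2}\right\rangle = \begin{pmatrix} 0 \\ 0 \\ 0 \\ 0 \\ 1 \\ 0 \end{pmatrix}, \quad U \left|\tfrac{1}{2}, -\tfrac{1}{2}\right\rangle = \begin{pmatrix} 0 \\ 0 \\ 0 \\ 0 \\ 0 \\ 1 \end{pmatrix}. \tag{43}$$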

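For the generators, we find

$$U J_z U^\dagger = \hbar \begin{pmatrix} \frac{3}{2} & 0 & 0 & 0 & 0 & 0 \\ 0 & \frac{1}{2} & 0 & 0 & 0 & 0 \\ 0 & 0 & -\frac{1}{2} & 0 & 0 & 0 \\ 0 & 0 & 0 & -\frac{3}{2} & 0 & 0 \\ 0 & 0 & 0 & 0 & \frac{1}{2} & 0 \\ 0 & 0 & 0 & 0 & 0 & -\frac{1}{2} \end{pmatrix}, \tag{44}$$

$$U J_+ U^\dagger = \hbar \begin{pmatrix} 0 & \sqrt{3} & 0 & 0 & 0 & 0 \\ 0 & 0 & 2 & 0 & 0 & 0 \\ 0 & 0 & 0 & \sqrt{3} & 0 & 0 \\ 0 & 0 & 0 & 0 & 0 & 0 \\ 0 & 0 & 0 & 0 & 0 & 1 \\ 0 & 0 & 0 & 0 & 0 & 0 \end{pmatrix}, \tag{45}$$

$$U J_- U^\dagger = \hbar \begin{pmatrix} 0 & 0 & 0 & 0 & 0 & 0 \\ \sqrt{3} & 0 & 0 & 0 & 0 & 0 \\ 0 & 2 & 0 & 0 & 0 & 0 \\ 0 & 0 & \sqrt{3} & 0 & 0 & 0 \\ 0 & 0 & 0 & 0 & 0 & 0 \\ 0 & 0 & 0 & 0 & 1 & 0 \end{pmatrix}. \tag{46}$$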
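The change of basis can be checked numerically by conjugating $J_z$ and $J_+$ with $U$. The Python sketch below assumes the sign convention in which $|\tfrac{1}{2}, \tfrac{1}{2}\rangle \propto (0, -1, 0, \sqrt{2}, 0, 0)$ and $|\tfrac{1}{2}, -\tfrac{1}{2}\rangle \propto (0, 0, -\sqrt{2}, 0, 1, 0)$, works in units of $\hbar = 1$, and uses the fact that $U$ is real so that $U^\dagger$ is just the transpose. The helper names are illustrative only.

```python
from math import sqrt, isclose

def matmul(X, Y):
    return [[sum(X[i][k] * Y[k][j] for k in range(len(Y)))
             for j in range(len(Y[0]))] for i in range(len(X))]

def transpose(M):
    return [list(col) for col in zip(*M)]

r2 = sqrt(2)
a, b = sqrt(2 / 3), sqrt(1 / 3)

# J_+ and J_z in the product basis, Eq. (36), with hbar = 1.
J_plus = [
    [0, r2, 0, 1, 0, 0],
    [0, 0, r2, 0, 1, 0],
    [0, 0, 0, 0, 0, 1],
    [0, 0, 0, 0, r2, 0],
    [0, 0, 0, 0, 0, r2],
    [0, 0, 0, 0, 0, 0],
]
J_z = [[0] * 6 for _ in range(6)]
for i, m_value in enumerate([1.5, 0.5, -0.5, 0.5, -0.5, -1.5]):
    J_z[i][i] = m_value

# Rows of U are the states of definite j and m: four j = 3/2 states, then two j = 1/2 states.
U = [
    [1, 0, 0, 0, 0, 0],
    [0, a, 0, b, 0, 0],
    [0, 0, b, 0, a, 0],
    [0, 0, 0, 0, 0, 1],
    [0, -b, 0, a, 0, 0],
    [0, 0, -a, 0, b, 0],
]
Ut = transpose(U)

def rotate(M):
    """Return U M U^T, the matrix M expressed in the new basis."""
    return matmul(matmul(U, M), Ut)

def off_block_is_zero(M, tol=1e-12):
    """True if M has no entries connecting the first four and the last two basis states."""
    return all(abs(M[i][j]) < tol and abs(M[j][i]) < tol
               for i in range(4) for j in range(4, 6))

Jz_new = rotate(J_z)
Jp_new = rotate(J_plus)

print(off_block_is_zero(Jz_new), off_block_is_zero(Jp_new))  # True True
print([round(Jz_new[i][i], 6) for i in range(6)])            # [1.5, 0.5, -0.5, -1.5, 0.5, -0.5]
```

The vanishing off-block entries are what makes the rotated generators a direct sum of a four-by-four block and a two-by-two block.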

Here, the matrices are written in a block-diagonal form. What we see here is that this space is actually a direct sum of two representations, $j = 3/2$ and $j = 1/2$. In other words,

$$V_{1/2} \otimes V_1 = V_{3/2} \oplus V_{1/2}. \tag{47}$$

This is what we do with Clebsch-Gordan coefficients. Namely, the Clebsch-Gordan coefficients define a unitary transformation that allows us to decompose the tensor product space into a direct sum of irreducible representations of the angular momentum. As a mnemonic, we often write it as

$$2 \otimes 3 = 4 \oplus 2. \tag{48}$$

Here, the numbers refer to the dimensionality $2j + 1$ of each representation. If I replace $\otimes$ by $\times$ and $\oplus$ by $+$, my eight-year-old daughter can confirm that this equation is true. The added meaning here is that the right-hand side shows a specific way in which the tensor product space decomposes into a direct sum.
