An Analysis of Some Graph Theoretical Cluster Techniques

J. GARY AUGUSTSON AND JACK MINKER

University of Maryland,* College Park, Maryland

ABSTRACT. Several graph theoretic cluster techniques aimed at the automatic generation of thesauri for information retrieval systems are explored. Experimental cluster analysis is performed on a sample corpus of 2267 documents. A term-term similarity matrix is constructed for the 3950 unique terms used to index the documents. Various threshold values, T, are applied to the similarity matrix to provide a series of binary threshold matrices. The corresponding graph of each binary threshold matrix is used to obtain the term clusters. Three definitions of a cluster are analyzed: (1) the connected components of the threshold matrix; (2) the maximal complete subgraphs of the connected components of the threshold matrix; (3) clusters of the maximal complete subgraphs of the threshold matrix, as described by Gotlieb and Kumar. Algorithms are described and analyzed for obtaining each cluster type. The algorithms are designed to be useful for large document and index collections. Two algorithms have been tested that find maximal complete subgraphs. An algorithm developed by Bierstone offers a significant time improvement over one suggested by Bonner. For threshold levels T > 0.6, basically the same clusters are developed regardless of the cluster definition used. In such situations one need only find the connected components of the graph to develop the clusters.

KEY WORDS AND PHRASES: cluster analysis, graph-theoretic, connected component, maximal complete subgraph, clique, binary threshold matrix, information storage and retrieval, similarity matrix, term-term matrix

CR CATEGORIES: 3.71, 5.32

1. Introduction

One of the major problems facing a user of present-day information systems is how to extract information pertinent to his needs. Most users are not sufficiently familiar with a document collection to assure the retrieval of relevant documents. In most information systems, whether automated or not, some relationship can be established between the various terms used to index documents. Once relationships between index terms of a collection are determined, they can be provided to the user so that he may query the system more effectively. Extensive experimental work has been undertaken in order to develop statistically determined term associations. However, work performed by Salton [35] and Lesk [23] on a limited sample document collection indicates that a well-constructed thesaurus may prove to be the best method of exhibiting term associations. If this observation, based on a small corpus, proves to be true, the problem remains that, even though a relationship between index terms generally exists, very few thesauri of index terms are available.

* Computer Science Center. The computer time for this paper was supported by National Aeronautics and Space Administration Grant NsG-398 to the Computer Science Center of the University of Maryland. Part of the work was sponsored under National Bureau of Standards contract No. CST-821-5-69.

Journal of the Association for Computing Machinery, Vol. 17, No. 4, October 1970, pp. 571-588.

Experimentation in the field of cluster analysis is aimed at providing the user of an information system with an automatically generated thesaurus. The thesaurus produced could serve a twofold purpose. First, it could constitute a reasonable representation of the interrelatedness of the index terms that could be used to query the document collection. Second, if a better thesaurus is desired, the term relations produced could provide an original partition of the terms which subject area specialists could then refine.

This paper presents an analysis and comparison of term clustering mechanisms which utilize graph theoretical techniques to produce a term thesaurus for a sample data base consisting of 2267 documents indexed by 3950 unique index terms. The following graph theoretical definitions are used to define different levels of cluster definition: (1) connected components¹; (2) maximal complete subgraphs²; (3) clusters of maximal complete subgraphs. Algorithms for producing each type of cluster are described and analyzed. The algorithms of Bierstone [6] and Bonner [7] are used to produce maximal complete subgraphs of the sample data base. Since maximal complete subgraphs provide the basis for the more refined graph theoretical cluster definitions, these two algorithms are explained and compared in some detail. The results of our research show that Bierstone's approach of building all complete subgraphs until they eventually become maximal is superior in terms of time and space requirements to Bonner's approach of iteratively producing one maximal complete subgraph after another.

The clustering techniques discussed in this paper could be applied to data sets larger than the one described here.
The series of programs used to construct the similarity matrix are capable of providing results from any size data set; however, since massive data sets must be sorted, the time requirements for creating a similarity matrix for large data sets could be prohibitive. In our sample data set, approximately 30 minutes of IBM 7094 computer time was required to produce the similarity matrix starting from a document-term matrix representing 2267 documents and 3950 index terms. Most of this time was spent in performing massive sorts. It can be expected that larger data sets will require more computer time. The algorithm used to create the connected components of a data set is restricted only by the number of index terms contained in the input data set. Our particular implementation could handle a data set containing on the order of $10^4$ unique terms. The Bierstone algorithm for producing maximal complete subgraphs is limited only by the number of nodes in the input connected component. Our experimental system only allowed connected components of 72 terms or fewer. A simple alteration to Bierstone's algorithm allows the handling of input connected components of over 2000 nodes. The capabilities of both these algorithms could be increased if they were implemented on computers with larger core memory facilities. Our experimental work has shown that time should not be a limiting factor in producing either the connected components or the maximal complete subgraphs from any size input set.

¹ A connected component of a graph consists of the set of nodes that are mutually reachable by proceeding along the edges of the graph.

² A complete subgraph is a subgraph in which all pairs of nodes are connected by an edge. Such a complete subgraph is maximal when it is not contained in any other complete subgraph.

2. Related Work in Cluster Analysis

2.1. GENERAL CLUSTER TECHNIQUES. Many individuals have made substantial contributions to the field of cluster analysis. Ball [4] surveys many of these efforts. In this section we note, briefly, some of the previous contributions to developing clusters of terms in a document collection. Tanimoto [34, 43], in the late 1950s, studied aspects of this problem. We use Tanimoto's similarity measure in this study. In 1960, Borko [8] used the principle of factor analysis to develop clusters for a 90 × 90 correlation matrix. Stiles and Salisbury [42] have utilized a so-called B-coefficient to subdivide term profiles into distinct sets. Baker [3], in 1962, suggested the use of latent class analysis to develop clusters. Needham [25] has experimented with cluster finding techniques using what he calls arithmetic cohesion, and terms his process "clump" finding. Spärck-Jones [39], at the Cambridge Language Research Unit, has extended Needham's work and has experimented with a set of 641 terms. Recently, Dattola [13] has developed a cluster method based on an adaptation of a technique suggested by Doyle [14]. Dattola's technique assures that his method will converge to a set of clusters, whereas Doyle's approach need not terminate.

2.2. GRAPH THEORETICAL CLUSTER TECHNIQUES. The original suggestion to use graph theoretical definitions of a cluster was made, perhaps, by Kuhns [22] in December 1959. Kuhns, in his paper, defines the maximal complete subgraph of a graph as a cluster. He does not provide experimental results. Parker-Rhodes and Needham [27, 30, 31] have defined what is called a G-R clump, an iterative procedure having some graph theoretical relations. Dale and Dale [12] have experimented with this technique. Spärck-Jones [37] has reported on an extension of clustering work performed by herself and Needham. Clusters were produced from a data base of 712 terms using four definitions of a cluster, which she terms (1) strings, (2) stars, (3) cliques (maximal complete subgraphs), and (4) clumps. Gotlieb and Kumar [16] also use the concept of maximal complete subgraphs for defining clusters. They employ the Library of Congress's subject heading list to develop clusters of terms rather than a document collection from which one develops a term-term matrix. An important aspect of their work is the suggestion to form clusters of the maximal complete subgraphs. We experiment with this approach in this paper. For other work in cluster analysis, see the References.

3. Experimental System

3.1. OVERVIEW OF THE EXPERIMENTAL SYSTEM. The experimental work reported on in this paper is presented in a more extensive paper [2]. The work consisted of the development of a data base, consisting of a set of documents and a set of terms used to index the documents. A similarity matrix with entries between 0 and 1 is constructed from the document-term matrix to show the interrelatedness of the various index terms. Various threshold values are applied to the similarity matrix to produce the threshold matrices upon which the clustering process is performed. The connected components of the threshold matrices provide the weakest definition of a cluster; the maximal complete subgraphs of the threshold matrices provide the strictest definition of a cluster. (Figure 1 illustrates a typical cluster graph, and the definitions used for a cluster.) Some combining of the maximal complete subgraphs is performed in order to provide a definition of a cluster intermediate between the connected components of a graph and the maximal complete subgraphs of the graph.

A corpus consisting of 2267 documents indexed by 3950 unique terms, and concerning a wide variety of topics, was used for the study. A term-term similarity matrix, consisting of elements $a_{ij}$, using the Tanimoto [34] similarity measure, was then constructed. The element $a_{ij}$ of the term-term matrix represents the degree to which terms i and j of the document collection are interrelated. A series of binary threshold matrices were constructed from the resultant similarity matrix for values of T = 0.1, T = 0.2, T = 0.3, T = 0.4, T = 0.5, T = 0.6, and T = 0.7. If an entry $a_{ij}$ of the similarity matrix was greater than or equal to the threshold value T, then the corresponding entry of the threshold matrix was set to one; otherwise, it was set to zero. The binary symmetric threshold matrix is equivalent to an undirected graph where the terms are the nodes of the graph, and where an edge exists between nodes i and j if the threshold matrix has a one in the (i, j)-th position.
An algorithm was developed to partition each threshold matrix into a set of disjoint threshold matrices, corresponding to the connected components of the graph represented by the threshold matrix. Algorithms developed by Bonner [7] and Bierstone [6] were modified and implemented to find the maximal complete subgraphs of the connected components of the threshold matrices. Maximal complete subgraphs were produced only from threshold matrices for T = 0.4, T = 0.5, T = 0.6, and T = 0.7 due to the large size of the connected components found for values of T < 0.4. Using the procedure suggested by Gotlieb and Kumar [16], those maximal complete subgraphs which had a significant number of common terms were grouped together to form new clusters. These clusters provide our third definition of a cluster and represent an intermediate definition between those clusters defined by the connected components of the threshold matrices and those defined by the maximal complete subgraphs of the connected components. The clusters produced from the several threshold matrices and the three cluster definitions were analyzed and compared as to general composition and content.

[Figure 1: graph whose nodes are the terms Bombers, Strategic, Missiles, Legal, Sabotage, Recruitment, Propaganda, Clandestine, Guerrillas, Insurgency, Paramilitary, and Motivations]

FIG. 1. Typical clusters

1. The graph has two connected components: {Bombers, Strategic, Missiles} and {Legal, Sabotage, Recruitment, Propaganda, Clandestine, Guerrillas, Insurgency, Paramilitary, Motivations}.
2. The graph has four maximal complete sets: {Bombers, Missiles, Strategic}, {Legal, Sabotage, Recruitment, Propaganda}, {Propaganda, Clandestine, Guerrillas, Insurgency, Paramilitary}, {Motivations, Clandestine, Guerrillas, Insurgency, Paramilitary}.
3. The graph has three grouped maximal complete sets: {Bombers, Strategic, Missiles}, {Sabotage, Legal, Recruitment, Propaganda}, {Propaganda, Clandestine, Guerrillas, Insurgency, Paramilitary, Motivations}.

3.2. DESCRIPTION OF THE CORPUS. The corpus used for this study consists of 2267 documents composed basically of research, development, test, and evaluation information from 22 broad subject fields of science covering a five-year period from 1963 to 1968. The majority of the documents are from the fields of mathematics, physics, and communication. For each document, the author, title, abstract, and descriptors were available. For the work reported on in this paper, we are concerned only with the title and the descriptors. Approximately 90 percent of the documents were assigned descriptors at composition time by the author. The remaining documents were indexed by non-subject-matter-oriented individuals with the aid of a master descriptor dictionary. Nearly one-fourth of the contributing authors had access to this master descriptor dictionary while indexing their own documents. Regardless of who performed the initial indexing of the documents, all indexed documents were post-edited by library personnel prior to insertion into the collection in order to assure proper indexing. The authors of this paper were not involved in this process, but merely used the results of the above efforts.
As this corpus was already in machine readable form, the tedious work of gathering and encoding a representative set of documents was avoided. The corpus is a subset of a much larger collection composed of documents from the same subject areas. Though no previous experimental work had been performed on this particular set, it was possible to consult with individuals who were better acquainted with the contents of the entire collection to determine if the clusters produced were meaningful.

3.3. SELECTION OF A SIMILARITY MEASURE. Some measure of the relatedness between terms used to index the documents of the data set must be established in order to perform cluster analysis. Several different similarity measures have been proposed [7, 12, 18, 21, 41, 43]. Since in-depth comparisons and evaluations concerning various similarity measures have been conducted before by other authors [18, 21, 36], only one similarity measure was studied. Spärck-Jones [37], in particular, comments that the several similarity definitions used in her cluster production experiments did not appear to give radically different results. The Tanimoto [34] similarity measure was used for this work. Tanimoto defines the similarity measure between two index terms i and j to be:

$$S(i, j) = \frac{a_{ij}}{a_{ii} + a_{jj} - a_{ij}}, \tag{1}$$

where $a_{ij}$ represents the number of documents in which both i and j occur as index terms, and $a_{ii}$ represents the number of documents in which term i is used as an index term.

3.4. CREATION OF THE THRESHOLD MATRIX. To find clusters according to the three definitions considered, it was necessary to determine the term-term association matrix. If $C = [C_{ij}]$ represents the document-term matrix,³ then $Z = C^T C$ is the term-term matrix.⁴ When the term-term matrix is suitably normalized as, for example, suggested by Tanimoto, the entries of the normalized term-term matrix⁵ have values between 0 and 1. By applying a cutoff value of T to the similarity matrix, whereby two terms are considered associated if the corresponding entry in the similarity matrix is $\geq T$, this matrix is converted into a binary matrix termed the threshold matrix. The actual production of the term-term matrix was achieved by a series of programs which avoided the problem of multiplying large matrices. A description of these programs is given in [2]. They are applicable to large matrices and are unconstrained by the size of the data set.
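The path from indexed documents to a threshold graph can be sketched in a few lines of Python. This is only an illustration under stated assumptions: it counts co-occurring term pairs directly instead of reproducing the sort-based programs of [2], and the function name `tanimoto_threshold_graph`, its arguments, and the sample terms are hypothetical.

```python
from collections import defaultdict
from itertools import combinations


def tanimoto_threshold_graph(doc_terms, threshold):
    """Return the edges (i, j), i < j, whose Tanimoto similarity is >= threshold.

    doc_terms is a list of documents, each given as a list of index terms.
    This is an illustrative sketch, not the program series described in [2].
    """
    term_count = defaultdict(int)  # a_ii: documents indexed by term i
    pair_count = defaultdict(int)  # a_ij: documents indexed by both i and j
    for terms in doc_terms:
        unique = sorted(set(terms))
        for term in unique:
            term_count[term] += 1
        for i, j in combinations(unique, 2):
            pair_count[(i, j)] += 1

    edges = set()
    for (i, j), a_ij in pair_count.items():
        similarity = a_ij / (term_count[i] + term_count[j] - a_ij)  # Eq. (1)
        if similarity >= threshold:
            edges.add((i, j))
    return edges


# Hypothetical four-document collection.
docs = [
    ["radar", "antenna"],
    ["radar", "antenna", "signal"],
    ["radar", "signal"],
    ["optics"],
]
edges_at_half = tanimoto_threshold_graph(docs, 0.5)
```

Only pairs that co-occur in at least one document are visited, since every other pair has similarity zero and can never meet a positive threshold.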

3.5. CONSTRUCTION OF THE CONNECTED COMPONENTS. In developing term clusters for large document collections, it is helpful to first reduce the graph in question to its connected components. Since elements of clusters must be interrelated to one another, it would be wasteful to attempt to find clusters between terms in separate connected components. By reducing a graph to its connected components and handling each component as a distinct graph, term relations of large document collections can be reduced to a size that is manageable within the core limits of a conventional computer. The connected components are further required since they provide our weakest definition of a cluster. An algorithm was developed which produced the connected components of an input graph and was dependent only upon the number of nodes in the input graph. The output connected components provided both resultant clusters and distinct divisions of the data set for input to the maximal complete subgraph algorithms. The algorithm developed is described in [2]. It is an adaptation of the algorithm developed by Galler and Fischer and described in [19, p. 353]. The adaptation permits one to find connected components in large graphs. A graph with 2084 nodes and 6630 edges developed 475 connected components in 1.87 minutes on an IBM 7094 computer. This time includes the time required to input the graph from magnetic tape, and output the connected components onto magnetic tape. For all graphs discussed in this paper, the times required to find the connected components in the graphs are given in Appendix 3.
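A minimal sketch of the equivalence-class idea behind this step is given below in Python. It is not the adaptation described in [2]: it keeps the whole graph in memory, and the path-halving shortcut inside `find` is a later refinement added here for brevity. The function name `connected_components` and the sample graph are hypothetical.

```python
def connected_components(nodes, edges):
    """Group nodes into connected components with a union-find forest.

    Illustrative sketch only; the tape-oriented adaptation in [2] is not shown.
    """
    parent = {v: v for v in nodes}

    def find(v):
        # Walk up to the root, shortening the path along the way.
        while parent[v] != v:
            parent[v] = parent[parent[v]]
            v = parent[v]
        return v

    for u, v in edges:
        root_u, root_v = find(u), find(v)
        if root_u != root_v:
            parent[root_u] = root_v  # merge the two equivalence classes

    groups = {}
    for v in nodes:
        groups.setdefault(find(v), set()).add(v)
    return list(groups.values())


# Hypothetical six-node graph with one isolated node.
components = connected_components(
    ["a", "b", "c", "d", "e", "f"],
    [("a", "b"), ("b", "c"), ("d", "e")],
)
```

Each edge is examined once, so the work grows with the number of edges rather than with the square of the number of terms.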

3.6. DEVELOPMENT OF THE MAXIMAL COMPLETE SETS. Several algorithms have been developed for generating maximal complete subgraphs of a graph. These algorithms were introduced by Harary and Ross, Bierstone [6], and Bonner [7]. Apparently, the Harary-Ross algorithm was the first developed; however, it involves the computation and manipulation of large matrices for large input graphs. The Bierstone and Bonner algorithms are more adaptable to cluster analysis for large data sets and, as a result, were implemented for our work. Input to the two algorithms consisted of the connected components of the threshold matrices produced previously. Both algorithms were implemented in FORTRAN IV and MAP for the IBM 7094 computer with a 32K-word core memory.

³ An n × m binary matrix representing a data set of n documents and m unique index terms. If document i is indexed by term j, then $C_{ij} = 1$; otherwise $C_{ij} = 0$.

⁴ An m × m symmetric matrix where $Z_{jk}$ represents the number of documents which have been indexed by both terms j and k.

⁵ Also referred to as the similarity matrix.

3.6.1. Overview of the Bierstone Algorithm. The Bierstone algorithm,⁶ described in detail in Appendix 1, was selected as the major cluster producing algorithm for this paper. The representation of a graph is input to the algorithm with each node $p_j$ ($j = 1, \ldots, n$) assigned a unique number. For each node $p_j$, there is associated a set $M_j$ where $M_j = \{p_k \mid$ the pair $(p_j, p_k)$ represents an edge of the graph and $k > j\}$. The algorithm utilizes a set of elements C where complete subgraphs are built. The algorithm attempts to find complete subgraphs of the set of connected nodes represented by the set $\{p_j\} \cup M_j$ ($M_j \neq \emptyset$) which can be combined with the set of complete subgraphs $C_n$ that have already been developed or can be introduced as new unique complete subgraphs of the data set. Upon termination of the algorithm, all of the complete subgraphs $C_n$ represent maximal complete subgraphs of the input set.

3.6.2. Overview of the Bonner Algorithm. The Bonner algorithm,⁷ described in detail in Appendix 2, requires input in the form of a threshold matrix with each node $p_j$ ($j = 1, \ldots, m$) assigned a unique number. The Bonner algorithm builds clusters one at a time, while retaining needed information in a series of pushdown lists reflecting the elements of the cluster itself, candidates for addition to the cluster, and an identifier of the last node added to the cluster. Each time a node is added to the cluster, the list of candidate nodes is updated to reflect those nodes connected to, but not contained within, the cluster. A complete subgraph is formed when there are no candidates for addition to the cluster with a larger numeric representation than the last node added.
This complete subgraph and the information saved in the associated tables serve as the starting point for the production of the next cluster. Due to the iterative nature of the algorithm, if, upon forming a complete subgraph, the candidate list contains nodes which are not part of the cluster just formed, it can be determined that the complete subgraph just formed does not represent a unique maximal complete subgraph of the data base.
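To make the notion of a maximal complete subgraph concrete, the Python sketch below enumerates them with the basic Bron-Kerbosch recursion. This is a substituted, well-known technique and is neither the Bierstone nor the Bonner algorithm, whose full statements are in the appendixes. The edge set is an assumption: it is rebuilt from the four maximal complete sets listed in the Figure 1 legend, and the helper names are hypothetical.

```python
from itertools import combinations


def graph_from_cliques(cliques):
    """Build an adjacency map by joining every pair of nodes inside each set."""
    adjacency = {}
    for clique in cliques:
        for v in clique:
            adjacency.setdefault(v, set())
        for u, v in combinations(clique, 2):
            adjacency[u].add(v)
            adjacency[v].add(u)
    return adjacency


def maximal_cliques(adjacency):
    """Yield each maximal complete subgraph once (basic Bron-Kerbosch)."""
    def expand(clique, candidates, excluded):
        if not candidates and not excluded:
            yield frozenset(clique)  # nothing can extend it: maximal
            return
        for v in list(candidates):
            yield from expand(clique | {v},
                              candidates & adjacency[v],
                              excluded & adjacency[v])
            candidates = candidates - {v}
            excluded = excluded | {v}

    yield from expand(set(), set(adjacency), set())


# Assumed edges: reconstructed from the Figure 1 legend, not from the figure.
FIGURE_1_SETS = [
    {"Bombers", "Missiles", "Strategic"},
    {"Legal", "Sabotage", "Recruitment", "Propaganda"},
    {"Propaganda", "Clandestine", "Guerrillas", "Insurgency", "Paramilitary"},
    {"Motivations", "Clandestine", "Guerrillas", "Insurgency", "Paramilitary"},
]
found = set(maximal_cliques(graph_from_cliques(FIGURE_1_SETS)))
```

The `excluded` set plays a role loosely comparable to the rejection tests discussed in this section: a complete subgraph that could still be extended by an already-processed node is never reported.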

4. Analysis and Comparison of the Bierstone and the Bonner Algorithms

Bonner asserts that, due to storage limitations imposed by the machine he used (an IBM 7090 with a memory size of 32K words), the maximum allowable sample size the algorithm can handle is 350 input terms. Bonner does not subdivide the input threshold matrix into a series of disjoint threshold matrices (although his examples show that the elements may be disjoint), a step that would permit each of the disjoint matrices to be treated as a separate input data set. Assuming there are disjoint sets, such a subdivision could enable an appreciable increase in the maximum allowable sample size. As an example, for the threshold value T = 0.4, our data set, which consists of 2084 unique index terms, subdivided into 475 disjoint threshold matrices (each corresponding to a connected component of the representative graph). Each of the sets was then used as input to Bonner's algorithm with no problems arising concerning space. Similarly, the Bierstone algorithm was able to handle each of the input graphs with no problem concerning size.

⁶ The algorithm was developed by Mr. E. Bierstone, a student in the mathematics department at the University of Toronto, and appears in unpublished notes by Mr. Bierstone [6]. A minor modification was required to complete the algorithm [1, 2]. To the best of our knowledge the algorithm has not been implemented previously.

⁷ The Bonner algorithm as published in [7] omits a significant step. Appendix 2 provides the corrected algorithm.

The maximum size that can be handled by the Bierstone algorithm depends upon the size of the input graph and the number of maximal complete subgraphs present in the graph. A graph of up to approximately 1000 nodes can be handled. In [1, 2] we describe an alternative implementation of the Bierstone algorithm which conserves storage space by not requiring the entire connection matrix to appear in core at one time. This alteration permits the handling of input graphs containing at least 2000 nodes. These remarks apply to an IBM 7094 in which the cluster algorithm and data space assigned consist of between 20,000 and 25,000 words of core space.

Bonner claims that his algorithm offers an improvement over previous methods [22, 27] since he does not output the same cluster repeatedly or continually print out subsets of clusters already found. Indeed, this offers an improvement in the type of output produced, but its saving in processing time does not appear to be that great. In this study, the larger clusters of the data sets were produced by the Bierstone algorithm in significantly less time than it took for the Bonner algorithm. An analysis of the Bonner algorithm shows that, although each cluster is output only once with none of its subsets output, many such subsets and duplicate clusters were found by the algorithm and rejected only when the candidate list C was found to be nonempty after complete production of the cluster (Step 6 of the algorithm).
Since clusters are built up one item at a time, beginning with the first index term, the production of clusters is dependent upon the numerical values originally assigned to the input terms. The time involved in finding all clusters varies according to the location of the clusters with respect to the numbering scheme. This fact was most evident in the results produced by this algorithm for our data base. A timing algorithm was utilized to determine how long it took the Bonner algorithm to produce the resultant set of clusters from each input set. These results were compared with the time needed by the Bierstone algorithm to produce the exact same clusters. Table I shows the comparative results. In several instances, the Bonner algorithm worked as fast or faster than the Bierstone algorithm. However, such figures are misleading as most all of these input sets contained only from one to three small clusters. The most indicative comparative results are reflected by those input sets which contained several clusters of varying size. In all but one case, the Bierstone algorithm was at least twice as fast as the Bonner algorithm. In the two largest input sets, the Bierstone algorithm proved to be much faster. One large and highly connected input set consisting of 72 terms was found to have three maximal complete subgraphs of 64 terms each and five smaller maximal complete subgraphs of six terms each. The Bierstone algorithm took 0.133 seconds to develop the clusters. The Bonner algorithm needed 2.183 seconds to produce the same results. A somewhat smaller input set (67 terms) was found to have five maximal complete subgraphs of 47 terms each and six additional maximal complete subgraphs of 3 or 4 terms each in 0.733 seconds by the Bierstone algorithm (this

Journal of the Association for Computing Machinery, Vol. 17, No. 4, October 1970

An Analysis of Some Graph Theoretical Cluster Techniques

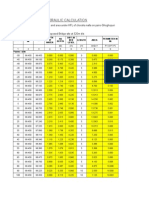

T A B L E I. COMPARATIVE RESULTS OF TIME REQUIREMENTS FOR THE BONNER AND BIERSTONE ALGORITHMS

579

A = T i m e r e q u i r e d f o r t h e B i e r s t o n e a l g o r i t h m to find t h e m a x i m a l complete s u b g r a p h c l u s t e r s in a n i n p u t set. B = T i m e r e q u i r e d for t h e B o n n e r a l g o r i t h m to find t h e s a m e m a x i m a l c o m p l e t e s u b g r a p h c l u s t e r s in t h e s a m e d a t a s e t .

Comparative time No. applicable data sets

B < A B = A B = 1.5A

B = 2A

B = 3A

11 a 38 b 4 31 12

B=4A

B = 5A

1

3

B = 10A B = 17A B > 200A

1 1 1

Of t h e s e : 8 c o n t a i n e d one c l u s t e r ; 1 c o n t a i n e d t w o c l u s t e r s ; 2 c o n t a i n e d three clusters. b Of these: 15 c o n t a i n e d one c l u s t e r ; 14 c o n t a i n e d t w o c l u s t e r s ; 5 c o n t a i n e d three clusters.

was the most processing time required of all the input sets). The Bonner algorithm, in processing the same data set, worked for nearly two minutes without producing final results. The activities of the Bonner algorithm were carefully analyzed by means of detailed debug printouts. It was discovered that the five large clusters were produced quickly and then the algorithm proceeded to spend the rest of its time rejecting subsets of these clusters in an attempt to work itself back through the data set to find the other clusters. The failure of the Bonner algorithm to produce results for the above data set, while having relatively little trouble in finding the maximal complete subgraphs of a larger and more complex input set, demonstrates the fact that processing time for the algorithm is highly dependent upon the original numerical values assigned to the data terms. In the first of the two examples above, the original numbering scheme was such that the vast majority of the complete subgraphs of the large clusters already found were rejected without having to completely reproduce the new cluster (Step 8 of the algorithm). However, in the second example, due to the location of the nodes which caused the distinction between the five large clusters, a greater percentage of the complete subgraphs of the larger maximal complete subgraphs had to be completely produced by the algorithm and finally rejected only when it was discovered that, upon producing the cluster, the candidate list C~ was not empty (Step 6 of the algorithm). For a maximal complete subgraph containing n nodes, the number of complete subgraphs contained within it. becomes excessive when n is large. For any maximal complete subgraph containing n nodes, we can produce (~-1) complete subgraphs containing n -- 1 nodes; we also can find (~-2) complete subgraphs with n -- 2 nodes. 
By continuing this procedure, one can see that the total number of complete subgraphs contained in any maximal complete subgraph of n terms is $\sum_{k=3}^{n-1} \binom{n}{k}$. From the binomial theorem, we know that $2^n = \sum_{k=0}^{n} \binom{n}{k}$. Therefore, removing the terms for k = 0, 1, 2, and n, the total number of complete subgraphs of a maximal complete subgraph of degree n is equal to $2^n - 2 - n - [n(n-1)/2]$. In the case where n = 37, as was found in the above data set, we therefore have $1.37 \times 10^{11}$ complete subgraphs that may be tested by Bonner's algorithm. Therefore, any significant decrease in the number of complete subgraphs eliminated at Step 8 of the algorithm could cause the processing time of an involved input set to get out of hand. This cannot happen with the Bierstone algorithm.
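The count can be checked numerically with a short Python sketch. It assumes, as the closed form implies, that the sum runs over subsets of 3 to n - 1 nodes; the function names are hypothetical.

```python
from math import comb


def count_by_summation(n):
    """Sum the binomial coefficients C(n, k) for k = 3, ..., n - 1."""
    return sum(comb(n, k) for k in range(3, n))


def count_by_closed_form(n):
    """Evaluate 2^n - 2 - n - n(n - 1)/2 with exact integer arithmetic."""
    return 2**n - 2 - n - n * (n - 1) // 2


subgraphs_for_37 = count_by_closed_form(37)  # roughly 1.37e11
```

Both functions agree for every n of at least 3, and for n = 37 the result is a little over 137 billion, in line with the figure quoted in the text.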

5. Refinement of Clusters via Gotlieb and Kumar Algorithm

Clusters formed by maximal complete subgraphs may overlap for highly connected input sets. For example, one of the larger data sets processed by the Bierstone algorithm was found to have three maximal complete subgraphs of 64 terms, each of which had 63 terms in common with the other two maximal complete subgraphs. An additional five smaller maximal complete subgraphs of the same input set were found to contain 6 terms each, 5 of which were common to all five maximal complete subgraphs. As was previously discussed, the maximal complete subgraphs form our strictest definition of a cluster. It is evident from the above example that such a definition may not be desirable in a system whose aim is to produce a concise set of clusters of highly related terms. In the above example, three distinct clusters of 64 terms are formed due to the fact that three nodes, all of which are connected to 63 common nodes, have no interconnections. It would seem that the number of common connections these nodes possess should override the fact that the nodes are not directly related. Gotlieb and Kumar [16] have developed a procedure for combining such clusters into diffuse classes of index terms. They form a cluster-cluster similarity matrix with entries $d_{ij}$ defined as

$$d_{ij} = 1 - \frac{|C_i \cap C_j|}{|C_i \cup C_j|}, \qquad (2)$$

where $|C_i \cap C_j|$ is equal to the number of terms the two maximal complete sets have in common, and $|C_i \cup C_j|$ is equal to the total number of unique terms contained in cluster $C_i$ and in cluster $C_j$. The ratio $|C_i \cap C_j| / |C_i \cup C_j|$ is the proportion of terms contained jointly in the two clusters, so $d_{ij}$ is one minus that proportion. As with the term-term similarity matrix, we set a threshold level δ for the cluster-cluster similarity matrix; the resulting binary matrix again represents a graph. The entries $d_{ij}$ are essentially the set theoretic representation of the Tanimoto measure. (It is actually one minus the Tanimoto measure. The entries are used to be consistent with Gotlieb and Kumar's paper.) Clusters of the clusters may now be found by considering the matrix D as our input graph. Any of the criteria for determining clusters of an input graph can be selected for producing clusters of the input maximal complete subgraphs. Gotlieb and Kumar state that clusters should be the maximal complete sets; that is, the same definition as they use to form the clusters between terms.⁵ For our application, we require that the elements of the second generation clusters form a connected component. The clustering of clusters is repeated, with the values of $d_{ij}$ computed from the resultant clusters of the previous iteration, until a point is reached where no combinations of the elements can be made.

Results were obtained for the threshold levels δ = 0.5 and δ = 0.7. Rather than find the diffuse concepts by means of a separate pass on the resultant maximal complete subgraphs output by the Bierstone algorithm, Gotlieb's combining scheme was incorporated as part of the Bierstone algorithm. This additional processing roughly doubled the required execution time for producing clusters. The diffuse concepts produced from the known maximal complete subgraphs appear, on a subjective basis, to be quite good. For example, both of the δ values combined the previously mentioned sample data set into two diffuse concepts, one consisting of 66 nodes (containing the 63 common nodes plus the three nodes which were not interconnected) and the other consisting of six nodes (containing the five common nodes plus the five nodes which were connected to these nodes in the original maximal complete subgraphs).

⁵ Although Gotlieb and Kumar state that they use the maximal complete subgraphs of the newly formed graph to develop diffuse concepts, the experimental results provided in their paper suggest that the connected components were actually used.
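The combining procedure of this section can be sketched as follows. This is an illustrative sketch rather than the program used in the study. It assumes that two clusters are linked when their distance from equation (2) is at most the threshold (the text does not state the direction of the comparison), and it groups linked clusters by connected components, as required for this application. The names `distance`, `merge_once`, and `combine` are hypothetical.

```python
from itertools import combinations


def distance(a: frozenset, b: frozenset) -> float:
    """Equation (2): one minus the shared fraction of the two clusters' terms."""
    return 1.0 - len(a & b) / len(a | b)


def merge_once(clusters, delta):
    """Link clusters with distance <= delta (assumed rule) and merge each linked group."""
    clusters = list(clusters)
    parent = list(range(len(clusters)))

    def find(x):
        # Union-find lookup with path halving.
        while parent[x] != x:
            parent[x] = parent[parent[x]]
            x = parent[x]
        return x

    for i, j in combinations(range(len(clusters)), 2):
        if distance(clusters[i], clusters[j]) <= delta:
            parent[find(i)] = find(j)

    groups = {}
    for idx, cluster in enumerate(clusters):
        root = find(idx)
        groups[root] = groups.get(root, frozenset()) | cluster
    return set(groups.values())


def combine(clusters, delta):
    """Repeat the merge until no further combinations can be made."""
    current = set(clusters)
    while True:
        merged = merge_once(current, delta)
        if merged == current:
            return merged
        current = merged


# Three 64-term clusters sharing 63 terms, as in the example of this section.
common = frozenset(range(63))
cliques = [common | {63 + k} for k in range(3)]
result = combine(cliques, delta=0.5)
print(len(result), len(next(iter(result))))  # 1 66
```

For the three 64-term clusters, each pairwise distance is 1 - 63/65, about 0.03, so either threshold used in the study merges them into the single 66-term diffuse concept.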

6. Experimental Results

The clustering procedure using the Bierstone algorithm was applied to several different threshold matrices of the original term-term matrix. Threshold matrices for values of T = 0.4, T = 0.5, T = 0.6, and T = 0.7 were generated. Each of these threshold matrices was then divided into a set of disjoint threshold matrices (representing the connected components of the corresponding graph) and used as input to the Bierstone algorithm. Threshold matrices were constructed for values of T = 0.1, T = 0.2, and T = 0.3 but, since each matrix contained one connected component of at least 1150 terms, the Bierstone algorithm was not applied.

It was found that the number of nodes contained in the largest connected component of the graph described by the threshold matrix changes most sharply between the threshold matrices of T = 0.3 (1150 nodes) and T = 0.4 (72 nodes). The fact that the size of the largest connected component in the threshold matrices for T = 0.4, T = 0.5 (69 nodes), T = 0.6 (67 nodes), and T = 0.7 (66 nodes) remains fairly constant, while the same values for T = 0.1 (3783 nodes), T = 0.2 (2797 nodes), and T = 0.3 vary so greatly, tends to indicate that some stabilization of the threshold matrix occurs around a threshold value of T = 0.4.

For T = 0.4, two additional clustering procedures were applied. In these two cases, maximal complete subgraphs of a connected component were combined via the clustering technique described by Gotlieb and Kumar using threshold values δ = 0.5 and δ = 0.7. These grouped maximal complete subgraphs were considered as the resultant clusters.

6.1. STRUCTURAL COMPOSITION OF CLUSTERS. For values of T = 0.4 and T = 0.5, the average size of the clusters defined by the connected components was 6.5 terms. Clusters defined by the maximal complete subgraphs of the connected components had an average of 5.1 terms per cluster. However, the maximal complete subgraphs of the connected components introduced approximately 60 percent more clusters. This was to be expected as many of the connected components contained several maximal complete subgraphs consisting of some of the terms of the connected component. For values of T = 0.6 and T = 0.7, very little change in the average size of the


clusters was detected between clusters defined by connected components and clusters defined by maximal complete subgraphs of connected components. The total number of clusters defined by maximal complete subgraphs was one less than those defined by connected components for both T = 0.6 and T = 0.7. The reason for each of the preceding results can be determined by considering the composition of the two threshold matrices for these values of T. In each case, an extremely high percentage of the connected components were also maximal complete subgraphs (97.3 percent for T = 0.6 and 99.2 percent for T = 0.7), and most of the terms of the input data set were contained in those maximal complete subgraphs (91.6 percent for T = 0.6 and 93.3 percent for T = 0.7). Thus, very few additional clusters were produced by searching for maximal complete subgraphs in the small number of connected components which were not themselves maximal complete subgraphs. Those found had very little effect on the average size of the clusters produced. The total number of clusters was reduced because fewer clusters were added by the discovery of connected components which contained maximal complete subgraphs than were deleted by the presence of connected components which contained no maximal complete subgraphs.

As was to be expected, when the Gotlieb-Kumar algorithm was used to combine maximal complete subgraphs found in the threshold matrix for T = 0.4, the total number of resultant clusters was reduced. However, interestingly enough, the average size of the clusters produced decreased only slightly. This apparently was due to the fact that a good number of maximal complete subgraphs of two elements, previously not considered as clusters, combined to form clusters of three and four terms. This same reason could be given to explain why the number of clusters produced for δ = 0.7 was 4 percent greater than the number produced for δ = 0.5. Conceptually, it would seem that clusters produced for a larger value of δ, which induces more combining of clusters, would produce fewer and larger clusters. The clusters produced for δ = 0.7 were slightly larger on the average than those produced for δ = 0.5.

One further point of interest is that the average size of the clusters defined by maximal complete subgraphs was approximately constant for all values of T (see Figures 2 and 3). At the same time, the number of clusters produced for the lower values of T was significantly greater than the number produced for the larger values of T. For example, for T = 0.4, 402 clusters were found, nearly three times the 148 found for T = 0.7. Apparently, the threshold value applied does not affect the average size of the clusters produced, but more directly affects the number of clusters produced. Admittedly, all the clusters produced were results of the same data base, but it seems that this is a fair conclusion to make from the work conducted in this study. It would be of interest to apply the techniques of this study to other data sets to determine if similar results would be obtained.

6.2. SUMMARY OF MAJOR CONCLUSIONS. The following list summarizes the major conclusions of this study.

1. The Bierstone algorithm, which develops maximal complete subgraphs for an input graph, appears to be the most efficient one presently available. It avoids the problems of repeatedly outputting the same maximal complete subgraph and of outputting complete subgraphs which are not maximal. At the same time, it operates significantly faster than recent algorithms proposed by Bonner [7] and Spärck-Jones [39].


FIG. 2. Average cluster size plotted against threshold value T, for connected component clusters and maximal complete subgraph clusters. [Plot not reproduced.]

FIG. 3. Total number of clusters plotted against threshold value T, for connected component clusters and maximal complete subgraph clusters. [Plot not reproduced.]

2. Threshold matrices produced for values of T ≥ 0.6 yield basically the same clusters regardless of which of the three cluster definitions is used. This is substantiated by the data in Appendix 3 which shows the large percentage of connected components which are also maximal complete subgraphs for large values of T. This observation, if found to be valid for other data bases, could save considerable computer time by requiring the use of only the algorithm to find the connected components of the graph.


3. The average size of the clusters defined by the maximal complete subgraphs does not appear to be dependent upon the threshold value applied. However, the total number of clusters produced increases significantly as the threshold value decreases.

4. Clusters defined by connected components of the threshold matrix for values of T < 0.5 may be large in size and contain highly related subgraphs which have little, if any, interrelatedness. Such subgraphs may become part of the same connected component cluster through the existence of general terms which are strongly related to the content terms of several unrelated subsets of the connected component.

5. Clusters defined by maximal complete subgraphs of the connected components of the threshold matrix for values of T < 0.5 tend to subdivide the connected component into highly related and overlapping clusters. Such overlapping clusters will generally reflect specific aspects of the same general area of interest.

6. Clusters defined by grouped maximal complete subgraphs tend to combine highly overlapping clusters into one general cluster. Such clusters usually are composed of the elements contained in the union of the overlapping clusters.

7. Clusters produced from the threshold matrices for values T > 0.6 tend to divide the terms into sets of disjoint clusters which are small in size and general in nature. For overlapping maximal complete subgraphs found for lower values of T, the representative clusters for higher values of T will generally correspond to the intersection of the elements contained in the overlapping maximal complete subgraphs.

It is important to note that the conclusions and evaluations presented in this paper are based on three different cluster definitions produced from four different threshold matrices on one data base. The evaluation of a cluster and the determination of its relevance to the data set can be a function of what clusters are considered. It is clear from this study that no single threshold value or cluster definition can be guaranteed to produce worthwhile clusters regardless of the input data set. Rather, several different threshold values and cluster definitions should be tested to determine which produces the best results for the particular data set. The user can gain greater insight into the structure of the data base by viewing such alternative clusters. The decision of what parameters to use in defining clusters of a data set should be dependent upon how the clustering process is to be implemented and, in light of this, what type of clusters will provide the most meaningful results. This will be explored in a companion paper.
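Since conclusion 2 suggests that, for large T, only the connected components need to be found, the thresholding and component-finding step is sketched below. This is a minimal illustration, not the program used in the study. It assumes that an edge is placed between two terms when their similarity is at least T (the comparison rule is not restated in this section), and the small similarity matrix is invented for the example.

```python
def threshold_graph(sim, t):
    """Adjacency sets of the binary threshold matrix (assumed rule: sim[i][j] >= t)."""
    n = len(sim)
    return {i: {j for j in range(n) if j != i and sim[i][j] >= t} for i in range(n)}


def connected_components(adj):
    """Return the connected components of the graph as a list of node sets."""
    seen, components = set(), []
    for start in adj:
        if start in seen:
            continue
        seen.add(start)
        stack, component = [start], set()
        while stack:
            node = stack.pop()
            component.add(node)
            for neighbor in adj[node]:
                if neighbor not in seen:
                    seen.add(neighbor)
                    stack.append(neighbor)
        components.append(component)
    return components


# Hypothetical 4-term similarity matrix, for illustration only.
sim = [
    [1.00, 0.80, 0.10, 0.00],
    [0.80, 1.00, 0.20, 0.00],
    [0.10, 0.20, 1.00, 0.65],
    [0.00, 0.00, 0.65, 1.00],
]
for t in (0.15, 0.6):
    clusters = connected_components(threshold_graph(sim, t))
    print(t, sorted(sorted(c) for c in clusters))
```

At the lower threshold the four terms form one component; at the higher threshold they split into two pairs, mirroring on a small scale the behavior described in conclusions 4 and 7. Isolated terms appear here as single-node components and would be dropped before counting clusters.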

Appendix 1. The Bierstone Algorithm for Finding Maximal Complete Subgraphs

The following notation is used by the algorithm:

$p_k$: the kth node of the data set.

$M_j$: an array containing all nodes $p_k$ to which node $p_j$ is connected such that k > j.

$C_i$: a set of arrays in which complete subgraphs are built; upon termination of the algorithm these complete subgraphs will be maximal.

$W$: a temporary storage location which contains those nodes of the $M_j$ being processed which have not yet been put into some member of $C_i$.

$S$, $T$: temporary storage locations.


The operation of the algorithm is as follows.

ALGORITHM

Step 1. i = 0, j = number of nodes in the input data set.

Step 2. j = j - 1.

Step 3. If $M_j = \emptyset$, go to Step 2; otherwise, continue to Step 4.

Step 4. For each $p_k \in M_j$, set i = i + 1 and define the complete subgraph $C_i = \{p_j, p_k\}$.

Step 5. j = j - 1.

Step 6. If j = 0, all input sets $M_j$ have been processed and the set of arrays C represents the nodal sets of all maximal complete subgraphs of the input data set; if j ≠ 0, continue to Step 7.

Step 7. If $M_j = \emptyset$, go to Step 5 to get the next input array. Otherwise, set $W = M_j$, L = i (the number of complete subgraphs produced so far), n = 0, and continue to Step 8.

Step 8. n = n + 1.

Step 9. If n > L, all complete subgraphs $C_k$ have been searched: go to Step 17; otherwise, continue to Step 10.

Step 10. Define the complete subgraph $T = C_n \cap M_j$. If T contains fewer than 2 nodes, go to Step 8; otherwise, delete from W all nodes contained in $T \cap W$ and go to Step 11.

Step 11. If $T = C_n$, go to Step 15.

Step 12. If $T = M_j$, set i = i + 1 and define the complete subgraph $C_i = T \cup \{p_j\}$, and go to Step 5 to get the next input array; otherwise, continue to Step 13.

Step 13. If T is a subset of any complete subgraph $C_q$ (q = 1, ..., n - 1, n + 1, ..., i) that contains $p_j$, ignore this complete subgraph as it is already contained in $C_q$, and go to Step 8; otherwise, set $S = T \cup \{p_j\}$ and continue to Step 14.

Step 14. If some complete subgraph $C_q$ (q = L + 1, ..., i) is a subset of the complete subgraph S, redefine the complete subgraph $C_q = S$ and delete any $C_r$ (r = q + 1, ..., i) which is also a subset of S; otherwise, set i = i + 1 and define the new complete subgraph $C_i = S$. Go to Step 8.

Step 15. Redefine the complete subgraph $C_n$ as $C_n = C_n \cup \{p_j\}$. Delete any $C_q$ (q = n + 1, ..., i) that is a subset of the altered $C_n$. Continue to Step 16.

Step 16. If $T = M_j$, go to Step 5 to get the next input array; otherwise, go to Step 8.

Step 17. For each $p_k$ remaining in W, set i = i + 1 and create the new complete subgraph $C_i = \{p_j, p_k\}$. Go to Step 5 to get a new input array.
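An implementation of the steps above is easiest to check against a small graph whose maximal complete subgraphs are known. The sketch below is not the Bierstone procedure: it builds the input arrays $M_j$ from adjacency sets and then enumerates maximal complete subgraphs by brute force, directly from the definition, which is practical only for a handful of nodes. The function names and the five-node graph are hypothetical.

```python
from itertools import combinations


def higher_neighbors(adj):
    """M_j for every node j: the neighbors of j whose number is greater than j."""
    return {j: sorted(k for k in adj[j] if k > j) for j in adj}


def is_complete(nodes, adj):
    """True when every pair of the given nodes is joined by an edge."""
    return all(b in adj[a] for a, b in combinations(nodes, 2))


def maximal_complete_subgraphs(adj, min_size=2):
    """Brute-force reference: test candidate node sets from largest to smallest."""
    nodes = sorted(adj)
    found = []
    for size in range(len(nodes), min_size - 1, -1):
        for candidate in combinations(nodes, size):
            members = set(candidate)
            # A complete set is maximal unless a larger one already found contains it.
            if is_complete(candidate, adj) and not any(members < c for c in found):
                found.append(members)
    return found


# Triangle 1-2-3 with a tail 3-4-5.
adj = {1: {2, 3}, 2: {1, 3}, 3: {1, 2, 4}, 4: {3, 5}, 5: {4}}
print(higher_neighbors(adj))
print([sorted(c) for c in maximal_complete_subgraphs(adj)])
```

Because the number of candidate sets grows as $2^n$, this enumerator serves only as a correctness reference on small inputs.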

Appendix 2. The Bonner Cluster-Building Algorithm

This algorithm builds up a cluster (maximal complete subgraph) one object at a time, keeping track of items at each level i of the buildup. The following items are used by the algorithm:

$A_i$: an array representing the set of objects in the cluster at this point.

$C_i$: an array representing the set of objects which could possibly be added to $A_i$ to further increase the cluster.

$L_i$: an array of numbers where the ith element represents the last object of $C_i$ to be considered for addition to the cluster.

$S_{ij}$: the input threshold matrix, where $S_{L_i}$ represents the set of all members connected to object $L_i$.

Elements for A, C, and L are stored for each i which is smaller than or equal to the present i. The algorithm proceeds as follows.

ALGORITHM

Step 1. Set i = 1; $C_i$ = all objects, $A_i$ = no objects, $L_i$ = 1.

Step 2. Search $C_i$ for the presence of object $L_i$. If it is present, go to Step 3; if not, set $L_i = L_i + 1$ and go to Step 5.

Step 3. Set $C_{i+1} = \{C_i \cap S_{L_i}\} - L_i$, $A_{i+1} = A_i \cup L_i$.

Step 4. Set $L_{i+1} = L_i + 1$, i = i + 1.

Step 5. When no element of $C_i$ is larger than $L_i$, a complete subgraph has been found: go to Step 6; otherwise, go to Step 2.

Step 6. Set $T = A_i$. If $C_i$ is empty, store $A_i$ as a maximal complete subgraph. If $C_i$ is not empty, this means either the complete subgraph $A_i$ has been found before or it is a subset of a maximal complete subgraph found before.

Step 7. i = i - 1. If i = 0, all maximal complete subgraphs have been found: stop; otherwise, go to Step 8.

Step 8. Form the set of all objects in $C_i$ with numbers greater than $L_i$. If these are not a subset of T, go to Step 9. If they are a subset of T, it means that the complete subgraphs found from these objects would only be a subset of the maximal complete subgraph T just found; therefore, go to Step 7.

Step 9. $L_i = L_i + 1$. Go to Step 2.

Appendix 3. Structural Composition of Threshold Matrices

These are shown in Table II.

TABLE II. STRUCTURAL COMPOSITION OF THRESHOLD MATRICES

T(a)   Edges    Nodes(b)   No. C.C.(c)   N.N.(d)   % Nodes in MCS(e)   % C.C. in MCS(f)   Time, min.(g)
0.1    22,993   3,848       24           3,783      1.3                87.5               1.07
0.2    12,476   3,255      146           2,797      9.1                82.2               0.68
0.3     8,542   2,603      349           1,150     22.5                68.8               1.58
0.4     6,630   2,084      475              72     41.6                69.1               1.87
0.5     6,532   2,001      450              69     41.1                68.0               1.80
0.6     4,772   1,314      411              67     91.6                97.3               1.85
0.7     4,696   1,222      379              66     93.3                99.2               1.48

(a) T is the threshold level.
(b) Nodes: The number of nodes that are connected to at least one other node. The total number of terms (nodes) in the data set was 3,950.
(c) No. C.C. is the number of connected components in the graph.
(d) N.N. is the number of nodes in the largest connected component.
(e) % Nodes in MCS: The percentage of nodes contained in connected components which form maximal complete subgraphs.
(f) % C.C. in MCS: The percentage of the connected components which form maximal complete subgraphs.
(g) Time is the time in minutes required to find all connected components of the graph.
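The two percentage columns of Table II can be computed from the connected components alone. A connected component with m nodes is itself a maximal complete subgraph exactly when it contains all m(m - 1)/2 possible edges, since no node outside the component is adjacent to it. The sketch below illustrates this under the assumption that the components passed in exclude isolated nodes, as in footnote (b); the function names and the six-node graph are hypothetical.

```python
def is_complete_component(component, adj):
    """A connected component is a maximal complete subgraph iff it has every possible edge."""
    m = len(component)
    edge_count = sum(len(adj[v] & component) for v in component) // 2
    return edge_count == m * (m - 1) // 2


def mcs_statistics(components, adj):
    """Return (% nodes in MCS, % C.C. in MCS) for the given connected components."""
    complete = [c for c in components if is_complete_component(c, adj)]
    total_nodes = sum(len(c) for c in components)
    pct_nodes = 100.0 * sum(len(c) for c in complete) / total_nodes
    pct_components = 100.0 * len(complete) / len(components)
    return pct_nodes, pct_components


# One triangle (complete) and one three-node path (not complete).
adj = {0: {1, 2}, 1: {0, 2}, 2: {0, 1}, 3: {4}, 4: {3, 5}, 5: {4}}
components = [{0, 1, 2}, {3, 4, 5}]
print(mcs_statistics(components, adj))  # (50.0, 50.0)
```

When both percentages are close to 100, as for T = 0.6 and T = 0.7 in Table II, a search for maximal complete subgraphs adds little beyond the connected components themselves.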


ACKNOWLEDGMENTS. The authors wish to express their appreciation to Mr. H. Edmund Stiles, who generously made the corpus available to us in machine readable form. We also wish to express our appreciation to Professor C. C. Gotlieb of the University of Toronto for calling our attention to the Bierstone algorithm described in this paper.

REFERENCES

[NOTE. References preceded by an asterisk are not cited in text.]

1. AUGUSTSON, J. G., AND MINKER, J. An analysis of some graph theoretical cluster techniques. Computer Science Center Tech. Rep. No. TR70-106, U. of Maryland, College Park, Md., Jan. 1970.
2. - -. Experiments with graph theoretical clustering techniques. Thesis submitted to the Faculty of the Graduate School of the University of Maryland in partial fulfillment of requirements for MS degree, U. of Maryland, College Park, Md., 1969.
3. BAKER, F. B. Information retrieval based upon latent class analysis. J. ACM 9, 4 (Oct. 1962), 512-521.
4. BALL, G. H. Data analysis in the social sciences: What about the details? Proc. AFIPS 1965 Fall Joint Comput. Conf., Vol. 27, Pt. 1, pp. 533-559.
*5. BERGE, C., AND GHOUILA-HOURI, A. Programming, Games, and Networks. Wiley, New York, 1965.
6. BIERSTONE, E. Cliques and generalized cliques in a finite linear graph. Unpublished rep.
7. BONNER, R. E. On some clustering techniques. IBM J. Res. Develop. 8, 1 (Jan. 1964), 22-32.
8. BORKO, H. The construction of an empirically based mathematically derived classification system. Rep. No. SP-585, System Development Corp., Santa Monica, Calif., Oct. 26, 1961.
*9. - -. Research in document classification and file organization. Rep. No. SP-1423, System Development Corp., Santa Monica, Calif., 1963.
*10. - - AND BERNICK, M. D. Automatic document classification. Part II--Additional experiments. Tech. Memo TM-771/001/00, System Development Corp., Santa Monica, Calif., Oct. 18, 1963.
*11. COSATI subject category list (DoD-modified). AD-624 000, Defense Documentation Center, Defense Supply Agency, Oct. 1965.
12. DALE, A. G., AND DALE, N. Some clumping experiments for information retrieval. LRC 64-WPI1, Linguistic Research Center, U. of Texas, Austin, Texas, Feb. 1964.
13. DATTOLA, R. T. A fast algorithm for automatic classification. In Information Storage and Retrieval series, Scientific Rep. No. ISR-14, Cornell U., Ithaca, N. Y., Oct. 1968, Ch. V.
14. DOYLE, L. B. Breaking the cost barrier in automatic classification. Rep. No. SP-2516, System Development Corp., Santa Monica, Calif., July 1966.
*15. EURATOM-thesaurus: Keywords used within EURATOM's nuclear energy documentation project. Directorate "Dissemination of Information," Center for Information and Documentation, 1964.
16. GOTLIEB, C. C., AND KUMAR, S. Semantic clustering of index terms. J. ACM 15, 4 (Oct. 1968), 493-513.
*17. GIULIANO, V. E., AND JONES, P. E. Linear associative information retrieval. In Howerton, P. W., and Weeks, D. C. (Eds.), Vistas in Information Handling, Vol. 1, Spartan Books, Washington, D. C., 1963, Ch. 2, pp. 30-46.
18. IVIE, E. L. Search procedures based on measures of relatedness between documents. Ph.D. thesis, MIT, Cambridge, Mass., May 1966.
19. KNUTH, D. E. The Art of Computer Programming: Vol. 1, Fundamental Algorithms. Addison-Wesley, Reading, Mass., 1968.
*20. KOCHEN, M., AND WONG, E. Concerning the possibility of a cooperative information exchange. IBM J. Res. Develop. 6, 2 (April 1962), 270-271.


21. KUHNS, J. L. The continuum of coefficients of association. In Stevens, M. E., Giuliano, V. E., and Heilprin, L. B. (Eds.), Proc. Symposium in Statistical Association Methods for Mechanized Documentation, US Dep. of Commerce, Washington, D. C., Dec. 1965.
22. - -. Mathematical analysis of correlation clusters. In Word correlation and automatic indexing, Progress Rep. No. 2, C82-OU1, Ramo-Wooldridge, Canoga Park, Calif., Dec. 1959, Appendix D.
23. LESK, M. E. Word-word association in document retrieval systems. In Information storage and retrieval, Scientific Rep. No. ISR-13, Cornell U., Ithaca, New York, Jan. 1968, Section IX.
*24. MEETHAM, A. R. Graph separability and word grouping. Proc. 21st Nat. Conf. ACM, 1966, ACM Pub P-66, Thompson Book Co., Washington, D. C., pp. 513-514.
25. NEEDHAM, R. M. A method for using computers in information classification. In Popplewell, C. M. (Ed.), Information Processing 1962, Proc. IFIP Congr. 62, North-Holland, Amsterdam, 1963, pp. 284-287.
*26. - -. The termination of certain iterative processes. Memorandum RM-5188-PR, Rand Corp., Santa Monica, Calif., Nov. 1966.
27. - -. The theory of clumps II. Rep. No. ML 139, Cambridge Language Research Unit, Cambridge, England, March 1961.
*28. OGILVIE, J. C. The distribution of number and size of connected components in random graphs of medium size. In Morrell, A. J. H. (Ed.), Information Processing 68, Proc. IFIP Congress 68, Vol. 2: Hardware, Applications, North-Holland, Amsterdam, 1969, pp. 1527-1530.
*29. ORE, O. Graphs and Their Use. Random House, New York, 1963.
30. PARKER-RHODES, A. F. Contributions to the theory of clumps: The usefulness and feasibility of the theory. Rep. No. ML 138, Cambridge Language Research Unit, Cambridge, England, March 1961.
31. - - AND NEEDHAM, R. M. The theory of clumps. Rep. No. ML 126, Cambridge Language Research Unit, Cambridge, England, Feb. 1960.
*32. PRICE, N., AND SCHIMINOVICH, S. A clustering experiment: First step towards a computer-generated classification scheme. Inform. Storage and Retrieval 4, 3 (Aug. 1968), 271-280.
*33. ROCCHIO, J. J., JR. Document retrieval systems--optimization and evaluation. Scientific Rep. No. ISR-10, Computation Laboratory, Harvard U., Cambridge, Mass., 1966.
34. ROGERS, D., AND TANIMOTO, T. A computer program for classifying plants. Science 132 (Oct. 1960), 1115-1118.
35. SALTON, G. Automatic Information Organization and Retrieval. McGraw-Hill, New York, 1968.
36. SHEPARD, M. J., AND WILLMOTT, A. J. Cluster analysis on the Atlas computer. Computer J. 11, 1 (May 1968), 57-62.
37. SPÄRCK-JONES, K. Automatic term classification and information retrieval. In Morrell, A. J. H. (Ed.), Information Processing 68, Proc. IFIP Congress 68, Vol. 2: Hardware, Applications, North-Holland, Amsterdam, 1969, pp. 1290-1295.
*38. - -. Mechanized semantic classification. 1961 International Conf. on Machine Translation of Languages and Applied Language Analysis, National Physics Laboratory Symposium No. 13, Volume II, 1962, Paper 25, pp. 417-435.
39. - - AND JACKSON, D. Current approaches to classification and clump-finding at Cambridge Language Research Unit. Computer J. 10, 1 (May 1967), 29-37.
*40. STEVENS, M. E. Automatic indexing: A state of the art report. NBS Monograph 91, US Dep. of Commerce, Washington, D. C., March 1965.
41. STILES, H. E. The association factor in information retrieval. J. ACM 8, 2 (April 1961), 271-279.
42. - - AND SALISBURY, B. A. The use of the B-coefficient in information retrieval. Unpublished rep., Sept. 1967.
43. TANIMOTO, T. An elementary mathematical theory of classification and prediction. Rep., IBM Corp., 1958.

RECEIVED JUNE, 1969; REVISED JUNE, 1970


- Iaetsd-Jaras-Comparative Analysis of Correlation and PsoDocument6 pagesIaetsd-Jaras-Comparative Analysis of Correlation and PsoiaetsdiaetsdNo ratings yet

- Unsupervised K-Means Clustering Algorithm Finds Optimal Number of ClustersDocument17 pagesUnsupervised K-Means Clustering Algorithm Finds Optimal Number of ClustersAhmad FaisalNo ratings yet

- A Fast Clustering Algorithm To Cluster Very Large Categorical Data Sets in Data MiningDocument13 pagesA Fast Clustering Algorithm To Cluster Very Large Categorical Data Sets in Data MiningSunny NguyenNo ratings yet

- The International Journal of Engineering and Science (The IJES)Document7 pagesThe International Journal of Engineering and Science (The IJES)theijesNo ratings yet

- Clique Approach For Networks: Applications For Coauthorship NetworksDocument6 pagesClique Approach For Networks: Applications For Coauthorship NetworksAlejandro TorresNo ratings yet

- Genetic Algorithm-Based Clustering TechniqueDocument11 pagesGenetic Algorithm-Based Clustering TechniqueArmansyah BarusNo ratings yet

- "Encoding Trees," Comput.: 1I15. New (71Document5 pages"Encoding Trees," Comput.: 1I15. New (71Jamilson DantasNo ratings yet

- Dynamic Approach To K-Means Clustering Algorithm-2Document16 pagesDynamic Approach To K-Means Clustering Algorithm-2IAEME PublicationNo ratings yet

- Ambo University: Inistitute of TechnologyDocument15 pagesAmbo University: Inistitute of TechnologyabayNo ratings yet

- A Graph Analytical Approach For Topic DetectionDocument21 pagesA Graph Analytical Approach For Topic Detectionhilllie3475No ratings yet

- New Algorithms for Fast Discovery of Association Rules Using Clustering and Single Database ScanDocument24 pagesNew Algorithms for Fast Discovery of Association Rules Using Clustering and Single Database ScanMade TokeNo ratings yet

- Automatic Clustering AlgorithmsDocument3 pagesAutomatic Clustering Algorithmsjohn949No ratings yet

- Introduction To KEA-Means Algorithm For Web Document ClusteringDocument5 pagesIntroduction To KEA-Means Algorithm For Web Document Clusteringsurendiran123No ratings yet

- Machine LearningDocument15 pagesMachine LearningShahid KINo ratings yet

- DownloadDocument25 pagesDownloadkmaraliNo ratings yet

- The General Considerations and Implementation In: K-Means Clustering Technique: MathematicaDocument10 pagesThe General Considerations and Implementation In: K-Means Clustering Technique: MathematicaMario ZamoraNo ratings yet

- DIE1105 - Exploring Afgjfgpplication-Level Semantics For Data JnfjcompressionDocument7 pagesDIE1105 - Exploring Afgjfgpplication-Level Semantics For Data JnfjcompressionSaidi ReddyNo ratings yet

- Websets: Extracting Sets of Entities From The Web Using Unsupervised Information ExtractionDocument10 pagesWebsets: Extracting Sets of Entities From The Web Using Unsupervised Information ExtractionAniket VermaNo ratings yet

- Detection of Forest Fire Using Wireless Sensor NetworkDocument5 pagesDetection of Forest Fire Using Wireless Sensor NetworkAnonymous gIhOX7VNo ratings yet

- Bootstrap Exploratory Graph AnalysisDocument22 pagesBootstrap Exploratory Graph AnalysisjpsilbatoNo ratings yet

- International Journal of Engineering Research and DevelopmentDocument5 pagesInternational Journal of Engineering Research and DevelopmentIJERDNo ratings yet

- A Scalable SelfDocument33 pagesA Scalable SelfCarlos CervantesNo ratings yet

- Ijettcs 2014 04 25 123Document5 pagesIjettcs 2014 04 25 123International Journal of Application or Innovation in Engineering & ManagementNo ratings yet
