Machine learning

Machine learning, a branch of artificial intelligence, is about the construction and study of systems that can learn from data. For example, a machine learning system could be trained on email messages to learn to distinguish between spam and non-spam messages. After learning, it can then be used to classify new email messages into spam and non-spam folders. The core of machine learning deals with representation and generalization. Representation of data instances and functions evaluated on these instances are part of all machine learning systems. Generalization is the property that the system will perform well on unseen data instances; the conditions under which this can be guaranteed are a key object of study in the subfield of computational learning theory. There is a wide variety of machine learning tasks and successful applications. Optical character recognition, in which printed characters are recognized automatically based on previous examples, is a classic example of machine learning.[1]
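The spam example above can be sketched with a small naive Bayes text classifier. This is only an illustration: the choice of naive Bayes, the function names `train_naive_bayes` and `classify`, and the four training messages are assumptions made here, not something the article prescribes.

```python
import math
from collections import Counter

def train_naive_bayes(messages):
    """messages: list of (text, label) pairs with label "spam" or "ham"."""
    word_counts = {"spam": Counter(), "ham": Counter()}
    label_counts = Counter()
    for text, label in messages:
        label_counts[label] += 1
        word_counts[label].update(text.lower().split())
    vocabulary = set(word_counts["spam"]) | set(word_counts["ham"])
    return word_counts, label_counts, vocabulary

def classify(text, model):
    word_counts, label_counts, vocabulary = model
    total = sum(label_counts.values())
    scores = {}
    for label in label_counts:
        # Log prior plus log likelihood of each known word (add-one smoothing).
        score = math.log(label_counts[label] / total)
        denom = sum(word_counts[label].values()) + len(vocabulary)
        for word in text.lower().split():
            if word in vocabulary:  # words never seen in training are ignored
                score += math.log((word_counts[label][word] + 1) / denom)
        scores[label] = score
    return max(scores, key=scores.get)

# Hypothetical toy training set.
training = [
    ("win money now", "spam"),
    ("cheap pills win prize", "spam"),
    ("meeting at noon tomorrow", "ham"),
    ("lunch tomorrow with the team", "ham"),
]
model = train_naive_bayes(training)
prediction_spam = classify("win a cheap prize", model)
prediction_ham = classify("team meeting tomorrow", model)
```

Neither of the two classified messages appears in the training set, so the predictions are a (very small) instance of applying what was learned to unseen data.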

Definition

In 1959, Arthur Samuel defined machine learning as a "field of study that gives computers the ability to learn without being explicitly programmed". Tom M. Mitchell provided a widely quoted, more formal definition: "A computer program is said to learn from experience E with respect to some class of tasks T and performance measure P, if its performance at tasks in T, as measured by P, improves with experience E".[2] This definition is notable for defining machine learning in fundamentally operational rather than cognitive terms, thus following Alan Turing's proposal in his paper "Computing Machinery and Intelligence" that the question "Can machines think?" be replaced with the question "Can machines do what we (as thinking entities) can do?"[3]

Generalization

Generalization in this context is the ability of an algorithm to perform accurately on new, unseen examples after having trained on a learning data set. The core objective of a learner is to generalize from its experience.[4][5] The training examples come from some generally unknown probability distribution and the learner has to extract from them something more general, something about that distribution, that allows it to produce useful predictions in new cases.
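One way to see the gap between performance on the training set and on unseen examples is to compare the two directly. The sketch below is an assumption-laden toy: the data generator `make_data` (a noisy threshold at 0.5), the 1-nearest-neighbour learner, and the sample sizes are all chosen here for illustration.

```python
import random

def make_data(n, rng, noise=0.2):
    """Draw n examples from a fixed distribution: label is 1 when x > 0.5,
    then flipped with probability `noise`."""
    data = []
    for _ in range(n):
        x = rng.random()
        y = int(x > 0.5)
        if rng.random() < noise:
            y = 1 - y
        data.append((x, y))
    return data

def predict_1nn(train, x):
    # Return the label of the closest training example.
    nearest = min(train, key=lambda pair: abs(pair[0] - x))
    return nearest[1]

def accuracy(train, examples):
    correct = sum(predict_1nn(train, x) == y for x, y in examples)
    return correct / len(examples)

rng = random.Random(0)
train_set = make_data(200, rng)
test_set = make_data(200, rng)   # unseen examples from the same distribution

train_accuracy = accuracy(train_set, train_set)  # the learner memorizes its data
test_accuracy = accuracy(train_set, test_set)    # what generalization measures
```

The 1-nearest-neighbour rule reproduces every training label, noise included, so its training accuracy says little about how it behaves on new draws from the same distribution; the held-out accuracy is the relevant quantity.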

Machine learning and data mining

These two terms are commonly confused, as they often employ the same methods and overlap significantly. They can be roughly defined as follows: Machine learning focuses on prediction, based on known properties learned from the training data. Data mining (which is the analysis step of Knowledge Discovery in Databases) focuses on the discovery of (previously) unknown properties in the data. The two areas overlap in many ways: data mining uses many machine learning methods, but often with a slightly different goal in mind. On the other hand, machine learning also employs data mining methods as "unsupervised learning" or as a preprocessing step to improve learner accuracy. Much of the confusion between these two research communities (which do often have separate conferences and separate journals, ECML PKDD being a major exception) comes from the basic assumptions they work with: in machine learning, performance is usually evaluated with respect to the ability to reproduce known knowledge, while in Knowledge Discovery and Data Mining (KDD) the key task is the discovery of previously unknown knowledge. Evaluated with respect to known knowledge, an uninformed (unsupervised) method will easily be outperformed by supervised methods, while in a typical KDD task, supervised methods cannot be used due to the unavailability of training data.

Human interaction

Some machine learning systems attempt to eliminate the need for human intuition in data analysis, while others adopt a collaborative approach between human and machine. Human intuition cannot, however, be entirely eliminated, since the system's designer must specify how the data is to be represented and what mechanisms will be used to search for a characterization of the data.

Algorithm types

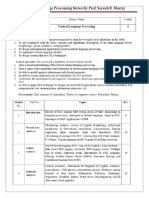

Machine learning algorithms can be organized into a taxonomy based on the desired outcome of the algorithm or the type of input available during training [citation needed].

- Supervised learning generates a function that maps inputs to desired outputs (also called labels, because they are often provided by human experts labeling the training examples). For example, in a classification problem, the learner approximates a function mapping a vector into classes by looking at input-output examples of the function.
- Unsupervised learning models a set of inputs, as in clustering. Here, labels are not known during training. See also data mining and knowledge discovery.
- Semi-supervised learning combines both labeled and unlabeled examples to generate an appropriate function or classifier.
- Transduction, or transductive inference, tries to predict new outputs on specific and fixed (test) cases from observed, specific (training) cases.
- Reinforcement learning learns how to act given an observation of the world. Every action has some impact on the environment, and the environment provides feedback in the form of rewards that guides the learning algorithm.
- Learning to learn learns its own inductive bias based on previous experience.

Theory

The computational analysis of machine learning algorithms and their performance is a branch of theoretical computer science known as computational learning theory. Because training sets are finite and the future is uncertain, learning theory usually does not yield guarantees of the performance of algorithms. Instead, probabilistic bounds on the performance are quite common. In addition to performance bounds, computational learning theorists study the time complexity and feasibility of learning. In computational learning theory, a computation is considered feasible if it can be done in polynomial time. There are two kinds of time complexity results. Positive results show that a certain class of functions can be learned in polynomial time. Negative results show that certain classes cannot be learned in polynomial time. There are many similarities between machine learning theory and statistical inference, although they use different terms.
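As an illustration of what a probabilistic bound looks like, the sketch below evaluates one standard textbook result rather than anything stated in this article: for a finite hypothesis class H and m independent training examples, Hoeffding's inequality combined with a union bound gives that, with probability at least 1 - delta, every hypothesis in H has a true error within sqrt((ln|H| + ln(2/delta)) / (2m)) of its training error. The function name `generalization_gap_bound` is made up for this example.

```python
import math

def generalization_gap_bound(sample_size, hypothesis_count, delta):
    """Upper bound on |true error - training error| that holds simultaneously
    for all hypotheses in a finite class, with probability >= 1 - delta.
    Follows from Hoeffding's inequality plus a union bound."""
    return math.sqrt(
        (math.log(hypothesis_count) + math.log(2.0 / delta)) / (2.0 * sample_size)
    )

# The bound tightens as the training set grows.
bound_small_sample = generalization_gap_bound(100, 100, 0.05)
bound_large_sample = generalization_gap_bound(1000, 100, 0.05)
```

The statement is probabilistic in exactly the sense described above: it does not guarantee performance outright, but it limits how far training error can mislead, except with probability delta.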

Approaches

Decision tree learning

Decision tree learning uses a decision tree as a predictive model which maps observations about an item to conclusions about the item's target value.
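The simplest decision tree has a single split (a "decision stump"). The following sketch fits one on a single numeric feature by trying each candidate threshold and keeping the one with the fewest training errors. The helper names `fit_stump` and `predict_stump` and the toy observations are assumptions for illustration; practical tree learners use criteria such as information gain and split recursively.

```python
def fit_stump(examples):
    """examples: list of (value, label) pairs with label 0 or 1 and at least
    two distinct values. Returns (threshold, label_if_above)."""
    values = sorted({v for v, _ in examples})
    # Candidate thresholds: midpoints between consecutive distinct values.
    candidates = [(a + b) / 2 for a, b in zip(values, values[1:])]
    best = None
    for threshold in candidates:
        for label_above in (0, 1):
            errors = sum(
                (label_above if v > threshold else 1 - label_above) != y
                for v, y in examples
            )
            if best is None or errors < best[0]:
                best = (errors, threshold, label_above)
    return best[1], best[2]

def predict_stump(stump, value):
    threshold, label_above = stump
    return label_above if value > threshold else 1 - label_above

# Hypothetical observations: (feature value, target value).
observations = [(1.0, 0), (2.0, 0), (3.0, 0), (6.0, 1), (7.0, 1), (8.0, 1)]
stump = fit_stump(observations)
```

Here the learned rule maps an observation (the feature value) to a conclusion about the target: values above the chosen threshold are assigned one class and the rest the other.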

Association rule learning

Association rule learning is a method for discovering interesting relations between variables in large databases.
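Two measures commonly used to judge whether a relation such as "customers who buy bread also buy milk" is interesting are support and confidence. The sketch below computes both on a made-up list of transactions; the function names and the data are illustrative assumptions.

```python
# Hypothetical market-basket data: each transaction is a set of items.
transactions = [
    {"bread", "milk"},
    {"bread", "butter"},
    {"bread", "milk", "butter"},
    {"milk"},
]

def support(itemset, transactions):
    """Fraction of transactions that contain every item in `itemset`."""
    return sum(itemset <= t for t in transactions) / len(transactions)

def confidence(antecedent, consequent, transactions):
    """Estimated probability of `consequent` given `antecedent`."""
    return support(antecedent | consequent, transactions) / support(
        antecedent, transactions
    )

bread_milk_support = support({"bread", "milk"}, transactions)
bread_to_milk_confidence = confidence({"bread"}, {"milk"}, transactions)
```

Rule-mining algorithms typically search for all rules whose support and confidence exceed user-chosen thresholds.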

Artificial neural networks

An artificial neural network (ANN) learning algorithm, usually called "neural network" (NN), is a learning algorithm that is inspired by the structure and functional aspects of biological neural networks. Computations are structured in terms of an interconnected group of artificial neurons, processing information using a connectionist approach to computation. Modern neural networks are non-linear statistical data modeling tools. They are usually used to model complex relationships between inputs and outputs, to find patterns in data, or to capture the statistical structure in an unknown joint probability distribution between observed variables.
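To make the idea of interconnected artificial neurons concrete, the following sketch evaluates a tiny two-layer network whose output approximates the exclusive-or of its two inputs, a relationship no single linear unit can represent. The weights are hand-picked for illustration (an assumption of this example), not learned; a learning algorithm such as backpropagation would normally find them from data.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def layer(inputs, weights, biases):
    """Each neuron computes a weighted sum of its inputs plus a bias,
    then applies a non-linear activation."""
    return [
        sigmoid(sum(w * x for w, x in zip(row, inputs)) + b)
        for row, b in zip(weights, biases)
    ]

# Hand-chosen weights: hidden neuron 1 acts like OR, hidden neuron 2 like NAND,
# and the output neuron like AND of the two, which together give XOR.
HIDDEN_W = [[20.0, 20.0], [-20.0, -20.0]]
HIDDEN_B = [-10.0, 30.0]
OUT_W = [[20.0, 20.0]]
OUT_B = [-30.0]

def forward(x1, x2):
    hidden = layer([x1, x2], HIDDEN_W, HIDDEN_B)
    return layer(hidden, OUT_W, OUT_B)[0]

outputs = {(a, b): round(forward(a, b)) for a in (0, 1) for b in (0, 1)}
```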

Genetic programming

Genetic programming (GP) is an evolutionary algorithm-based methodology inspired by biological evolution to find computer programs that perform a user-defined task. It is a specialization of genetic algorithms (GA) where each individual is a computer program. It is a machine learning technique used to optimize a population of computer programs according to a fitness landscape determined by a program's ability to perform a given computational task.

Inductive logic programming

Inductive logic programming (ILP) is an approach to rule learning using logic programming as a uniform representation for examples, background knowledge, and hypotheses. Given an encoding of the known background knowledge and a set of examples represented as a logical database of facts, an ILP system will derive a hypothesized logic program which entails all the positive and none of the negative examples.

Support vector machines

Support vector machines (SVMs) are a set of related supervised learning methods used for classification and regression. Given a set of training examples, each marked as belonging to one of two categories, an SVM training algorithm builds a model that predicts whether a new example falls into one category or the other.
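A minimal sketch of the two-category case follows. It trains a linear soft-margin SVM by batch subgradient descent on the regularized hinge loss. That training procedure, the hyperparameters `lam`, `lr` and `epochs`, and the toy data are assumptions chosen for brevity; SVM libraries generally use more sophisticated solvers and support kernels.

```python
def train_linear_svm(points, labels, lam=0.01, lr=0.1, epochs=200):
    """Labels must be +1 or -1. Minimizes lam/2 * ||w||^2 + mean hinge loss
    by batch subgradient descent. Returns (weights, bias)."""
    dim = len(points[0])
    n = len(points)
    w = [0.0] * dim
    b = 0.0
    for _ in range(epochs):
        grad_w = [lam * wi for wi in w]   # gradient of the regularizer
        grad_b = 0.0
        for x, y in zip(points, labels):
            margin = y * (sum(wi * xi for wi, xi in zip(w, x)) + b)
            if margin < 1:                # hinge loss is active for this example
                for i in range(dim):
                    grad_w[i] -= y * x[i] / n
                grad_b -= y / n
        w = [wi - lr * gi for wi, gi in zip(w, grad_w)]
        b -= lr * grad_b
    return w, b

def predict(model, x):
    w, b = model
    return 1 if sum(wi * xi for wi, xi in zip(w, x)) + b >= 0 else -1

# Hypothetical, linearly separable training examples in two categories.
points = [(2, 2), (3, 3), (2, 3), (-2, -2), (-3, -3), (-2, -3)]
labels = [1, 1, 1, -1, -1, -1]
svm_model = train_linear_svm(points, labels)
training_predictions = [predict(svm_model, p) for p in points]
```

Once trained, `predict(svm_model, x)` assigns a new example `x` to one category or the other according to which side of the learned boundary it falls on.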

Clustering

Cluster analysis is the assignment of a set of observations into subsets (called clusters) so that observations within the same cluster are similar according to some predesignated criterion or criteria, while observations drawn from different clusters are dissimilar. Different clustering techniques make different assumptions on the structure of the data, often defined by some similarity metric and evaluated for example by internal compactness (similarity between members of the same cluster) and separation between different clusters. Other methods are based on estimated density and graph connectivity. Clustering is a method of unsupervised learning, and a common technique for statistical data analysis.
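As one concrete instance, the sketch below implements k-means (Lloyd's algorithm), which uses squared Euclidean distance as the similarity criterion. The choice of k-means, the caller-supplied starting centroids, and the toy points are assumptions of this example; the paragraph above covers many other techniques.

```python
def kmeans(points, centroids, iterations=10):
    """Lloyd's algorithm. `centroids` is the initial guess (one per cluster).
    Returns the final centroids and the cluster assignment computed in the
    last iteration."""
    clusters = []
    for _ in range(iterations):
        # Assignment step: attach every point to its nearest centroid.
        clusters = [[] for _ in centroids]
        for p in points:
            distances = [
                sum((a - b) ** 2 for a, b in zip(p, c)) for c in centroids
            ]
            clusters[distances.index(min(distances))].append(p)
        # Update step: move each centroid to the mean of its cluster
        # (an empty cluster keeps its previous centroid).
        centroids = [
            tuple(sum(coord) / len(cluster) for coord in zip(*cluster))
            if cluster else c
            for cluster, c in zip(clusters, centroids)
        ]
    return centroids, clusters

# Hypothetical observations forming two visually separate groups.
points = [(1, 1), (1, 2), (2, 1), (8, 8), (9, 8), (8, 9)]
final_centroids, final_clusters = kmeans(points, [(1, 1), (8, 8)])
```

The result depends on the initial centroids; in practice the algorithm is often restarted from several random initializations.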

Bayesian networks

A Bayesian network, belief network or directed acyclic graphical model is a probabilistic graphical model that represents a set of random variables and their conditional independencies via a directed acyclic graph (DAG). For example, a Bayesian network could represent the probabilistic relationships between diseases and symptoms. Given symptoms, the network can be used to compute the probabilities of the presence of various diseases. Efficient algorithms exist that perform inference and learning.
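The disease and symptom example can be sketched with a three-node network in which one disease node is the parent of two symptom nodes. All probabilities and the symptom names below are invented for illustration, and inference is done by brute-force enumeration, which is only practical for very small networks.

```python
# Hypothetical network: Disease -> Fever, Disease -> Cough.
P_DISEASE = 0.01
# P(symptom present | disease state), indexed by symptom then disease state.
P_SYMPTOM_GIVEN = {
    "fever": {True: 0.9, False: 0.05},
    "cough": {True: 0.7, False: 0.1},
}

def joint(disease, observed):
    """P(disease state, observed symptoms). The graph structure makes the
    symptoms conditionally independent given the disease, so the joint
    probability factorizes into a product."""
    p = P_DISEASE if disease else 1 - P_DISEASE
    for symptom, present in observed.items():
        p_present = P_SYMPTOM_GIVEN[symptom][disease]
        p *= p_present if present else 1 - p_present
    return p

def posterior_disease(observed):
    """P(disease | observed symptoms), by enumerating both disease states."""
    with_disease = joint(True, observed)
    without_disease = joint(False, observed)
    return with_disease / (with_disease + without_disease)

posterior_both = posterior_disease({"fever": True, "cough": True})
posterior_fever_only = posterior_disease({"fever": True})
```

With these made-up numbers, observing both symptoms raises the probability of the disease from the 1% prior to 56%.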

Reinforcement learning

Reinforcement learning is concerned with how an agent ought to take actions in an environment so as to maximize some notion of long-term reward. Reinforcement learning algorithms attempt to find a policy that maps states of the world to the actions the agent ought to take in those states. Reinforcement learning differs from the supervised learning problem in that correct input/output pairs are never presented, nor sub-optimal actions explicitly corrected.
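A minimal sketch of these ideas uses tabular Q-learning on a four-state corridor, where the agent is rewarded only on reaching the rightmost state. Q-learning is one specific algorithm chosen here for illustration; the environment, the constants, and the purely random exploration policy are all assumptions of this example.

```python
import random

N_STATES = 4          # states 0..3; state 3 is terminal
ACTIONS = (-1, +1)    # move left or move right

def step(state, action):
    """Environment dynamics: walls at both ends, reward 1 on reaching the goal."""
    next_state = min(max(state + action, 0), N_STATES - 1)
    reward = 1.0 if next_state == N_STATES - 1 else 0.0
    return next_state, reward

def q_learning(episodes=500, alpha=0.5, gamma=0.9, seed=0):
    rng = random.Random(seed)
    q = {(s, a): 0.0 for s in range(N_STATES) for a in ACTIONS}
    for _ in range(episodes):
        state = 0
        while state != N_STATES - 1:
            action = rng.choice(ACTIONS)  # purely exploratory behaviour
            next_state, reward = step(state, action)
            best_next = max(q[(next_state, a)] for a in ACTIONS)
            # Move the estimate toward reward plus discounted future value.
            q[(state, action)] += alpha * (
                reward + gamma * best_next - q[(state, action)]
            )
            state = next_state
    return q

q_table = q_learning()
# The learned policy maps each non-terminal state to its highest-valued action.
greedy_policy = [
    max(ACTIONS, key=lambda a: q_table[(s, a)]) for s in range(N_STATES - 1)
]
```

No correct action is ever shown to the agent; it only observes rewards, and the policy that maps each state to an action is read off the learned value estimates.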

Representation learning

Several learning algorithms, mostly unsupervised learning algorithms, aim at discovering better representations of the inputs provided during training. Classical examples include principal components analysis and cluster analysis. Representation learning algorithms often attempt to preserve the information in their input but transform it in a way that makes it useful, often as a pre-processing step before performing classification or prediction. The learned representation allows the inputs coming from the unknown data-generating distribution to be reconstructed, while not necessarily being faithful for configurations that are implausible under that distribution. Manifold learning algorithms attempt to do so under the constraint that the learned representation is low-dimensional. Sparse coding algorithms attempt to do so under the constraint that the learned representation is sparse (has many zeros). Multilinear subspace learning algorithms aim to learn low-dimensional representations directly from tensor representations for multidimensional data, without reshaping them into (high-dimensional) vectors.[6] Deep learning algorithms discover multiple levels of representation, or a hierarchy of features, with higher-level, more abstract features defined in terms of (or generating) lower-level features. It has been argued that an intelligent machine is one that learns a representation that disentangles the underlying factors of variation that explain the observed data.[7]

Similarity and metric learning

In this problem, the learning machine is given pairs of examples that are considered similar and pairs of less similar objects. It then needs to learn a similarity function (or a distance metric function) that can predict whether new objects are similar. It is sometimes used in recommendation systems.

Sparse Dictionary Learning

In this method, a datum is represented as a linear combination of basis functions, and the coefficients are assumed to be sparse. Let x be a d-dimensional datum and D be a d by n matrix, where each column of D represents a basis function; r is the coefficient vector that represents x using D. Mathematically, sparse dictionary learning means solving $x \approx D r$, where r is sparse. Generally speaking, n is assumed to be larger than d to allow the freedom for a sparse representation. Sparse dictionary learning has been applied in several contexts. In classification, the problem is to determine which class a previously unseen datum belongs to. Suppose a dictionary for each class has already been built. Then a new datum is associated with the class whose dictionary gives it the best sparse representation. Sparse dictionary learning has also been applied in image de-noising. The key idea is that a clean image patch can be sparsely represented by an image dictionary, but the noise cannot.[8]

Applications

Applications for machine learning include:

- Machine perception
- Computer vision
- Natural language processing
- Syntactic pattern recognition
- Search engines
- Medical diagnosis
- Bioinformatics
- Brain-machine interfaces
- Cheminformatics
- Detecting credit card fraud
- Stock market analysis
- Classifying DNA sequences
- Sequence mining
- Speech and handwriting recognition
- Object recognition in computer vision
- Game playing
- Software engineering
- Adaptive websites
- Robot locomotion
- Computational advertising
- Computational finance
- Structural health monitoring
- Sentiment analysis (or opinion mining)
- Affective computing
- Information retrieval
- Recommender systems

In 2006, the online movie company Netflix held the first "Netflix Prize" competition to find a program that would better predict user preferences and improve the accuracy of its existing Cinematch movie recommendation algorithm by at least 10%. A joint team made up of researchers from AT&T Labs-Research in collaboration with the teams Big Chaos and Pragmatic Theory built an ensemble model to win the $1 million Grand Prize in 2009.[9]

Software

Angoss KnowledgeSTUDIO, Apache Mahout, IBM SPSS Modeler, KNIME, KXEN Modeler, LIONsolver, MATLAB, mlpy, MCMLL, OpenCV, dlib, Oracle Data Mining, Orange, Python scikit-learn, R, RapidMiner, Salford Predictive Modeler, SAS Enterprise Miner, Shogun toolbox, STATISTICA Data Miner, and Weka are software suites containing a variety of machine learning algorithms.

Journals and conferences

- Machine Learning (journal)
- Journal of Machine Learning Research
- Neural Computation (journal)
- Journal of Intelligent Systems (journal) [10]
- International Conference on Machine Learning (ICML) (conference)
- Neural Information Processing Systems (NIPS) (conference)

References

[1] Wernick, Yang, Brankov, Yourganov and Strother, Machine Learning in Medical Imaging, IEEE Signal Processing Magazine, vol. 27, no. 4, July 2010, pp. 25-38.
[2] Mitchell, T. (1997). Machine Learning, McGraw Hill. ISBN 0-07-042807-7, p. 2.
[4] Christopher M. Bishop (2006). Pattern Recognition and Machine Learning, Springer. ISBN 0-387-31073-8.
[5] Mehryar Mohri, Afshin Rostamizadeh, Ameet Talwalkar (2012). Foundations of Machine Learning, The MIT Press. ISBN 9780262018258.
[8] Aharon, M., M. Elad, and A. Bruckstein (2006). K-SVD: An Algorithm for Designing Overcomplete Dictionaries for Sparse Representation. IEEE Transactions on Signal Processing 54 (11): 4311-4322.
[9] "BelKor Home Page" (http://www2.research.att.com/~volinsky/netflix/), research.att.com.
[10] http://www.degruyter.de/journals/jisys/detailEn.cfm

Further reading

- Mehryar Mohri, Afshin Rostamizadeh, Ameet Talwalkar (2012). Foundations of Machine Learning (http://www.cs.nyu.edu/~mohri/mlbook/), The MIT Press. ISBN 9780262018258.
- Ian H. Witten and Eibe Frank (2011). Data Mining: Practical machine learning tools and techniques, Morgan Kaufmann, 664 pp., ISBN 978-0123748560.
- Sergios Theodoridis, Konstantinos Koutroumbas (2009). "Pattern Recognition", 4th Edition, Academic Press, ISBN 978-1-59749-272-0.
- Mierswa, Ingo and Wurst, Michael and Klinkenberg, Ralf and Scholz, Martin and Euler, Timm: YALE: Rapid Prototyping for Complex Data Mining Tasks, in Proceedings of the 12th ACM SIGKDD International Conference on Knowledge Discovery and Data Mining (KDD-06), 2006.
- Bing Liu (2007). Web Data Mining: Exploring Hyperlinks, Contents and Usage Data (http://www.cs.uic.edu/~liub/WebMiningBook.html), Springer, ISBN 3-540-37881-2.
- Toby Segaran (2007). Programming Collective Intelligence, O'Reilly, ISBN 0-596-52932-5.
- Huang T.-M., Kecman V., Kopriva I. (2006). Kernel Based Algorithms for Mining Huge Data Sets, Supervised, Semi-supervised, and Unsupervised Learning (http://learning-from-data.com), Springer-Verlag, Berlin, Heidelberg, 260 pp., 96 illus., Hardcover, ISBN 3-540-31681-7.
- Ethem Alpaydın (2004). Introduction to Machine Learning (Adaptive Computation and Machine Learning), MIT Press, ISBN 0-262-01211-1.
- MacKay, D.J.C. (2003). Information Theory, Inference, and Learning Algorithms (http://www.inference.phy.cam.ac.uk/mackay/itila/), Cambridge University Press. ISBN 0-521-64298-1.
- Vojislav Kecman (2001). Learning and Soft Computing, Support Vector Machines, Neural Networks and Fuzzy Logic Models (http://support-vector.ws), The MIT Press, Cambridge, MA, 608 pp., 268 illus., ISBN 0-262-11255-8.
- Trevor Hastie, Robert Tibshirani and Jerome Friedman (2001). The Elements of Statistical Learning (http://www-stat.stanford.edu/~tibs/ElemStatLearn/), Springer. ISBN 0-387-95284-5.
- Richard O. Duda, Peter E. Hart, David G. Stork (2001). Pattern classification (2nd edition), Wiley, New York, ISBN 0-471-05669-3.
- Bishop, C.M. (1995). Neural Networks for Pattern Recognition, Oxford University Press. ISBN 0-19-853864-2.
- Ryszard S. Michalski, George Tecuci (1994). Machine Learning: A Multistrategy Approach, Volume IV, Morgan Kaufmann, ISBN 1-55860-251-8.
- Sholom Weiss and Casimir Kulikowski (1991). Computer Systems That Learn, Morgan Kaufmann. ISBN 1-55860-065-5.
- Yves Kodratoff, Ryszard S. Michalski (1990). Machine Learning: An Artificial Intelligence Approach, Volume III, Morgan Kaufmann, ISBN 1-55860-119-8.
- Ryszard S. Michalski, Jaime G. Carbonell, Tom M. Mitchell (1986). Machine Learning: An Artificial Intelligence Approach, Volume II, Morgan Kaufmann, ISBN 0-934613-00-1.
- Ryszard S. Michalski, Jaime G. Carbonell, Tom M. Mitchell (1983). Machine Learning: An Artificial Intelligence Approach, Tioga Publishing Company, ISBN 0-935382-05-4.
- Vladimir Vapnik (1998). Statistical Learning Theory. Wiley-Interscience, ISBN 0-471-03003-1.
- Ray Solomonoff, An Inductive Inference Machine, IRE Convention Record, Section on Information Theory, Part 2, pp. 56-62, 1957.
- Ray Solomonoff, "An Inductive Inference Machine" (http://world.std.com/~rjs/indinf56.pdf). A privately circulated report from the 1956 Dartmouth Summer Research Conference on AI.

External links

- International Machine Learning Society (http://machinelearning.org/)
- Popular online course by Andrew Ng, at ml-class.org (http://www.ml-class.org). It uses GNU Octave. The course is a free version of Stanford University's actual course taught by Ng, whose lectures are also available for free (http://see.stanford.edu/see/courseinfo.aspx?coll=348ca38a-3a6d-4052-937d-cb017338d7b1).
- Machine Learning Video Lectures (http://videolectures.net/Top/Computer_Science/Machine_Learning/)

Article Sources and Contributors

Machine learning Source: http://en.wikipedia.org/w/index.php?oldid=558078819 Contributors: 2620:0:1003:1008:BE30:5BFF:FED5:DA1, APH, AXRL, Aaron Kauppi, Aaronbrick, Aceituno, Addingrefs, Adiel, Adoniscik, Ahoerstemeier, Ahyeek, Aiwing, AnAj, Andr P Ricardo, Ankit.ufl, Anubhab91, Arcenciel, Arrandale, Arvindn, Ataulf, Autologin, BD2412, BMF81, Baguasquirrel, Beetstra, BenKovitz, Bender235, BertSeghers, Biochaos, Bkuhlman80, BlaiseFEgan, Blaz.zupan, Bonadea, Boxplot, Brettrmurphy, Bumbulski, Buridan, Businessman332211, CWenger, Calltech, Candace Gillhoolley, Casia wyq, Celendin, Centrx, Cfallin, Chafe66, ChangChienFu, ChaoticLogic, CharlesGillingham, Chire, Chriblo, Chris the speller, Chrisoneall, Clemwang, Clickey, Cmbishop, CommodiCast, Crasshopper, Ctacmo, CultureDrone, Cvdwalt, Damienfrancois, Dana2020, Dancter, Darnelr, DasAllFolks, Dave Runger, DaveWF, DavidCBryant, Davidmetcalfe, Debejyo, Debora.riu, Defza, Delirium, Denoir, Devantheryv, Dicklyon, Djfrost711, Doceddi, Dratman, Dsilver, Dzkd, Edouard.darchimbaud, Ego White Tray, Emrysk, Essjay, Evansad, Examtester, Fabiform, FidesLT, Fram, Francisbach, Funandtrvl, Furrykef, Gal chechik, Gareth Jones, Gene s, Genius002, Ggia, Giftlite, Gombang, GordonRoss, Grafen, Graytay, Gtfjbl, Haham hanuka, Happyrabbit, Helwr, Hike395, Hut 8.5, IjonTichyIjonTichy, InnocuousPilcrow, Innohead, Intgr, InverseHypercube, IradBG, Ishq2011, J04n, James Kidd, Jarble, Jbmurray, Jcautilli, Jdizzle123, Jim15936, JimmyShelter, Jmartinezot, Joehms22, Joerg Kurt Wegner, Jojit fb, JonHarder, JoshuSasori, Jrennie, Jrljrl, Jroudh, Jwojt, Jyoshimi, KYN, Keefaas, KellyCoinGuy, Keshav.dhandhania, Khalid hassani, Kinimod, Kithira, Kittensareawesome, Kku, KnightRider, Krexer, Kumioko (renamed), Kyhui, L Kensington, Lars Washington, Lawrence87, Levin, Lisasolomonsalford, LittleBenW, Liuyipei, LokiClock, Lordvolton, Lovok Sovok, MTJM, Magdon, Magioladitis, Marius.andreiana, Masatran, Mdann52, Mdd, Mereda, Michael Hardy, Misterwindupbird, Mneser, 
Moorejh, Mostafa mahdieh, Movado73, MrOllie, Mxn, Neo Poz, Nesbit, Netalarm, Nk, NotARusski, Nowozin, O.Koslowski, Ohandyya, Ohnoitsjamie, Pebkac, Penguinbroker, Peterdjones, Pgr94, Philpraxis, Piano non troppo, Pintaio, Plehn, Pmbhagat, Ppilotte, Pranjic973, Prari, Predictor, Proffviktor, PseudoOne, Quebec99, QuickUkie, Quintopia, Qwertyus, RJASE1, Rajah, Ralf Klinkenberg, Redgecko, RexSurvey, Rjwilmsi, Robiminer, Ronz, Rrenaud, Ruud Koot, Ryszard Michalski, Salih, Scigrex14, Scorpion451, Seabhcan, Seaphoto, Sebastjanmm, Shinosin, Shirik, Shizhao, Siculars, Silvonen, Sina2, Smorsy, Smsarmad, Soultaco, Spiral5800, Srinivasasha, StaticGull, Statpumpkin, Stephen Turner, Superbacana, Swordsmankirby, Tedickey, Tillander, Topbanana, Transcendence, Tritium6, Trondtr, Ulugen, Utcursch, VKokielov, Velblod, Vilapi, Vivohobson, Vsweiner, WMod-NS, Webidiap, WhatWasDone, Wht43, Why Not A Duck, WikiMSL, Wikidemon, Wikinacious, WilliamSewell, Winnerdy, WinterSpw, Wjbean, Wrdieter, Yoshua.Bengio, YrPolishUncle, Yworo, ZeroOne, Zosoin, , 374 anonymous edits

License

Creative Commons Attribution-Share Alike 3.0 Unported //creativecommons.org/licenses/by-sa/3.0/
