Proposals For Final Tabulation

Uploaded by

Julian A.


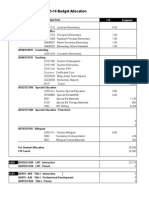

PROPOSALS FOR FINAL TABULATION & SELECTION

Summary of subcommittee meeting of Thursday 1/23/14

The following are presented as topics for discussion and/or proposals to be voted on in the general committee meeting of February 7.

Scoring vs. Ranking: There was some concern expressed that in the first screen, some screeners scored programs all 4s or all 1s. If we use rank instead of score, then no individual scorer can exert disproportionate influence on the result. We also avoid the dilemma of two programs separated by only a few hundredths of a point.

How ranking would be implemented: There are a number of scenarios. Our favorite proposal: each member fills out a screen for each curriculum. Once Adam has collected all the screens and their averages, he converts the 4 screens from each screener into a ranking.

Example: Committee member Q has scored the curricula as follows:

Program A - 2.6

Program B - 2.0

Program C - 2.9

Program D - 1.8

This is converted into the ranking:

Program C = 1

Program A = 2

Program B = 3

Program D = 4
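The conversion above can be sketched in a few lines of Python. This is only an illustration, assuming that the highest score receives Rank 1 (as in the example for member Q); the function name `scores_to_ranking` is hypothetical, and exact ties simply keep their input order here, which is not something the proposal specifies.

```python
def scores_to_ranking(scores):
    """Return {program: rank}, where rank 1 goes to the highest score.

    Assumption: exact ties keep their input order (sorted() is stable);
    the proposal itself leaves tie handling to the screener.
    """
    ordered = sorted(scores, key=scores.get, reverse=True)
    return {program: rank for rank, program in enumerate(ordered, start=1)}


# Committee member Q's scores from the example above.
member_q = {
    "Program A": 2.6,
    "Program B": 2.0,
    "Program C": 2.9,
    "Program D": 1.8,
}

ranking_q = scores_to_ranking(member_q)
print(ranking_q)
# {'Program C': 1, 'Program A': 2, 'Program B': 3, 'Program D': 4}
```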

Presentation to the committee

First, community input will be tabulated and a summary report produced for everyone to review. This summary should be published ASAP, preferably before the general meeting.

At the general meeting Adam will present the rankings in a table, like so:

| | Program A | Program B | Program C | Program D |
|---|---|---|---|---|
| Rank 1s | 12 | 8 | 5 | 1 |
| Rank 2s | 5 | 7 | 2 | 11 |
| Rank 3s | 4 | 6 | 11 | 4 |
| Rank 4s | 4 | 8 | 6 | 7 |

Instant Runoff Voting (IRV): In the event one program gets a simple majority of Rank 1s on the first round, that program is selected. In our committee of 28 members, 15 constitutes a simple majority.

In the more likely event that no single program garners a simple majority, we would eliminate the program with the fewest Rank 1s. In the above example, that would be Program D. D would then be removed from everyone's ranking altogether, effectively giving us a ranking of the top 3 programs. In particular, the one person who ranked D highest would see their second choice promoted to a Rank 1, their third choice to a Rank 2, and their fourth choice to a Rank 3. For those of you conversant with Excel, think of deleting all rankings of D and moving all data up one row. Hope this helps.

In the event there is a tie for last place in Rank 1s, we will look at Rank 4s for those two programs. Whichever program has the most Rank 4s will be eliminated. Bye-bye!

This process continues until one program garners a simple majority.
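The elimination rounds described above can be sketched as follows. This is a minimal illustration rather than a definitive procedure: the function name `irv_winner` and the sample ballots are hypothetical, "Rank 4s" is read as last-place votes among the programs still remaining, and a tie that survives the tiebreaker falls back to alphabetical order, which the proposal does not specify.

```python
from collections import Counter


def irv_winner(ballots):
    """ballots: list of rankings, each a list of programs from first to last choice."""
    remaining = set(ballots[0])
    majority = len(ballots) // 2 + 1  # 28 members -> 15

    while True:
        # Remove eliminated programs; lower choices move up automatically.
        trimmed = [[p for p in b if p in remaining] for b in ballots]

        firsts = Counter({p: 0 for p in remaining})
        firsts.update(b[0] for b in trimmed)
        lasts = Counter(b[-1] for b in trimmed)

        leader, top = firsts.most_common(1)[0]
        if top >= majority or len(remaining) == 1:
            return leader

        # Eliminate the program with the fewest first-place votes.
        fewest = min(firsts.values())
        tied = [p for p in remaining if firsts[p] == fewest]
        # Tiebreaker: most last-place votes. Assumption: any remaining tie
        # is broken alphabetically, purely to keep the sketch deterministic.
        loser = max(sorted(tied), key=lambda p: lasts[p])
        remaining.remove(loser)


# Hypothetical ballots for a 10-member committee (majority = 6).
ballots = (
    [["A", "B", "C", "D"]] * 4
    + [["B", "A", "C", "D"]] * 3
    + [["C", "B", "A", "D"]] * 2
    + [["D", "B", "C", "A"]] * 1
)
winner = irv_winner(ballots)
print(winner)  # B
```

In this made-up example no program has 6 first-place votes at the start, so D is eliminated first, then C, and B reaches a majority once those ballots are redistributed.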

Raters complete new screener: (whether scoring or ranking)

Scoring/ranking proposal:

We will summarize each rater by their 1st, 2nd, 3rd, etc. overall choice, where the ranks are determined by the scores derived from their scoring sheet.

Example: For each curriculum, compute X = the sum of the core scores for the rater, Z = the sum of the ease-of-use scores for the rater, and Y = 0.6X + 0.4Z. Each rater will get one Y for each curriculum, and the order of the Ys determines the order of their preferences.
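A small sketch of this calculation for a single rater follows. The scoring-sheet values are made up for illustration, the names `composite` and `rater_sheet` are hypothetical, and the sketch assumes a higher Y means a more preferred curriculum.

```python
CORE_WEIGHT = 0.6
EASE_WEIGHT = 0.4


def composite(core_scores, ease_scores):
    """Y = 0.6 * X + 0.4 * Z, with X and Z the sums of the two score groups."""
    x = sum(core_scores)
    z = sum(ease_scores)
    return CORE_WEIGHT * x + EASE_WEIGHT * z


# Hypothetical sheet for one rater: (core scores, ease-of-use scores).
rater_sheet = {
    "Program A": ([3, 4, 2], [3, 3]),
    "Program B": ([2, 2, 3], [4, 4]),
    "Program C": ([4, 4, 3], [2, 3]),
}

y = {prog: composite(core, ease) for prog, (core, ease) in rater_sheet.items()}

# Assumption: the highest Y is the rater's first choice.
preference_order = sorted(y, key=y.get, reverse=True)
print(preference_order)  # ['Program C', 'Program A', 'Program B']
```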

Properties: If we use ranks instead of scores, then no individual scorer can exert disproportionate influence on the result. A ranking system does not downgrade the influence of any individual scorer; it upgrades the influence of all scorers to the same level.

[Option 2: Same as above, but instead of scoring each criterion we rank it, and combine the ranks using the same procedure. Arguments against this are summarized below.]

Process proposal: How we use this to come up with a final result.

* Meet and discuss community input, then reveal the rankings; have an open discussion of the options, allowing the community and each other to sway each of us to revise our scores.

* Allow people to revise their screener (maybe allowing them to provide a sentence or two about what it is that convinced them to change it).

* Retabulate the rankings and display the number of 1st choices per option.

* Eliminate the one with a) the fewest first-place votes (ties broken by fewest second-place votes), or b) the most last-place votes.

* Repeat until only one remains.

Conclusions

We are sure:

That each committee member will ultimately produce a ranking derived from their new scoring worksheets, and that this is how we will consolidate everyone's input (using something in the genus of IRV) and determine a top 1 or 2.

Because (we have a whole PowerPoint full of reasons)

That we will run a first pass, meet to discuss (including the community), and revise our scores.

Because discussion will make sure everyone is informed and provide an opportunity to involve community feedback.

We will all be permitted to revise our scores based on this ranking prior to a retabulation of the final ranks for each rater.

We think probably:

That each individual screener's ranking will be based on a total score output by our screener

because this makes everyone put in the effort

Alternatively: each criterion is ranked by every screener

but we don't like this because it is a lot of work and even more tabulation, it opens up far more decisions on how to add up ranks to achieve the final overall ranks, and it could also appear to outside people that we are just picking our favorites rather than carefully considering the criteria.

That each individual screener will have the opportunity to subjectively break ties on their ranking

This is vulnerable to slimy dealings, but these are easy to detect.

But we are not going to deal slimily

Any other way will be less neat

That after we run the IRV algorithm and come up with a top 2, we will meet to discuss and decide between these.

Because it's only a few more hours and potentially useful with a binary choice

That we will not meet to discuss a third time

Because this will take a lot of time and will probably not change opinion much

Something in the genus of IRV:

Does not really matter what

Proposal: eliminate the program with the fewest top votes; as a tiebreaker, eliminate whichever has more last-place votes

Retally and repeat

Example of report:

| | A | B | C | D |
|---|---|---|---|---|
| first | 9 | 9 | 5 | 1 |
| second | 3 | 7 | 2 | 11 |
| third | 6 | 4 | 11 | 4 |
| fourth | 4 | 8 | 6 | 7 |

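| | 1 | 2 | 4 | 5 | 7 | 8 | 9 | 10 | 11 | 12 | 13 | 14 | 15 | 18 | 20 | 21 | 22 | 23 | 24 | 25 |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| mymath.csv.core | 3 | 1 | 1 | 1 | 1.0 | 4 | 3 | 4 | 3 | 1 | 2 | 4 | 3 | 3 | 3 | 2 | 2 | 2.5 | 4 | 2.5 |
| env.csv.core | 3 | 2 | 3 | 2 | 2.0 | 2 | 2 | 1 | 1 | 3 | 1 | 2 | 2 | 2 | 2 | 4 | 1 | 1.0 | 3 | 2.5 |
| go.csv.core | 3 | 3 | 4 | 3 | 3.5 | 1 | 1 | 3 | 4 | 2 | 4 | 3 | 1 | 1 | 4 | 1 | 4 | 2.5 | 2 | 4.0 |
| mif.csv.core | 1 | 4 | 2 | 4 | 3.5 | 3 | 4 | 2 | 2 | 4 | 3 | 1 | 4 | 4 | 1 | 3 | 3 | 4.0 | 1 | 1.0 |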
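The fractional entries in the data above (2.5, 3.5) and the column reading 3, 3, 3, 1 look like tied ranks that were averaged: two programs tied for 3rd and 4th each get 3.5, and three programs tied for 2nd through 4th each get 3. That reading is an assumption, not something stated in the proposal. Under that assumption, a sketch of average ranking in Python might look like this; the function name `average_ranks` and the scores are hypothetical, and the highest score is taken as Rank 1.

```python
def average_ranks(scores):
    """Rank by score (highest = rank 1); tied programs share the mean of their positions."""
    ordered = sorted(scores, key=scores.get, reverse=True)
    ranks = {}
    i = 0
    while i < len(ordered):
        # Extend j to cover every program tied with the one at position i.
        j = i
        while j + 1 < len(ordered) and scores[ordered[j + 1]] == scores[ordered[i]]:
            j += 1
        shared = (i + 1 + j + 1) / 2  # mean of 1-based positions i+1 .. j+1
        for program in ordered[i:j + 1]:
            ranks[program] = shared
        i = j + 1
    return ranks


# Hypothetical scores for one rater; "go" and "mif" are tied for 3rd and 4th.
example = {"mymath": 3.0, "env": 2.5, "go": 2.0, "mif": 2.0}
tied_ranks = average_ranks(example)
print(tied_ranks)
# {'mymath': 1.0, 'env': 2.0, 'go': 3.5, 'mif': 3.5}
```

Averaged ranks would need an agreed rule before being fed into the elimination rounds, since a 2.5 is neither a first nor a second choice; letting each screener break their own ties avoids the question.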
