Assessment Design: A Step Towards Interoperability

ROBERTO BARCHINO, LUIS DE MARCOS, JOSÉ MARÍA GUTIÉRREZ, SALVADOR OTÓN, LOURDES JIMÉNEZ, JOSÉ ANTONIO GUTIÉRREZ, JOSÉ RAMÓN HILERA, JOSÉ JAVIER MARTÍNEZ

Computer Science Department, University of Alcala, Madrid, Spain

Received 12 July 2007; accepted 6 April 2009

ABSTRACT: Assessment is a basic element for successfully completing any formative action in e-learning systems. In this article we present a generic, flexible, interoperable and reusable language for designing assessments, and its implementation in the EDVI Learning Management System. The language is based on XML and is defined with XML Schema. We also describe the language attributes required to set the assessment plan, which are based on our practical experience in e-learning courses. The main purpose of this language is to make the assessment design interoperable among systems in a simple and efficient way. An adequate implementation will also reduce the time required to configure assessment activities, simplifying and automating them. © 2009 Wiley Periodicals, Inc. Comput Appl Eng Educ 19: 770–776, 2011; View this article online at wileyonlinelibrary.com/journal/cae; DOI 10.1002/cae.20363

Keywords: assessment; e-learning; interoperability

INTRODUCTION

Information Technologies are widespread in our society, and their influence on the learning process is currently very important. This influence has led to the rise of new, specific techniques for teaching and learning, known as e-learning. The key technology in e-learning is the Internet, which is used to perform most of the activities of the learning process, as proposed in the Learning Technology System Architecture (LTSA) from the Learning Technology Standards Committee of IEEE [1]. Figure 1 shows a diagram including the main elements, relations and actions of the LTSA architecture.

This architecture defines the basic entities found in e-learning systems: learners, teachers, contents and assessments. It defines assessment as the evaluation reached by the learner, that is, the degree to which knowledge and abilities have been assimilated. This knowledge and these abilities must be established a priori, and the level achieved must be assessed through evaluation tools.

There are several modelling languages for e-learning systems. These languages offer semantic notations for creating reusable learning objects. Most of them focus on creating tools with which teachers design the whole process. The Learning Technologies Standards Observatory of the European Committee for Standardization [2] presents the most important of these languages:
* OUNL EML: Open University of the Netherlands Educational Modelling Languages. http://learningnetworks.org/.
* IMS Learning Design. http://www.imsglobal.org/learningdesign/.
* PALO Language. http://sensei.lsi.uned.es/palo/.
* CDF: ARIADNE Curriculum (or course) description format. http://www.ariadne-eu.org/.
* LMML: Learning Material Markup Language. http://www.lmml.de.
* TARGETEAM. http://www11.in.tum.de/forschung/projekte/.
* TML/Netquest. http://www.ilrt.bris.ac.uk/netquest/.

As these languages focus on the whole learning process, many characteristics of the assessment process remain undefined. For assessment through the Internet, the same set of activities defined for Computer-Assisted Assessment (CAA) can be applied:
* Question presentation.
* Answer check.
* Score.
* Examination interpretation and analysis.

It can therefore be said that CAA reinforces the classic evaluation process, applying it as it is with Information Technologies as support. The same statement holds for Internet assessment systems, as they are a kind of CAA. To help in the creation of the assessment process, the IMS Global Learning Consortium, Inc. proposes the most relevant specification, QTI (Question & Test Interoperability) [3]. QTI defines a model to represent question and test data and their corresponding results. Reuse of the data is supported by the definition of an XML-based [4] language to represent it. However, the QTI specification focuses on a low-level, detailed part of the process: it is only useful for representing the data involved in the assessment, not the process itself. In addition to the IMS QTI specification, other institutions carry out research in the assessment field:
* TENCompetence Assessment Model. http://www.tencompetence.org/.
* OUNL's assessment model. http://dspace.ou.nl/handle/1820/558/.
* FREMA. http://www.frema.ecs.soton.ac.uk/.

Correspondence to R. Barchino (roberto.barchino@uah.es).
Contract grant sponsor: MITyC; Contract grant numbers: FIT-350200-2007-6, FIT-350101-2007-9, PAV-070000-2007-103. Contract grant sponsor: MEC; Contract grant number: CIT-410000-2007-5. Contract grant sponsor: JCCM; Contract grant number: EM2007-004.

To fill the gap between the global definition of a learning process and the detailed specification of the evaluation contents, and to make it possible to model the assessment process as a single reusable piece, we propose a new language. Figure 2 represents the relation between the assessment configuration, based on assessment attributes expressed in our language, and the lower-level QTI elements.

ASSESSMENT DESIGN

To achieve an effective assessment design and to allow its reuse in further learning processes, the proposed language must be abstract enough. Looking at these two concepts in more depth:
* Reusable: The capability of being reused in different learning processes without any change or adjustment (if the configuration fits the specific needs).
* Effective: The capability of the modelled assessment to represent the established objectives and to obtain from learners their degree of achievement.

To establish the real characteristics of a learning process, we studied several processes carried out in the company Sunion Formación y Tecnología S.A. (footnote 1) as part of its on-line learning program for its workers during 2006 and 2007. We have also carried out related research in collaboration with this organisation (footnotes 2, 3 and 4).

From this study, four assessment types were found in every learning process:
* Initial Evaluation: Carried out before the course actually begins. It aims to determine the learners' initial level of knowledge of the course subject.
* Final Evaluation: Carried out after the end of the course. Its objective is to check whether the learners have reached the desired level of knowledge.
* Module Evaluation: Carried out at the end of every chapter of the course. This test can support continuous evaluation, or it can help the learners check their own progress.
* Free Evaluation: Without a time limit. This type of evaluation allows the learners to check their progress and the level of knowledge reached. They can also identify problems and ask for help if needed.

The attributes needed to represent the characteristics of each of these types of evaluation were also identified.

Assessment Attributes

The analysis produced a group of parameters that the learning manager can use to program the activities. It was based on Item Response Theory (IRT) and the Computerized Adaptive Test (CAT) [5]. IRT is based on the probability that a student answers a question correctly. CATs are exams managed by a computer tool in which the choice of the next question to present and the decision to end the test are made dynamically and automatically, based on an estimate of the student's knowledge level [6].
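The article does not say which IRT model or which question-selection rule was used. Purely as an illustration, the sketch below assumes the two-parameter logistic model, a common IRT formulation, and a simple adaptive rule that picks the unasked question whose difficulty is closest to the current ability estimate. The function names and the selection rule are assumptions, not part of EDVI.

```python
import math
from typing import Dict, Set


def p_correct(ability: float, difficulty: float, discrimination: float = 1.0) -> float:
    """Two-parameter logistic model: probability of a right answer (assumed model)."""
    return 1.0 / (1.0 + math.exp(-discrimination * (ability - difficulty)))


def next_question(ability: float, difficulties: Dict[str, float], asked: Set[str]) -> str:
    """Pick the unasked question whose difficulty is closest to the ability estimate."""
    candidates = {q: d for q, d in difficulties.items() if q not in asked}
    return min(candidates, key=lambda q: abs(candidates[q] - ability))
```

Under this model a student whose ability equals the difficulty of a question has an even chance of answering it correctly, which is why questions near the current estimate are often preferred in adaptive tests.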

1 Company belonging to Gesfor Group and with experience in e-learning initiatives. http://www.sunion.es/
2 Research supported by the Dep. of Industry, Tourism and Commerce of Spain. FIT-350200-2007-6
3 Research supported by the Dep. of Industry, Tourism and Commerce of Spain. FIT-350101-2007-9
4 Research supported by the Dep. of Industry, Tourism and Commerce of Spain. PAV-070000-2007-103

Figure 1 Learning Technology System Architecture.

Figure 2 Abstraction level.

These attributes are:
* Type of exam. Establishes the general characteristics of the exam, such as direct or random selection of questions.
* Number of answers for each question. Identifies the total number of answers offered for each question. Every question in the exam uses the same number of answers.
* Number of correct answers for each question. This global attribute sets the number of right answers, which allows single- or multiple-choice tests to be created.
* Number of questions per exam.
* Maximum result. Associated with the best combination of answers.
* Minimum result. Associated with the worst combination of answers.
* Needed percentage for success. The percentage of correct answers required to pass, as set by the learning manager.
* Duration. The time during which the exam will be available in the Learning Management System (LMS).
* Relevant. Identifies whether the results of the exam are used to compute the final marks of the course.
* Mandatory. Establishes whether or not the exam must be completed in order to pass the whole course.
* Number of repeats. This number can be configured by the learning manager.
* Exam can be continued. With this option the learning manager can establish whether the exam can be answered over different sessions.
* Correct answers shown. Configures whether the students will have access to the correct answers for the exam.
* Evaluation weight. Lets the manager establish the weight of each exam in the learning process.
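The attribute set above can be pictured as a single record per exam. The following is a minimal sketch, assuming hypothetical field names derived from the list and a few sanity checks that the article does not state explicitly (for example, that the number of correct answers cannot exceed the number of offered answers). It is not the EDVI data model.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class ExamSettings:
    """One exam configuration; field names are illustrative, not EDVI's."""
    type_of_exam: str                  # e.g. "direct" or "random"
    answers_per_question: int
    correct_answers_per_question: int
    questions_per_exam: int
    maximum_result: int
    minimum_result: int
    pass_percentage: int
    duration: int = 0                  # unit is not specified in the article
    relevant: bool = False
    mandatory: bool = False
    repeats: int = 0
    can_be_continued: bool = False
    show_correct_answers: bool = False
    weight: int = 0

    def problems(self) -> List[str]:
        """Return human-readable inconsistencies (assumed rules, see lead-in)."""
        found = []
        if self.correct_answers_per_question > self.answers_per_question:
            found.append("more correct answers than offered answers")
        if self.minimum_result > self.maximum_result:
            found.append("minimum result exceeds maximum result")
        if not 0 <= self.pass_percentage <= 100:
            found.append("pass percentage outside 0..100")
        if self.repeats < 0:
            found.append("negative number of repeats")
        return found
```

A configuration interface could run such checks before saving, so that an inconsistent plan is reported to the learning manager rather than stored.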

Once the manager has configured the platform using these attributes, the EDVI LMS [7–9] carries out the activities needed to implement the described assessment. The final interface used to set the attributes is shown in Figure 3 (in Spanish).

The attributes do not identify the type of question (numerical, calculated, matching, etc.) or the use of multimedia objects (pictures, graphics, diagrams, audio, video streams, etc.), because the QTI specification already provides for these. A repository of questions in QTI format is therefore necessary, so that these attributes can filter the questions that the teacher considers valid for the exam.

LANGUAGE DEFINITION

The language is defined as an XML dialect. One main objective in defining the language has been to allow the LMS to import and export assessment configurations. These configurations are intended to be compatible with SCORM [10] content packaging, so that they can be included inside the SCORM ZIP package. The other main objective of the language is the reuse of definitions: the same assessment configuration can be used in several learning processes, related or not, and in future editions of the same process.

Figure 3 Configuration assessment interface.


There are two ways to define an XML dialect: XML Schema (footnote 5) and DTDs (footnote 6). The language is defined using XML Schema because of its richness of data types. The following file represents the dialect proposed for making the assessment definitions.

The root element of the document tree is assessment, a complex type composed of up to four test elements. Figure 4 shows the composition of the assessment model.

5 XML Schema allows the elements and structure of XML documents to be defined using an XML dialect, with a wide variety of data types.
6 The Document Type Definition allows the contents and structure of XML documents to be defined using a specific syntax, with a limited and simple set of data types.

<?xml version="1.0" encoding="UTF-8"?>
<!--
    Document: CAML.xsd
    Date: 27-10-2006, 12:40
    Author: Roberto Barchino
    Description:
        Language for representing educational assessments composed of various types of tests and their attributes.
    version: 2
-->
<xsd:schema xmlns:xsd="http://www.w3.org/2001/XMLSchema"
            xmlns:ns="http://cc.uah.es/barchi/CAML" xmlns="http://cc.uah.es/barchi/CAML"
            targetNamespace="http://cc.uah.es/barchi/CAML" elementFormDefault="qualified">
    <!-- ######################################################### -->
    <!-- root element -->
    <xsd:element name="assessment">
        <xsd:complexType>
            <xsd:sequence>
                <xsd:element name="test" type="reg-test" maxOccurs="4"/>
            </xsd:sequence>
        </xsd:complexType>
    </xsd:element>
    <!-- ########################################################## -->
    <!-- types of elements for testing -->
    <xsd:complexType name="reg-test">
        <xsd:sequence>
            <xsd:element name="questions-number" type="xsd:integer"/>
            <xsd:element name="answers-number" type="xsd:integer"/>
            <xsd:element name="correct-answers-number" type="xsd:integer"/>
            <xsd:element name="maximum-qualification" type="xsd:integer"/>
            <xsd:element name="minimum-qualification" type="xsd:integer"/>
            <xsd:element name="percentage-pass" type="xsd:integer"/>
            <xsd:element name="times-number" type="xsd:integer"/>
            <xsd:element name="weight-test" type="xsd:integer"/>
        </xsd:sequence>
        <xsd:attribute name="test-sched" type="type_sched" use="required"/>
        <xsd:attribute name="score" type="type_yes-no" use="optional" default="no"/>
        <xsd:attribute name="mandatory" type="type_yes-no" use="optional" default="no"/>
        <xsd:attribute name="session" type="type_yes-no" use="optional" default="no"/>
        <xsd:attribute name="visible-answers" type="type_yes-no" use="optional" default="no"/>
    </xsd:complexType>
    <!-- ########################################################## -->
    <!-- test for the elements for testing -->
    <xsd:simpleType name="type_yes-no">
        <xsd:restriction base="xsd:string">
            <xsd:enumeration value="yes"/>
            <xsd:enumeration value="no"/>
        </xsd:restriction>
    </xsd:simpleType>
    <xsd:simpleType name="type_sched">
        <xsd:restriction base="xsd:string">
            <xsd:enumeration value="initial"/>
            <xsd:enumeration value="final"/>
            <xsd:enumeration value="free"/>
            <xsd:enumeration value="module"/>
        </xsd:restriction>
    </xsd:simpleType>
</xsd:schema>

Figure 4 Assessment composite.


Example

This section includes an example of an assessment configuration written in the proposed language, as defined in the XML Schema. The example is therefore a well-formed and valid XML document. It establishes the characteristics of the four possible types of assessment: initial, module, final and free.

LMS Interoperability

Having defined the language, it is necessary to lay the foundation for achieving interoperability between different LMSs, because the LMSs are the systems that will actually use this tool in the teacher's assessment process.

This language has been incorporated as an option within the EDVI LMS, which can import and export assessment configuration files. Extending this tool to other LMSs requires taking the following issues into account:

* The LMS must be compliant with the QTI standard for defining the questions. A QTI repository to store the questions must also be implemented.
* A database to store the assessment settings (the Assessment Design Repository) must be implemented.
* The XML Schema file defining the configuration of an evaluation must be available.
* Finally, the LMS has to implement the logic necessary to perform the following functions:
  * Generate an XML file with the configuration of an evaluation, that is, a function that obtains the information from the data store and generates XML in the defined language.
  * Check whether an XML file that contains the configuration of an evaluation is valid or not (Fig. 5).
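The two functions listed above can be sketched with the Python standard library. This is a minimal illustration, not the EDVI implementation: the function names, the dictionary layout of the stored settings and the hand-written structural checks are assumptions. The checks mirror the element names, ordering and enumerations of the schema but are not full XML Schema validation, which would require a schema-aware validator.

```python
import xml.etree.ElementTree as ET
from typing import Dict, List

NS = "http://cc.uah.es/barchi/CAML"
CHILDREN = ["questions-number", "answers-number", "correct-answers-number",
            "maximum-qualification", "minimum-qualification",
            "percentage-pass", "times-number", "weight-test"]
FLAGS = ["score", "mandatory", "session", "visible-answers"]
SCHEDULES = {"initial", "final", "free", "module"}


def q(name: str) -> str:
    """Qualify a local name with the CAML namespace (ElementTree notation)."""
    return "{%s}%s" % (NS, name)


def generate(tests: List[Dict[str, object]]) -> str:
    """Build a configuration document from stored settings (one dict per test)."""
    ET.register_namespace("", NS)
    root = ET.Element(q("assessment"))
    for t in tests:
        attrs = {"test-sched": str(t["test-sched"])}
        attrs.update({f: str(t[f]) for f in FLAGS if f in t})
        test = ET.SubElement(root, q("test"), attrs)
        for name in CHILDREN:
            ET.SubElement(test, q(name)).text = str(t[name])
    return ET.tostring(root, encoding="unicode")


def validate(xml_text: str) -> List[str]:
    """Return structural problems; an empty list means the checks passed."""
    try:
        root = ET.fromstring(xml_text)
    except ET.ParseError as exc:
        return ["not well-formed: %s" % exc]
    if root.tag != q("assessment"):
        return ["unexpected root element: %s" % root.tag]
    problems = []
    tests = list(root)
    if not 1 <= len(tests) <= 4:
        problems.append("expected 1 to 4 test elements, found %d" % len(tests))
    for i, test in enumerate(tests, start=1):
        if test.tag != q("test"):
            problems.append("child %d is not a test element" % i)
            continue
        if test.get("test-sched") not in SCHEDULES:
            problems.append("test %d: invalid or missing test-sched" % i)
        for flag in FLAGS:
            if test.get(flag, "no") not in ("yes", "no"):
                problems.append("test %d: %s must be yes or no" % (i, flag))
        if [c.tag for c in test] != [q(n) for n in CHILDREN]:
            problems.append("test %d: wrong child elements or order" % i)
            continue
        for c in test:
            try:
                int((c.text or "").strip())
            except ValueError:
                problems.append("test %d: %s is not an integer" % (i, c.tag))
    return problems
```

An importing LMS would call `validate` before storing a received file in its Assessment Design Repository, and an exporting LMS would call `generate` on the stored settings.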

CONCLUSIONS

This article presents a new language to help learning managers build assessment-related tasks in e-learning systems. The proposed language allows the characteristics of assessments to be set through high-level, reusable definitions.

<?xml version="1.0" encoding="UTF-8"?>
<assessment xmlns="http://cc.uah.es/barchi/CAML"
            xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance"
            xsi:schemaLocation="http://cc.uah.es/barchi/CAML CAML.xsd">
    <test test-sched="initial" score="no" mandatory="yes" session="no" visible-answers="no">
        <questions-number>10</questions-number>
        <answers-number>0</answers-number>
        <correct-answers-number>0</correct-answers-number>
        <maximum-qualification>0</maximum-qualification>
        <minimum-qualification>0</minimum-qualification>
        <percentage-pass>0</percentage-pass>
        <times-number>0</times-number>
        <weight-test>0</weight-test>
    </test>
    <test test-sched="module" score="yes" mandatory="yes" session="no" visible-answers="no">
        <questions-number>30</questions-number>
        <answers-number>4</answers-number>
        <correct-answers-number>1</correct-answers-number>
        <maximum-qualification>10</maximum-qualification>
        <minimum-qualification>0</minimum-qualification>
        <percentage-pass>50</percentage-pass>
        <times-number>60</times-number>
        <weight-test>60</weight-test>
    </test>
    <test test-sched="final" score="yes" mandatory="yes" session="no" visible-answers="no">
        <questions-number>50</questions-number>
        <answers-number>6</answers-number>
        <correct-answers-number>2</correct-answers-number>
        <maximum-qualification>10</maximum-qualification>
        <minimum-qualification>0</minimum-qualification>
        <percentage-pass>50</percentage-pass>
        <times-number>0</times-number>
        <weight-test>40</weight-test>
    </test>
    <test test-sched="free" score="no" mandatory="no" session="yes" visible-answers="yes">
        <questions-number>100</questions-number>
        <answers-number>4</answers-number>
        <correct-answers-number>1</correct-answers-number>
        <maximum-qualification>0</maximum-qualification>
        <minimum-qualification>0</minimum-qualification>
        <percentage-pass>0</percentage-pass>
        <times-number>0</times-number>
        <weight-test>0</weight-test>
    </test>
</assessment>
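A configuration like the one above can be read back by an LMS to drive its grading. The sketch below is an illustration under two assumptions that the article does not spell out: that weight-test is a relative weight, and that only tests with score set to yes count towards the course mark. The function name, the abbreviated sample document and the marks passed in are hypothetical.

```python
import xml.etree.ElementTree as ET
from typing import Dict

NS = {"c": "http://cc.uah.es/barchi/CAML"}

# Abbreviated sample with the same structure as the example configuration.
SAMPLE = """<assessment xmlns="http://cc.uah.es/barchi/CAML">
  <test test-sched="module" score="yes" mandatory="yes">
    <questions-number>30</questions-number>
    <answers-number>4</answers-number>
    <correct-answers-number>1</correct-answers-number>
    <maximum-qualification>10</maximum-qualification>
    <minimum-qualification>0</minimum-qualification>
    <percentage-pass>50</percentage-pass>
    <times-number>60</times-number>
    <weight-test>60</weight-test>
  </test>
  <test test-sched="final" score="yes" mandatory="yes">
    <questions-number>50</questions-number>
    <answers-number>6</answers-number>
    <correct-answers-number>2</correct-answers-number>
    <maximum-qualification>10</maximum-qualification>
    <minimum-qualification>0</minimum-qualification>
    <percentage-pass>50</percentage-pass>
    <times-number>0</times-number>
    <weight-test>40</weight-test>
  </test>
  <test test-sched="free" score="no" session="yes" visible-answers="yes">
    <questions-number>100</questions-number>
    <answers-number>4</answers-number>
    <correct-answers-number>1</correct-answers-number>
    <maximum-qualification>0</maximum-qualification>
    <minimum-qualification>0</minimum-qualification>
    <percentage-pass>0</percentage-pass>
    <times-number>0</times-number>
    <weight-test>0</weight-test>
  </test>
</assessment>"""


def course_mark(xml_text: str, marks: Dict[str, float]) -> float:
    """Weighted mean of the marks of the tests flagged score="yes" (assumed rule)."""
    root = ET.fromstring(xml_text)
    total = 0.0
    weights = 0
    for test in root.findall("c:test", NS):
        if test.get("score", "no") != "yes":
            continue  # this test does not count towards the course mark
        weight = int(test.findtext("c:weight-test", namespaces=NS))
        total += marks[test.get("test-sched")] * weight
        weights += weight
    return total / weights if weights else 0.0
```

With the weights of the example (60 for the module test and 40 for the final test), a learner who obtains 8 and 5 out of 10 would receive (8 × 60 + 5 × 40) / 100 = 6.8 under these assumptions.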


This language has been designed and implemented taking into account the results of studies made by Sunion (a training company), which carried out several surveys of its students using EDVI as the Learning Management System (LMS). It can be extended to other LMSs such as Moodle or Claroline.

We have also described the main results of the assessment design. The first is the time saved by learning managers, which can be devoted to other teaching activities. The second is that students know the assessment process from the outset, as it is fixed in a previously defined configuration file. Students value this highly because they know the relevance of each activity. This knowledge helps to decrease the number of dropouts and to increase the students' motivation.

Currently this language is being used in the internal courses of the Computer Science Department of the University of Alcala. The second planned stage includes its use in every learning process performed by this Department.

ACKNOWLEDGMENTS

The authors want to thank all members of the research group Information Technologies for the Learning and Knowledge (http://www.cc.uah.es/tifyc) for their support in developing the projects described in this article. MITyC: grants FIT-350200-2007-6, FIT-350101-2007-9 and PAV-070000-2007-103. MEC: grant CIT-410000-2007-5. JCCM: grant EM2007-004.

REFERENCES

[1] Learning Technology System Architecture (LTSA) Home Page. Current LTSA specification, http://edutool.com/ltsa/, 2006.
[2] European Committee for Standardization. Information Society Standardization System. Learning Technologies Standards Observatory. http://www.cen-ltso.net/Users/main.aspx, 2008.
[3] IMS Global Learning Consortium: Question and Test Interoperability Specification, http://www.imsglobal.org/question/index.html, 2009.
[4] XML. Extensible Markup Language (XML) of World Wide Web Consortium. http://www.w3.org/XML/, 2006.
[5] R. Barchino, M. J. Gutiérrez, S. Otón, and L. Jiménez, Experiences in applying mobile technologies in e-learning environment, Int J Eng Educ 23-3 (2007), 454–459.
[6] R. Barchino, Assessment in learning technologies standards, US-China Educ Rev 2 (2005), 31–35.
[7] R. Barchino, J. R. Hilera, E. García, and M. J. Gutiérrez, EDVI: Un Sistema de Apoyo a la Enseñanza Presencial Basado en Internet. VII Jornadas de Enseñanza Universitaria de la Informática (JENUI-2001), Palma de Mallorca, España, 2001.
[8] R. Barchino, L. M. Jiménez, and J. A. Gutiérrez, EDVI Pro 2004: Un Sistema de Apoyo a la Enseñanza Presencial Basado en Internet. 3ra. Conferencia Ibero-Americana en Sistemas, Cibernética e Informática (CISCI2004), Orlando, EEUU, 2004.
[9] R. Barchino, S. Otón, and J. M. Gutiérrez, An Example of Learning Management System. IADIS Virtual Multi Conference on Computer Science and Information Systems (MCCSIS), 2005.
[10] Advanced Distributed Learning (ADL). Sharable Content Object Reference Model (SCORM). http://www.adlnet.org/, 2004.

Figure 5 LMS architecture.


BIOGRAPHIES

Roberto Barchino has a Computer Science Engineering degree from the Polytechnic University of Madrid and a Ph.D. from the University of Alcala. Currently, he is an Associate Professor in the Computer Science Department of the University of Alcala, Madrid, Spain, and a Tutor Professor at the Open University of Spain (UNED). He has been invited by the Dipartimento di Informatica e Automatica, University of Rome Tre, Rome, Italy. He is the author of more than 70 scientific works, some of them directly related to Learning Technology. He is also a member of the group for the standardization of Learning Technology in the Spanish Official Body for Standardization (AENOR).

Luis de Marcos has a BSc (2001) and an MSc (2005) in Computer Science from the University of Alcala, where he is now a researcher and a PhD candidate in the Information, Documentation and Knowledge program. He is currently finishing his doctoral dissertation on intelligent agents for combinatorial optimization. More than 20 publications in relevant conferences and journals back his research activity so far. His research interests include the definition of competencies and learning objectives, adaptable and adaptive systems, learning objects, mobile and ambient learning, intelligent agents, agent systems and bio-inspiration in computer science.

José M. Gutiérrez has been a professor at the University of Alcala since 1998. He currently works on the possibilities for integrating mobile devices in information systems; he has presented his research results at congresses and has produced a book about mobile phone programming. His previous work deals with the representation of information, mainly using XML dialects, and with the possibilities of automating systems by representing their semantics through this language. For two years he has worked on several projects supporting information systems development in institutions and universities of various Ibero-American countries. Since 2003 he has worked on more than 8 publicly funded research projects and has directed one of them, concerning e-tourism. He also participates in many e-learning activities, ranging from the publication of research results to the creation of new e-learning tools.

Salvador Otón Tortosa has a Computer Science Engineering degree from the University of Murcia (1996) and a PhD from the University of Alcala (2006). Currently, he is a permanent professor in the Computer Science Department of the University of Alcala. He is author or co-author of more than 60 scientific works (books, articles, papers and research projects), some of them directly related to Learning Technology. His research interests focus on learning objects and e-learning standardization, mainly distributed learning object repositories and interoperability.

María Lourdes Jiménez was born in Madrid, Spain, in 1973. She received a five-year degree in Mathematics from the Complutense University of Madrid. She has been working at the University of Alcala in the Computer Science Department since May 2002. Since 2004, she has been a virtual tutor at the Open University of Spain (UNED). She obtained a Ph.D. in Computer Science from the University of Alcala in 2006. She has been invited to the Università degli Studi Roma Tre in Rome, Italy, to study ontologies for knowledge representation with the aim of designing and implementing Expert Systems. Recently she has supervised several theses.

José Antonio Gutiérrez de Mesa obtained a university degree in Computer Science from the Polytechnic University of Madrid, a degree in Documentation from the University of Alcala, a degree in Mathematics from the Complutense University of Madrid and a PhD from the Mathematics Department of the University of Alcala. From September 1992 to April 1994 he worked as a lecturer in the Mathematics Department of the University of Alcala, before joining the Computer Science Department of the University of Alcala as a permanent professor. From 1998 to 2001 he was the head of Computer Services at the University of Alcala, and since then he has worked as a full-time teacher. Currently, he is also the chief of innovation programs at the University of Alcala. His research interests mainly focus on topics related to software engineering and knowledge representation, and he supervises several PhD works in these areas.

José Ramón Hilera received a PhD from the Computer Science Department of the University of Alcala (1997). He has been a permanent professor at the University of Alcala since 1992. His research interests mainly focus on topics related to Web engineering, e-learning and knowledge representation. He was a member of the sub-committee "Information technology for learning, education and training" and of the Working Group "Quality of virtual education" in the Spanish Association for Standardization and Certification (AENOR), a member organization of ISO. He has participated in the elaboration of the Spanish standard UNE 66181, "Quality management. Quality of virtual education", published in 2008.

José Javier Martínez Herraiz obtained a university degree in Computer Science from the Technical University of Madrid and a PhD from the University of Alcala (2004). Between 1988 and 1999, he worked for private telecommunication companies as an analyst, project manager and consultant. Since 1994, he has been a professor in the Department of Computer Science at the University of Alcala, Madrid. He has practical work experience in software process technology and modeling, methodologies for planning and managing software projects, software maintenance and international ERP projects. He is currently working on e-learning technology and is Director of the Computer Science Department.

776 BARCHINO ET AL.

You might also like

- Group Project Software Management: A Guide for University Students and InstructorsFrom EverandGroup Project Software Management: A Guide for University Students and InstructorsNo ratings yet

- Developing Advanced Windows Store Apps - II - INTL PDFDocument175 pagesDeveloping Advanced Windows Store Apps - II - INTL PDFIl Louis0% (1)

- QTI PlayerDocument8 pagesQTI PlayerKiran Kumar PNo ratings yet

- Advance Windows Store Apps Development - I - v1.0 - INTLDocument206 pagesAdvance Windows Store Apps Development - I - v1.0 - INTLVi VũNo ratings yet

- Online E-Learning Portal System Synopsis - RemovedDocument6 pagesOnline E-Learning Portal System Synopsis - RemovedSanjay H MNo ratings yet

- Testing Objectives for e-Learning Interoperability & ConformanceDocument19 pagesTesting Objectives for e-Learning Interoperability & ConformanceAmanuel KassaNo ratings yet

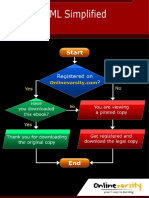

- XML SimplifiedDocument266 pagesXML SimplifiedstephanrazaNo ratings yet

- BSC Final Year Project-Teachers CBT Testing Centre ApplicationDocument128 pagesBSC Final Year Project-Teachers CBT Testing Centre ApplicationRennie Ramlochan100% (1)

- Employee Training Management Portal DesignDocument15 pagesEmployee Training Management Portal Designsuresh pNo ratings yet

- E-Learning Model For Assessment: Roberto Barchino, José M. Gutiérrez, Salvador OtónDocument5 pagesE-Learning Model For Assessment: Roberto Barchino, José M. Gutiérrez, Salvador OtónScott BellNo ratings yet

- Systems Analysis & Design ML GUIDEDocument11 pagesSystems Analysis & Design ML GUIDEIan Dela Cruz OguinNo ratings yet

- A Quantitative Assessment Method For Simulation-Based E-LearningsDocument10 pagesA Quantitative Assessment Method For Simulation-Based E-LearningsagssugaNo ratings yet

- The Instructional Design Maturity Model Approach For Developing Online CoursesDocument12 pagesThe Instructional Design Maturity Model Approach For Developing Online CoursesiPal Interactive Learning Inc100% (1)

- XML SimplifiedDocument266 pagesXML SimplifiedHồThanhDanh100% (1)

- Elearning Evaluation ArticleDocument11 pagesElearning Evaluation ArticleUke RalmugizNo ratings yet

- Lo 3Document10 pagesLo 3abe israeldilbatoNo ratings yet

- Topic Report Ec 303Document3 pagesTopic Report Ec 303Reyvie GalanzaNo ratings yet

- An Insight To Object Analysis and Design - INTLDocument168 pagesAn Insight To Object Analysis and Design - INTLFaheem AhmedNo ratings yet

- B-Learning Quality Dimensions, Criteria and Pedagogical ApproachDocument18 pagesB-Learning Quality Dimensions, Criteria and Pedagogical ApproachPaulaPeresAlmeidaNo ratings yet

- Importance of Online Assessment in The Elearning ProcessDocument6 pagesImportance of Online Assessment in The Elearning Processthanhtrang23No ratings yet

- XML SimplifiedDocument259 pagesXML SimplifiedGiang Phan100% (1)

- Tzeng2007 PDFDocument17 pagesTzeng2007 PDFLinda PertiwiNo ratings yet

- The Improvement of Learning Effectiveness in The Lesson Study by Using E-RubricDocument9 pagesThe Improvement of Learning Effectiveness in The Lesson Study by Using E-RubricAlim SumarnoNo ratings yet

- Rationale: OPTIC - Observation Protocol For Technology Integration in The Classroom User GuideDocument8 pagesRationale: OPTIC - Observation Protocol For Technology Integration in The Classroom User GuidehemithikeNo ratings yet

- Midterm Exam 3rd Tri 2019-2020Document5 pagesMidterm Exam 3rd Tri 2019-2020tsinitongmaestroNo ratings yet

- The Value of Computer-Based Tools For Skills AssessmentsDocument9 pagesThe Value of Computer-Based Tools For Skills AssessmentsnjennsNo ratings yet

- Elearning PDFDocument17 pagesElearning PDFAmit AgarwalNo ratings yet

- Ejel Volume5 Issue1 Article30Document10 pagesEjel Volume5 Issue1 Article30Syaepudin At-TanaryNo ratings yet

- Distributed Programming in Java - NEWDocument473 pagesDistributed Programming in Java - NEWGiang PhanNo ratings yet

- SEN MICROPROJECT (3)Document14 pagesSEN MICROPROJECT (3)manasajaysingh06No ratings yet

- VIII. Instructional TechnologyDocument3 pagesVIII. Instructional TechnologyAubrey Nativity Ostulano YangzonNo ratings yet

- Blackburn Assignment3Document11 pagesBlackburn Assignment3api-280084079No ratings yet

- School Management Systems ProjectDocument19 pagesSchool Management Systems ProjectWesley Chuck Anaglate100% (3)

- Senior Secondary in Nigeria Result Processing SystemDocument7 pagesSenior Secondary in Nigeria Result Processing SystemPeter Oluwasheyi Oluokun (DCompiler)No ratings yet

- 31725H - Unit 6 - Pef - 20210317Document28 pages31725H - Unit 6 - Pef - 20210317hello1737828No ratings yet

- Building Applications in C# TGDocument832 pagesBuilding Applications in C# TGPrince Alu100% (1)

- Windows Store Apps Development-I AptechDocument160 pagesWindows Store Apps Development-I AptechArthasNo ratings yet

- Detcandrews San Expert Appraisal620assignment2Document9 pagesDetcandrews San Expert Appraisal620assignment2api-301972579No ratings yet

- Computerized Teacher Evaluation System ThesisDocument6 pagesComputerized Teacher Evaluation System ThesisAsia Smith100% (2)

- Object-Oriented Programming in Java - InTLDocument524 pagesObject-Oriented Programming in Java - InTLGiang PhanNo ratings yet

- Solution Design SampleDocument18 pagesSolution Design SamplechakrapanidusettiNo ratings yet

- Usability e LearningDocument10 pagesUsability e LearningMohamed GuettafNo ratings yet

- Open Sky IeltsDocument11 pagesOpen Sky IeltsRanveerNo ratings yet

- E-Learning System for Philippine LiteratureDocument9 pagesE-Learning System for Philippine LiteratureDan Lhery Susano GregoriousNo ratings yet

- CT071 3 M Oosse 2Document33 pagesCT071 3 M Oosse 2Dipesh GurungNo ratings yet

- TRM 201Document7 pagesTRM 201mhobodpNo ratings yet

- Laguna University: Board Exam Reviewer For Teacher With Item AnalysisDocument23 pagesLaguna University: Board Exam Reviewer For Teacher With Item AnalysisDarcy HaliliNo ratings yet

- The Quis Requirement Specification of A Next Generation E-Learning SystemDocument6 pagesThe Quis Requirement Specification of A Next Generation E-Learning Systemanurag064No ratings yet

- 2 Software Lesson IdeaDocument1 page2 Software Lesson Ideaapi-245723026No ratings yet

- Educational Evaluation Lesson 3 - Cipp ModelDocument5 pagesEducational Evaluation Lesson 3 - Cipp ModelMarvinbautistaNo ratings yet

- p80 Ardito PDFDocument5 pagesp80 Ardito PDFEvelin SoaresNo ratings yet

- Web Component Development Using Java - INTLDocument404 pagesWeb Component Development Using Java - INTLNguyễn Đức TùngNo ratings yet

- Chapter - 1Document56 pagesChapter - 1Vinayaga MoorthyNo ratings yet

- Sat Manual 04jun04Document429 pagesSat Manual 04jun04anon-504116100% (5)

- Guidelines for academic program assessmentDocument50 pagesGuidelines for academic program assessmentHinaNo ratings yet

- Subject: It Capstone Project: University of Education Lahore, D.G.Khan CampusDocument18 pagesSubject: It Capstone Project: University of Education Lahore, D.G.Khan CampusIT LAb 2No ratings yet

- Course RequirementsDocument35 pagesCourse RequirementsElizabethNo ratings yet

- What Is E-Assessment?: Abdur Rehman B.Ed ViiDocument22 pagesWhat Is E-Assessment?: Abdur Rehman B.Ed ViiAbdur RehmanNo ratings yet

- Securing Web Applications DDocument118 pagesSecuring Web Applications DTri LuongNo ratings yet
