A novel voice verification system using wavelets

Abstract:

This paper presents a novel voice verification system using wavelet transforms. Conventional signal processing techniques assume the signal to be stationary and are ineffective in recognizing non-stationary signals such as voice signals. Voice signals, which are more dynamic, can be analyzed with far better accuracy using the wavelet transform. The developed voice recognition system is a word-dependent voice verification system combining RASTA and LPC. The voice signal is filtered using a special-purpose voice signal filter based on the Relative Spectral Algorithm (RASTA). The signals are denoised and decomposed to derive the wavelet coefficients, on which a statistical computation is carried out. Further, the formants, or resonances, of the voice signal are detected using Linear Predictive Coding (LPC). With the statistical computation on the coefficients alone, the accuracy of verifying an individual's sample voice against his own voice is quite high (around 75% to 80%). The reliability of the verification is strengthened by combining evidence from these two completely different aspects of the individual voice. For voice comparison purposes, four out of five individuals are verified, and the results show a higher percentage of accuracy. The accuracy of the system can be improved by incorporating advanced pattern recognition techniques such as the Hidden Markov Model (HMM).

I. INTRODUCTION

The audio signal, especially the voice signal, is becoming a major part of humans' daily lives. The basic function of the voice signal is as one of the major tools for communication. However, due to technological advancement, the voice signal is further processed using software applications, and the voice signal information is utilized in various application systems. The fundamental idea of this project is to use wavelets as a means of extracting features from a voice signal. The wavelet technique is considered relatively new in the field of signal processing compared to other methods currently employed in this field. Current methods include the Fourier Transform and the Short-Time Fourier Transform (STFT) [1] [2]. However, severe limitations of both the Fourier Transform and the STFT make them ineffective in analyzing complex and dynamic signals such as the voice signal [3][4]. To overcome the shortcomings of both these common signal processing methods, the wavelet signal processing technique is used. The wavelet technique extracts the features in the voice signal by processing data at different scales. It manipulates the scales to give a higher correlation in detecting the various frequency components in the signal. These features are then further processed in order to construct the voice recognition system. Extracting the features of the voice signal does not limit the capabilities of this technique to a particular application alone; it opens the door to a wide range of possibilities, as different applications can benefit from the extracted voice features. Speech recognition systems, speech-to-text translators, and voice-based security systems are some of the future systems that can be developed.

II. WAVELET TRANSFORM

Wavelets are mathematical functions that satisfy certain requirements [5]. From a mathematical point of view, a wavelet is a function that integrates to zero and has a waveform of limited duration. The wavelet is also of finite length, which means that it is compactly supported. Wavelets analyze a signal using different scales. This approach to signal processing is called multi-resolution analysis. The scale is similar to the window function in the STFT; however, the signal is not segmented equally using a fixed window length. Multi-Resolution Analysis (MRA) analyzes the frequency components of the signal at different resolutions. This approach makes particular sense for non-periodic signals such as the voice signal, in which low-frequency components dominate for long durations and high-frequency components appear for short durations [6]. A large scale can be interpreted as a large window: using a large scale to analyze the signal, the gross features of the signal can be obtained. Conversely, a small scale is interpreted as a narrow window and is used to detect the fine details of the signal. This property makes wavelet analysis very powerful and useful in revealing hidden aspects of data. Since the wavelet transform provides a different perspective in analyzing a signal, compression or de-noising can be carried out without much signal degradation. Local features of a signal can be detected with far better accuracy with the wavelet transform.

A. Discrete Wavelet Transform

The Discrete Wavelet Transform (DWT) is a revised version of the Continuous Wavelet Transform (CWT). The DWT compensates for the huge amount of data generated by the CWT. The basic operating principles of the DWT are similar to those of the CWT; however, the scales used by the wavelet and their positions are based upon powers of two. These are called dyadic scales and positions, as the term dyadic stands for the factor of two [9]. As in many real-world applications, most of the important features of a signal lie in the low-frequency section. For voice signals, the low-frequency content is the part of the signal that gives the signal its identity, whereas the high-frequency content gives nuance to the signal, similar to imparting flavor. If the high-frequency content of a voice signal is removed, the voice will sound different but the message can still be heard. This is not true if the low-frequency content is removed: what is being spoken can no longer be heard, and only some random noise remains. The DWT is represented, in its standard dyadic form, using the mathematical equation

$$W(j,k) = \sum_{n} x(n)\,\psi_{j,k}(n)$$

where the discrete wavelet is described as:

$$\psi_{j,k}(n) = 2^{-j/2}\,\psi\left(2^{-j}n - k\right)$$

Here $x(n)$ is the sampled signal, $j$ is the dyadic scale index and $k$ is the position index.

The basic operation of the DWT is that the signal is passed through a series of high-pass and low-pass filters to obtain the high-frequency and low-frequency contents of the signal. The low-frequency contents are called the approximations [10]; they are obtained by using high-scale wavelets, which correspond to low frequencies. The high-frequency components, called the details, are obtained by using low-scale wavelets, which correspond to high frequencies.

Figure 1 DWT Operation

Figure 1 demonstrates single-level filtering using the DWT. First the signal is fed into the wavelet filters, which comprise both a high-pass and a low-pass filter. These filters separate the high-frequency content and the low-frequency content of the signal. With the DWT, the number of samples is then reduced according to the dyadic scale. This process is called sub-sampling, which means reducing the samples by a given factor. Because the CWT requires high processing power [11], the DWT is chosen for its simplicity and ease of operation in handling complex signals such as the voice signal.
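The single-level filtering and sub-sampling described above can be sketched in Python as follows. This is only an illustration under stated assumptions: it uses the Haar wavelet, whose low-pass and high-pass filters have two taps each, because it is the simplest case to write out. It is not the wavelet used by the system, and the function names `haar_dwt` and `wavedec` are hypothetical.

```python
import math


def haar_dwt(signal):
    """Single-level Haar DWT (illustrative assumption, not the paper's wavelet).

    Returns (approximation, detail), each half the length of the input,
    which is the sub-sampling by a factor of two described in the text.
    """
    samples = list(signal)
    if len(samples) % 2:
        samples.append(samples[-1])  # pad odd-length input by repeating the last sample
    scale = 1.0 / math.sqrt(2.0)
    # Low-pass branch: scaled pairwise sums give the approximation.
    approx = [(samples[i] + samples[i + 1]) * scale for i in range(0, len(samples), 2)]
    # High-pass branch: scaled pairwise differences give the detail.
    detail = [(samples[i] - samples[i + 1]) * scale for i in range(0, len(samples), 2)]
    return approx, detail


def wavedec(signal, level):
    """Repeat the single-level step on the approximation `level` times.

    Returns (final_approximation, [detail_level_1, ..., detail_level_n]).
    """
    approx = list(signal)
    details = []
    for _ in range(level):
        approx, detail = haar_dwt(approx)
        details.append(detail)
    return approx, details


if __name__ == "__main__":
    a2, d = wavedec([4.0, 4.0, 2.0, 0.0, 1.0, 3.0, 5.0, 7.0], level=2)
    print("level-2 approximation:", a2)
    print("level-2 detail:", d[1])
```

Each level halves the number of samples, so a level-two decomposition of an 8-sample signal leaves two approximation coefficients and two level-two detail coefficients.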

B. Wavelet Energy

Whenever a signal is decomposed using the wavelet decomposition method, a certain percentage of energy is retained by both the approximation and the detail. This energy can be obtained from the wavelet bookkeeping vector and the wavelet decomposition vector. The energy calculated is a ratio, as it compares the decomposed signal with the original signal.
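As a rough sketch of the energy ratio described above, the following Python example computes the percentage of the original signal energy retained by the approximation and by each detail level. It assumes an orthonormal Haar decomposition (an illustrative choice, not the system's wavelet) and a signal length divisible by 2^level, so that the percentages sum to 100. The names `haar_step` and `energy_percentages` are hypothetical.

```python
import math


def haar_step(samples):
    """One orthonormal Haar analysis step on an even-length list."""
    scale = 1.0 / math.sqrt(2.0)
    approx = [(samples[i] + samples[i + 1]) * scale for i in range(0, len(samples), 2)]
    detail = [(samples[i] - samples[i + 1]) * scale for i in range(0, len(samples), 2)]
    return approx, detail


def energy_percentages(signal, level):
    """Return (approx_percent, [detail_percent per level]).

    Energy is the sum of squared values; each percentage is relative to
    the energy of the original signal.
    """
    if len(signal) % (2 ** level) != 0:
        raise ValueError("signal length must be divisible by 2**level")
    total = sum(v * v for v in signal)
    if total == 0:
        raise ValueError("signal has zero energy")
    approx = list(signal)
    detail_energy = []
    for _ in range(level):
        approx, detail = haar_step(approx)
        detail_energy.append(sum(v * v for v in detail))
    approx_percent = 100.0 * sum(v * v for v in approx) / total
    detail_percent = [100.0 * e / total for e in detail_energy]
    return approx_percent, detail_percent


if __name__ == "__main__":
    ea, ed = energy_percentages([1.0, 2.0, 3.0, 4.0, 5.0, 6.0, 7.0, 8.0], level=2)
    print("approximation energy %:", ea)
    print("detail energy % per level:", ed)
```

For a slowly varying signal such as the ramp in the usage example, almost all of the energy ends up in the approximation, which matches the observation that the low-frequency content carries most of the signal.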

III. VOICE SIGNAL ANALYSIS

A. RASTA (Relative Spectral Algorithm)

RASTA, or the Relative Spectral Algorithm, is a technique developed as the initial stage for voice recognition [13]. The method works by applying a band-pass filter to the energy in each frequency sub-band in order to smooth over short-term noise variations and to remove any constant offset. In voice signals, stationary noises are often detected. Stationary noises are noises that are present for the full period of a signal and do not diminish [14]; their properties do not change over time. The assumption that needs to be made is that the noise varies slowly with respect to speech. This makes RASTA a well-suited tool for the initial stages of voice signal filtering to remove stationary noises [15]. The stationary noises identified are in the frequency range of 1 Hz to 100 Hz.
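The band-pass filtering of a sub-band energy trajectory can be illustrated with the sketch below. It assumes the commonly published RASTA filter shape, with numerator 0.1 * (2 + z^-1 - z^-3 - 2z^-4) and a single pole; the pole value of 0.98 and the function name `rasta_filter` are assumptions, since the filter coefficients are not given in the text. The input is taken to be the (log) energy of one frequency sub-band, one value per frame.

```python
def rasta_filter(trajectory, pole=0.98):
    """Apply a RASTA-style band-pass IIR filter to one sub-band trajectory.

    y[n] = sum_k b[k] * x[n-k] + pole * y[n-1]
    The numerator taps sum to zero, so any constant offset is removed;
    the pole (assumed 0.98 here) smooths short-term variations.
    """
    numer = [0.2, 0.1, 0.0, -0.1, -0.2]
    out = []
    prev_y = 0.0
    for n in range(len(trajectory)):
        # FIR part: only use samples that exist (zero initial conditions).
        fir = sum(c * trajectory[n - k] for k, c in enumerate(numer) if n - k >= 0)
        y = fir + pole * prev_y
        out.append(y)
        prev_y = y
    return out


if __name__ == "__main__":
    # A constant offset (stationary component) decays towards zero.
    constant = [5.0] * 2000
    print("last output for constant input:", rasta_filter(constant)[-1])
```

Because the numerator taps sum to zero, a component that does not change over time is suppressed after the initial transient, which is the behavior the section attributes to RASTA for stationary noise.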

B. Formant Estimation

Formants are one of the major components of speech. The frequencies at which the resonant peaks occur are called the formant frequencies, or simply formants [12]. The formants of a signal can be obtained by analyzing the vocal tract frequency response.

Figure 2 Vocal tract Frequency Response

Figure 2 shows the vocal tract frequency response. The x-axis represents the frequency scale and the y-axis represents the magnitude of the signal. As can be seen, the formants of the signal are labeled F1, F2, F3 and F4. Typically a voice signal contains three to five formants, but in most voice signals up to four formants can be detected. To obtain the formants of the voice signal, the LPC (Linear Predictive Coding) method is used. The name is derived from the term linear prediction, a mathematical operation used on discrete-time signals that estimates future values as a linear function of previous samples:

$$\hat{x}(n) = \sum_{i=1}^{p} a_i\,x(n-i)$$

where $\hat{x}(n)$ is the predicted or estimated value, $x(n-i)$ is the previous value, $a_i$ are the predictor coefficients and $p$ is the prediction order. By expanding this equation:

$$\hat{x}(n) = a_1 x(n-1) + a_2 x(n-2) + \dots + a_p x(n-p)$$

The LPC analyzes the signal by estimating the formants. The effects of the formants are then removed from the speech signal, and the intensity and frequency of the remaining buzz are estimated. Removing the formants from the voice signal eliminates the resonance effect; this process is called inverse filtering, and the signal that remains after the formants have been removed is called the residue. To estimate the formants, the coefficients of the LPC are needed. The coefficients are estimated by minimizing the mean square error between the predicted signal and the original signal. Minimizing this error yields more accurate coefficients, from which the formants of the voice signal are obtained.
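The coefficient estimation step can be sketched as follows. This example assumes the autocorrelation method solved with the Levinson-Durbin recursion, which is one standard way of minimizing the mean square prediction error; the text does not say which solver the system uses, and the function names are hypothetical. Extracting formant frequencies from the coefficients (for example from the roots of the prediction polynomial) is not shown.

```python
def autocorrelation(x, max_lag):
    """r[lag] = sum_n x[n] * x[n - lag] for lag = 0..max_lag."""
    return [sum(x[n] * x[n - lag] for n in range(lag, len(x)))
            for lag in range(max_lag + 1)]


def lpc(x, order):
    """Estimate predictor coefficients a_1..a_p for
    x_hat(n) = sum_i a_i * x(n - i), minimizing the squared error.

    Returns (coefficients, residual_error_energy).
    """
    r = autocorrelation(x, order)
    a = [0.0] * (order + 1)  # a[0] is unused; a[i] multiplies x(n - i)
    err = r[0]
    for i in range(1, order + 1):
        if err <= 0.0:
            break  # signal is perfectly predictable or all zero
        acc = r[i] - sum(a[j] * r[i - j] for j in range(1, i))
        k = acc / err  # reflection coefficient for this order
        new_a = a[:]
        new_a[i] = k
        for j in range(1, i):
            new_a[j] = a[j] - k * a[i - j]
        a = new_a
        err *= (1.0 - k * k)
    return a[1:], err


if __name__ == "__main__":
    # A decaying exponential obeys x(n) = 0.9 * x(n - 1) exactly.
    signal = [0.9 ** n for n in range(200)]
    coeffs, residual = lpc(signal, order=2)
    print("predictor coefficients:", coeffs)
    print("residual energy:", residual)
```

For the test signal in the usage example, the first coefficient comes out close to 0.9 and the second close to zero, since one previous sample is enough to predict the next.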

IV. SYSTEM IMPLEMENTATION

A. Verification System Implementation

To implement the system, the voice signal is decomposed into its approximation and detail. The recognition process is then carried out on the extracted approximation and detail coefficients. The proposed methodology for the recognition phase is statistical calculation. Four different statistical calculations are carried out on the coefficients: mean, standard deviation, variance and mean absolute deviation. The wavelet used for the system is the symlet 7 wavelet, as this wavelet has a very close correlation with the voice signal; this was determined through trial and error. The coefficients extracted from the wavelet decomposition process are the second-level coefficients, as the level-two coefficients contain most of the correlated data of the voice signal. The higher levels contain very little data, making them unusable for the recognition phase. Hence, for the initial system implementation, the level-two coefficients are used. The coefficients are further thresholded to remove the low-correlation values, and the statistical computation is carried out on the remaining coefficients. The statistical computation of the coefficients is used in the comparison of voice signals together with the formant estimation and the wavelet energy. All the extracted information acts like a fingerprint for the voice signal. The percentage of verification is calculated by comparing the current signal values against the registered voice signal values. The percentage of verification is given by:

Verification % = (Test value / Registered value) x100

Between the tested and registered value, whichever value is higher is taken as the denominator

and the lower value is taken as the numerator. Figure 3 shows the complete flowchart which

includes all the important system components that are used in the voice verification program.

Figure 3 Complete System Flowchart
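The four statistical calculations and the verification percentage can be sketched in Python as below. Several details are assumptions, because the text does not specify them: population (rather than sample) variance and standard deviation are used, magnitudes are compared so that negative feature values do not produce negative percentages, and two zero values are treated as a 100% match. The function names are hypothetical.

```python
import statistics


def coefficient_features(coeffs):
    """Mean, standard deviation, variance and mean absolute deviation
    of a list of wavelet coefficients (population statistics assumed)."""
    mean = statistics.mean(coeffs)
    return {
        "mean": mean,
        "std": statistics.pstdev(coeffs),
        "variance": statistics.pvariance(coeffs),
        "mean_abs_dev": sum(abs(c - mean) for c in coeffs) / len(coeffs),
    }


def verification_percent(test_value, registered_value):
    """Verification % = (lower value / higher value) x 100.

    The higher of the two values is always the denominator, so the
    result never exceeds 100.
    """
    low, high = sorted((abs(test_value), abs(registered_value)))
    if high == 0:
        return 100.0  # assumption: two zero features match exactly
    return 100.0 * low / high


if __name__ == "__main__":
    registered = coefficient_features([1.0, 2.0, 3.0, 4.0])
    tested = coefficient_features([1.0, 2.0, 3.0, 5.0])
    for name in registered:
        print(name, verification_percent(tested[name], registered[name]))
```

Taking the higher value as the denominator makes the measure symmetric: swapping the tested and registered values gives the same percentage.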

V. SOFTWARE EXPLANATION

C. GUI (Graphical User Interface)

Figure 4 shows the GUI (Graphical User Interface) implementation for the Voice Registration Section. This GUI enables the user to register an individual's voice signal using the pre-loaded voice signals that are saved in the program. The STEP 1 panel shows the pre-loaded voice signals contained in the program. The voice signals were recorded in a clean, noise-free environment. The user can select an available voice signal from the pop-up menu. The Show Voice Plot button enables the user to view the voice plot in the graph shown in the panel. The STEP 2 panel contains the function that enables the user to de-noise the signal. By pressing the De-Noise button, the user can de-noise the signal and view the de-noised signal in the graph shown in the panel. The Extract Coefficients button enables the user to view the DWT (Discrete Wavelet Transform) approximation and detail coefficient plots. The STEP 3 panel shows the extracted coefficient plots, which are the 2nd level approximation and detail and the 3rd level approximation and detail. The STEP 4 panel shows the recognition methodology of the program. The Compute Values button performs the statistical computation on the 2nd level approximation and the 3rd level approximation and displays these values. At the same time, the formant values and the wavelet energy of the voice signal are calculated and shown.

Figure 4: Voice Registration GUI

Figure 5 shows the GUI (Graphical User Interface) implementation for the Voice Verification Section. This GUI enables the user to verify an individual's voice signal using the pre-loaded voice signals that are saved in the program.

Figure 5: Voice Verification GUI

The STEP 1 panel shows the pre-loaded voice signals contained in the program. These voice signals are recorded from different individuals saying their own names. The user can select an available voice signal from the pop-up menu. The Plot Voice Signal button enables the user to view the voice plot in the graph shown in the panel. The STEP 2 panel shows the recognition methodology of the program. The Compute Values button performs the statistical computation on the 2nd level approximation and the 3rd level approximation and displays these values. At the same time, the formant values and the wavelet energy of the voice signal are calculated and shown. The VERIFICATION panel shows the verification process of the system. The overall percentage values of the statistical computation, formant values and the wavelet energy are displayed.

VI. RESULT AND DISCUSSION

TABLE 1: COMPARISON TEST 1

TABLE 2: COMPARISON TEST 2

TABLE 3: COMPARISON TEST 3

TABLE 4: COMPARISON TEST 4

TABLE 5: COMPARISON TEST 5

The verification results in the tables above show that, across the five random tests carried out, the program can successfully verify 4 out of 5 persons at any given time. The complete system, which combines all the system components of the recognition methodology, is one of the main reasons for the high accuracy. Currently, the percentage of verification is set at an average value of 78.75%. The verification rate can be increased or decreased by adjusting this percentage to a higher or lower value. A lower value makes the system less secure, while a higher value could jeopardize the accessibility of the system, because a certain level of tolerance is required for the voice signal, which tends to change with internal and external factors.
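The effect of the verification threshold can be illustrated with a short sketch. It assumes that the overall score is the plain average of the per-feature verification percentages (statistical values, formants and wavelet energy); the text reports an average threshold of 78.75% but does not state how the individual percentages are combined, so the averaging rule and the name `is_verified` are assumptions.

```python
DEFAULT_THRESHOLD = 78.75  # average verification percentage reported in the text


def is_verified(feature_percentages, threshold=DEFAULT_THRESHOLD):
    """Accept the speaker if the average feature percentage reaches the threshold.

    Returns (accepted, overall_percentage).
    """
    overall = sum(feature_percentages) / len(feature_percentages)
    return overall >= threshold, overall


if __name__ == "__main__":
    scores = [90.0, 80.0, 70.0]  # hypothetical per-feature percentages
    print(is_verified(scores))                  # default threshold
    print(is_verified(scores, threshold=85.0))  # stricter threshold rejects the same scores
```

Raising the threshold rejects scores that the default setting would accept, which is the trade-off between security and accessibility discussed above.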

CONCLUSION

The Voice Recognition Using Wavelet Feature Extraction system employs wavelets in voice recognition to study the dynamic properties and characteristics of the voice signal. This is carried out by estimating the formants and detecting the pitch of the voice signal using LPC (Linear Predictive Coding). The voice recognition system developed is a word-dependent voice verification system used to verify the identity of an individual based on their own voice signal, using statistical computation, formant estimation and wavelet energy. A GUI is built to make it easier for the user to observe the step-by-step process that takes place in the wavelet transform. Using the fifty pre-loaded voice signals from five individuals, the verification tests have been carried out and an accuracy rate of approximately 80% has been achieved. The system can be enhanced further by using advanced pattern recognition techniques such as Neural Networks or the Hidden Markov Model (HMM) for a more accurate and efficient system.

REFERENCES

[1] Soontorn Oraintara, Ying-Jui Chen et al., IEEE Transactions on Signal Processing, IFFT, Vol. 50, No. 3, March 2002

[2] Kelly Wong, Journal of Undergraduate Research, The Role of the Fourier Transform in Time-Scale Modification, University of Florida, Vol. 2, Issue 11, August 2001

[3] Bao Liu, Sherman Riemenschneider, An Adaptive Time-Frequency Representation and Its Fast Implementation, Department of Mathematics, West Virginia University

[4] Viswanath Ganapathy, Ranjeet K. Patro, Chandrasekhara Thejaswi, Manik Raina, Subhas K. Ghosh, Signal Separation using Time Frequency Representation, Honeywell Technology Solutions Laboratory

[5] Amara Graps, An Introduction to Wavelets, Istituto di Fisica dello Spazio Interplanetario, CNR-ARTOV

[6] Brani Vidakovic and Peter Mueller, Wavelets For Kids: A Tutorial Introduction, Duke University

[7] O. Farooq and S. Datta, A Novel Wavelet Based Pre Processing For Robust Features In ASR

[8] Giuliano Antoniol, Vincenzo Fabio Rollo, Gabriele Venturi, IEEE Transactions on Software Engineering, LPC & Cepstrum coefficients for Mining Time Variant Information from Software Repositories, University Of Sannio, Italy

[9] Michael Unser, Thierry Blu, IEEE Transactions on Signal Processing, Wavelet Theory Demystified, Vol. 51, No. 2, Feb. 2003

[10] C. Valens, IEEE, A Really Friendly Guide to Wavelets, Vol. 86, No. 11, Nov. 1998

[11] James M. Lewis, C. S. Burrus, Approximate CWT with An Application To Noise Reduction, Rice University, Houston

[12] Ted Painter, Andreas Spanias, IEEE, Perceptual Coding of Digital Audio, ASU

[13] D. P. W. Ellis, PLP, RASTA, MFCC & inversion Matlab, 2005

[14] Ram Singh, Proceedings of the NCC, Spectral Subtraction Speech Enhancement with RASTA Filtering, IIT-B, 2007

[15] Nitin Sawhney, Situational Awareness from Environmental Sounds, SIG, MIT Media Lab, June 13, 1997

You might also like

- The Sympathizer: A Novel (Pulitzer Prize for Fiction)From EverandThe Sympathizer: A Novel (Pulitzer Prize for Fiction)Rating: 4.5 out of 5 stars4.5/5 (119)

- Devil in the Grove: Thurgood Marshall, the Groveland Boys, and the Dawn of a New AmericaFrom EverandDevil in the Grove: Thurgood Marshall, the Groveland Boys, and the Dawn of a New AmericaRating: 4.5 out of 5 stars4.5/5 (265)

- The Little Book of Hygge: Danish Secrets to Happy LivingFrom EverandThe Little Book of Hygge: Danish Secrets to Happy LivingRating: 3.5 out of 5 stars3.5/5 (399)

- A Heartbreaking Work Of Staggering Genius: A Memoir Based on a True StoryFrom EverandA Heartbreaking Work Of Staggering Genius: A Memoir Based on a True StoryRating: 3.5 out of 5 stars3.5/5 (231)

- Grit: The Power of Passion and PerseveranceFrom EverandGrit: The Power of Passion and PerseveranceRating: 4 out of 5 stars4/5 (587)

- Never Split the Difference: Negotiating As If Your Life Depended On ItFrom EverandNever Split the Difference: Negotiating As If Your Life Depended On ItRating: 4.5 out of 5 stars4.5/5 (838)

- The Subtle Art of Not Giving a F*ck: A Counterintuitive Approach to Living a Good LifeFrom EverandThe Subtle Art of Not Giving a F*ck: A Counterintuitive Approach to Living a Good LifeRating: 4 out of 5 stars4/5 (5794)

- Team of Rivals: The Political Genius of Abraham LincolnFrom EverandTeam of Rivals: The Political Genius of Abraham LincolnRating: 4.5 out of 5 stars4.5/5 (234)

- Shoe Dog: A Memoir by the Creator of NikeFrom EverandShoe Dog: A Memoir by the Creator of NikeRating: 4.5 out of 5 stars4.5/5 (537)

- The World Is Flat 3.0: A Brief History of the Twenty-first CenturyFrom EverandThe World Is Flat 3.0: A Brief History of the Twenty-first CenturyRating: 3.5 out of 5 stars3.5/5 (2219)

- The Emperor of All Maladies: A Biography of CancerFrom EverandThe Emperor of All Maladies: A Biography of CancerRating: 4.5 out of 5 stars4.5/5 (271)

- The Gifts of Imperfection: Let Go of Who You Think You're Supposed to Be and Embrace Who You AreFrom EverandThe Gifts of Imperfection: Let Go of Who You Think You're Supposed to Be and Embrace Who You AreRating: 4 out of 5 stars4/5 (1090)

- Top 10 Reasons Why INFJs Are Walking ParadoxesDocument13 pagesTop 10 Reasons Why INFJs Are Walking ParadoxesRebekah J Peter100% (5)

- The Hard Thing About Hard Things: Building a Business When There Are No Easy AnswersFrom EverandThe Hard Thing About Hard Things: Building a Business When There Are No Easy AnswersRating: 4.5 out of 5 stars4.5/5 (344)

- Her Body and Other Parties: StoriesFrom EverandHer Body and Other Parties: StoriesRating: 4 out of 5 stars4/5 (821)

- Hidden Figures: The American Dream and the Untold Story of the Black Women Mathematicians Who Helped Win the Space RaceFrom EverandHidden Figures: The American Dream and the Untold Story of the Black Women Mathematicians Who Helped Win the Space RaceRating: 4 out of 5 stars4/5 (894)

- Elon Musk: Tesla, SpaceX, and the Quest for a Fantastic FutureFrom EverandElon Musk: Tesla, SpaceX, and the Quest for a Fantastic FutureRating: 4.5 out of 5 stars4.5/5 (474)

- The Unwinding: An Inner History of the New AmericaFrom EverandThe Unwinding: An Inner History of the New AmericaRating: 4 out of 5 stars4/5 (45)

- The Yellow House: A Memoir (2019 National Book Award Winner)From EverandThe Yellow House: A Memoir (2019 National Book Award Winner)Rating: 4 out of 5 stars4/5 (98)

- On Fire: The (Burning) Case for a Green New DealFrom EverandOn Fire: The (Burning) Case for a Green New DealRating: 4 out of 5 stars4/5 (73)

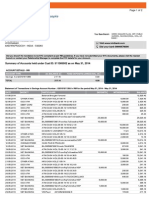

- Summary of Accounts Held Under Cust ID: 511365502 As On May 31, 2014Document2 pagesSummary of Accounts Held Under Cust ID: 511365502 As On May 31, 2014viswakshaNo ratings yet

- Architecture of FPGAs and CPLDS: A TutorialDocument41 pagesArchitecture of FPGAs and CPLDS: A Tutorialgongster100% (5)

- 2018 수능특강 영어독해연습Document40 pages2018 수능특강 영어독해연습남창균No ratings yet

- Propositional Logic: If and Only If (Rather Than Plus, Minus, Negative, TimesDocument46 pagesPropositional Logic: If and Only If (Rather Than Plus, Minus, Negative, Timesrams3340% (1)

- Understanding Social Enterprise - Theory and PracticeDocument8 pagesUnderstanding Social Enterprise - Theory and PracticeRory Ridley Duff100% (5)

- Abstract Teacher Education and Professional DevelopmentDocument20 pagesAbstract Teacher Education and Professional Developmentrashbasheer100% (1)

- DocumentationDocument70 pagesDocumentationviswakshaNo ratings yet

- DocumentationDocument76 pagesDocumentationviswakshaNo ratings yet

- Pick and Place RoboDocument44 pagesPick and Place RoboviswakshaNo ratings yet

- 11 Performance AnalysisDocument6 pages11 Performance AnalysisviswakshaNo ratings yet

- Proposal 2Document2 pagesProposal 2viswakshaNo ratings yet

- 06 - Reversible Data Embedding Using Reflective BlocksDocument4 pages06 - Reversible Data Embedding Using Reflective BlocksviswakshaNo ratings yet

- C Programming: by Deepak Majeti M-Tech CSE Mdeepak@iitk - Ac.inDocument19 pagesC Programming: by Deepak Majeti M-Tech CSE Mdeepak@iitk - Ac.inviswakshaNo ratings yet

- Frequency Domain Packet Scheduling With MIMO For 3GPP LTE DownlinkDocument10 pagesFrequency Domain Packet Scheduling With MIMO For 3GPP LTE DownlinkviswakshaNo ratings yet

- Building Automated Robots: Sensors, Motors, and ControlDocument34 pagesBuilding Automated Robots: Sensors, Motors, and ControlviswakshaNo ratings yet

- 8 Bit Risc McuDocument6 pages8 Bit Risc McuviswakshaNo ratings yet

- Analysis of Mobile IP For NS-2: Toni Janevski, Senior Member, IEEE, and Ivan PetrovDocument4 pagesAnalysis of Mobile IP For NS-2: Toni Janevski, Senior Member, IEEE, and Ivan PetrovviswakshaNo ratings yet

- Gabor FilterDocument23 pagesGabor Filtervitcon1909No ratings yet

- C48 - Efficient Localization in Mobile Wireless Sensor NetworksDocument5 pagesC48 - Efficient Localization in Mobile Wireless Sensor NetworksviswakshaNo ratings yet

- Rep07 17Document70 pagesRep07 17viswakshaNo ratings yet

- Rectangular Wave GuidesDocument44 pagesRectangular Wave GuidesArshadahcNo ratings yet

- AodvDocument67 pagesAodvSushain SharmaNo ratings yet

- Lecture1 of RtsDocument29 pagesLecture1 of RtsEsha BindraNo ratings yet

- Chapter 12Document72 pagesChapter 12viswakshaNo ratings yet

- VerilogDocument61 pagesVeriloganukopoNo ratings yet

- Basic English Skills STT04209Document18 pagesBasic English Skills STT04209JudithNo ratings yet

- Halal Diakui JAKIMDocument28 pagesHalal Diakui JAKIMwangnadaNo ratings yet

- False MemoryDocument6 pagesFalse MemoryCrazy BryNo ratings yet

- Unpopular Essays by Bertrand Russell: Have Read?Document2 pagesUnpopular Essays by Bertrand Russell: Have Read?Abdul RehmanNo ratings yet

- Lead Poisoning in Historical Perspective PDFDocument11 pagesLead Poisoning in Historical Perspective PDFintan permata balqisNo ratings yet

- Chapter 6Document21 pagesChapter 6firebirdshockwaveNo ratings yet

- Jagran Lakecity University, Bhopal: B.Tech. Third Semester 2020-21 End Semester Examination BTAIC303Document19 pagesJagran Lakecity University, Bhopal: B.Tech. Third Semester 2020-21 End Semester Examination BTAIC303Abhi YadavNo ratings yet

- Development of An Effective School-Based Financial Management Profile in Malaysia The Delphi MethodDocument16 pagesDevelopment of An Effective School-Based Financial Management Profile in Malaysia The Delphi Methodalanz123No ratings yet

- 2MS - Sequence-1-Me-My-Friends-And-My-Family 2017-2018 by Mrs Djeghdali. M.Document21 pages2MS - Sequence-1-Me-My-Friends-And-My-Family 2017-2018 by Mrs Djeghdali. M.merabet oumeima100% (2)

- Teaching Methods and Learning Theories QuizDocument8 pagesTeaching Methods and Learning Theories Quizjonathan bacoNo ratings yet

- CS 561: Artificial IntelligenceDocument36 pagesCS 561: Artificial IntelligencealvsroaNo ratings yet

- RamaswamyDocument94 pagesRamaswamyk.kalaiarasi6387No ratings yet

- Edtpa Lesson PlanDocument3 pagesEdtpa Lesson Planapi-457201254No ratings yet

- First Cause ArgumentDocument7 pagesFirst Cause Argumentapi-317871860No ratings yet

- MomentumDocument2 pagesMomentumMuhammad UsmanNo ratings yet

- Eco Comport AmDocument10 pagesEco Comport AmIHNo ratings yet

- Research Method Syllabus-F14Document6 pagesResearch Method Syllabus-F14kanssaryNo ratings yet

- Report in PhiloDocument7 pagesReport in PhiloChenly RocutanNo ratings yet

- An Historical Analysis of The Division Concept and Algorithm Can Provide Insights For Improved Teaching of DivisionDocument13 pagesAn Historical Analysis of The Division Concept and Algorithm Can Provide Insights For Improved Teaching of DivisionAguirre GpeNo ratings yet

- Alfredo Espin1Document10 pagesAlfredo Espin1Ciber Genesis Rosario de MoraNo ratings yet

- Osamu Dazai and The Beauty of His LiteratureDocument5 pagesOsamu Dazai and The Beauty of His LiteratureRohan BasuNo ratings yet

- Money As A Coordinating Device of A Commodity Economy: Old and New, Russian and French Readings of MarxDocument28 pagesMoney As A Coordinating Device of A Commodity Economy: Old and New, Russian and French Readings of Marxoktay yurdakulNo ratings yet

- Hon. Mayor Silvestre T. Lumarda of Inopacan, Leyte, PhilippinesDocument18 pagesHon. Mayor Silvestre T. Lumarda of Inopacan, Leyte, PhilippinesPerla Pelicano Corpez100% (1)

- MandalasDocument9 pagesMandalasgustavogknNo ratings yet