Trillium Software

Solution Guide

Data Profiling Basics

What is Data Profiling?

Data profiling is a process for analyzing large data sets. Standard data profiling automatically compiles statistics and other summary information about the data records. It includes field-by-field analysis of minimum and maximum values and other basic statistics, frequency counts, data types and patterns/formats, and conformity to expected values. More advanced profiling techniques also analyze the relationships between fields, such as dependencies between fields in a single data set and between fields in separate data sets.
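
As a rough illustration of the field-level statistics described above, the following Python sketch computes counts, minimum and maximum values, frequent values, and character patterns for a single field. The function names (`pattern_of`, `profile_field`) and the sample ZIP code values are hypothetical and chosen only for illustration; this is not how any particular profiling product works.

```python
import re
from collections import Counter


def pattern_of(value: str) -> str:
    """Reduce a value to its format: digits become '9', letters become 'A'."""
    digits_masked = re.sub(r"\d", "9", value)
    return re.sub(r"[A-Za-z]", "A", digits_masked)


def profile_field(values):
    """Compile basic summary statistics for one field (a list of values)."""
    # Treat None and the empty string as missing values.
    present = [v for v in values if v not in (None, "")]
    counts = Counter(present)
    return {
        "count": len(values),
        "missing": len(values) - len(present),
        "distinct": len(counts),
        "min": min(present) if present else None,
        "max": max(present) if present else None,
        "top_values": counts.most_common(3),
        "patterns": Counter(pattern_of(str(v)) for v in present).most_common(3),
    }


# Hypothetical sample data: ZIP codes with a few typical problems.
zip_codes = ["01821", "01821", "1821", "", "0182A", None]
report = profile_field(zip_codes)
for name, value in report.items():
    print(f"{name}: {value}")
```

Even on this tiny sample, the pattern counts surface values that do not match the dominant five-digit format, which is the kind of finding a profile is meant to expose without anyone having to look for it specifically.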

Why Do People Profile?

People may want to profile for several reasons, including:

Assessing risks: Can data support the new initiative?

Planning projects: What are realistic time lines and what data, systems, and resources will the project involve?

Scoping projects: Which data and systems will be included based on priority, quality, and level of effort required?

Assessing data quality: How accurate, consistent, and complete is the data within a single system?

Designing new systems: What should the target structures look like? What mappings or transformations need to occur?

Checking/monitoring data: Does the data continue to meet business requirements after systems have gone live and changes and additions occur?

Key Considerations for Selecting a Data Profiling Tool

1. Who is profiling: business users, IT, or both

2. Common environment to communicate, review, and interpret results

3. Complexity of analysis, number of sources

4. Security of data

5. Ongoing support and monitoring

Who Should Be Profiling the Data?

Data profiling is primarily considered part of IT projects, but the most successful efforts involve a blend of IT resources and business users of the data. IT, business users, and data stewards each contribute valuable insights critical to the process:

IT system owners, developers, and project managers analyze and understand issues of data structure: how complete is the data, how consistent are the formats, are key fields unique, is referential integrity enforced?

Business users and subject matter experts understand the data content: what the data means, how it is applied in existing business processes, what data is required for new processes, what data is inaccurate or out of context?

Data stewards understand corporate standards and enterprise data requirements as a whole. They can contribute to both the requirements for specific projects and the corporation.

How Do People Profile Data?

The techniques for profiling are either manual or automated via a profiling tool:

Manual techniques involve people sifting through the data to assess its condition, query by query. Manual profiling is appropriate for small data sets from a single source, with fewer than 50 fields, where the data is relatively simple.

Automated techniques use software tools to collect summary statistics and analyses. These tools are the most appropriate for projects with hundreds of thousands of records, many fields, multiple sources, and questionable documentation and metadata. Sophisticated data profiling technology was built to handle complex problems, especially for high-profile and mission-critical projects.

How Do Data Profiling Tools Differ?

Data profiling tools vary both in the architecture they use to analyze data and in the working environment they provide for the data profiling team.

Architecture option: Query-based profiling

Some profiling technologies involve crafting SQL queries that are run against source systems or against a snapshot copy of the source data. While this generates some good information about the data, it has several limitations:

Performance risks: Queries strain live systems, slowing down operations, sometimes significantly. When additional information is required, or if users want to see the actual data, a second query executes, creating even more strain on the system. Organizations reduce this risk by making a copy of the data, but this requires replicating the entire environment, both hardware and software systems, which can be costly and time-consuming.

Traceability risks: Data in production systems changes constantly. The statistics and metadata captured from query-based profiling risk being out of date immediately.

Completeness risks: It is difficult to gain comprehensive insights using query-based analysis. Queries are based on assumptions, and the purpose is to confirm and quantify expectations about what is wrong and right in the data. Given this, it is easy to overlook problems that you are not already aware of.

Profiling by query is valuable when you want to monitor production data for certain conditions. But it is not the best way to analyze large volumes of data in preparation for large-scale data integrations and migrations.
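
To make the completeness risk concrete, here is a minimal sketch of query-based profiling using Python's built-in `sqlite3` module against an in-memory database. The `customers` table, its columns, and the helper names `null_count` and `duplicate_keys` are hypothetical, invented for this example. Each query answers only the question it was written to ask.

```python
import sqlite3

# Hypothetical snapshot of source data, loaded into an in-memory database.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE customers (id INTEGER, state TEXT)")
conn.executemany(
    "INSERT INTO customers VALUES (?, ?)",
    [(1, "MA"), (2, "ma"), (3, None), (3, "NY")],
)


def null_count(conn, table, column):
    """Count rows where the column is NULL.

    Table and column names are interpolated into the SQL text, so they
    must come from a trusted source, never from user input.
    """
    query = f"SELECT COUNT(*) FROM {table} WHERE {column} IS NULL"
    return conn.execute(query).fetchone()[0]


def duplicate_keys(conn, table, column):
    """Return (value, occurrences) for values that appear more than once."""
    query = (
        f"SELECT {column}, COUNT(*) FROM {table} "
        f"GROUP BY {column} HAVING COUNT(*) > 1 ORDER BY {column}"
    )
    return conn.execute(query).fetchall()


print(null_count(conn, "customers", "state"))   # rows with no state
print(duplicate_keys(conn, "customers", "id"))  # ids that are not unique
```

Both queries confirm problems someone already suspected (missing states, non-unique ids). Neither one reveals that "MA" and "ma" are inconsistent codes for the same state, because nobody wrote a query for it.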

Architecture option: Data profiling repository

Other profiling technologies profile data as part of a scheduled

process and store results in a profiling repository. Stored results

can include content such as summary statistics, metadata, patterns, keys, relationships, and data values. Results can then be

further analyzed by users or stored for later trending analysis.

Profiling repositories that allow users to drill down on information and see original data values in the context of source records provide the most versatility and stability for non-technical audiences. Independence from operational source systems, coupled with the vast amount of metadata and information derived from a point-in-time profile, gives a cross-functional team of business and IT resources a common, comprehensive view of source system data on which traceable decisions can be based.

Volume considerations: Should tables or files enter into

the range of hundreds of millions of records, a profiling

repository strategy should be considered. With volumes

this large, the best strategy may be a blend between weekend-scheduled profiling processes and focused, non-contentious query-based profiling, closely monitored by IT.

Work environment: Multi-user workspace

Some profiling tools are designed as desktop solutions for resources to use as a team of one. How many resources will be

involved in your data profiling efforts? For large projects, there

is generally a cross-functional team involved.

Consider the environment a profiling tool provides, since multiple users with different expertise and varying levels of technical skill all need to be able to access and clearly see the condition of the data. Even if some prospective data profilers are skilled in SQL and database technologies, profiling tools that foster collaboration between business users and IT offer greater value overall. With a common window on the data sets, people with diverse backgrounds can concretely and productively discuss the data, its current state, and what is required to move forward.

Work environment: Graphical Interface

Because users may not be familiar with database structures and technologies, it is important to find a tool that provides an intuitive,

easy-to-learn graphical user interface (GUI).

Appropriate security features should also be a part of the work environment, so that access to fields or records containing sensitive information can be allowed or denied.

What Follows Data Profiling?

Once the task of data profiling is complete,

there is more to do. Keep in mind both the

short- and long-term goals driving the need to

profile your data. Leverage your investments

by understanding what follows and see if there

are logical extensions to your profiling efforts

that can be executed within the same tool.

ETL projects for data integration or migration use profiling results to design target

systems, define how to accurately integrate

multiple data sets, and efficiently move data

to a new system, taking all data conditions

into consideration.

Data quality processes that improve the

accuracy, consistency, and completeness

of data use results to identify problems or

anomalies and then develop rules for automated cleansing and standardization.

Data monitoring initiatives use profiling results to establish automated processes for

ongoing assessment of key data elements

and acute data conditions in production

systems. The profiling repository captures

results, sends alerts, and centrally manages

data standards.
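
A monitoring rule of the kind described in the last item can be sketched in a few lines of Python. The function name `check_field`, the allowed-value set, and the threshold are all hypothetical; a real monitoring process would run such checks on a schedule and route the resulting alerts to users.

```python
def check_field(values, allowed, max_missing_ratio):
    """Return alert messages for one field; an empty list means no alerts."""
    # Treat None and the empty string as missing values.
    missing = sum(1 for v in values if v in (None, ""))
    # Collect distinct non-missing values that fall outside the allowed set.
    invalid = sorted({v for v in values if v not in (None, "") and v not in allowed})

    alerts = []
    if values and missing / len(values) > max_missing_ratio:
        ratio = missing / len(values)
        alerts.append(f"missing ratio {ratio:.2f} exceeds {max_missing_ratio:.2f}")
    if invalid:
        alerts.append(f"unacceptable values: {invalid}")
    return alerts


# Hypothetical status codes: only "A" and "B" are acceptable,
# and at most 25% of the values may be missing.
for message in check_field(["A", "B", "", None, "X"], {"A", "B"}, 0.25):
    print("ALERT:", message)
```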

TS Discovery for Data Profiling

TS Discovery is unlike many other data profiling tools on the market. It performs as a

best-of-breed data profiling tool, and also

has several key differentiators that advance

its overall value:

User Interface: The interface is designed specifically for a business user. It is intuitive, easy to use, and allows for immediate drill down for further analysis, without hitting production systems.

Collaborative Environment: Team members can log into a common repository, view the same data, and contribute to prioritizing and determining appropriate actions to take for addressing anomalies, improvements, integration rules, and monitoring thresholds.

Profiling Repository: The repository stores metadata created by reverse-engineering the data. This metadata can be summarized, synthesized, drilled down into, and used to recreate original source record replicas. Business rules and data standards can be developed within the repository to run against the metadata or deployed to run systematically against production systems, complete with alert notifications.

Robust profiling functions: In addition to basic profiling, TS Discovery provides advanced profiling options such as pattern analysis, soundex routines, metaphones, natural key analysis, join analysis, dependency analysis, comparisons against defined data standards, and regulation against established business rules.

Improve Data Immediately: Data can be cleansed and standardized directly using TS Discovery. Name and address cleansing, address validation, and recoding processes can be run using TS Discovery. Cleansed data is placed in new fields, never overwriting source data. The cleansed files can be used immediately in other systems and business processes.

Helpful Modeling Functions: Data architects and modelers rely on results from key integrity, natural key, join, and dependency analyses. Physical data models can be produced through the effects of reverse engineering the data, to validate models and identify problem areas. Venn diagrams can be used to identify outlier records and orphans.

Monitoring capabilities: Monitor data sources for specific events and/or errors. Notify users when data values do not meet predefined requirements, such as unacceptable data values or incomplete fields.

These powerful features give users the environment necessary to understand the true nature of their current data landscape and how data relates across systems.
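
To give a sense of what a dependency analysis looks for, the Python sketch below checks whether one field determines another, for example whether every ZIP code maps to exactly one city. It is a generic illustration under assumed names (`dependency_violations`, the `zip` and `city` fields, and the sample rows are hypothetical) and does not represent how TS Discovery implements the analysis.

```python
from collections import defaultdict


def dependency_violations(records, determinant, dependent):
    """Find determinant values that map to more than one dependent value.

    If `determinant` truly determines `dependent`, the result is empty.
    """
    seen = defaultdict(set)
    for record in records:
        seen[record[determinant]].add(record[dependent])
    return {key: sorted(vals) for key, vals in seen.items() if len(vals) > 1}


# Hypothetical sample records with one misspelled city name.
rows = [
    {"zip": "01821", "city": "Billerica"},
    {"zip": "01821", "city": "Billerica"},
    {"zip": "02108", "city": "Boston"},
    {"zip": "02108", "city": "Bostn"},
]
violations = dependency_violations(rows, "zip", "city")
print(violations)
```

The ZIP code that maps to two different city spellings is reported as a violation, pointing reviewers to records that need cleansing or to a dependency that does not actually hold.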

TS Discovery: Investing in the Future

A data profiling solution cannot exist in a vacuum because it is

also a part of a larger process. While data profiling is a way to

understand the condition of data, TS Discovery provides bridges

to larger initiatives for data integrations, data quality, and data

monitoring. Trillium Software is committed to expanding those

bridges. It continues to innovate and provide new ways to track,

control, and access data across the enterprise. It also continues

to integrate TS Quality functionality into TS Discovery to establish and promote high quality data in complex and dynamic

business environments.

Harte-Hanks Trillium Software

www.trilliumsoftware.com

Corporate Headquarters

+ 1 (978) 436-8900

trlinfo@trilliumsoftware.com

European Headquarters

+44 (0)118 940 7600

trillium.uk@trilliumsoftware.com

You might also like

- The Subtle Art of Not Giving a F*ck: A Counterintuitive Approach to Living a Good LifeFrom EverandThe Subtle Art of Not Giving a F*ck: A Counterintuitive Approach to Living a Good LifeRating: 4 out of 5 stars4/5 (5794)

- The Little Book of Hygge: Danish Secrets to Happy LivingFrom EverandThe Little Book of Hygge: Danish Secrets to Happy LivingRating: 3.5 out of 5 stars3.5/5 (399)

- Books That Teach Kids To WriteDocument200 pagesBooks That Teach Kids To WriteIsaias Azevedo100% (9)

- Esssays For Junior ClassesDocument17 pagesEsssays For Junior ClassestanyamamNo ratings yet

- Odi 11gr1certmatrix ps6 1928216Document27 pagesOdi 11gr1certmatrix ps6 1928216sarferazul_haqueNo ratings yet

- Flat File Source ModuleDocument8 pagesFlat File Source ModuleranusofiNo ratings yet

- Integrating Hadoop Data With OracleDocument20 pagesIntegrating Hadoop Data With Oraclesarferazul_haqueNo ratings yet

- Oracle Financial TablesDocument9 pagesOracle Financial TablesShahzad945No ratings yet

- TM1 Budget Tool Logging OnDocument7 pagesTM1 Budget Tool Logging Onsarferazul_haqueNo ratings yet

- 1logical Data Model - BankDocument25 pages1logical Data Model - BankdrrkumarNo ratings yet

- Scheduling of Jobs - Sset11Document56 pagesScheduling of Jobs - Sset11sarferazul_haqueNo ratings yet

- An Overview of Oracle FinancialsDocument7 pagesAn Overview of Oracle FinancialsJahangir RathoreNo ratings yet

- Oracle Data Integrator CurriculumDocument5 pagesOracle Data Integrator CurriculumKapilbispNo ratings yet

- Oracle Data Integrator IntroductionDocument27 pagesOracle Data Integrator IntroductionAmit Sharma100% (3)

- Oracle Hrms Absence ManagementDocument18 pagesOracle Hrms Absence Managementdaoudacoulibaly80% (5)

- Cdocumentsandsettingsjnorwooddesktopranzalessbasefinancialbistarterkit 090422105918 Phpapp01 PDFDocument40 pagesCdocumentsandsettingsjnorwooddesktopranzalessbasefinancialbistarterkit 090422105918 Phpapp01 PDFsarferazul_haqueNo ratings yet

- Business Intelligence RFP SummaryDocument19 pagesBusiness Intelligence RFP Summarysarferazul_haqueNo ratings yet

- Statistical Analysis With R - A Quick StartDocument47 pagesStatistical Analysis With R - A Quick StartPonlapat Yonglitthipagon100% (1)

- REQUEST FOR PROPOSAL For Procurement of Power PDFDocument117 pagesREQUEST FOR PROPOSAL For Procurement of Power PDFsarferazul_haqueNo ratings yet

- Hyperion - Session - Essbase - and Planning - JulDocument4 pagesHyperion - Session - Essbase - and Planning - Julsarferazul_haqueNo ratings yet

- CRM challenges in investment banking and financial marketsDocument16 pagesCRM challenges in investment banking and financial marketssarferazul_haqueNo ratings yet

- Request For Proposal Business Intelligence SystemDocument42 pagesRequest For Proposal Business Intelligence Systemsarferazul_haqueNo ratings yet

- BR RepositoryBasics 1Document16 pagesBR RepositoryBasics 1sarferazul_haqueNo ratings yet

- Gude To Grant WritingDocument26 pagesGude To Grant Writingsarferazul_haque100% (1)

- SCD Type2 Through A With Date RangeDocument19 pagesSCD Type2 Through A With Date RangeSatya SrikanthNo ratings yet

- Case Study ColonialDocument2 pagesCase Study Colonialsarferazul_haqueNo ratings yet

- Saranathan Asuri MphasisDocument30 pagesSaranathan Asuri Mphasissarferazul_haqueNo ratings yet

- E 10935Document828 pagesE 10935sarferazul_haqueNo ratings yet

- Essbase Installation 11.1.1.3 ChapterDocument24 pagesEssbase Installation 11.1.1.3 ChapterAmit SharmaNo ratings yet

- Eb 5792Document3 pagesEb 5792sarferazul_haqueNo ratings yet

- Oracle® Warehouse Builder API and Scripting ReferenceDocument206 pagesOracle® Warehouse Builder API and Scripting ReferenceranusofiNo ratings yet

- A Heartbreaking Work Of Staggering Genius: A Memoir Based on a True StoryFrom EverandA Heartbreaking Work Of Staggering Genius: A Memoir Based on a True StoryRating: 3.5 out of 5 stars3.5/5 (231)

- Hidden Figures: The American Dream and the Untold Story of the Black Women Mathematicians Who Helped Win the Space RaceFrom EverandHidden Figures: The American Dream and the Untold Story of the Black Women Mathematicians Who Helped Win the Space RaceRating: 4 out of 5 stars4/5 (894)

- The Yellow House: A Memoir (2019 National Book Award Winner)From EverandThe Yellow House: A Memoir (2019 National Book Award Winner)Rating: 4 out of 5 stars4/5 (98)

- Shoe Dog: A Memoir by the Creator of NikeFrom EverandShoe Dog: A Memoir by the Creator of NikeRating: 4.5 out of 5 stars4.5/5 (537)

- Elon Musk: Tesla, SpaceX, and the Quest for a Fantastic FutureFrom EverandElon Musk: Tesla, SpaceX, and the Quest for a Fantastic FutureRating: 4.5 out of 5 stars4.5/5 (474)

- Never Split the Difference: Negotiating As If Your Life Depended On ItFrom EverandNever Split the Difference: Negotiating As If Your Life Depended On ItRating: 4.5 out of 5 stars4.5/5 (838)

- Grit: The Power of Passion and PerseveranceFrom EverandGrit: The Power of Passion and PerseveranceRating: 4 out of 5 stars4/5 (587)

- Devil in the Grove: Thurgood Marshall, the Groveland Boys, and the Dawn of a New AmericaFrom EverandDevil in the Grove: Thurgood Marshall, the Groveland Boys, and the Dawn of a New AmericaRating: 4.5 out of 5 stars4.5/5 (265)

- The Emperor of All Maladies: A Biography of CancerFrom EverandThe Emperor of All Maladies: A Biography of CancerRating: 4.5 out of 5 stars4.5/5 (271)

- On Fire: The (Burning) Case for a Green New DealFrom EverandOn Fire: The (Burning) Case for a Green New DealRating: 4 out of 5 stars4/5 (73)

- The Hard Thing About Hard Things: Building a Business When There Are No Easy AnswersFrom EverandThe Hard Thing About Hard Things: Building a Business When There Are No Easy AnswersRating: 4.5 out of 5 stars4.5/5 (344)

- Team of Rivals: The Political Genius of Abraham LincolnFrom EverandTeam of Rivals: The Political Genius of Abraham LincolnRating: 4.5 out of 5 stars4.5/5 (234)

- The Unwinding: An Inner History of the New AmericaFrom EverandThe Unwinding: An Inner History of the New AmericaRating: 4 out of 5 stars4/5 (45)

- The World Is Flat 3.0: A Brief History of the Twenty-first CenturyFrom EverandThe World Is Flat 3.0: A Brief History of the Twenty-first CenturyRating: 3.5 out of 5 stars3.5/5 (2219)

- The Gifts of Imperfection: Let Go of Who You Think You're Supposed to Be and Embrace Who You AreFrom EverandThe Gifts of Imperfection: Let Go of Who You Think You're Supposed to Be and Embrace Who You AreRating: 4 out of 5 stars4/5 (1090)

- The Sympathizer: A Novel (Pulitzer Prize for Fiction)From EverandThe Sympathizer: A Novel (Pulitzer Prize for Fiction)Rating: 4.5 out of 5 stars4.5/5 (119)

- Her Body and Other Parties: StoriesFrom EverandHer Body and Other Parties: StoriesRating: 4 out of 5 stars4/5 (821)

- Coffee Shop ThesisDocument6 pagesCoffee Shop Thesiskimberlywilliamslittlerock100% (2)

- Top 10 Opportunities Opportunity Title Client Status Opp Lead Est Fee (GBP K)Document5 pagesTop 10 Opportunities Opportunity Title Client Status Opp Lead Est Fee (GBP K)Anonymous Xb3zHioNo ratings yet

- CENG 4339 Homework 11 SolutionDocument3 pagesCENG 4339 Homework 11 SolutionvanessaNo ratings yet

- Maithili AmbavaneDocument62 pagesMaithili Ambavanerushikesh2096No ratings yet

- OVIZ - Payroll Attendance SystemDocument10 pagesOVIZ - Payroll Attendance Systemdwi biotekNo ratings yet

- FABIZ I FA S2 Non Current Assets Part 4Document18 pagesFABIZ I FA S2 Non Current Assets Part 4Andreea Cristina DiaconuNo ratings yet

- Innovations & SolutionsDocument13 pagesInnovations & SolutionsSatish Singh ॐ50% (2)

- Motivation QuizDocument3 pagesMotivation QuizBEEHA afzelNo ratings yet

- CAPE Management of Business 2008 U2 P2Document4 pagesCAPE Management of Business 2008 U2 P2Sherlock LangevineNo ratings yet

- Vrio Analysis Pep Coc CPKDocument6 pagesVrio Analysis Pep Coc CPKnoonot126No ratings yet

- Hidden Costof Quality AReviewDocument21 pagesHidden Costof Quality AReviewmghili2002No ratings yet

- Material Handling and ManagementDocument45 pagesMaterial Handling and ManagementGamme AbdataaNo ratings yet

- Sapm QBDocument8 pagesSapm QBSiva KumarNo ratings yet

- SEC Filings - Microsoft - 0001032210-98-000519Document18 pagesSEC Filings - Microsoft - 0001032210-98-000519highfinanceNo ratings yet

- How to Write a Business Plan for a Social Enterprise Furniture WorkshopDocument7 pagesHow to Write a Business Plan for a Social Enterprise Furniture Workshopflywing10No ratings yet

- Coca-Cola Union Dispute Over Redundancy ProgramDocument11 pagesCoca-Cola Union Dispute Over Redundancy ProgramNullumCrimen NullumPoena SineLegeNo ratings yet

- Labor Standards Law Case ListDocument4 pagesLabor Standards Law Case ListRonel Jeervee MayoNo ratings yet

- 1.2.2 Role of Information Technologies On The Emergence of New Organizational FormsDocument9 pages1.2.2 Role of Information Technologies On The Emergence of New Organizational FormsppghoshinNo ratings yet

- 01 Fairness Cream ResearchDocument13 pages01 Fairness Cream ResearchgirijNo ratings yet

- Ohsms Lead Auditor Training: Question BankDocument27 pagesOhsms Lead Auditor Training: Question BankGulfam Shahzad100% (10)

- Forexbee Co Order Blocks ForexDocument11 pagesForexbee Co Order Blocks ForexMr WatcherNo ratings yet

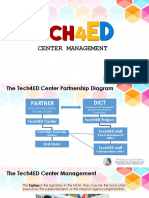

- BCMT Module 3 - Tech4ED Center ManagementDocument46 pagesBCMT Module 3 - Tech4ED Center ManagementCabaluay NHSNo ratings yet

- Cell No. 0928-823-5544/0967-019-3563 Cell No. 0928-823-5544/0967-019-3563 Cell No. 0928-823-5544/0967-019-3563Document3 pagesCell No. 0928-823-5544/0967-019-3563 Cell No. 0928-823-5544/0967-019-3563 Cell No. 0928-823-5544/0967-019-3563WesternVisayas SDARMNo ratings yet

- The Digital Project Manager Communication Plan ExampleDocument2 pagesThe Digital Project Manager Communication Plan ExampleHoney Oliveros100% (1)

- An Assignment On Niche Marketing: Submitted ToDocument3 pagesAn Assignment On Niche Marketing: Submitted ToGazi HasibNo ratings yet

- The Management of Organizational JusticeDocument16 pagesThe Management of Organizational Justicelucianafresoli9589No ratings yet

- Database Design: Conceptual, Logical and Physical DesignDocument3 pagesDatabase Design: Conceptual, Logical and Physical DesignYahya RamadhanNo ratings yet

- Abdullah Al MamunDocument2 pagesAbdullah Al MamunMamunBgcNo ratings yet

- Asst. Manager - Materials Management (Feed & Fuel Procurement)Document1 pageAsst. Manager - Materials Management (Feed & Fuel Procurement)mohanmba11No ratings yet

- IT Leadership Topic 1 IT Service Management: Brenton BurchmoreDocument25 pagesIT Leadership Topic 1 IT Service Management: Brenton BurchmoreTrung Nguyen DucNo ratings yet