Detection of Web Denial-of-Service Attacks

using decoy hyperlinks

Dimitris Gavrilis1, Ioannis S. Chatzis2 and Evangelos Dermatas3

Electrical & Computer Engineering Department, University of Patras

1gavrilis@upatras.gr, 2chatzis@upatras.gr, 3dermatas@george.wcl2.ee.upatras.gr

Abstract: In this paper a method for detecting Denial-of-Service attacks on Web-sites is presented. Web attacks are distinguished from normal user patterns by inserting decoy hyperlinks into some key pages of the Web-site. Typical types of decoy hyperlinks are described, and experimental results derived from real Web-sites give an extremely low false positive rate of 0.0421%. A method for selecting an effective and minimal number of decoy hyperlinks and pages is also presented and evaluated on real and simulated data.

Keywords: Denial of Service, HTTP Flood, Graphs, decoy

hyperlinks

I. INTRODUCTION

Denial of Service (DoS) attacks are often thought of as SYN floods rather than as resource-exhaustion problems. Seen as resource exhaustion, DoS attacks can be expanded to include Email Spam and Web-DoS. The latter is simply the use of legitimate means to exhaust a web server's bandwidth, or even to crash it. Such attacks are not hypothetical, and simple tools to combat them exist [1]. Recently, a company in Massachusetts paid hackers to launch a Distributed DoS attack against three of its competitors [9]. The attack involved more than 10000 compromised machines. When the SYN flood failed, the attackers switched to a Web-based denial of service (Web-DoS or HTTP flood), requesting large image files from the victims' servers. The victims' servers remained offline for two weeks.

The goal of a Web-DoS attack is not only to crash the server by exhausting all of its resources, but also to tie up its resources so that fewer users can be serviced. During a Web-DoS attack (also known as an HTTP flood), a program requests Web pages from a server in one of the following forms:

1. Constantly requesting the same page.

2. Randomly requesting Web pages from the server.

3. Requesting a number of random Web pages, linked with each other, following a navigation pattern.

As a result, a Web-DoS attack not only tries to crash the Web-server but also, without the administrator's knowledge, reduces the effective number of users that can be serviced. Although this type of attack is new, it is not uncommon, and at least one tool exists [1] that defeats the simplest such attacks. The work presented in this paper targets the Web-DoS attack that is most difficult to detect, which is the third case.

II. RELATED WORK

Recently, a number of Web-DoS detectors have been presented; they can be classified into two categories. The first type of detector is based on surfer models extracted from the Web server log-files. A surfer model is derived from the click streams and is matched against stored models. This approach could be used to classify any Web activity into two types of user: a human user and a machine-based Web-surfer.

In [2], Deshpande and Karypis present three pruning algorithms that are used to reduce the states of Kth-order Markov models. The authors test their method on three datasets and show that the proposed method outperforms both 1st-order and simple Kth-order Markov models. In [3], Ghosh and Acharyya find 1st-order Markov models inadequate to describe user behavior accurately, and higher-order Markov chains too complex and computationally intensive. Instead, they propose a method that creates concept trees, which the user traverses, and maps Web-pages to them. This approach has the advantage that the surfer models are derived from the concepts of the pages rather than from the pages themselves.

In [4], Ypma and Heskes propose a mixture of Hidden

Markov Models for modeling the surfer's behavior. The

page topics are extracted from the pages based on the

surfer's click streams. Consequently, a surfer type is

recognized based on those topics. The authors also

incorporate prior knowledge about the user behavior into

the transition matrices in order to achieve better results. In

[5], the authors employ the longest repeated sequence

alignment algorithm to predict the surfer behavior.

In the second category, architectures that are DoS resistant are presented. The authors in [6,7] propose the Secure Overlay Services (SOS), an architecture that hides the server's true location, providing cover from DoS attacks. Although the proposed architecture does not require modifications on the server or the client, the clients must be aware of SOS. The authors have also used Turing tests to allow only human users to access the SOS services. Understandably, SOS does not target publicly open services such as commercial Web-sites. A major drawback of graphical test authentication mechanisms is that they make it impossible for blind users to use the services.

This problem is confronted in [8], where the authors

present Kill-Bots, a Linux kernel based system designed

to detect and block Web-DoS attacks. Kill-Bots combines

a two stage authentication mechanism that includes

graphical tests and an admission control algorithm that

protects the server itself from overloading.

In this paper a novel method for detecting Web-DoS attacks is presented and evaluated in both real and simulated experiments. The proposed detector is based on the different reactions of human and machine users to decoy hyperlinks on Web-pages: hyperlinks invisible to the human eye are still followed by machine-based users.

The structure of this paper is as follows: In section III, the types of Web-DoS attacks are described in detail. In section IV, the proposed detection method is presented. The experimental evaluation, with simulation results and results from a real Web-site, and a short discussion are given in sections V and VI.

III. WEB BASED DENIAL OF SERVICE

Web-DoS attacks have been classified into three types, as described in section I. The first type, where the same page is requested continuously, is easy to detect. A simple threshold can be applied to block an offending IP for a short period of time. The well-known tool mod_evasive [1] does exactly that.
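As an illustration of the threshold idea for the first attack type, the following minimal Python sketch counts requests per (IP, page) pair inside a sliding time window and blocks the IP for a short period once the count passes a limit. It is not the implementation of the tool cited as [1]; the class name `SamePageThreshold` and the parameters `max_hits`, `window_seconds` and `block_seconds` are hypothetical and chosen only for this example.

```python
from collections import defaultdict, deque


class SamePageThreshold:
    """Block an IP that requests the same page too often within a time window."""

    def __init__(self, max_hits=10, window_seconds=1.0, block_seconds=10.0):
        # All three limits are illustrative assumptions, not values from the paper.
        self.max_hits = max_hits
        self.window_seconds = window_seconds
        self.block_seconds = block_seconds
        self._hits = defaultdict(deque)   # (ip, page) -> timestamps of recent hits
        self._blocked_until = {}          # ip -> time at which the block expires

    def allow(self, ip, page, now):
        """Return True if the request may be served, False if the IP is blocked."""
        if self._blocked_until.get(ip, 0.0) > now:
            return False
        hits = self._hits[(ip, page)]
        hits.append(now)
        # Drop hits that have fallen out of the sliding window.
        while hits and now - hits[0] > self.window_seconds:
            hits.popleft()
        if len(hits) > self.max_hits:
            self._blocked_until[ip] = now + self.block_seconds
            return False
        return True
```

The caller passes the request time explicitly (`now`), which keeps the sketch deterministic and easy to test; a server would typically pass the current clock value.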

In the second type of Web-DoS attack, the Web-site is first parsed and its hyperlinks harvested, and then these pages are requested randomly. This form of attack is also easy to detect by using the variance and the length of the click stream as attack indicators.

The third case, however, is more complicated, especially in the form of a distributed attack. In this scenario, a program harvests all the hyperlinks from the Web-site and then randomly creates navigation patterns, which it then requests from the server. The server would normally record this as huge traffic, but if the attack were launched from multiple IP addresses, it would be very difficult to trace. In this case, Web-surfer modeling techniques could be employed to detect such attacks. However, experimental results on real data using Markov models showed that this is not possible, especially when the attack program starts from the homepage of the Web-site and descends 3-5 levels. This happens because in this case the transition probabilities tend to be uniform.
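To make the Markov-model argument concrete, here is a minimal sketch of a first-order surfer model, written under our own assumptions rather than taken from the experiments mentioned above. It estimates transition probabilities from logged click streams and scores a new stream by the product of its transition probabilities. The function names and the `unseen` floor value are hypothetical. When the estimated probabilities out of a page are close to uniform, any walk along existing links receives a score similar to that of a human session, which is one way to read the difficulty described above.

```python
from collections import Counter, defaultdict


def transition_probabilities(click_streams):
    """Estimate first-order probabilities P(next page | current page)."""
    counts = defaultdict(Counter)
    for stream in click_streams:
        for current, following in zip(stream, stream[1:]):
            counts[current][following] += 1
    return {
        page: {nxt: n / sum(nexts.values()) for nxt, n in nexts.items()}
        for page, nexts in counts.items()
    }


def stream_likelihood(stream, probs, unseen=1e-6):
    """Likelihood of a click stream under the model.

    Transitions never seen in the training data get the small floor value
    `unseen` (an assumption made for this sketch).
    """
    likelihood = 1.0
    for current, following in zip(stream, stream[1:]):
        likelihood *= probs.get(current, {}).get(following, unseen)
    return likelihood


# Hypothetical training sessions, for illustration only.
sessions = [
    ["home", "news", "sports"],
    ["home", "news", "weather"],
    ["home", "about"],
]
model = transition_probabilities(sessions)
score = stream_likelihood(["home", "news", "sports"], model)
```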

IV. WEB DOS DETECTION

The proposed method involves two actions: the insertion of hidden hyperlinks (decoys), which point to a decoy Web-page, into a number of Web-pages, and a mechanism that detects users surfing through decoy hyperlinks. This procedure requires some of the pages on the Web server to be modified. The method is transparent to the client: it does not employ authentication mechanisms, such as graphical tests, and it does not require special software on the client's side. The key aspects of the proposed method are:

- Construction of decoys in order to minimize false positives.

- Selection of a minimal number of links and pages as decoys in order to maximize the probability that a Web-DoS attack will hit them.

- Construction of an algorithm that can detect Web-DoS attacks.

1. Construction of decoy hyperlinks

The decoy hyperlinks must be constructed in such a way that they are undetectable to human users, while an automated program is not able to distinguish between them and real hyperlinks. A great number of different types of decoy hyperlinks can be created:

- A few pixels in an image map, hidden in some image of the Web-page.

- A hyperlink whose hypertext has the same color as the page background, which makes it invisible to human users.

- Hyperlinks without hypertext.

- Hidden tables that contain such hidden hyperlinks.

2. Selection of decoy Web-pages

The process of inserting decoy hyperlinks in Web-pages is not only costly and time-consuming but also requires extensive testing. Taking into account that modern Web-sites consist of more than 500 Web pages, the question that arises is how many and which pages have to be selected as decoys so that an attack will hit them with a good probability. To address this problem, the Web-site is represented by an undirected graph G(V,E), as shown in Fig. 1, where the graph's vertices (V) represent the site's pages and the graph's edges (E) represent the hyperlinks (the interconnections between the pages). The graph is undirected because the reverse direction of a hyperlink can be followed using the Back button of any browser. The vertices that are to contain the decoy hyperlinks are selected using one of the following three selection functions:

$$V_{best} = \arg\max \Big\{ \sum_{u_1 \in V} (u, u_1) \;\Big|\; u \in V \Big\}, \quad \text{(A)} \qquad (1)$$

$$V_{best} = \arg\min \Big\{ \frac{\sum_{u_1 \in V} d(u, u_1)}{\sum_{u_1 \in V} \sum_{u_2 \in V} d(u_1, u_2)} \;\Big|\; u \in V \Big\}, \quad \text{(B)} \qquad (2)$$

$$V_{best} = \arg\max \{ d_G(u) \mid u \in V \}, \quad \text{(C)} \qquad (3)$$

where dG(u) is the degree of the vertex u, and |V| the order

of the graph.

Taking into account the experimental results, the

selection function C is used in the proposed Web-page

selection algorithm as follows.

1. Initialization. Set Dv := {} and Rv := V, where V is the complete set of the Web-site pages.

2. Best decoy page. Select Vbest = arg max{ dG(u) | u ∈ Rv }, then set Dv := Dv ∪ {Vbest} and Rv := Rv - {Vbest}.

3. Termination criterion. If the number of selected vertices exceeds a predefined threshold, the algorithm terminates; otherwise steps 2 and 3 are repeated.
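The three steps above can be sketched in Python as follows. This is a minimal illustration under stated assumptions: the site is given as a dictionary that maps each page to the set of pages it is linked with, the loop stops as soon as `max_decoys` pages have been selected, and ties between pages of equal degree are broken by page name. The helper `build_graph`, the parameter `max_decoys`, the tie-breaking rule and the example site are all hypothetical and not part of the original algorithm description.

```python
def build_graph(edges):
    """Build an undirected graph (page -> set of linked pages) from link pairs."""
    graph = {}
    for a, b in edges:
        graph.setdefault(a, set()).add(b)
        graph.setdefault(b, set()).add(a)
    return graph


def select_decoy_pages(graph, max_decoys):
    """Selection function C applied greedily: pick the highest-degree page left."""
    degree = {page: len(neighbours) for page, neighbours in graph.items()}
    decoys = []                 # Dv, kept as a list to preserve selection order
    remaining = set(graph)      # Rv
    while remaining and len(decoys) < max_decoys:
        # Sorting first makes ties deterministic: the smallest name wins (assumption).
        best = max(sorted(remaining), key=lambda page: degree[page])
        decoys.append(best)
        remaining.remove(best)
    return decoys


# Hypothetical six-page site, used only to exercise the function.
site = build_graph([
    ("home", "news"), ("home", "products"), ("home", "about"),
    ("news", "archive"), ("products", "archive"), ("products", "contact"),
])
print(select_decoy_pages(site, 3))  # ['home', 'products', 'archive']
```

Because the degrees are computed once on the full graph and never updated, the loop is equivalent to taking the `max_decoys` pages of highest degree; it is written as a loop only to mirror the steps of the algorithm.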

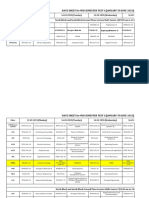

Fig.1: Typical presentation of Web-site hyperlinks in the form of a

concept tree

3. Proposed Detection Algorithm

After the Web-site has been modified to include the decoys, a detection algorithm is needed to detect the Web-DoS attack. Taking into account the 0.000421 false positive rate measured in the experiments of section V, it is safe to assume that if a click stream passes through a decoy (threshold=1) and the IP of the client is not registered as a valid bot, a Web-DoS attack is in progress. In order to exclude bots (such as msnbot, googlebot etc.) a white list could be used, as shown in the following pseudo-code:

    IF (HIT == DECOY)
        IF (SOURCE_IP != BOT)
            DoS = 1
        ENDIF
    ENDIF

In the case of a high false alarm rate (because of badly positioned decoy links in Web pages), the detection threshold could be increased.

V. EXPERIMENTAL RESULTS

The experimental results evaluate the proposed method in two directions. In the first direction, the problem of constructing the decoy hyperlinks in the Web-pages is addressed, while in the second direction, the positioning efficiency of the decoy hyperlinks is explored.

Initially, two types of decoy hyperlinks were inserted on a large Web-site, the main Web-server of the Department of Electrical Engineering and Computer Technology at the University of Patras, for a period of one month. Several such decoy hyperlinks were inserted into the most popular Web-pages of the site. In that period, 45121 hits were recorded and only 19 of them were decoy hyperlink hits originating from normal users (the rest were various bots). This indicates a probability of 0.000421 that a normal user would discover and follow a decoy hyperlink. Furthermore, of the 19 hits, only 3 users clicked on a decoy hyperlink twice in the same session. Thus, the injection of such decoy hyperlinks into Web-pages, combined with a simple threshold technique, can be used to detect Web-DoS attacks.

In the second direction, a simulation environment was built in Java, in which the three selection functions were tested. Each simulated Web-site consists of 500 pages, each with 40-50 random links to other pages. After the site is created, an attack is launched by requesting 1000 random click streams of length 5, and the probability that the attack passes through a decoy vertex is measured. The number of selected decoy vertices increases gradually until all vertices are marked as decoys. The results of the simulations for the three selection functions are shown in Fig. 2: the selection function C gives the best hit probability, followed by A and then by B.

Fig. 2 Probability of Web-DoS attack to pass from a decoy hyperlink

versus the number of decoy hyperlinks

If the position of the decoy hyperlinks is selected using the selection function C, the probability that a Web-DoS attack passes through a decoy hyperlink is approximately 0.45 using only 50 decoys (10% of the Web-site's pages). If the selection function A is used for 50 decoys, the detection probability is only 0.18. This result shows the significance of choosing a correct selection function. In the case of the graph of Fig. 1, the function C would select the vertices A, C and F.
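The kind of simulation described in this section can be approximated with the short Python sketch below. It is not the authors' Java environment. It assumes that every page draws 40-50 random links which are then treated as undirected, that each click stream starts at a uniformly chosen page and follows uniformly chosen links, and that a stream counts as a hit when it visits at least one decoy page. The function names and the `rng` parameter are hypothetical, and the sketch makes no claim to reproduce the probabilities reported above.

```python
import random


def random_site(n_pages=500, min_links=40, max_links=50, rng=random):
    """Random undirected site graph: every page draws min_links..max_links links."""
    graph = {page: set() for page in range(n_pages)}
    for page in graph:
        others = [p for p in range(n_pages) if p != page]
        for target in rng.sample(others, rng.randint(min_links, max_links)):
            graph[page].add(target)
            graph[target].add(page)   # undirected, as in the graph model G(V,E)
    return graph


def top_degree_pages(graph, n_decoys):
    """Selection function C: the n_decoys pages with the highest degree."""
    ranked = sorted(graph, key=lambda p: len(graph[p]), reverse=True)
    return set(ranked[:n_decoys])


def hit_probability(graph, decoys, n_streams=1000, length=5, rng=random):
    """Fraction of random click streams that visit at least one decoy page."""
    pages = list(graph)
    hits = 0
    for _ in range(n_streams):
        page = rng.choice(pages)          # assumed: streams start at a random page
        visited = [page]
        for _ in range(length - 1):
            page = rng.choice(sorted(graph[page]))
            visited.append(page)
        if any(p in decoys for p in visited):
            hits += 1
    return hits / n_streams


if __name__ == "__main__":
    rng = random.Random(0)
    site = random_site(rng=rng)
    for n_decoys in (10, 50, 100):
        decoys = top_degree_pages(site, n_decoys)
        print(n_decoys, hit_probability(site, decoys, rng=rng))
```

Passing a seeded `random.Random` instance as `rng` makes a run repeatable, which helps when comparing selection functions on the same simulated site.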

In the experimental results shown in Fig. 2, DoS attack click streams of length 5 are requested. However, if longer click streams are requested, the probability of detecting the attack increases. These experimental results are shown in Fig. 3.

Fig. 3 Detection probability of the Web-DoS attack versus the number of decoy hyperlinks using the selection function C. Click streams of lengths 6, 9 and 12 are shown.

VI. DETECTION METHOD

In the simulated Web-site described in the previous section, 10% of the Web-pages are marked with decoy hyperlinks using the selection function C, giving a detection probability of 0.45. The experiment assumes that an attack will select the decoy hyperlink and not any other real hyperlink in the Web-page. A real Web-page contains many links. If the attack program selects the links uniformly and 50% of the hyperlinks are decoys, then the probability of actually selecting the decoy hyperlink is 0.35*0.5=0.175. Although a decoy selection probability of 0.175 is not satisfactory in itself, in the case of a Web-DoS attack it is high enough to detect a single attack. In a DoS attack, thousands of such attempts are made. Therefore, in real Web attacks, the detection probability increases enormously, converging to the upper limit within a few milliseconds.

VII. CONCLUSIONS & FUTURE WORK

In this paper a method of detecting complex Web-based denial of service attacks is presented. The proposed method requires minimal modifications to the Web-site pages, and a simple real-time algorithm can be used to detect such attacks. Once an attack is detected, the attacker's IP address can be blocked for a period of time. Currently, work is being done on creating hidden decoy hyperlinks between pages (re-arranging the Web-site's structure) so that the probability that a decoy hyperlink will be hit during an attack is maximized.

REFERENCES

[1] Jonathan A. Zdziarski, mod_evasive, http://www.nuclearelephant.com/projects/mod_evasive/.

[2] Mukund Deshpande and George Karypis, Selective Markov Models for Predicting Web-Page Accesses, Technical Report #00-056, University of Minnesota, 2000.

[3] Acharyya Sreangsu, Ghosh Joydeep, Context-Sensitive Modeling of Web-Surfing Behaviour using Concept Trees, in Proceedings of the 5th WEBKDD Workshop, Washington, 2003.

[4] Alexander Ypma and Tom Heskes, Automatic Categorization of Web Pages and User Clustering with Mixtures of Hidden Markov Models, in Proceedings of the 4th WEBKDD Workshop, Canada, 2002.

[5] Weinan Wang and Osmar R. Zaiane, Clustering Web Sessions by Sequence Alignment, in Proceedings of DEXA Workshops, 2002, pp. 394-398.

[6] William G. Morein, Angelos Stavrou, Debra L. Cook, Angelos Keromytis, Vishal Misra, Dan Rubenstein, Using Graphic Turing Tests To Counter Automated DDoS Attacks Against Web Servers, Proceedings of the 10th ACM International Conference on Computers & Communications Security, Washington, 2003.

[7] D.L. Cook, W.G. Morein, A.D. Keromytis, V. Misra, D. Rubenstein, WebSOS: protecting web servers from DDoS attacks, Proceedings of the 11th IEEE International Conference on Networks (ICON), 2003, pp. 455-460.

[8] Srikanth Kandula, Dina Katabi, Matthias Jacob and Arthur W. Berger, Botz-4-Sale: Surviving Organized DDoS Attacks That Mimic Flash Crowds, 2nd Symposium on Networked Systems Design and Implementation, Boston, 2005.

[9] K. Poulsen, FBI Busts Alleged DDoS Mafia, http://www.securityfocus.com/news/9411/
