Virtual Reality - Lecture6-3DgraphicsVRsoftware

3D Graphics Rendering, scanning, VR software

SGN-5406 Virtual Reality

Autumn 2009

ismo.rakkolainen@tut.fi

OHJ-2700 Computer Graphics

http://www.realtimerendering.com/

Conference papers: http://trowley.org/

Rendering systems

Generate signals from 3D models to output devices

Transform computer representations of the virtual world into real-time images sent to the display devices

Visual, aural, haptic rendering

Hardware and software systems

30 fps = VR engine needs to calculate each frame in 33 ms

Challenges: speed, stability, user interaction, hardware limitations

Graphics Rendering

Visual rendering systems

The process of converting the 3D geometrical models of a virtual world into a 2D scene presented to the user

The conversion of a scene into an image

Software rendering system

Graphical rendering routines and formats

Parses a file containing prebuilt graphical shapes and/or instructions to generate the shapes that compose the image

Hardware for rendering the graphics

Display adapter

Graphics processor (GPU)

Hardware support for many complicated 3D drawing operations & effects

CG History

Vector vs. raster graphics

Sutherland Sketchpad demo

http://www.youtube.com/watch?v=USyoT_Ha_bA

Douglas Engelbart: The Mother of All Demos

http://www.youtube.com/watch?v=JfIgzSoTMOs

CG: Charles Csuri, Robert Abel

Computer animation from 1975

http://www.youtube.com/watch?v=wduTjxYCunI

1981 Early Computer Graphics

http://www.youtube.com/watch?v=CK7b7oc7hWI

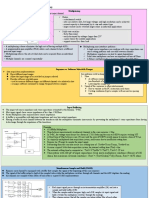

Illumination and Rendering

To paint the picture, figure out the color and intensity of light hitting each point in the image. The whole problem is very difficult

Photorealistic image synthesis: synthetic pictures that are indistinguishable from real photographs

Physically based rendering: model the way light interacts with the environment

Reflectance: the fraction of incoming light that is reflected

Transmittance: the fraction of incoming light that is transmitted into the material. Opaque materials have zero transmittance

Diffuse: The component of illumination which is reflected in all directions from a point on the surface of an object. Does not depend on the observer's position relative to the surface point

Specular: The component of illumination which is produced by reflection about the surface normal. It depends on the observer's position. Appears as a highlight on shiny surfaces

Ambient: The amount of illumination which is assumed to come from any direction and is thus independent of the presence of objects, the viewer position, or actual light sources in the scene

Polygonal rendering

The simplest method

Polygons are planar shapes defined by line segments

Normally 3 or 4 edges

Usually polygons are organized as polygon meshes for efficiency

Most real-time graphics is rendered using this method

Graphics hardware almost exclusively uses this method

Fast algorithms and special effects for polygonal rendering integrated into hardware geometry engines

Z-buffer: iterate over objects, drawing each one as a polygonal surface, and for each pixel keep track of the closest object that we have seen so far. The array to store this info is the z-buffer
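The z-buffer idea described above can be sketched in a few lines of Python. This is a minimal illustration, not how graphics hardware implements it: the `rect_fragments` helper is a hypothetical stand-in for a real polygon rasterizer, and the convention that smaller depth means closer to the viewer is an assumption.

```python
import math

def render_zbuffer(fragments, width, height, background=" "):
    """Keep, for every pixel, the color of the closest fragment seen so far.

    fragments: iterable of (x, y, depth, color) tuples; smaller depth = closer.
    """
    depth = [[math.inf] * width for _ in range(height)]   # the z-buffer
    color = [[background] * width for _ in range(height)]  # the image
    for x, y, z, c in fragments:
        if 0 <= x < width and 0 <= y < height and z < depth[y][x]:
            depth[y][x] = z
            color[y][x] = c
    return color, depth

def rect_fragments(x0, y0, x1, y1, z, c):
    """Hypothetical rasterizer stand-in: an axis-aligned rectangle at constant depth."""
    for y in range(y0, y1):
        for x in range(x0, x1):
            yield x, y, z, c

# Rectangle A is far away (depth 5), rectangle B is closer (depth 2) and overlaps it.
frags = list(rect_fragments(0, 0, 3, 3, 5.0, "A")) + list(rect_fragments(1, 1, 4, 4, 2.0, "B"))
image, zbuf = render_zbuffer(frags, 4, 4)
```

Because each pixel only compares depths, the result does not depend on the order in which the objects are drawn.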

Polygonal rendering

Vertex

A corner point of a polygon

Shared by neighbor polygons

[Figure: Dino with 1558 polygons; Dino with 638 polygons]

Geometrically based rendering systems

Non-uniform rational B-splines (NURBS)

Parametrically defined shapes

Can be used to describe curved objects

Octrees

Fractal geometry methods

Constructive solid geometry (CSG)

CSG objects are created by adding and subtracting simple shapes together

Spheres, cylinders, cubes, etc.

For example, a table can be created by adding five parallelepipeds, four as legs and one as tabletop
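The CSG table example mentioned above can be sketched with point-membership tests: each solid is a function that says whether a point lies inside it, and adding or subtracting solids combines those functions. The dimensions and the helper names (`box`, `union`, `difference`) are illustrative assumptions, not taken from any particular CSG package.

```python
def box(xmin, xmax, ymin, ymax, zmin, zmax):
    """Axis-aligned parallelepiped as an inside/outside predicate."""
    def inside(p):
        x, y, z = p
        return xmin <= x <= xmax and ymin <= y <= ymax and zmin <= z <= zmax
    return inside

def union(*solids):
    """Adding shapes: a point is inside if it is inside any operand."""
    return lambda p: any(s(p) for s in solids)

def difference(a, b):
    """Subtracting shapes: inside a but not inside b."""
    return lambda p: a(p) and not b(p)

# A table from five parallelepipeds: four legs and one tabletop (made-up sizes).
legs = [box(x, x + 0.1, y, y + 0.1, 0.0, 0.7) for x in (0.0, 0.9) for y in (0.0, 0.5)]
top = box(0.0, 1.0, 0.0, 0.6, 0.7, 0.75)
table = union(top, *legs)

# Subtracting a box cuts a hole through the tabletop.
top_with_hole = difference(top, box(0.4, 0.6, 0.2, 0.4, 0.0, 1.0))
```

A renderer would still have to turn such a description into polygons or ray intersections; the sketch only shows how the boolean combination itself works.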

Geometrically based rendering systems

CSG example: Here cylinders and a box have been added together. Another cylinder is then subtracted from the model to make a simple guitar model

Nongeometric rendering systems

Volume rendering

Well suited for semitransparent objects

3D pixels, voxels

E.g., medical applications

Commonly accomplished using ray-tracing

Light sources defined

Behavior of the light rays based on the laws of physics calculated by the computer

Particle rendering

Many small particles are rendered over time

Produces visual features that reveal the process of a larger phenomenon

Often used to show complex flow in virtual scene

Used in

Fire

Explosions

Smoke

Water flow

Animal group behavior etc

Scene Graph

Description of the current state of the world

Hierarchical organization of objects

Can change over time

World

Grouping nodes

Hierarchical transformation tree

Cumulating: Hand moves, fingers follow

Leaves

End a tree
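The cumulating transformations of a scene graph ("hand moves, fingers follow") can be illustrated with a small sketch. It uses 2D homogeneous 3x3 matrices to stay short; the `Node` class and the helper names are hypothetical and not taken from any scene graph library.

```python
import math

def translate(tx, ty):
    return [[1, 0, tx], [0, 1, ty], [0, 0, 1]]

def rotate(deg):
    c, s = math.cos(math.radians(deg)), math.sin(math.radians(deg))
    return [[c, -s, 0], [s, c, 0], [0, 0, 1]]

def matmul(a, b):
    return [[sum(a[i][k] * b[k][j] for k in range(3)) for j in range(3)] for i in range(3)]

class Node:
    """A scene graph node: a local transform plus child nodes (leaves have none)."""
    def __init__(self, name, local, children=()):
        self.name, self.local, self.children = name, local, list(children)

def world_positions(node, parent=None, out=None):
    """Walk the tree, accumulating parent transforms into each child."""
    out = {} if out is None else out
    world = node.local if parent is None else matmul(parent, node.local)
    out[node.name] = (world[0][2], world[1][2])  # translation part = node origin
    for child in node.children:
        world_positions(child, world, out)
    return out

finger = Node("finger", translate(1, 0))          # leaf
hand = Node("hand", translate(2, 0), [finger])    # grouping node
world = Node("world", translate(0, 0), [hand])    # root

before = world_positions(world)
hand.local = matmul(translate(2, 0), rotate(90))  # rotate only the hand
after = world_positions(world)
```

Only the hand's local transform was changed, yet the finger's world position moves from (3, 0) to (2, 1), because every child inherits the accumulated transform of its parents.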

Scene Graph

The rendering equation

At a particular position and direction, the outgoing light (Lo) is the sum of the emitted light (Le) and the reflected light. The reflected light is the sum of the incoming light (Li) from all directions, multiplied by the surface reflection and the cosine of the incoming angle

Describes the overall movement of light in a scene

http://en.wikipedia.org/wiki/Rendering_equation
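Written out, the verbal description above corresponds to the commonly quoted form of the rendering equation. The symbols beyond Lo, Le and Li are assumptions of this sketch: x is the surface point, n its normal, ω_o and ω_i the outgoing and incoming directions, Ω the hemisphere above the surface, and f_r the surface reflection function (BRDF).

```latex
L_o(x, \omega_o) = L_e(x, \omega_o)
  + \int_{\Omega} f_r(x, \omega_i, \omega_o)\, L_i(x, \omega_i)\, (\omega_i \cdot n)\, \mathrm{d}\omega_i
```

The factor (ω_i · n) is the cosine of the incoming angle, so light arriving at a grazing angle contributes less than light arriving along the normal.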

Surface Reflection

Light hits the surface from various directions. Some gets absorbed, some refracted, the rest is reflected. (Let's assume everything is opaque from now on)

If nothing is in the way, part of the reflected light will reach the camera, and form the image

Different materials reflect light in different (and complicated) ways, which is why they look different

The details of reflection depend on the surface's microstructure

[Figure: Incident Light, Reflected Light, Camera, Surface]

Lambertian (Diffuse) Reflection

The simplest kind of reflector to model is an ideal diffuse reflector, also called a Lambertian reflector or chalk

Incoming light is scattered equally in all directions, so brightness doesn't depend on view direction

Reflected brightness depends on the direction and brightness of illumination (incoming light). This is given by the cosine of light/normal angle

$I = I_p k_d \cos\theta$

$I_p$: Light source intensity

$k_d$: Surface reflectance (between 0 and 1)

$\theta$: Light / Normal Angle

[Figure: surface normal N and light direction L]

Basic Shading Models

Historically, shading in computer graphics and VR started with the ultimately simple Lambertian model (ideal diffuser) and more and more terms were gradually added in to account for additional effects. (Gouraud -> Phong -> )

Simple shading methods

Flat shading for Lambertian surfaces

Gouraud shading makes polygons look softer

Phong shading for specular highlights

[Figure: Gouraud shading; vectors N, L, v and angle $\theta$]

The Lambertian model, with an ambient light term added in

$I = I_a k_a + I_p k_d (N \cdot L)$

$I_p$: Light source intensity

$k_d$: Surface reflectance (between 0 and 1)

$\theta$: Light / Normal Angle

$I_a$: Ambient light intensity

$k_a$: Ambient reflectance (between 0 and 1)
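As a small worked example, the ambient-plus-diffuse formula above can be evaluated directly. The function name `shade` and the clamping of N·L to zero for surfaces facing away from the light are assumptions of this sketch; the slides give only the formula itself.

```python
import math

def normalize(v):
    length = math.sqrt(sum(c * c for c in v))
    return tuple(c / length for c in v)

def dot(a, b):
    return sum(x * y for x, y in zip(a, b))

def shade(normal, to_light, Ia, ka, Ip, kd):
    """I = Ia*ka + Ip*kd*(N.L), with N and L normalized to unit length."""
    n, l = normalize(normal), normalize(to_light)
    n_dot_l = max(0.0, dot(n, l))  # assumption: no negative light on back faces
    return Ia * ka + Ip * kd * n_dot_l

# Light straight above the surface: full diffuse term.
head_on = shade((0, 0, 1), (0, 0, 1), Ia=0.2, ka=0.5, Ip=1.0, kd=0.8)

# Light 60 degrees off the normal: cos(60 deg) = 0.5 halves the diffuse term.
tilted = shade((0, 0, 1), (math.sin(math.radians(60)), 0, math.cos(math.radians(60))),
               Ia=0.2, ka=0.5, Ip=1.0, kd=0.8)

# Light behind the surface: only the ambient term remains.
behind = shade((0, 0, 1), (0, 0, -1), Ia=0.2, ka=0.5, Ip=1.0, kd=0.8)
```

The view direction does not appear anywhere in the computation, which is exactly the property of a Lambertian surface.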

Ray tracing

Photo-realistic images of nearly any scene

Shadows, reflections, specular highlights, textures

Global illumination based rendering method

A point sampling algorithm

Sample a continuous image in world coordinates by shooting one or more rays from the eye through each pixel

Leads to aliasing: anti-aliasing needed

The rays are tested against all objects in the scene to determine if they intersect any objects

If we hit a light source, we're done

If we hit a surface, we ask where the light impinging at that surface came from by shooting out more rays recursively

If the ray misses all objects, then that pixel is shaded the background color

[Figure: rays and the Image Plane] Some rays (like A and E) never reach the image plane at all. Others follow simple or complicated routes.
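The first steps of the algorithm above (shoot a ray from the eye through each pixel, test it against all objects, fall back to the background color on a miss) can be sketched as follows. The scene consists of spheres only, the camera setup and all names are assumptions of this sketch, and the recursive secondary rays are deliberately left out.

```python
import math

def hit_sphere(origin, direction, center, radius):
    """Nearest positive ray parameter t of a ray/sphere hit, or None on a miss.

    direction is assumed to have unit length.
    """
    oc = tuple(o - c for o, c in zip(origin, center))
    b = 2.0 * sum(d * o for d, o in zip(direction, oc))
    c = sum(o * o for o in oc) - radius * radius
    disc = b * b - 4.0 * c
    if disc < 0:
        return None
    root = math.sqrt(disc)
    for t in ((-b - root) / 2.0, (-b + root) / 2.0):
        if t > 1e-6:
            return t
    return None

def trace(origin, direction, spheres, background="."):
    """Test the ray against all objects and return the symbol of the closest hit."""
    nearest, symbol = math.inf, background
    for center, radius, name in spheres:
        t = hit_sphere(origin, direction, center, radius)
        if t is not None and t < nearest:
            nearest, symbol = t, name
    return symbol

def render(spheres, width=9, height=5):
    """One eye ray through the center of each pixel of an image plane at z = -1."""
    rows = []
    for j in range(height):
        row = ""
        for i in range(width):
            x = (i + 0.5) / width * 2 - 1
            y = 1 - (j + 0.5) / height * 2
            length = math.sqrt(x * x + y * y + 1)
            row += trace((0, 0, 0), (x / length, y / length, -1 / length), spheres)
        rows.append(row)
    return rows

spheres = [((0, 0, -3), 1.0, "o"), ((0, 0, -6), 3.0, "#")]
rows = render(spheres)
```

Calling `print("\n".join(rows))` shows the small sphere in front of the large one. One sample per pixel is the point sampling that causes the aliasing mentioned above; a full ray tracer would also spawn shadow, reflection and transparency rays at each hit.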

Ray tracing

Ray types

Eye rays bring light directly to the image (pixel rays)

Illumination rays bring light directly from a light source to an object surface (shadow rays)

Reflection rays carry light reflected from a surface

Transparency rays carry light passing through an object

Each ray must be checked against all objects

Each ray may generate many more rays

Bad for VR, if too much calculation

Several approaches to speeding up computations:

1. Use faster machines

2. Use specialized hardware, especially parallel processors

3. Speed up computation by using more efficient algorithms

4. Reduce the number of ray - object computations

E.g., PovRay software

Radiosity

Attempts to simulate the complex inter-reflections of illuminated surfaces. Good especially for static and diffuse environments

A lot of computation needed, often not real-time

Precomputed illumination!

Well suited for VR!

Unfortunately few environments are purely diffuse and static (especially in dynamic VR) -> progressive radiosity etc.

Ray tracing and radiosity are complementary photorealistic techniques => hybrids, improvements

Cornell radiosity box:

http://www.graphics.cornell.edu/online/box/compare.html

Other imaging methods

Light field rendering, computational imaging, Lumigraphs, multi-camera arrays

http://www-graphics.stanford.edu/projects/lightfield/

Image-based rendering

Warping image sets

http://cs.unc.edu/~ibr/

Spherical panoramas & videos

QuickTime VR, etc.

Special models for natural and synthetic objects

Waves, plants, humans, clouds,

Non-photorealistic rendering

Texture Mapping

Uniformly colored, flat shaded surfaces are boring and rare

Real objects invariably have textures

We can scan textures in from the real world (e.g., wood grain) or paint them, or generate them algorithmically, and store them in 2D image arrays

Once we have the image, we map the (u, v) coordinates of a parametric surface onto the indices of the image array. During rendering, each time we sample the surface and compute a color, we fetch the appropriate value from the map

This technique is called parametric texture mapping

It has no great overhead, except for anti-aliasing texture-mapped images

Increases realism significantly
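Mapping (u, v) coordinates onto the indices of the image array can be sketched as a lookup function. The choice of bilinear interpolation between the four nearest texels, the [0, 1] range for u and v, and the name `sample_texture` are assumptions of this sketch.

```python
def sample_texture(texture, u, v):
    """Fetch a value from a 2D image array at surface coordinates (u, v) in [0, 1].

    texture is a list of rows of grayscale values; the result is bilinearly
    interpolated between the four nearest texels.
    """
    height, width = len(texture), len(texture[0])
    x = min(max(u, 0.0), 1.0) * (width - 1)
    y = min(max(v, 0.0), 1.0) * (height - 1)
    x0, y0 = int(x), int(y)
    x1, y1 = min(x0 + 1, width - 1), min(y0 + 1, height - 1)
    fx, fy = x - x0, y - y0
    top = texture[y0][x0] * (1 - fx) + texture[y0][x1] * fx
    bottom = texture[y1][x0] * (1 - fx) + texture[y1][x1] * fx
    return top * (1 - fy) + bottom * fy

# A 2x2 checker pattern as the smallest possible texture.
checker = [[0.0, 1.0],
           [1.0, 0.0]]
center = sample_texture(checker, 0.5, 0.5)  # blend of all four texels
```

Interpolating between texels is one simple way to soften the blockiness that plain nearest-texel lookup produces; proper anti-aliasing of minified textures needs area averaging on top of this.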

Texture Mapping (cont.)

Texture Map -> Texture transformation -> Surface of objects -> Viewing transformation -> Pixel on screen

Pixel on screen -> inverse of viewing transform -> Surface of objects -> inverse of texture transformation + bi-linear interpolation + area weighted color averaging -> Texture Map

[Figure axis labels: x, y, v]

Bump Mapping

Basic texture maps just paint things onto a smooth surface, but bump maps make a rough textured surface

We could add millions of little polygons with their vertices jiggled around

Instead, we can just perturb the normal vector at each surface point before the shading calculation. The surface geometry doesn't change, but we use shading to make it appear that it does

[Figure: Bump Map (a grid of 0 and 1 height values) -> Perturbing Surface Normals -> Bump Mapped Surface (Appearance)]
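Perturbing the surface normal from a bump map can be sketched as follows. The sketch assumes a flat surface with normal (0, 0, 1), treats the bump map as a height field, and tilts the normal against the local height gradient; the central-difference gradient, the `strength` parameter and the function names are illustrative choices, not something the slides prescribe.

```python
import math

def bumped_normal(height_map, x, y, strength=1.0):
    """Normal of a flat surface (0, 0, 1), tilted by the height gradient at (x, y)."""
    rows, cols = len(height_map), len(height_map[0])

    def h(i, j):
        # Clamp indices so border texels reuse their nearest neighbor.
        return height_map[min(max(j, 0), rows - 1)][min(max(i, 0), cols - 1)]

    dhdx = (h(x + 1, y) - h(x - 1, y)) / 2.0
    dhdy = (h(x, y + 1) - h(x, y - 1)) / 2.0
    n = (-strength * dhdx, -strength * dhdy, 1.0)
    length = math.sqrt(sum(c * c for c in n))
    return tuple(c / length for c in n)

def diffuse(normal, to_light):
    """Lambertian term N.L for unit vectors, clamped at zero."""
    return max(0.0, sum(a * b for a, b in zip(normal, to_light)))

# A small bump map of 0 and 1 height values: a raised square in the middle.
bump_map = [
    [0, 0, 0, 0],
    [0, 1, 1, 0],
    [0, 1, 1, 0],
    [0, 0, 0, 0],
]

s = 2 ** -0.5
light = (-s, 0.0, s)                         # light coming from the upper left
flat = bumped_normal(bump_map, 0, 0)         # undisturbed corner
slope = bumped_normal(bump_map, 1, 1)        # rising edge of the bump
```

The geometry is never touched: only the normal fed into the shading calculation changes, so the slope facing the light comes out brighter than the flat area.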

Environment Maps

Paint six images on the faces of a cube, to make a background image that surrounds your objects. This could be clouds, sky, mountains, or a room

When you do the shading calculation, use the normal and view vectors to figure out the light direction that would produce a specular ray to the viewer

Intersect that light direction with the environment map

Get the color from the environment map, and add in a light source with that color

Result: you see an (approximate) image of the environment reflected from shiny surfaces

A very effective and cheap hack that's widely used as an alternative to ray-tracing

Graphics rendering pipeline

Classically, the model to scene to image conversion is broken into finer steps, called the graphics pipeline

Commonly implemented in graphics hardware to get interactive speeds

Frame rate VERY important for VR, ~30fps

Stereoscopic rendering requires suitable graphics cards with dual output

Sequence of alternating left and right images displayed on a monitor/HMD

time-sequential, field-sequential, frame-sequential, or image-sequential

http://3d.curtin.edu.au/3D-PC/index.html

[Figure: pipeline stages: Polygons; Model, view transf.; Compute lighting; Projection, clipping; Screen mapping; Scan line convers.; Z-buffer, shading]

Graphics rendering pipeline

At a high level, the graphics pipeline usually looks like this

[Figure: pipeline diagram; recoverable labels: Backface culling, Pixels]

Graphics rendering pipeline

Application stage

Done entirely in software by the CPU

Reads the world geometry database

Reads the user's input

Mice, keyboard, trackers, gloves etc.

Changes the view to the simulation or orientation of virtual objects in response to the user input

Simulations that drive the application

Physics, AI, collision detection, animation

Graphics rendering pipeline

Geometry stage

Implemented in hardware (or software)

Model transformations

Translation, rotation, scaling etc.

Lighting computations

Calculate the surface color based on

The type and number of light sources

The lighting model

The surface material properties

Atmospheric effects (fog etc.)

The scene will be shaded and looks more realistic

Scene projection

Clipping

Mapping

Display, rasterizer stage

Vertex info to display pixels

Display adapter

A small specialized computer inside the computer, consisting of

The processor for graphics, GPU (Graphics Processing Unit)

Memory, memory bus, motherboard connection bus (PCI Express)

Monitor connectors (Analog: VGA, S-Video. Digital: DVI, HDMI, DisplayPort)

The graphics are processed in the graphics processing unit (GPU)

Typically situated on display adapter card

Integrated graphics solutions

High-end cards used to cost $100,000+ only 10 years ago!

Largest manufacturers are NVIDIA and ATI

Powerful GPUs at reasonable prices (also Intel, Matrox etc)

3D capability also emerging on smartphones

GPU can be used also for many other calculations

Upcoming AMD Eyefinity: 6 displays per card

Multiple cards, up to 268 megapixels

3D Graphics Libraries

Programming 3D graphics in low level is done using some graphics library

OpenGL, ES

Direct3D

Part of DirectX

PCs, also for Xbox 360

M3G, Mobile 3D Graphics API

An API for Java programs that produce 3D computer graphics

Extends the capabilities of the Java Platform, Micro Edition for mobile phones etc.

High level 3D API, but may be slow (often no floating point unit)

Modern GPUs support DirectX and OpenGL standards

http://www.graphicsformasses.com/

http://people.csail.mit.edu/kapu/EG_09_MGW/

OpenGL

Hides the differing capabilities of hardware and the complexities of interfacing with different 3D accelerators through a uniform API. Converts primitives into pixels

OpenGL 3.1

Released in 2009, backward compatible

OpenGL ES

Subset of OpenGL, especially for mobile devices

OpenGL Shading Language (GLSL)

More direct control of the graphics pipeline without assembly or hardware-specific languages

Managed by Khronos consortium

http://www.khronos.org/

opengl-redbook.com

http://www.glprogramming.com/red/

OpenGL tutorial: http://nehe.gamedev.net/

Display adapter bus

PCI

Some years ago the display cards were connected to a standard PCI slot

Very slow interface, would not be suitable for modern graphics

AGP

Now replaced by PCI Express

PCI Express, 2.0, 3.0

Faster than AGP, has replaced AGP

Direct Transport Compositor by Scalable Graphics is a middleware that enables any number of graphics cards and processors to concentrate their effort on a single display

No modification to the application

Higher frame rates and anti-aliasing

Stereovision support

http://www.scalablegraphics.com/

Level of Detail

Level of Detail (LOD): a usual trick or approximation to increase rendering speed

Use as low level a 3D model as possible, depending on distance

Billboards are textures which replace polygons altogether. They turn towards the viewpoint
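Choosing a model by distance can be sketched as a simple table lookup. The distance thresholds and model names below are made up for illustration (the polygon counts echo the two Dino models shown earlier); real toolkits typically also blend between levels to hide the switch.

```python
def select_lod(distance, levels):
    """Pick the first level whose maximum distance covers the viewer distance.

    levels: list of (max_distance, model_name), sorted by increasing max_distance.
    """
    for max_distance, model in levels:
        if distance <= max_distance:
            return model
    return levels[-1][1]  # beyond every threshold: use the coarsest level

# Hypothetical thresholds: detailed mesh up close, coarse mesh further away,
# and a billboard (a textured quad facing the viewer) in the far distance.
dino_levels = [
    (10.0, "dino_1558_polygons"),
    (50.0, "dino_638_polygons"),
    (float("inf"), "dino_billboard"),
]

near_model = select_lod(5.0, dino_levels)
far_model = select_lod(1000.0, dino_levels)
```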

3D Rendering

Much more realism & speed, lower price in recent years

Much bigger polygon models and data sets

Optimization

Performance affected by e.g.,

Number of lights, vertices, pixel sizes

Bottlenecks

1. Locate the bottlenecks

2. Remove them

3. If needed, Goto 1

If Application stage takes 30ms, Geometry stage takes 20ms, Rasterizer stage takes 40ms

The maximum framerate is then 1/40ms=25Hz

Optimizing the application stage won't make the app go any faster

Could get more time for doing more complex lighting equations (20ms idle time in Geometry stage!)

Set up a number of tests, where each test only affects one stage at a time

Increase performance by

ad hoc formulations (fake it), low-res, HW, precomputation

Reducing the amount of data, disabling lights, fog, blending

Resizing window, reduce the number/size of textures, using LOD, billboards
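The bottleneck arithmetic from the optimization example (30 ms, 20 ms and 40 ms stages) can be reproduced with a short sketch. It assumes the three stages run in parallel as a pipeline, so the slowest stage alone sets the frame time; the function name `analyze_pipeline` is made up for illustration.

```python
def analyze_pipeline(stage_ms):
    """Return the bottleneck stage, the maximum frame rate, and idle time per stage."""
    bottleneck = max(stage_ms, key=stage_ms.get)
    frame_ms = stage_ms[bottleneck]          # slowest stage sets the frame time
    idle = {name: frame_ms - t for name, t in stage_ms.items()}
    return bottleneck, 1000.0 / frame_ms, idle

stages = {"application": 30.0, "geometry": 20.0, "rasterizer": 40.0}
bottleneck, fps, idle = analyze_pipeline(stages)

# Speeding up a stage that is not the bottleneck leaves the frame rate unchanged.
faster_app = dict(stages, application=10.0)
_, fps_after, _ = analyze_pipeline(faster_app)
```

With these numbers the rasterizer is the bottleneck, the maximum frame rate is 25 Hz, and the geometry stage sits idle for 20 ms per frame, which is the time that could be spent on more complex lighting.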

3D Modeling

Many ways to acquire 3D data & to create 3D models

Usually laborious, a bottleneck

Geometry, textures, others?

Semiautomatic ways to create models?

3D Modeling

CAD, 3D modeling software

3D Studio MAX, Alias|Maya, Houdini, SolidWorks, LightWave3D

AutoCAD, CATIA, Presagis, Multigen, Cinema 4D, XSI etc.

http://www.blender3d.org/

www.students.autodesk.com

http://sketchup.google.com/

3D editors in VR toolkits

Existing 3D models

Send images, get 3D model: http://3dsee.net/

Utah teapot is a classic 3D model since the 1970s

http://www.sjbaker.org/teapot/

Importing CAD files (2D, 3D)

3D object databases

3dtotal.com. Smithmicro Poser 8

GIS, aerial stereo photographs

Google Earth, 3D warehouse

3D digitizers & scanners

Other methods

Flat-land Inc.: 3DML (easy)

AT&T's WordsEye: speech description

3D Digitizers

Measurement Arms (Faro, Romer etc.)

3D pantographs (Microscribe, Hyperspace etc.)

Tactile robots

CMM (Coordinate Measuring Machine)

Separate points, even microns

Based on touch, laborious, slow, expensive

Optical 3D Scanners

Fast way to create models

Laser range scanners and/or computer vision

Usually structured light / laser, which makes things easier

Sometimes no emitted light, but images only

Much harder computationally

Problems are glass, dark, and glossy surfaces

Polhemus FastScan

Cyrax 2500

DAVID-Laserscanner: freeware for 3D laser scanning

http://www.david-laserscanner.com/

3D desktop scanning, $500

http://www.real-view3d.com/

Illumination capturing, Aguru dome

http://www.aguruimages.com/

3D Scanners

Many discontinued products

Microscribe, 3500 - 7000

Cyrax 2500, 150,000

Polhemus Fastscan

Freepoint3D

http://www.inspeck.com/

http://www.eyetronics.com/

Cyberware

3Q, http://www.3q.com/

http://www-2.cs.cmu.edu/~cil/v-hardware.html

3D Digital Cameras

E.g., 3D VuCam

http://www.stereovisioninc.com/

Visionsense 3D medical camera

Helps in minimally invasive surgery

Images to Models

Canoma, Geometra, D Sculptor

Minolta: 3D digital camera, 4000, discontinued

(Semi)automatic Modeling

3D City Engine, semiautomatic city models

www.procedural.com

3D Scanning Procedure

Data acquisition

Calibration

Surface registration

Mesh reconstruction

Data modeling and smoothing

Texture reconstruction

Bottlenecks:

Content creation, 3D-modeling, texturing

Virtualized Reality

Carnegie-Mellon University 2001

Multiple strategically located cameras, laser range scanners or such

Capture, analyze, model real events

3D + time = 4D digitization

http://www.cs.cmu.edu/afs/cs/project/VirtualizedR/www/VirtualizedR.html

Space Shuttle Radar Topography Mission

Launched in 2000

Radar interferometry

Large scale Earth 3D modeling

80% of Earth's landmass (60° N to 56° S)

12.3 Terabytes total data

Digital topographic map

30 m x 30 m spatial sampling

<=16 m absolute vertical height accuracy

High dynamic range imaging

High dynamic range imaging allows a greater dynamic range of luminance between light and dark areas of a scene

Spherical Imaging etc.

Panoramic, spherical images and videos provide a way for look-around and telepresence applications. Limited moving may be possible

Immersive Media Corp., a leader in 360° spherical video technology, http://demos.immersivemedia.com

Panoramic cameras: fisheye, http://immervision.com/en/home/index.php

D7, stitch, no camera movement, http://scallopimaging.com/

Multiple positional IR sensors, moving camera, http://luciddimensions.com/

Spherical video, Point Grey Ladybug 3, http://www.ptgrey.com/

Softkinetic 3D cameras, http://www.softkinetic.net

Lots of panoramic pictures: http://www.vrmag.org/, http://www.gregdowning.com/, www.world-heritage-tour.org

Google's Street View with panoramic streetviews

Microsoft Photosynth takes regular photographs and reconstructs the scene or object in a 3D environment. Share them with friends. http://photosynth.net/

Microsoft research HD View Gigapixel Panoramas, http://research.microsoft.com/en-us/um/redmond/groups/ivm/HDView/

Computational Photography

Artificial focus, super-resolution, etc.

Adobe 3D lens

Liquid lenses (Rensselaer Poly), Fresnel, etc.

Origami optics, adaptive optics (astronomy)

Raskar, Tumblin: Computational Photography

computationalphotography.org

Course: http://web.media.mit.edu/~raskar/photo/2008

Plenoptic Cameras

Integral imaging

Lens arrays capture & display the 3D image

E.g., Stanford multi-aperture camera

Slightly overlapping 16x16 subarrays

Each subarray has its own microlens

3D depth computationally

Can reduce noise, super-closeups

Lens: lithography, semiconductor processes

Video or still camera arrays for improved imaging, acquisition, special effects

Disadvantages

Lower resolution

Power consumption, 10x calculation

Requires textures

Kinematics Modeling

Location, rotation and motion in 3D world

Kinematic control: moving objects without forces

Dynamic control: calculate forces to produce the motions

Skeleton, muscle, skin layers

Parent-child hierarchical relations (body, hand, finger)

Direct kinematics

Specifying rotations all the way from root node to leaf node to get the leaf node's position and rotation

Inverse kinematics (IK)

The opposite of direct kinematics, heavily used in robotics

Position/rotation of the leaf node is known, calculate all rotations up to the root node

Used in interaction, motion capture, modeling tools

Closed or numerical solutions

Together IK and a hierarchical skeleton enable specific motions in real-time
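Direct and inverse kinematics can be contrasted on the smallest useful skeleton, a planar arm with two links. The sketch below is an assumption-laden illustration: the link lengths, the 2D setting and the function names are made up, and the inverse function returns only one of the two possible elbow configurations of the closed-form solution.

```python
import math

def forward(l1, l2, theta1, theta2):
    """Direct kinematics: joint angles (radians) -> position of the leaf node."""
    x = l1 * math.cos(theta1) + l2 * math.cos(theta1 + theta2)
    y = l1 * math.sin(theta1) + l2 * math.sin(theta1 + theta2)
    return x, y

def inverse(l1, l2, x, y):
    """Inverse kinematics, closed-form: leaf position -> joint angles."""
    cos_t2 = (x * x + y * y - l1 * l1 - l2 * l2) / (2 * l1 * l2)
    if not -1.0 <= cos_t2 <= 1.0:
        raise ValueError("target out of reach")
    theta2 = math.acos(cos_t2)
    theta1 = math.atan2(y, x) - math.atan2(l2 * math.sin(theta2),
                                           l1 + l2 * math.cos(theta2))
    return theta1, theta2

# Round trip: ask IK for angles that reach (1, 1), then verify with direct kinematics.
theta1, theta2 = inverse(1.0, 1.0, 1.0, 1.0)
reached = forward(1.0, 1.0, theta1, theta2)
```

Longer chains (a whole arm with fingers, for instance) generally have no such closed form, which is where the numerical solutions mentioned above come in.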

Physical Modeling

Gravitation, friction

Elasticity, collisions etc.

Force feedback

Collision detection

Collision, surface deformation, force computations

Collision detection software:

I-COLLIDE, SWIFT++, OPCODE, AABB trees, SOLID

Vortex, Novodex, Havok, VPS-PBM, Renderware Physics, Meeqon Physics SDK

Behavior Modeling

Human or crowd behavior modeling

Virtual humans (avatar = 3D model of an online user)

Autonomic objects, intelligent VEs, realistic scenarios

Applied e.g. in games

Methods: scripting, fuzzy logic, neural networks, ...

Simulation of emotions

Artificial intelligence

VR Toolkits

VR Toolkits

Commercial VR software and hardware: e.g., http://www.est-kl.de/

List of VR software: http://www.diverse.vt.edu/VRSoftwareList.html

Low-level programming (e.g. OpenGL) would be possible, but hard

WorldToolKit is a multi-platform software development system for building

high-performance, real-time, integrated 3D applications for scientific and

commercial use

A set of API, function libraries and tools to create, prototype, develop, configure,

manage and commercialize applications

Support for networked distributed simulations

Options for immersive displays, and I/O devices (HMDs, trackers, etc)

3DVIA Virtools, many plugins

The Lightning VR system can be used either as a development system or as

a runtime module under Unix/Linux

OpenGL Performer is a programming interface helping developers to create

real-time simulation 3D graphics applications

Sets of functions for 3D models, I/O and communications. API allows the user to

make adjustments using either the incorporated language or use C/C++ modules

even during run-time

WorldViz Vizard

OpenSG

Alice 3 beta software, http://www.alice.org/

CAVElib is a C programming interface that can be combined with

Performer, Open Inventor or other OpenGL renderer to manage VR

systems and help building VR applications:

Input device interface libraries

A number of libraries are available to simplify the application developer's task of managing the various input devices in VR systems (commercial, e.g., trackd; open source, e.g., VRPN, OpenTracker)

Capabilities that help in complex applications such as VR, scientific visualization, interactivity and CAD. Incorporates capabilities of using multi-processor and multi-graphics pipelines. Built on top of the OpenGL standard graphics library and combines ANSI C and C++

VR Toolkits

OpenSceneGraph

DIVERSE is a cross-platform, open source API for developing VR on Linux or Windows. Lets developers quickly build applications that will run on the desktop or immersive systems. DIVERSE can interact with other APIs and toolkits

Collada is an initiative to create a portable 3D file format

Maverik - VR micro kernel, http://directory.fsf.org/project/maverik/

VRED, vred.org

PeopleShop, PeoplePak

Real-time physics engines

Collisions, rigid body dynamics

Vortex, Novodex, Havok , VPS-PBM

Open source: ODE (Open Dynamics Engine)

VR Juggler is an open-source VR application development framework

APIs that help the programmer on interface aspects, display surfaces,

tracking, navigation, graphics and rendering techniques

The VR Juggler Suite includes a virtual application, a device

management system (local or remote access to I/O devices), a

standalone generic math library, a portable runtime, a simple sound

abstraction, a distributed model view-controller implementation and an

XML-based configuration system

VR Toolkits

(VR professor Randy Pausch's "Last Lecture": youtube.com/watch?v=ji5_MqicxSo)

Display configurations, multi-pipe parallel rendering, stereo and tracking

Presagis AI.implant is an AI authoring and runtime software

Simulated characters make sophisticated context specific decisions

Authoring the AI world, creating rules used for decision making

ViCrowd, PeopleShop, MRE, games

Open Inventor

VRML / X3D libraries

Java3D

Alias MOCAP is a real-time motion capture system designed

for the capture, editing, and blending of motion data

Alias Online for broadcast productions

Alias MOTIONBUILDER to create 3D character animation

The MotionMonitor is a real-time 3D motion capture system

Integrates 3D animation and live video

3D characters that interact with live actors in real time

Advanced data capture and analysis package

Designed for use in medical research; physical therapy clinics;

sports medicine labs; motor control, balance, neurological, and gait

studies; golf, tennis and baseball instruction

Haptics libraries

Device-dependent, e.g. GHOST, ReachIn API,

Sensable FreeForm for modeling

Immersion VirtualHand SDK

A very large model visualization with Realityserver

Commercial & free VR packages

www.mentalimages.com/realityserver

http://conferences.computer.org/vr/2009/IE3_tutorialMaterials.html

Development environments for adding hand-motion capture, hand-interaction and force feedback to simulation applications


- Cloud Computing Internet MaterialDocument3 pagesCloud Computing Internet MaterialsgrrscNo ratings yet

- The Unwinding: An Inner History of the New AmericaFrom EverandThe Unwinding: An Inner History of the New AmericaRating: 4 out of 5 stars4/5 (45)

- AR ITerm BroDocument6 pagesAR ITerm BrosgrrscNo ratings yet

- Guidelines For Abstract PreparationDocument1 pageGuidelines For Abstract PreparationsgrrscNo ratings yet

- Security For GIS N-Tier Architecture: Mgovorov@usp - Ac.fjDocument14 pagesSecurity For GIS N-Tier Architecture: Mgovorov@usp - Ac.fjsgrrscNo ratings yet

- Protection: Shentao@casm - Ac.cnDocument9 pagesProtection: Shentao@casm - Ac.cnsgrrscNo ratings yet

- Zero WatermarkingDocument4 pagesZero WatermarkingsgrrscNo ratings yet

- Spatial Data Authentication Using Mathematical VisualizationDocument9 pagesSpatial Data Authentication Using Mathematical VisualizationsgrrscNo ratings yet

- Wisa 09 P 190Document4 pagesWisa 09 P 190sgrrscNo ratings yet

- The Sympathizer: A Novel (Pulitzer Prize for Fiction)From EverandThe Sympathizer: A Novel (Pulitzer Prize for Fiction)Rating: 4.5 out of 5 stars4.5/5 (121)

- AcsacDocument9 pagesAcsacsgrrscNo ratings yet

- Referencer - DA Rates (Dearness Allowances Rates)Document3 pagesReferencer - DA Rates (Dearness Allowances Rates)sgrrscNo ratings yet
