Informatica Economică, nr. 2 (42)/2007

Components of a Business Intelligence software solution

Daniel BĂLĂCEANU

TotalSoft

www.totalsoft.ro

Business intelligence (BI) is a business management term which refers to applications

and technologies which are used to gather, provide access to, and analyze data and

information about company operations. Business intelligence systems can help companies

have a more comprehensive knowledge of the factors affecting their business, such as metrics

on sales, production, internal operations, and they can help companies to make better

business decisions.[3]

The ability to extract and present information in a meaningful way is vital for any business

management application. Business Intelligence enables companies to make better decisions

faster than ever before by providing the right information to the right people at the right time.

Employees increasingly find that they suffer from information overload and need solutions that provide the analysis to make decisions effectively. Whether they are working on the strategic, the tactical, or the operational level, business intelligence applications provide tools that make informed decisions a more natural part of every employee's everyday work experience.

Keywords: business intelligence, KPI (Key Performance Indicator), software architecture,

data warehouse, pivot table

Introduction

Some of the reporting and analytics solutions that form a BI solution can be delivered as part of the core of business applications, while others are additional products that extend the business applications. Key goals of these solutions are to provide:

- An integrated platform and applications
- A secure and personalized user experience
- A collaborative environment
- A total solution that is cost effective and comprehensive

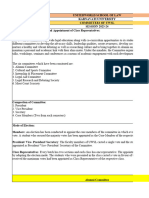

Figure 1 shows all the components of a

business intelligence architecture including

the users. This article describes all of the

components and their relationships.

A solid BI architecture: the multidimensional data warehouse

The multi-dimensional data warehouse is the core of the business intelligence environment. Basically it is a large database containing all the data needed for performance management. The modeling techniques used to build up this database are crucial for the functioning of the BI solution.

Typical characteristics of the data warehouse are that:

- it contains time-invariant data; in other words, a report run two months ago can be reproduced today (if launched with the same parameters)
- it contains integrated data, where integrated means that the same business definitions are used throughout the data warehouse
- it contains atomic data, and not aggregated data as is often assumed, because users may (and often do) need the lowest level of detail

To get a good understanding of what a multidimensional data warehouse is, it is important to understand the multidimensional modeling techniques that ensure the above characteristics.

A data warehouse is the main repository of the organization's historical data, its corporate memory [1]. For example, an organization would use the information that's stored in its data warehouse to find out what day of the week they sold the most widgets in May 1992, or how employee sick leave the week before the winter break differed between California and New York from 2001 to 2005. In other words, the data warehouse contains the raw material for management's decision support system. The critical factor leading to the use of a data warehouse is that a data analyst can perform complex queries and analysis (such as data mining) on the information without slowing down the operational systems.
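The widget question above can be sketched as a query over atomic sales rows. This is only an illustration: the `sales` table, its columns and the invented quantities are assumptions, and an in-memory SQLite database stands in for a real warehouse.

```python
import sqlite3
from datetime import date, timedelta

# Hypothetical atomic sales facts: one row per day (date, product, quantity).
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE sales (sale_date TEXT, product TEXT, quantity INTEGER)")

start = date(1992, 5, 1)
rows = []
for offset in range(31):                      # every day of May 1992
    day = start + timedelta(days=offset)
    qty = 10 if day.weekday() == 4 else 3     # invented data: Fridays sell more
    rows.append((day.isoformat(), "widget", qty))
conn.executemany("INSERT INTO sales VALUES (?, ?, ?)", rows)

# strftime('%w') returns the weekday as '0' (Sunday) .. '6' (Saturday).
best = conn.execute(
    """
    SELECT strftime('%w', sale_date) AS weekday, SUM(quantity) AS total
    FROM sales
    WHERE product = 'widget'
      AND sale_date BETWEEN '1992-05-01' AND '1992-05-31'
    GROUP BY weekday
    ORDER BY total DESC
    LIMIT 1
    """
).fetchone()

WEEKDAYS = ["Sunday", "Monday", "Tuesday", "Wednesday",
            "Thursday", "Friday", "Saturday"]
best_day, best_total = WEEKDAYS[int(best[0])], best[1]
print(best_day, best_total)
```

Because the query runs against the warehouse copy, the operational sales system is not touched while the analysis executes.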

Fig.1. Components of a BI solution

While operational systems are optimized for simplicity and speed of modification (online transaction processing, or OLTP) through heavy use of database normalization and an entity-relationship model, the data warehouse is optimized for reporting and analysis (online analytical processing, or OLAP). Frequently, data in data warehouses is heavily denormalized, summarized and/or stored in a dimension-based model, but this is not always required to achieve acceptable query response times.

More formally, Bill Inmon (one of the earliest and most influential practitioners) defined a data warehouse as follows [2]:

- Subject-oriented, meaning that the data in the database is organized so that all the data elements relating to the same real-world event or object are linked together;
- Time-variant, meaning that the changes to the data in the database are tracked and recorded so that reports can be produced showing changes over time;
- Non-volatile, meaning that data in the database is never overwritten or deleted; once committed, the data is static and read-only, but retained for future reporting;
- Integrated, meaning that the database contains data from most or all of an organization's operational applications, and that this data is made consistent.
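The time-variant and non-volatile properties can be illustrated with a minimal sketch: an append-only store in which a change is recorded as a new version rather than an update. The class names, fields and sample data below are hypothetical and are not taken from Inmon's text.

```python
from dataclasses import dataclass
from datetime import date
from typing import List, Optional


@dataclass(frozen=True)
class CustomerVersion:
    customer_id: int
    city: str
    valid_from: date   # date from which this version applies


class CustomerHistory:
    """Append-only store: versions are added, never updated or deleted."""

    def __init__(self) -> None:
        self._versions: List[CustomerVersion] = []

    def record(self, version: CustomerVersion) -> None:
        self._versions.append(version)

    def as_of(self, customer_id: int, when: date) -> Optional[CustomerVersion]:
        """Return the version that applied to the customer on the given date."""
        candidates = [
            v for v in self._versions
            if v.customer_id == customer_id and v.valid_from <= when
        ]
        return max(candidates, key=lambda v: v.valid_from, default=None)


history = CustomerHistory()
history.record(CustomerVersion(1, "Bucharest", date(2005, 1, 1)))
history.record(CustomerVersion(1, "Cluj", date(2006, 7, 1)))   # a move is a new row

print(history.as_of(1, date(2006, 1, 1)).city)
print(history.as_of(1, date(2007, 1, 1)).city)
```

Since earlier versions are never overwritten, a report launched with the same "as of" date returns the same answer whenever it is run.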

In multi-dimensional modeling, the words star schema and data mart are often used as synonyms. The architecture pictured here (Fig.1. Components of a BI solution) uses the same naming convention. Therefore the data warehouse is a collection of data marts connected by conformed dimensions.

A data mart (DM) is a specialized version of

a data warehouse (DW) [5]. Like data

warehouses, data marts contain a snapshot of

operational data that helps business people to

strategize based on analyses of past trends

and experiences. The key difference is that

the creation of a data mart is predicated on a

specific, predefined need for a certain

grouping and configuration of select data. A

data mart configuration emphasizes easy

access to relevant information.

Sometimes data marts may be deployed physically on a different platform or database than the data warehouse, but in my concept of data warehousing this is done solely for technical reasons such as performance or security.

There can be multiple data marts inside a single corporation, each one relevant to one or more business units for which it was designed. DMs may or may not be dependent on or related to other data marts in a single corporation. If the data marts are designed using conformed facts and dimensions, then they will be related. In some deployments, each department or business unit is considered the owner of its data mart, which includes all the hardware, software and data [6]. This enables each department to use, manipulate and develop their data any way they see fit, without altering information inside other data marts or the data warehouse. In other deployments where conformed dimensions are used, this business unit ownership will not hold true for shared dimensions like customer, product, etc.
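To make the idea of data marts related through a conformed dimension more concrete, here is a small sketch with two hypothetical marts (sales and returns) that share one product dimension. All table and column names are invented for the example, and SQLite is used only because it ships with Python.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript(
    """
    -- Shared (conformed) dimension used by both data marts.
    CREATE TABLE dim_product (product_key INTEGER PRIMARY KEY, name TEXT, category TEXT);

    -- Two star schemas (data marts), each with its own fact table.
    CREATE TABLE fact_sales   (product_key INTEGER, amount REAL);
    CREATE TABLE fact_returns (product_key INTEGER, amount REAL);

    INSERT INTO dim_product VALUES (1, 'Widget', 'Hardware'),
                                   (2, 'Gadget', 'Hardware'),
                                   (3, 'Manual', 'Books');
    INSERT INTO fact_sales   VALUES (1, 100.0), (2, 50.0), (3, 20.0), (1, 30.0);
    INSERT INTO fact_returns VALUES (1, 10.0), (3, 5.0);
    """
)

# Aggregate each mart separately, then combine the results on the
# attribute of the shared dimension.
query = """
    SELECT s.category, s.sales, COALESCE(r.returned, 0.0) AS returned
    FROM (SELECT p.category, SUM(f.amount) AS sales
          FROM fact_sales f JOIN dim_product p USING (product_key)
          GROUP BY p.category) AS s
    LEFT JOIN (SELECT p.category, SUM(f.amount) AS returned
               FROM fact_returns f JOIN dim_product p USING (product_key)
               GROUP BY p.category) AS r
      ON r.category = s.category
    ORDER BY s.category
"""
result = conn.execute(query).fetchall()
print(result)
```

The comparison only works because both marts agree on what a product and a category are, which is also why a shared dimension cannot be owned and changed by a single business unit.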

The source systems

Strictly speaking, source systems aren't part of the business intelligence environment. But they feed the BI solution, and so they are the basis of your whole architecture. When setting up a BI environment, it is important to consider all the types of data you may need to include in your analysis. Don't stop at considering only information included in databases, but also consider external data feeds from partners (e.g. through XML); capturing customer behavior on your website (clickstream analysis); connecting to ERP systems at the application server level (rather than trying to read a 10,000-table SAP data model); uploading Excel files (almost always needed); and many more candidate sources.

When you start working with the data from

these systems you'll soon get to know that

"you can't live with them and you can't live

without them". I often use this phrase to

describe that the data provided to you by

source systems is crucial for your analysis,

but often that data is so bad that it is

impossible to work with it. Most companies

run into the data quality problem sooner or

later in their implementation, and it is a key

element at the start of any BI project.

Another phenomenon that occurs frequently

is the off-line copy of a source system.

Sometimes a 24x7 online application is so

business critical that even simple operational

reporting on this application jeopardizes the

availability of the application. In this case a

mirror of the application database is set up to

run the reports on. Obviously if such a

situation is in place, the data warehouse

should extract from the mirror rather than the

online database.

ETL: Extract, transform and load

In order to build up a multi-dimensional data warehouse, data from all the sources previously described needs to be extracted and brought to your BI environment. Given the variety of source systems, connectivity is the key word here.

After extraction, the data needs to be transformed. Transformation can mean a lot of things, but it includes all activities to make the data fit the multi-dimensional model that makes up the data warehouse. Given the significant difference between ER models and multi-dimensional models, the transformations may become quite complex. If we add to this the extra work done to clean up and 'harmonize' the data coming from different systems, then one might understand why some authors describe ETL work as 70% of the IT side of a BI project. Usually a separate database or specific zone in the data warehouse is reserved as storage space for intermediate results of the required transformations. This area is often called the staging area or work area. After all the transformation work, the prepared data can be loaded into the multi-dimensional model. Although this step is not as complex as the transformation part, it needs a lot of attention, since data loaded to the data warehouse may be 'released' or 'public' to end users.
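The three steps can be sketched in a few lines. This is a deliberately small illustration, not a description of any ETL tool: the two source layouts (`CRM_ROWS`, `ERP_ROWS`), the field names and the in-memory lists standing in for the staging area and the warehouse are all assumptions.

```python
from typing import Dict, Iterable, List

# Hypothetical extracts from two source systems with different conventions.
CRM_ROWS = [{"cust": " Acme ", "country": "ro", "revenue": "1200.50"}]
ERP_ROWS = [{"customer_name": "ACME", "country_code": "RO", "amount": 300}]


def extract() -> Iterable[Dict]:
    """Extract: pull raw rows from each source, tagging their origin."""
    for row in CRM_ROWS:
        yield {"source": "crm", **row}
    for row in ERP_ROWS:
        yield {"source": "erp", **row}


def transform(raw: Dict) -> Dict:
    """Transform: harmonize names, codes and types into one target layout."""
    if raw["source"] == "crm":
        name, country, amount = raw["cust"], raw["country"], raw["revenue"]
    else:
        name, country, amount = raw["customer_name"], raw["country_code"], raw["amount"]
    return {
        "customer": name.strip().upper(),
        "country": country.strip().upper(),
        "amount": float(amount),
    }


def load(staged: List[Dict], warehouse: List[Dict]) -> None:
    """Load: publish the prepared rows to the warehouse in one step."""
    warehouse.extend(staged)


staging_area = [transform(row) for row in extract()]   # intermediate results
warehouse: List[Dict] = []
load(staging_area, warehouse)
print(warehouse)
```

Keeping the transformed rows in a staging list until `load` is called mirrors the point made above: nothing becomes visible to end users until the preparation work is complete.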

A specific aspect of the ETL work which has gained much attention in recent years is everything related to data quality.

Data profiling is the activity of 'measuring'

the level of data quality one might expect to

get from a specific source system prior to

starting the ETL work. This analysis might

be quite complex, so specific tools have been

developed for this. However the value of

knowing any data deficiencies before

engaging in an expensive BI adventure is

enormous. Building a technically perfect BI

solution with perfectly unusable data in it is

an expensive mistake.
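As a rough idea of what 'measuring' data quality can mean, the sketch below profiles a single column for missing values and inconsistent spellings. The chosen measures and the sample column are assumptions; dedicated profiling tools go much further.

```python
from collections import Counter
from typing import Dict, List, Optional


def profile_column(values: List[Optional[str]]) -> Dict[str, object]:
    """Compute simple quality measures for one column of a source extract."""
    total = len(values)
    filled = [v for v in values if v is not None and v.strip() != ""]
    counts = Counter(filled)
    return {
        "rows": total,
        "missing_ratio": (total - len(filled)) / total if total else 0.0,
        "distinct": len(counts),
        "most_common": counts.most_common(1)[0] if counts else None,
    }


# Hypothetical country column from a source system.
country_column = ["RO", "RO", "ro", None, "", "Romania", "RO", "DE"]
report = profile_column(country_column)
print(report)
```

Here a quarter of the values are missing and the same country appears under three spellings, which is the kind of finding that is much cheaper to learn before the ETL work starts.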

Data cleansing refers to the specific activity of cleaning up data. Mostly it is about address data. Specific tools are available with which one can validate customer addresses against an external database of addresses, so that all address information in the data warehouse is uniform and verified. Similar methods of cleansing are available for product classification codes (such as UNSPSC).
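Validation against reference data can be sketched as a lookup that returns either the verified value or nothing. The small `REFERENCE_CITIES` dictionary below is a hypothetical stand-in for an external address database, and real cleansing tools use far more sophisticated matching.

```python
from typing import Dict, Optional

# Hypothetical reference data, standing in for an external address database.
REFERENCE_CITIES: Dict[str, str] = {
    "bucuresti": "București",
    "bucharest": "București",
    "cluj": "Cluj-Napoca",
    "cluj-napoca": "Cluj-Napoca",
}


def cleanse_city(raw: str) -> Optional[str]:
    """Return the verified spelling, or None if the value cannot be matched."""
    key = " ".join(raw.lower().split())   # normalize case and whitespace
    return REFERENCE_CITIES.get(key)


source_values = ["Bucharest", "  CLUJ ", "Bucuresti", "Clug"]
cleansed = [cleanse_city(v) for v in source_values]
rejected = [v for v, c in zip(source_values, cleansed) if c is None]
print(cleansed, rejected)
```

Values that cannot be matched are kept in a separate reject list instead of being guessed, so that someone can correct them at the source.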

Obviously the usefulness of data quality solutions extends beyond the area of business intelligence. Due to the enormous impact of data quality on the ROI of your BI application, however, their first use is often in this context.

ODS or Operational Data Store

Often customers ask about the difference between an ODS and a data warehouse. The difference is almost as big as between an operational system and a data warehouse.

An ODS is an off-line copy of one or more source systems:

- with a data model that respects the data model of the source system (ER model)
- with an added functionality of storing historical versions of the data
- potentially with some or full integration between the ER models of different applications

In any case the ODS is not built to support strategic decision making, but rather to support operational reporting related to the functional scope of a specific application.

OLAP cubes

While most data warehouses or data marts today are still deployed on relational databases, multi-dimensional databases, often called OLAP cubes, are also available. The multi-dimensional modeling techniques using facts and dimensions are applicable to both relational databases and multi-dimensional databases.
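One way to picture an OLAP cube is as a set of pre-aggregated cells, one for every combination of dimension members. The sketch below builds such a structure in plain Python; the dimensions, the facts and the use of "*" for "all members" are assumptions made for the example, and real OLAP engines store and compute cubes very differently.

```python
from collections import defaultdict
from itertools import combinations
from typing import Dict, List, Tuple

DIMENSIONS = ("year", "region", "product")

# Hypothetical atomic facts: (year, region, product, amount).
FACTS: List[Tuple[int, str, str, float]] = [
    (2006, "North", "Widget", 100.0),
    (2006, "South", "Widget", 40.0),
    (2007, "North", "Gadget", 70.0),
    (2007, "North", "Widget", 30.0),
]


def build_cube(facts) -> Dict[Tuple, float]:
    """Pre-aggregate the measure for every combination of dimensions.

    A cell key has one entry per dimension; "*" means "all members".
    """
    cube: Dict[Tuple, float] = defaultdict(float)
    positions = range(len(DIMENSIONS))
    for *members, amount in facts:
        for size in range(len(DIMENSIONS) + 1):
            for kept in combinations(positions, size):
                key = tuple(members[i] if i in kept else "*" for i in positions)
                cube[key] += amount
    return cube


cube = build_cube(FACTS)
print(cube[("*", "*", "*")])          # grand total
print(cube[(2007, "North", "*")])     # slice: 2007, North, all products
print(cube[("*", "*", "Widget")])     # one product across all years and regions
```

Every answer is a single lookup, which hints at why analysis on a cube is fast, and the number of cells grows quickly with each added dimension, which hints at why cube size has been a practical limit.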

Even though OLAP offers superior performance in analysis of the data, historically the size of an OLAP cube was limited and incremental loading of the cubes was sometimes difficult. OLAP cubes have had very high success rates in environments where the BI solution is used for what-if analysis, financial simulations, budgeting and target setting (requiring write-back).

Semantic layer for reporting

The objective of a BI solution is to offer a tool where end users can easily access data for analytical purposes. Unfortunately, databases (relational or multi-dimensional) are notoriously user-unfriendly. To make data access easier for end users, most vendors of reporting solutions have provided the possibility to create a layer between the database and the reporting tool. In this layer the database fields can be translated into 'objects', each having a clear business definition. These objects can be dragged and dropped onto a report, making report creation a 'piece of cake'.
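A semantic layer can be thought of as a mapping from business objects to database expressions, plus a generator that turns the objects a user picked into a query. The sketch below is a simplified assumption of how this might look; the object names, the physical column names and the fixed join path are all invented, and commercial reporting tools implement this in their own ways.

```python
from typing import Dict, List

# Hypothetical mapping from business objects to physical database expressions.
SEMANTIC_LAYER: Dict[str, Dict[str, str]] = {
    "Customer Name": {"kind": "dimension", "sql": "dim_customer.cust_nm"},
    "Sales Region":  {"kind": "dimension", "sql": "dim_geo.rgn_cd"},
    "Net Revenue":   {"kind": "measure",   "sql": "SUM(fact_sales.net_amt)"},
}


def build_query(objects: List[str], from_clause: str) -> str:
    """Translate the business objects a user picked into a SQL statement."""
    selected = [SEMANTIC_LAYER[name] for name in objects]
    columns = [f'{obj["sql"]} AS "{name}"' for name, obj in zip(objects, selected)]
    group_by = [obj["sql"] for obj in selected if obj["kind"] == "dimension"]
    sql = f"SELECT {', '.join(columns)} FROM {from_clause}"
    if group_by:
        sql += f" GROUP BY {', '.join(group_by)}"
    return sql


FROM_CLAUSE = "fact_sales JOIN dim_geo USING (geo_key)"   # assumed join path
sql = build_query(["Sales Region", "Net Revenue"], FROM_CLAUSE)
print(sql)
```

The end user only ever sees "Sales Region" and "Net Revenue"; cryptic names such as `rgn_cd` stay hidden behind the layer.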

Reporting

The semantic layer and the reports produced on top of your data warehouse are only the tip of the iceberg for a person sitting in the IT department, but for most of the end users they are the only visible part of your BI solution. IT professionals tend to give less attention to the reporting part of the BI application, as the big IT work goes into the construction of the data warehouse. It is in this part of the architecture that we need to make a huge distinction between how IT persons view the BI environment and how end users view it. For end users, the availability of reports, access to the reporting tools, and the correctness of titles and descriptions on the reports are crucial, and lack of attention on this side may result in utter failure of the BI project, even with a correctly modeled data warehouse, perfect architecture and good quality data.

The richness of reports that can be provided to end users is sometimes outside the comprehension of typical IT persons, but also outside the imagination of the business people. IT people often know too little of the business to format a report into the most readable, most easily understood graph, while business users have too little insight into the technical possibilities of the platform and have been forced to think within the limits of the same graphs and tables they have always worked with. Close attention needs to be paid to the collaboration between IT and business to build a good set of reports that maximizes the return on investment of the BI project.

Usually the reports are structured in the following typology:

- Standard reports: Fixed reports scheduled and made available to the users at a specific point in time or at user request without any further required input.
- Parameter reports: Reports of fixed layout, available at user request, requiring input of some parameters when the user launches the report.
- Ad-hoc analysis: Report created by the user on the spot, either starting from an existing standard or parameter report or from scratch.
- Budgeting & target setting: These reports show data from the data warehouse and allow input of data with write-back to the data warehouse.
- Dashboards: Highly aggregated reports, containing a multitude of KPIs and thus showing an overview of how the company or a department is doing. Mostly dashboard reports combine actual figures with target figures (as well as figures of the previous year or month for comparison).
- Data quality reports: To follow up the evolution of the quality of the information in your data warehouse, the data quality steward usually requires some specific data quality reports. Data profiling tools and data cleansing tools may also provide their own reports that fall into this category.
- Data mining reports: A data mining exercise usually produces several outputs that must be interpreted before the results are fed back to the data warehouse. These reports, aimed at the analyst working with data mining, are usually part of the data mining tool.
- IT technical reports: A series of technical reports to follow up load performance, query performance, number of users, data volumes, etc. are necessary for the good functioning of the BI solution.
- Metadata reports: In order to give end users and system analysts a good insight into what data is available and how it has been transformed to match the company's business definitions, an overview of the metadata is very useful. Some authors view the IT technical information I described above also as metadata.

Fig.2. Ad-Hoc reporting with Microsoft Excel 2007 (reference to Microsoft web site, Deliver Business Intelligence through Microsoft Office SharePoint Server 2007)
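The dashboard type in the typology above combines actual, target and previous-period figures. A minimal sketch of that comparison follows; the `Kpi` class, the 90% warning threshold and the sample figures are assumptions chosen only to illustrate the idea.

```python
from dataclasses import dataclass


@dataclass
class Kpi:
    name: str
    actual: float
    target: float
    previous_year: float

    @property
    def attainment(self) -> float:
        """Actual as a fraction of target."""
        return self.actual / self.target

    @property
    def growth(self) -> float:
        """Relative change versus the previous year."""
        return (self.actual - self.previous_year) / self.previous_year

    def status(self, warn_below: float = 0.9) -> str:
        if self.attainment >= 1.0:
            return "on target"
        return "warning" if self.attainment >= warn_below else "off target"


# Invented figures for three KPIs.
kpis = [
    Kpi("Revenue (kEUR)", actual=950.0, target=1000.0, previous_year=800.0),
    Kpi("New customers", actual=120.0, target=100.0, previous_year=110.0),
    Kpi("Orders shipped on time (%)", actual=70.0, target=95.0, previous_year=75.0),
]
for kpi in kpis:
    print(f"{kpi.name:28} {kpi.attainment:6.0%} of target, "
          f"{kpi.growth:+.0%} vs last year, {kpi.status()}")
```

Where the thresholds lie, and who owns each target, are business decisions; the code only makes the comparison explicit.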

For each functional set of reports, it is important to identify the main users. A good allocation of report ownership and clear rules for version and release management will help to keep control of the many reports that will be created.

BI portal: single point of information access

The multitude of different reports described above demonstrates how easily the BI solution may grow into a wilderness of reports. Once the number of reports begins to grow, the best solution is to create a single point of access to information within the company. Usually this results in some kind of portal solution with pointers to the different reports, clear descriptions of the scope of each report, as well as an indication of who is the business owner of the report (Fig.3. BI Portal).

Fig.3. BI Portal (reference to Microsoft web site, Deliver Business Intelligence through Microsoft Office SharePoint Server 2007)

Another element that the portal application can provide is collaboration. Often figures on reports need interpretation, explanation, side notes and other comments. This textual information needs to be stored somewhere. Given that this information may take multiple forms, a portal solution that allows document storage (a content/document management system) is the right solution to allow this kind of BI collaboration.

End users, or why did you build your solution in the first place

Of course users aren't part of the BI architecture; however, they are in the picture to stress that the roll-out of a BI solution is not comparable to the roll-out of an application. While the functional scope of an application is limited and therefore user training is limited in scope, the possibilities of a data warehouse are limited only by the available data and the creativity of the end users. Unfortunately it is often the creativity of the end user which poses a problem. Most end users are not used to having massive amounts of information at hand. Or in other words, most users are not used to working with a complete BI solution. So while training users to work with a few pre-defined reports might not take much time, training users to get the most out of the data warehouse and to make them actively participate in building the reporting part of the BI environment is a tedious and time-consuming task.

The link between user education and ROI for BI is an often forgotten part of the project. IT vendors consider the BI project closed when the data warehouse is built, together with a set of requested reports. Unfortunately business people also tend to see the project as closed when IT delivers, while the task of the business community actually just starts at that point in time.

Enabling BI in the organization is an often forgotten part of BI projects, requiring change management in how the organization works with KPIs and how managers work with reports and dashboards, the definition of new responsibilities, and awareness of a 'new way of working'.

Data mining

Data mining is the automated process of discovering previously unknown useful patterns in structured data. The data warehouse is therefore a perfect environment to conduct data mining exercises on. To a certain extent, online analytical processing, in which users slice and dice, pivot, sort and filter data to see patterns, is a form of human, visual data mining. However, the human eye can only see a limited number of dimensions (mostly three) at the same time and therefore cannot discover more complex relationships. Also, discovering relationships between different attributes of dimensions is a time-consuming exercise. The field of automated pattern detection has gained a lot of popularity in recent years. Successful implementations of data mining applications are, however, clearly limited in functional scope, and data mining on a large scope is not expected before 2010.
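As a very small illustration of automated pattern detection, the sketch below counts which products appear together on the same order and keeps the pairs that occur often enough. Counting frequent pairs is just one simple technique picked for the example, not a method prescribed here, and the orders and the 50% support threshold are invented.

```python
from collections import Counter
from itertools import combinations
from typing import Dict, FrozenSet, List, Set

# Hypothetical transactions: the set of products on each sales order.
ORDERS: List[Set[str]] = [
    {"printer", "paper", "ink"},
    {"printer", "ink"},
    {"paper", "stapler"},
    {"printer", "ink", "stapler"},
    {"paper", "ink"},
]


def frequent_pairs(orders: List[Set[str]], min_support: float) -> Dict[FrozenSet[str], float]:
    """Return product pairs bought together in at least min_support of orders."""
    pair_counts: Counter = Counter()
    for order in orders:
        for pair in combinations(sorted(order), 2):
            pair_counts[frozenset(pair)] += 1
    return {
        pair: count / len(orders)
        for pair, count in pair_counts.items()
        if count / len(orders) >= min_support
    }


patterns = frequent_pairs(ORDERS, min_support=0.5)
print(patterns)
```

With five orders this is easy to check by eye; with millions of orders and many attributes it is not, which is where automated detection earns its place.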

Conclusion

According to Gartner [10], CIOs will need to concentrate on information as a leverage point to enhance efficiency, increase effectiveness and support competitiveness. This also corresponds to the continued importance of business intelligence in 2007. As such, CIOs will continue to be responsible for IT, the mechanism. They can further play a greater role in leveraging information, the understanding that drives performance and innovation.

Top 10 Business and Technology Priorities in 2007

| Ranking | Top 10 Business Priorities | Top 10 Technology Priorities |
|---|---|---|
| 1 | Business process improvement | Business Intelligence applications |
| 2 | Controlling enterprise-wide operating costs | Enterprise applications (ERP, CRM and others) |
| 3 | Attract, retain and grow customer relationships | Legacy application modernization |
| 4 | Improve effectiveness of enterprise workforce | Networking, voice and data communications |
| 5 | Revenue growth | Servers and storage technologies (virtualization) |
| 6 | Improving competitiveness | Security technologies |
| 7 | Using intelligence in products and services | Service-oriented architectures |
| 8 | Deploy new business capabilities to meet strategic goals | Technical infrastructure management |
| 9 | Enter new markets, new products or new services | Document management |
| 10 | Faster innovation | Collaboration technologies |

Source: Gartner EXP (February 2007)

References

1. Wikipedia, http://en.wikipedia.org/wiki/Data_warehouse
2. W. H. Inmon, Building the Data Warehouse, Wiley, 2005
3. Wikipedia, http://en.wikipedia.org/wiki/Business_Intelligence
4. Ralph Kimball, Margy Ross, The Data Warehouse Toolkit: The Complete Guide to Dimensional Modeling, Wiley, 2002
5. Wikipedia, http://en.wikipedia.org/wiki/Data_mart
6. Bill Inmon, Data Mart Does Not Equal Data Warehouse, DM Direct Newsletter, November 20, 1999 Issue, http://www.dmreview.com/article_sub.cfm?articleId=1675
7. Howard Dresner, Business Intelligence: Making the data make sense, Group US IT Expo 2001, 2001
8. Larissa T. Moss, Shaku Atre, Business Intelligence Roadmap: The Complete Project Lifecycle for Decision-Support Applications, Addison-Wesley Professional, 2003
9. Joy Mundy, Warren Thornthwaite, Ralph Kimball, The Microsoft Data Warehouse Toolkit: With SQL Server 2005 and the Microsoft Business Intelligence Toolset, Wiley, 2006
10. Gartner EXP Survey, Stamford, Conn., February 14, 2007, http://www.gartner.com/it/page.jsp?id=501189
