
JOURNAL OF VLSI AND COMPUTER SYSTEMS, VOLUME I, NUMBER 3

Computation of the Singular Value

Decomposition Using Mesh-Connected

Processors

RICHARD P. BRENT,* FRANKLIN T. LUK,†

and CHARLES VAN LOAN‡

Abstract—A cyclic Jacobi method for computing the singular value decomposition of an $m \times n$ matrix ($m \ge n$) using systolic arrays is proposed. The algorithm requires $O(n^2)$ processors and $O(m + n \log n)$ units of time.

Key words and phrases: Systolic arrays, singular value decomposition, cyclic Jacobi method, real-time computation, VLSI.

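1. INTRODUCTION

A singular value decomposition (SVD) of a matrix $A \in \mathbb{C}^{m \times n}$ is given by

$$A = U \Sigma V^H, \tag{1.1}$$

where $U \in \mathbb{C}^{m \times m}$ and $V \in \mathbb{C}^{n \times n}$ are unitary matrices and $\Sigma \in \mathbb{R}^{m \times n}$ is a real non-negative “diagonal” matrix. Since $A^H = V \Sigma^T U^H$, we may assume $m \ge n$ without loss of generality. Let

$$U = (u_1, \ldots, u_m), \quad \Sigma = \mathrm{diag}(\sigma_1, \ldots, \sigma_n) \quad \text{and} \quad V = (v_1, \ldots, v_n). \tag{1.2}$$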

We refer to $\sigma_i$ as the $i$-th singular value of $A$, and to $u_i$ and $v_i$ as the corresponding left and right singular vectors. The singular values may be arranged in any order, for if $P \in \mathbb{R}^{m \times m}$ and $Q \in \mathbb{R}^{n \times n}$ are permutation matrices such that $P \Sigma Q$ remains “diagonal,” then

$$A = (U P^T)(P \Sigma Q)(Q^T V^H)$$

is also an SVD. It is customary to choose $P$ and $Q$ so that the singular values are arranged in non-increasing order:

$$\sigma_1 \ge \cdots \ge \sigma_r > \sigma_{r+1} = \cdots = \sigma_n = 0, \tag{1.3}$$

with $r = \mathrm{rank}(A)$. If $A$ is real, then the unitary matrices $U$ and $V$ are real and hence orthogonal. To simplify the presentation, we shall assume that the matrix $A$ is real. The algorithms presented in this paper can be readily extended to handle the complex case.

*Centre for Mathematical Analysis, Australian National University, Canberra, A.C.T. 2601, Australia.

†School of Electrical Engineering, Cornell University, Ithaca, New York 14853.

‡Department of Computer Science, Cornell University, Ithaca, New York 14853.

The standard method for computing (1.1) is the Golub-Kahan-Reinsch

SVD algorithm ([9] and [11]) that is implemented in both EISPACK [7] and

LINPACK [4]. It requires time $O(mn^2)$. However, the advent of massively

parallel computer architectures has aroused much interest in parallel SVD

procedures, e.g., [1], [5], [13], [16], [18], [21], and [22]. Such architectures

may turn out to be indispensable in settings where real-time computation of

the SVD is required (cf. [24] and [25]). Speiser and Whitehouse [24] survey

parallel processing architectures and conclude that systolic architectures of-

fer the best combination of characteristics for utilizing VLSI/VHSIC technol-

ogy to do real-time signal processing (see also [15] and [25]). On a linear sys-

tolic array, the most efficient SVD algorithm is the Jacobi-like algorithm

given by Brent and Luk [1]; it requires $O(mn)$ time and $O(n)$ processors. This

is not surprising because Jacobi-type procedures are very amenable to parallel

computations (cf. [1], [18], [20], and [21]).

In this paper we present a modification of the two-sided Jacobi SVD

method for square matrices detailed in Forsythe and Henrici [6] and show

how it can be implemented on a quadratic systolic array. The array is very

similar to the one proposed in Brent and Luk [1] that uses $O(n^2)$ processors to

solve n-by-n symmetric eigenproblems in $O(n \log n)$ time. However, to handle

m-by-n SVD problems, our algorithm requires a “pre-processing” step in

which the QR-factorization A = QR is computed. This can be done in O(m)

time using the systolic array described in [8]. Our modified Brent-Luk array

then computes the SVD of R in $O(n \log n)$ time. $O(n^2)$ processors are required

for the overall algorithm. For an extension of this work, see the paper [2] by

the authors on computing the generalized SVD of two given matrices.

The paper is organized as follows. In Section 2, basic concepts and calcula-

tions are discussed by reviewing the Jacobi algorithm for the symmetric eigen-

problem. Our modified Forsythe-Henrici scheme is then presented in Sections

3 and 4 where we discuss two-by-two SVD problems and “parallel

orderings,” respectively. In Section 5, we describe a systolic array for imple-

menting the scheme. Section 6 discusses how this array can be used to solve

rectangular SVD problems. We also describe in that final section a block Ja-

cobi method that might be of interest when our array is too small to handle a

given SVD problem.

2. BACKGROUND

The classical method of Jacobi uses a sequence of plane rotations to

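diagonalize a symmetric matrix $A \in \mathbb{R}^{n \times n}$. We denote a Jacobi rotation of an angle $\theta$ in the $(p, q)$ plane by $J(p, q, \theta) \equiv J$, where $p < q$. The matrix $J$ is the same as the identity matrix except for four strategic elements:

$$J_{pp} = c, \quad J_{pq} = s, \quad J_{qp} = -s, \quad J_{qq} = c,$$

where $c = \cos(\theta)$ and $s = \sin(\theta)$. Letting $B = J^T A J$, we get

$$\begin{bmatrix} b_{pp} & b_{pq} \\ b_{qp} & b_{qq} \end{bmatrix} = \begin{bmatrix} c & s \\ -s & c \end{bmatrix}^T \begin{bmatrix} a_{pp} & a_{pq} \\ a_{qp} & a_{qq} \end{bmatrix} \begin{bmatrix} c & s \\ -s & c \end{bmatrix}.$$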

If we choose the cosine-sine pair (c, s) such that

$$b_{pq} = b_{qp} = a_{pq}(c^2 - s^2) + (a_{pp} - a_{qq})cs = 0, \tag{2.1}$$

then B becomes “more diagonal” than A in the sense that

$$\mathrm{off}(B) = \mathrm{off}(A) - 2a_{pq}^2,$$

where

$$\mathrm{off}(C) = \sum_{i \ne j} c_{ij}^2 \quad \text{for } C = (c_{ij}) \in \mathbb{R}^{n \times n}.$$

Assuming that $a_{pq} \ne 0$, we have from equation (2.1) that

$$\rho \equiv \frac{a_{qq} - a_{pp}}{2a_{pq}} = \frac{c^2 - s^2}{2cs} = \operatorname{ctn}(2\theta), \tag{2.2}$$

and that $t = \tan(\theta)$ satisfies

$$t^2 + 2\rho t - 1 = 0.$$

By solving this quadratic equation and using a little trigonometry, we find two

possible solutions to (2.1):

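$$t = -\mathrm{sign}(\rho)\left[\,|\rho| + \sqrt{1 + \rho^2}\,\right], \quad c = \frac{1}{\sqrt{1 + t^2}}, \quad s = tc, \tag{2.3}$$

and

$$t = \frac{\mathrm{sign}(\rho)}{|\rho| + \sqrt{1 + \rho^2}}, \quad c = \frac{1}{\sqrt{1 + t^2}}, \quad s = tc. \tag{2.4}$$

The angle $\theta$ associated with (2.4) is the smaller of the two possible rotation angles and satisfies $0 \le |\theta| \le \pi/4$.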
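As an illustrative sketch (not part of the original paper), the smaller-angle choice (2.4) can be coded directly for a single symmetric two-by-two block. The function names `jacobi_cs` and `rotate_2x2` are hypothetical, and taking sign(0) as +1 is an assumption made here for definiteness.

```python
import math

def jacobi_cs(a_pp, a_pq, a_qq):
    """Return (c, s) for the smaller-angle rotation, following (2.2) and (2.4)."""
    if a_pq == 0.0:
        return 1.0, 0.0                          # nothing to annihilate
    rho = (a_qq - a_pp) / (2.0 * a_pq)           # (2.2): rho = ctn(2*theta)
    # (2.4); copysign treats sign(0) as +1 (assumption of this sketch)
    t = math.copysign(1.0, rho) / (abs(rho) + math.sqrt(1.0 + rho * rho))
    c = 1.0 / math.sqrt(1.0 + t * t)
    return c, t * c

def rotate_2x2(a_pp, a_pq, a_qq):
    """Entries (b_pp, b_pq, b_qq) of B = J^T A J for the symmetric 2x2 block."""
    c, s = jacobi_cs(a_pp, a_pq, a_qq)
    b_pp = c * c * a_pp - 2.0 * c * s * a_pq + s * s * a_qq
    b_qq = s * s * a_pp + 2.0 * c * s * a_pq + c * c * a_qq
    b_pq = a_pq * (c * c - s * s) + (a_pp - a_qq) * c * s   # left side of (2.1)
    return b_pp, b_pq, b_qq

b_pp, b_pq, b_qq = rotate_2x2(2.0, 1.0, 3.0)
```

Since $|t| \le 1$ by construction, the rotation angle stays within $\pi/4$ in magnitude, and the trace and determinant of the block are preserved by the similarity transformation.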

By systematically “zeroing” off-diagonal entries, $A$ can be effectively

diagonalized. To be specific, consider the iteration:

Algorithm Jacobi

    do until off(A) < ε
        for p = 1, ..., n − 1
            for q = p + 1, ..., n
                begin
                    Determine c and s via (2.2) and (2.4);
                    A := J(p, q, θ)^T A J(p, q, θ)
                end.

The parameter ε is some small machine-dependent number. Each pass through the “until” loop is called a “sweep.” In this scheme a sweep consists of zeroing the off-diagonal elements according to the “row ordering” [6]. This ordering is amply illustrated by the n = 4 case:

(p,q) = (1, 2), (1, 3), (1, 4), (2, 3), (2, 4), (3, 4).
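Algorithm Jacobi with the row ordering can be sketched in a few lines of plain Python. This is a minimal illustration under stated assumptions, not the authors' implementation: the names `off`, `jacobi_sweep` and `jacobi_eigenvalues` are hypothetical, the stopping threshold is taken relative to the initial value of off(A), and a cap on the number of sweeps is added as a safeguard.

```python
import math

def off(a):
    """Sum of squares of the off-diagonal entries of a square matrix."""
    n = len(a)
    return sum(a[i][j] ** 2 for i in range(n) for j in range(n) if i != j)

def jacobi_sweep(a):
    """One sweep in row ordering; the symmetric matrix `a` is updated in place."""
    n = len(a)
    for p in range(n - 1):
        for q in range(p + 1, n):
            if a[p][q] == 0.0:
                continue
            rho = (a[q][q] - a[p][p]) / (2.0 * a[p][q])                  # (2.2)
            t = math.copysign(1.0, rho) / (abs(rho) + math.sqrt(1.0 + rho * rho))  # (2.4)
            c = 1.0 / math.sqrt(1.0 + t * t)
            s = t * c
            for k in range(n):                  # A := A J (columns p and q)
                akp, akq = a[k][p], a[k][q]
                a[k][p] = c * akp - s * akq
                a[k][q] = s * akp + c * akq
            for k in range(n):                  # A := J^T A (rows p and q)
                apk, aqk = a[p][k], a[q][k]
                a[p][k] = c * apk - s * aqk
                a[q][k] = s * apk + c * aqk

def jacobi_eigenvalues(a, tol=1e-12, max_sweeps=50):
    """Return (sorted eigenvalue estimates, number of sweeps performed)."""
    a = [row[:] for row in a]
    threshold = tol * off(a)
    sweeps = 0
    while off(a) > threshold and sweeps < max_sweeps:
        jacobi_sweep(a)
        sweeps += 1
    return sorted(a[i][i] for i in range(len(a))), sweeps

eigs, sweeps = jacobi_eigenvalues([[2.0, 1.0, 0.0],
                                   [1.0, 2.0, 1.0],
                                   [0.0, 1.0, 2.0]])
```

For this tridiagonal test matrix the exact eigenvalues are $2 - \sqrt{2}$, $2$ and $2 + \sqrt{2}$, which gives a simple check on the diagonal that the iteration leaves behind.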

It is well known that Algorithm Jacobi always converges [6] and that the asymptotic convergence rate is quadratic [26]. The rigorous proof of these results requires that (2.4) rather than (2.3) be used. Brent and Luk [1] conjecture that $O(\log n)$ sweeps are required by Algorithm Jacobi for typical values of ε, e.g., $\varepsilon = 10^{-12}\,\mathrm{off}(A_0)$, where $A_0$ denotes the original matrix. For most problems this usually means about six to ten sweeps (cf. [1] and [19]).

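The practical computation of c and s requires that we guard against overflow. If ε denotes the machine precision, then this can usually be accomplished as follows:

Algorithm CS
    μ1 := a_qq − a_pp;
    μ2 := 2 a_pq;
    if |μ2| ≤ ε|μ1| then
        begin
            c := 1; s := 0
        end
    else
        begin
            ρ := μ1/μ2;
            t := sign(ρ)/(|ρ| + sqrt(1 + ρ²));
            c := 1/sqrt(1 + t²);
            s := tc
        end.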

Jacobi methods for the symmetric eigenproblem are of interest because they lend themselves to parallel computation (see, e.g., [1] and [20]). Of particular interest to us is the “parallel ordering” described in [1] and illustrated in the n = 8 case by

    (p, q) = (1, 2), (3, 4), (5, 6), (7, 8),
             (1, 4), (2, 6), (3, 8), (5, 7),
             (1, 6), (4, 8), (2, 7), (3, 5),
             (1, 8), (6, 7), (4, 5), (2, 3),
             (1, 7), (8, 5), (6, 3), (4, 2),
             (1, 5), (7, 3), (8, 2), (6, 4),
             (1, 3), (5, 2), (7, 4), (8, 6).

Note that the rotation pairs associated with each “row” of the above can be

calculated concurrently. Brent and Luk [1] have developed a systolic array

that exploits this concurrency to such an extent that a sweep with the parallel

ordering can be executed in $O(n)$ time. Our aim is to extend their work to the

SVD problem.
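The n = 8 listing of the parallel ordering can be generated by a round-robin scheme in which index 1 stays fixed while the remaining indices circulate through two rows. The sketch below is one way to do this; the function name `parallel_ordering` and the two-row bookkeeping are assumptions made for illustration, chosen so that the output matches the listing for n = 8.

```python
def parallel_ordering(n):
    """Return n - 1 rounds, each holding n/2 disjoint index pairs (1-based, n even)."""
    if n < 2 or n % 2 != 0:
        raise ValueError("n must be even and at least 2")
    top = list(range(1, n, 2))          # 1, 3, 5, ...
    bottom = list(range(2, n + 1, 2))   # 2, 4, 6, ...
    rounds = []
    for _ in range(n - 1):
        rounds.append(list(zip(top, bottom)))
        # Index 1 stays put; the top row shifts right and the bottom row
        # shifts left, with the ends wrapping from one row to the other.
        new_top = [top[0], bottom[0]] + top[1:-1]
        new_bottom = bottom[1:] + [top[-1]]
        top, bottom = new_top, new_bottom
    return rounds

rounds = parallel_ordering(8)
```

Each round contains disjoint pairs, so its rotations touch different rows and columns and can be computed concurrently; over the n − 1 rounds every unordered pair appears exactly once.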

At first glance this seems unnecessary, since software (or hardware) for the

symmetric eigenvalue problem can in principle be used to solve the SVD

problem. For example, we may compute the eigenvalue decomposition

$$V^T (A^T A) V = \mathrm{diag}(\sigma_1^2, \ldots, \sigma_n^2),$$

where $V = [v_1, \ldots, v_n]$ is orthogonal and the $\sigma_i$ satisfy

$$\sigma_1 \ge \cdots \ge \sigma_r > \sigma_{r+1} = \cdots = \sigma_n = 0,$$

with $r = \mathrm{rank}(A)$. We next calculate the vectors

$$u_i = \frac{1}{\sigma_i} A v_i \quad (i = 1, \ldots, r), \tag{2.5}$$

and then determine the vectors $u_{r+1}, \ldots, u_m$ so that the matrix $U = [u_1, \ldots, u_m]$ is orthogonal. The factorization $U^T A V = \mathrm{diag}(\sigma_1, \ldots, \sigma_n)$ gives the SVD of $A$. Thus, one can theoretically compute an SVD of $A$ via an eigenvalue decomposition of $A^T A$. Unfortunately, well-known numerical difficulties are associated with the explicit formation of $A^T A$.
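A small floating-point experiment illustrates one such difficulty. The 3-by-2 test matrix below is an assumption chosen for illustration (it is not taken from the paper): its singular values are $\sqrt{2 + \epsilon^2}$ and $\epsilon$, yet the information about the smaller one is lost as soon as the cross-product matrix is formed in double precision.

```python
eps = 1.0e-9

# A = [[1, 1], [eps, 0], [0, eps]] has singular values sqrt(2 + eps^2) and eps.
a = [[1.0, 1.0],
     [eps, 0.0],
     [0.0, eps]]

# Form G = A^T A explicitly, entry by entry, in floating point.
g = [[sum(a[k][i] * a[k][j] for k in range(3)) for j in range(2)]
     for i in range(2)]

# In exact arithmetic det(G) = 2*eps^2 + eps^4 > 0, so G is nonsingular.
det_g = g[0][0] * g[1][1] - g[0][1] * g[1][0]
```

Because $1 + \epsilon^2$ rounds to 1 in double precision, the computed `g` is the exactly singular matrix of all ones, and no eigenvalue routine applied to it can recover the singular value $\epsilon$.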

A way around this difficulty is to apply the Jacobi method implicitly. This is the gist of the “one-sided” Hestenes approach in which the matrix $V$ is determined so that the columns of $AV$ are mutually orthogonal [14]. Implementations are discussed in Luk [18] and in Brent and Luk [1]. In the latter reference, a systolic array is developed that is tailored to the method. However, inner products of $m$-vectors are required for each $(c, s)$ computation. Because of this, the speed of their parallel algorithm is $O(mn \log n)$ for a linear array of processors, and $O(n \log m \log n)$ for a two-dimensional array of $O(mn)$ processors with some special interconnection patterns for inner-product computations. Another drawback of the one-sided Jacobi method is that it does not directly generate the vectors $u_{r+1}, \ldots, u_m$. This is an inconvenience in the systolic array setting since one would need a special architecture to carry out the matrix-vector multiplications in (2.5). It should be pointed out, however, that these vectors are not necessary for solving the least squares problem

$$\|Ax - b\|_2 = \min.$$

Yet another approach to the SVD problem is to compute the eigenvalue

decomposition of the $(m + n) \times (m + n)$ symmetric matrix

$$C = \begin{bmatrix} 0 & A \\ A^T & 0 \end{bmatrix}.$$

Note that if

$$\begin{bmatrix} 0 & A \\ A^T & 0 \end{bmatrix} \begin{bmatrix} u \\ v \end{bmatrix} = \sigma \begin{bmatrix} u \\ v \end{bmatrix},$$

then $A^T A v = \sigma^2 v$ and $A A^T u = \sigma^2 u$. Thus, the eigenvectors of $C$ are “made up” of the singular vectors of $A$. It can also be shown that the spectrum of $C$ is given by

$$\lambda(C) = \{\pm\sigma_1, \ldots, \pm\sigma_n, 0, \ldots, 0\}.$$

The disadvantages of this approach to the SVD are that $C$ has expanded dimension and that recovering the singular vectors may be a difficult numerical task (Golub and Kahan [9]). In addition, the case $\mathrm{rank}(A) < n$ requires extra work to generate $v_{r+1}, \ldots, v_n$.

To summarize, it is preferable from several different points of view not to

approach the SVD problem as a symmetric eigenvalue problem.

3. THE TWO-BY-TWO SVD PROBLEM

Forsythe and Henrici [6] extend the Jacobi eigenvalue algorithm to the

computation of an SVD of a square matrix $A \in \mathbb{R}^{n \times n}$. They effectively diagonalize $A$ via a sequence of two-by-two SVDs. In this section, we discuss how to compute cosine-sine pairs $(c_1, s_1)$ and $(c_2, s_2)$ such that

$$\begin{bmatrix} c_1 & s_1 \\ -s_1 & c_1 \end{bmatrix}^T \begin{bmatrix} w & x \\ y & z \end{bmatrix} \begin{bmatrix} c_2 & s_2 \\ -s_2 & c_2 \end{bmatrix} = \begin{bmatrix} d_1 & 0 \\ 0 & d_2 \end{bmatrix}, \tag{3.1}$$

where

$$A = \begin{bmatrix} w & x \\ y & z \end{bmatrix}$$

is given. It is always possible to choose the above rotations such that $|d_1| \ge |d_2|$; however, since $\det(A) = d_1 d_2$, it may not be possible to achieve $d_1 \ge d_2 \ge 0$. Consequently, we refer to (3.1) as an “unnormalized” SVD. To generate a genuine, “normalized” SVD, it may be necessary to use reflections as well as rotations. We shall return to this detail at the end of this section.

The following approach is suggested in [6] for computing (3.1). Let $\theta_1$ and $\theta_2$ be the angles generating the cosine-sine pairs $(c_1, s_1)$ and $(c_2, s_2)$, respectively. Then $\theta_1$ and $\theta_2$ are solutions to the equations:

$$\tan(\theta_1 + \theta_2) = \frac{x + y}{z - w}, \qquad \tan(-\theta_1 + \theta_2) = \frac{y - x}{z + w}.$$

We may use the following procedure to determine the cosine-sine pairs:

Algorithm FHSVD
    μ1 := z − w;
    μ2 := y + x;
    {Find χ1 = cos((θ2 + θ1)/2) and σ1 = sin((θ2 + θ1)/2)}
    if |μ2| ≤ ε|μ1| then
        begin
            χ1 := 1;
            σ1 := 0
        end
    else
        begin
            ρ1 := μ1/μ2;
            τ1 := sign(ρ1)/(|ρ1| + sqrt(1 + ρ1²));
            χ1 := 1/sqrt(1 + τ1²);
            σ1 := χ1 τ1
        end;
    μ1 := z + w;
    μ2 := y − x;
    {Find χ2 = cos((θ2 − θ1)/2) and σ2 = sin((θ2 − θ1)/2)}
    if |μ2| ≤ ε|μ1| then
        begin
            χ2 := 1;
            σ2 := 0
        end
    else
        begin
            ρ2 := μ1/μ2;
            τ2 := sign(ρ2)/(|ρ2| + sqrt(1 + ρ2²));
            χ2 := 1/sqrt(1 + τ2²);
            σ2 := χ2 τ2
        end;
    {Find (c1, s1) and (c2, s2)}
    c1 := χ1χ2 + σ1σ2;
    s1 := σ1χ2 − χ1σ2;
    c2 := χ1χ2 − σ1σ2;
    s2 := σ1χ2 + χ1σ2.
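The half-angle construction used by Algorithm FHSVD can be checked numerically. The following sketch assumes the sum and difference equations $\tan(\theta_1 + \theta_2) = (x + y)/(z - w)$ and $\tan(\theta_2 - \theta_1) = (y - x)/(z + w)$; the helper names `half_angle`, `fhsvd` and `apply_rotations` are hypothetical, and the threshold `eps` stands in for the machine precision ε.

```python
import math

def half_angle(mu1, mu2, eps=2.0 ** -52):
    """Given tan(phi) = mu2/mu1, return (cos(phi/2), sin(phi/2)) with |phi/2| <= pi/4."""
    if abs(mu2) <= eps * abs(mu1):
        return 1.0, 0.0
    rho = mu1 / mu2                                    # rho = ctn(phi)
    tau = math.copysign(1.0, rho) / (abs(rho) + math.sqrt(1.0 + rho * rho))
    chi = 1.0 / math.sqrt(1.0 + tau * tau)
    return chi, chi * tau

def fhsvd(w, x, y, z):
    """Return ((c1, s1), (c2, s2)) so that J1^T [[w, x], [y, z]] J2 is diagonal."""
    chi1, sig1 = half_angle(z - w, y + x)    # half of theta2 + theta1
    chi2, sig2 = half_angle(z + w, y - x)    # half of theta2 - theta1
    c1 = chi1 * chi2 + sig1 * sig2           # theta1 = difference of the half angles
    s1 = sig1 * chi2 - chi1 * sig2
    c2 = chi1 * chi2 - sig1 * sig2           # theta2 = sum of the half angles
    s2 = sig1 * chi2 + chi1 * sig2
    return (c1, s1), (c2, s2)

def apply_rotations(w, x, y, z, cs1, cs2):
    """Return the entries (e11, e12, e21, e22) of J1^T A J2."""
    (c1, s1), (c2, s2) = cs1, cs2
    r11, r12 = c1 * w - s1 * y, c1 * x - s1 * z      # first row of J1^T A
    r21, r22 = s1 * w + c1 * y, s1 * x + c1 * z      # second row of J1^T A
    return (r11 * c2 - r12 * s2, r11 * s2 + r12 * c2,
            r21 * c2 - r22 * s2, r21 * s2 + r22 * c2)

cs1, cs2 = fhsvd(1.0, 2.0, 3.0, 4.0)
d1, e12, e21, d2 = apply_rotations(1.0, 2.0, 3.0, 4.0, cs1, cs2)
```

Since both half angles lie in $[-\pi/4, \pi/4]$, the resulting $\theta_1$ and $\theta_2$ lie in $[-\pi/2, \pi/2]$. For the test matrix the product $d_1 d_2$ equals the determinant $-2$ and $d_1^2 + d_2^2$ equals the squared Frobenius norm $30$.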

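An alternative method for computing (3.1) is to first symmetrize $A$:

$$\begin{bmatrix} c & s \\ -s & c \end{bmatrix}^T \begin{bmatrix} w & x \\ y & z \end{bmatrix} = \begin{bmatrix} p & q \\ q & r \end{bmatrix}, \tag{3.2}$$

and then diagonalize the result:

$$\begin{bmatrix} c_2 & s_2 \\ -s_2 & c_2 \end{bmatrix}^T \begin{bmatrix} p & q \\ q & r \end{bmatrix} \begin{bmatrix} c_2 & s_2 \\ -s_2 & c_2 \end{bmatrix} = \begin{bmatrix} d_1 & 0 \\ 0 & d_2 \end{bmatrix}.$$

Equation (3.1) holds by setting

$$\begin{bmatrix} c_1 & s_1 \\ -s_1 & c_1 \end{bmatrix} = \begin{bmatrix} c & s \\ -s & c \end{bmatrix} \begin{bmatrix} c_2 & s_2 \\ -s_2 & c_2 \end{bmatrix}, \tag{3.3}$$

that is,

$$c_1 = c_2 c - s_2 s, \qquad s_1 = s_2 c + c_2 s.$$

The formulas for $c$ and $s$ are easily derived. From (3.2) we have

$$cx - sz = sw + cy.$$

Thus, if $x \ne y$, then we have

$$\rho \equiv \operatorname{ctn}(\theta) = \frac{w + z}{x - y}, \qquad s = \frac{\mathrm{sign}(\rho)}{\sqrt{1 + \rho^2}}, \qquad c = s\rho.$$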

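For reasons that will be explained in Section 6, it is necessary that our two-by-two SVD algorithm handles the special cases $x = z = 0$ and $y = z = 0$ as follows:

$$\begin{bmatrix} c_1 & s_1 \\ -s_1 & c_1 \end{bmatrix}^T \begin{bmatrix} w & 0 \\ y & 0 \end{bmatrix} \begin{bmatrix} 1 & 0 \\ 0 & 1 \end{bmatrix} = \begin{bmatrix} d_1 & 0 \\ 0 & 0 \end{bmatrix}, \tag{3.4}$$

$$\begin{bmatrix} 1 & 0 \\ 0 & 1 \end{bmatrix} \begin{bmatrix} w & x \\ 0 & 0 \end{bmatrix} \begin{bmatrix} c_2 & s_2 \\ -s_2 & c_2 \end{bmatrix} = \begin{bmatrix} d_1 & 0 \\ 0 & 0 \end{bmatrix}. \tag{3.5}$$

In other words, $\theta_2$ should be zero if $x = z = 0$, and $\theta_1$ should be zero if $y = z = 0$. Our scheme as described already produces (3.4). However, (3.5) is only achieved if $|w| > |x|$. To rectify this, we apply our algorithm to the transpose problem and obtain

$$\begin{bmatrix} c_1 & s_1 \\ -s_1 & c_1 \end{bmatrix}^T \begin{bmatrix} w & 0 \\ x & 0 \end{bmatrix} \begin{bmatrix} 1 & 0 \\ 0 & 1 \end{bmatrix} = \begin{bmatrix} d_1 & 0 \\ 0 & 0 \end{bmatrix}.$$

We then set $c_2 = c_1$, $s_2 = s_1$, $c_1 = 1$, and $s_1 = 0$. Let us present the overall scheme: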

Algorithm USVD

    flag := 0;
    if y = 0 and z = 0 then
        begin
            y := x; x := 0; flag := 1
        end;
    μ1 := w + z;
    μ2 := x − y;
    if |μ2| ≤ ε|μ1| then
        begin
            c := 1; s := 0
        end
    else
        begin
            ρ := μ1/μ2;
            s := sign(ρ)/sqrt(1 + ρ²);
            c := sρ
        end;
    μ1 := s(x + y) + c(z − w); {= r − p}
    μ2 := 2(cx − sz); {= 2q}
    if |μ2| ≤ ε|μ1| then
        begin
            c2 := 1; s2 := 0
        end
    else
        begin
            ρ2 := μ1/μ2;
            t2 := sign(ρ2)/(|ρ2| + sqrt(1 + ρ2²));
            c2 := 1/sqrt(1 + t2²);
            s2 := c2 t2
        end;
    c1 := c2 c − s2 s;
    s1 := s2 c + c2 s;
    d1 := c1(w c2 − x s2) − s1(y c2 − z s2);
    d2 := s1(w s2 + x c2) + c1(y s2 + z c2);
    if flag = 1 then
        begin
            c2 := c1; s2 := s1;
            c1 := 1; s1 := 0
        end.
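A direct transcription of the symmetrize-then-diagonalize scheme into Python may make the data flow easier to follow. It is a sketch under stated assumptions rather than a reference implementation: the function name `usvd`, the default threshold `eps`, and the convention sign(0) = +1 are choices made here.

```python
import math

def usvd(w, x, y, z, eps=2.0 ** -52):
    """Return ((c1, s1), (c2, s2), (d1, d2)) with J1^T [[w, x], [y, z]] J2 = diag(d1, d2)."""
    flag = False
    if y == 0.0 and z == 0.0:             # special case (3.5): use the transpose
        y, x, flag = x, 0.0, True
    # Symmetrizing rotation (3.2): ctn(theta) = (w + z) / (x - y).
    mu1, mu2 = w + z, x - y
    if abs(mu2) <= eps * abs(mu1):
        c, s = 1.0, 0.0
    else:
        rho = mu1 / mu2
        s = math.copysign(1.0, rho) / math.sqrt(1.0 + rho * rho)
        c = s * rho
    # Diagonalizing rotation for [[p, q], [q, r]] via (2.2) and (2.4).
    mu1 = s * (x + y) + c * (z - w)       # r - p
    mu2 = 2.0 * (c * x - s * z)           # 2q
    if abs(mu2) <= eps * abs(mu1):
        c2, s2 = 1.0, 0.0
    else:
        rho2 = mu1 / mu2
        t2 = math.copysign(1.0, rho2) / (abs(rho2) + math.sqrt(1.0 + rho2 * rho2))
        c2 = 1.0 / math.sqrt(1.0 + t2 * t2)
        s2 = c2 * t2
    c1 = c2 * c - s2 * s                  # (3.3)
    s1 = s2 * c + c2 * s
    d1 = c1 * (w * c2 - x * s2) - s1 * (y * c2 - z * s2)
    d2 = s1 * (w * s2 + x * c2) + c1 * (y * s2 + z * c2)
    if flag:
        c2, s2, c1, s1 = c1, s1, 1.0, 0.0
    return (c1, s1), (c2, s2), (d1, d2)

general = usvd(1.0, 2.0, 3.0, 4.0)
case_34 = usvd(3.0, 0.0, 4.0, 0.0)        # x = z = 0: right rotation is the identity
case_35 = usvd(3.0, 4.0, 0.0, 0.0)        # y = z = 0: left rotation is the identity
```

The two special calls exercise (3.4) and (3.5): in the first the right rotation comes out as the identity, in the second the left one does, and in both cases $d_1 = 5$ and $d_2 = 0$ up to rounding.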

If a “normalized” SVD is required, then further computations must be

performed. The diagonal elements must be sorted by modulus and then (if

necessary) premultiplied by -1, as shown in the following algorithm.

Algorithm NSVD

    Apply Algorithm USVD;
    κ := 1;
    if |d2| > |d1| then
        begin
            τ := c1; c1 := −s1; s1 := τ;
            τ := c2; c2 := −s2; s2 := τ;
            τ := d1; d1 := d2; d2 := τ
        end;
    if d2 < 0 then
        begin
            d2 := −d2;
            κ := −κ
        end.

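The “normalized” SVD is given by

$$\begin{bmatrix} c_1 & \kappa s_1 \\ -s_1 & \kappa c_1 \end{bmatrix}^T \begin{bmatrix} w & x \\ y & z \end{bmatrix} \begin{bmatrix} c_2 & s_2 \\ -s_2 & c_2 \end{bmatrix} = \begin{bmatrix} d_1 & 0 \\ 0 & d_2 \end{bmatrix}. \tag{3.6}$$

Note that if $\kappa = -1$ then the left transformation is a “reflection,” i.e., a two-by-two orthogonal matrix of the form

$$\begin{bmatrix} \cos(\theta_1) & -\sin(\theta_1) \\ -\sin(\theta_1) & -\cos(\theta_1) \end{bmatrix}.$$

We denote the corresponding n-by-n orthogonal transformation by $J(p, q, \theta_1, \kappa) \equiv J$, where $p < q$. The matrix $J$ is the same as the identity except for the four elements:

$$J_{pp} = \cos(\theta_1), \quad J_{pq} = \kappa \sin(\theta_1), \quad J_{qp} = -\sin(\theta_1), \quad J_{qq} = \kappa \cos(\theta_1),$$

and it is a reflection whenever $\kappa = -1$.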

4. SOME TWO-SIDED JACOBI SVD PROCEDURES

By solving an appropriate sequence of two-by-two SVD problems, we may

compute the SVD of a general $n \times n$ matrix $A$. Analogous to Algorithm Jacobi

for the symmetric eigenvalue problem, we propose the following routine:

Algorithm JSVD

    do until off(A) < ε
        for each (p, q) in accordance with the chosen ordering
            begin
                Set w = a_pp, x = a_pq, y = a_qp, z = a_qq;
                Compute (c1, s1), (c2, s2) and κ ∈ {−1, 1} so that
                    the (p, q) and (q, p) entries
                    of J(p, q, θ1, κ)^T A J(p, q, θ2, 1) are zero;
                A := J(p, q, θ1, κ)^T A J(p, q, θ2, 1)
            end.
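The routine can be tried out on small dense matrices with the sketch below, which combines the unnormalized two-by-two step with the row ordering. Everything here is illustrative: the names `two_by_two`, `off` and `jsvd` are hypothetical, only rotations are used (so κ = 1 throughout), the stopping threshold is relative to the initial off(A), and the sweep cap is a safeguard, since convergence is observed empirically rather than proved.

```python
import math

def two_by_two(w, x, y, z, eps=2.0 ** -52):
    """Rotation pairs (c1, s1), (c2, s2) of the unnormalized 2x2 SVD (symmetrize, then diagonalize)."""
    flag = False
    if y == 0.0 and z == 0.0:
        y, x, flag = x, 0.0, True
    mu1, mu2 = w + z, x - y
    if abs(mu2) <= eps * abs(mu1):
        c, s = 1.0, 0.0
    else:
        rho = mu1 / mu2
        s = math.copysign(1.0, rho) / math.sqrt(1.0 + rho * rho)
        c = s * rho
    mu1, mu2 = s * (x + y) + c * (z - w), 2.0 * (c * x - s * z)
    if abs(mu2) <= eps * abs(mu1):
        c2, s2 = 1.0, 0.0
    else:
        rho2 = mu1 / mu2
        t2 = math.copysign(1.0, rho2) / (abs(rho2) + math.sqrt(1.0 + rho2 * rho2))
        c2 = 1.0 / math.sqrt(1.0 + t2 * t2)
        s2 = c2 * t2
    c1, s1 = c2 * c - s2 * s, s2 * c + c2 * s
    if flag:
        c2, s2, c1, s1 = c1, s1, 1.0, 0.0
    return (c1, s1), (c2, s2)

def off(a):
    """Sum of squares of the off-diagonal entries."""
    n = len(a)
    return sum(a[i][j] ** 2 for i in range(n) for j in range(n) if i != j)

def jsvd(a, tol=1e-12, max_sweeps=30):
    """Return (singular value estimates in non-increasing order, sweeps used)."""
    a = [row[:] for row in a]
    n = len(a)
    threshold = tol * off(a)
    sweeps = 0
    while off(a) > threshold and sweeps < max_sweeps:
        for p in range(n - 1):                      # row ordering
            for q in range(p + 1, n):
                (c1, s1), (c2, s2) = two_by_two(a[p][p], a[p][q], a[q][p], a[q][q])
                for k in range(n):                  # A := J1^T A (rows p and q)
                    apk, aqk = a[p][k], a[q][k]
                    a[p][k] = c1 * apk - s1 * aqk
                    a[q][k] = s1 * apk + c1 * aqk
                for k in range(n):                  # A := A J2 (columns p and q)
                    akp, akq = a[k][p], a[k][q]
                    a[k][p] = c2 * akp - s2 * akq
                    a[k][q] = s2 * akp + c2 * akq
        sweeps += 1
    return sorted((abs(a[i][i]) for i in range(n)), reverse=True), sweeps

sv2, sweeps2 = jsvd([[1.0, 2.0], [3.0, 4.0]])
sv3, sweeps3 = jsvd([[2.0, 0.0, 1.0], [1.0, 3.0, 0.0], [0.0, 1.0, 4.0]])
```

Taking absolute values and sorting at the end stands in for the final normalization step; the product of the returned values should match $|\det A|$ and the sum of their squares the squared Frobenius norm of $A$.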

We have experimentally compared the three-angle formulas FHSVD,

USVD, and NSVD. The convergence of Algorithm JSVD in conjunction with

any one of these formulas and with any ordering has not been rigorously

proved. However, there are some important theoretical observations that can

be made.

Let $\theta_1$ and $\theta_2$ be the angles generating the cosine-sine pairs $(c_1, s_1)$ and $(c_2, s_2)$, respectively, and suppose that $-b < \theta_1, \theta_2 \le b$. Although Forsythe and Henrici [6] prove that JSVD with the “row ordering” always converges if $b < \pi/2$, they also demonstrate that this condition may fail to hold. As a remedy, they suggest an under- or over-rotation variant of FHSVD and prove its convergence. However, we do not favor this variant, believing that it will eliminate the empirically observed quadratic convergence of Algorithm JSVD. One can show that the bound $b$ equals (i) $\pi/2$ for FHSVD, (ii) $3\pi/4$ for USVD, and (iii) $5\pi/4$ for NSVD even if no reflection is involved. We conjecture that the smaller the rotation angles are the faster the procedure JSVD will converge. Our experimental results agree with the conjecture (see Table 1).

We also point out that the computed diagonal matrix in JSVD may not

have sorted diagonal entries even if the “normalized” SVD of each two-by-

two submatrix is calculated. To obtain the “normalized” SVD of A we must

sort the diagonal entries and permute the columns of U and V accordingly.

It is quite obvious that the “row ordering” is not amenable to parallel

processing. A new “parallel ordering” has been introduced in [1] for doing

$\lfloor n/2 \rfloor$ rotations simultaneously. For the symmetric eigenvalue problem, the

latter ordering has been found to be superior to the former both empirically

[1] and theoretically (see [1] and [12]), even on a single-processor machine.

Our consideration of systolic array implementation necessitates the use of the

“parallel ordering” in this paper.

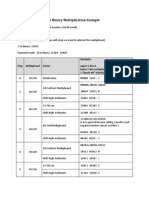

‘Table 1. Average and maximum number (in parentheses) of sweeps using

the “parallel ordering”

n Trials FHSVD USVD : NSVD

4 1000 2.97(3.67) 2.97(4.00) 2.97(4.33)

6 1000 3.73(4.80) 3.76(4.87) 4.17(5.40)

8 1000 4,195.07) 4.21(5.14) 4.76(5.96)

10 1000 4.51(5.38) 4.55(S.44) 5.18(6.40)

20 100 $.50(6.13) 5.54(6,01) 6.44(7.44)

30 100 6.03(6.52) 6.09(6.80) 7.30(7.98)

40 100 6.36(6.98) 6.40(6.98) 7.94(8.50)

SO 100 6.66(7.11) 6.72(7.34) 8.51(9.00)

256 JOURNAL OF VLSI AND COMPUTER SYSTEMS

We have applied each of the three-angle formulas with the “parallel order-

ing” to random n X n matrices A, whose elements were uniformly and inde-

pendently distributed in [—1, 1]. The stopping criterion was that off(A) was

reduced to $10^{-12}$ times its original value. Table 1 gives our simulation results.

We conclude that our “unnormalized” SVD method USVD performs just as

well as the more complicated Forsythe-Henrici scheme FHSVD and marginally

faster than the “normalized” SVD method NSVD.

We have also compared the “row” and “parallel” orderings for the method

USVD. The test data and convergence criterion are as described in the last

paragraph. Our simulation results are presented in Table 2. The convergence

rates for the two orderings appear to be about the same.

Table 2. Average and maximum number (in parentheses) of sweeps using the USVD method

      n    Trials    Row ordering    Parallel ordering
      4    1000      3.22(4.17)      2.97(4.00)
      6    1000      4.00(5.13)      3.76(4.87)
      8    1000      4.52(5.50)      4.21(5.14)
     10    1000      4.83(6.02)      4.55(5.44)
     20     100      5.81(6.59)      5.54(6.01)
     30     100      6.26(6.79)      6.09(6.80)
     40     100      6.60(7.00)      6.40(6.98)
     50     100      6.78(7.62)      6.72(7.34)
     80      30      7.31(7.81)      7.30(7.79)
    100      10      7.46(7.79)      7.56(8.00)
    120       5      7.60(7.90)      7.73(7.98)
    150       3      7.76(7.80)      7.73(8.03)
    170       2      7.86(7.92)      8.02(8.02)
    200       1      7.90(7.90)      8.10(8.10)
    230       1      8.35(8.35)      8.43(8.43)

In the remainder of the paper, we will focus our attention exclusively on the

USVD method for solving two-by-two SVD problems.

5. A SYSTOLIC ARRAY

We now describe a systolic array for implementing the Jacobi SVD method

JSVD for an $n \times n$ real matrix $A$. Our array is very similar to the one detailed

in [1]. For pedagogic purposes, we first idealize the array so that it has the

ability to broadcast the rotation parameters in constant time.


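Assume that $n$ is even and that we have a square array of $n/2$ by $n/2$ processors, each containing a $2 \times 2$ submatrix of $A$. Initially, processor $P_{ij}$ contains

$$\begin{bmatrix} a_{2i-1,2j-1} & a_{2i-1,2j} \\ a_{2i,2j-1} & a_{2i,2j} \end{bmatrix}$$

$(i, j = 1, \ldots, n/2)$. Processor $P_{ij}$ is connected to its “diagonally” nearest neighbors $P_{i \pm 1, j \pm 1}$ $(1 < i, j < n/2)$. For the boundary processors and for a complete picture, see Figures 1 and 2. In general, $P_{ij}$ contains four real numbers

$$\begin{bmatrix} \alpha_{ij} & \beta_{ij} \\ \gamma_{ij} & \delta_{ij} \end{bmatrix}.$$

The diagonal processors $P_{ii}$ $(i = 1, \ldots, n/2)$ act differently from the off-diagonal processors $P_{ij}$ $(i \ne j,\ 1 \le i, j \le n/2)$. At each time step, the diagonal processors $P_{ii}$ compute rotation pairs $(c_i^L, s_i^L)$ and $(c_i^R, s_i^R)$ to annihilate their off-diagonal elements $\beta_{ii}$ and $\gamma_{ii}$ (cf. Algorithm USVD):

$$\begin{bmatrix} c_i^L & s_i^L \\ -s_i^L & c_i^L \end{bmatrix}^T \begin{bmatrix} \alpha_{ii} & \beta_{ii} \\ \gamma_{ii} & \delta_{ii} \end{bmatrix} \begin{bmatrix} c_i^R & s_i^R \\ -s_i^R & c_i^R \end{bmatrix} = \begin{bmatrix} \alpha_{ii}' & 0 \\ 0 & \delta_{ii}' \end{bmatrix}.$$

Figure 1. Diagonal input and output lines for processors.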

Figure 2. “Diagonal” connections, n = 8.

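To complete the rotations that annihilate $\beta_{ii}$ and $\gamma_{ii}$ $(i = 1, \ldots, n/2)$, the off-diagonal processors $P_{ij}$ $(i \ne j)$ must perform the transformations

$$\begin{bmatrix} \alpha_{ij}' & \beta_{ij}' \\ \gamma_{ij}' & \delta_{ij}' \end{bmatrix} = \begin{bmatrix} c_i^L & s_i^L \\ -s_i^L & c_i^L \end{bmatrix}^T \begin{bmatrix} \alpha_{ij} & \beta_{ij} \\ \gamma_{ij} & \delta_{ij} \end{bmatrix} \begin{bmatrix} c_j^R & s_j^R \\ -s_j^R & c_j^R \end{bmatrix}.$$

We assume that the diagonal processor $P_{ii}$ broadcasts the rotation parameters $(c_i^L, s_i^L)$ to other processors on the $i$-th row, and $(c_i^R, s_i^R)$ to other processors on the $i$-th column in constant time, so that the off-diagonal processor $P_{ij}$ has access to the parameters $(c_i^L, s_i^L)$ and $(c_j^R, s_j^R)$ when required.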

To complete a step, columns and corresponding rows are interchanged be-

tween adjacent processors so that a new set of n off-diagonal elements is ready

to be annihilated by the diagonal processors during the next time step. We

have assumed diagonal connections for convenience. They can easily be simu-

lated if only horizontal and vertical connections are available (cf. [1]). The

diagonal outputs and inputs are illustrated in Figure 1, where the subscripts

(i,j) are omitted. They are connected in the obvious way, as shown in Figure

2. For example,

inyerjer i i>1, j1, j=n/2

in Bi, fist, fan

Formally, we can specify the diagonal interchanges performed by processor

$P_{ij}$ as follows.

Algorithm Interchange
    if i = 1 and j = 1 then
        [out α ← α; out β ← β;
         out γ ← γ; out δ ← δ]
    else if i = 1 then
        [out α ← β; out β ← α;
         out γ ← δ; out δ ← γ]
    else if j = 1 then
        [out α ← γ; out β ← δ;
         out γ ← α; out δ ← β]
    else
        [out α ← δ; out β ← γ;
         out γ ← β; out δ ← α];
    {wait for outputs to propagate to inputs of adjacent processors}
    [α ← in α; β ← in β;
     γ ← in γ; δ ← in δ].

It is clear that a diagonal processor $P_{ii}$ might omit rotations if its off-diagonal elements $\beta_{ii}$ and $\gamma_{ii}$ were sufficiently small. All that is required is to broadcast $(c_i^L, s_i^L) = (1, 0)$ and $(c_i^R, s_i^R) = (1, 0)$ along processor row and column $i$, respectively. As is well known (cf. [1]), a suitable threshold strategy guarantees convergence, although we do not know any example for which our algorithm fails to give convergence even without a threshold strategy.

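If singular vectors are required, the matrices $U$ and $V$ of singular vectors can be accumulated at the same time that $A$ is being diagonalized. To compute $U$, each systolic processor $P_{ij}$ $(1 \le i, j \le n/2)$ needs four additional memory cells

$$\begin{bmatrix} \mu_{ij} & \nu_{ij} \\ \sigma_{ij} & \tau_{ij} \end{bmatrix}.$$

During each step it updates

$$\begin{bmatrix} \mu_{ij}' & \nu_{ij}' \\ \sigma_{ij}' & \tau_{ij}' \end{bmatrix} = \begin{bmatrix} c_i^L & s_i^L \\ -s_i^L & c_i^L \end{bmatrix}^T \begin{bmatrix} \mu_{ij} & \nu_{ij} \\ \sigma_{ij} & \tau_{ij} \end{bmatrix}.$$

Each processor transmits its

$$\begin{bmatrix} \mu & \nu \\ \sigma & \tau \end{bmatrix}$$

values to its adjacent processors in the same way as the

$$\begin{bmatrix} \alpha & \beta \\ \gamma & \delta \end{bmatrix}$$

values (see Algorithm Interchange). Initially we set $\mu_{ij} = \nu_{ij} = \sigma_{ij} = \tau_{ij} = 0$ $(i \ne j)$, and $\mu_{ii} = \tau_{ii} = 1$, $\sigma_{ii} = \nu_{ii} = 0$. After a sufficiently large and integral number of sweeps, we have $U$ defined by

$$\begin{bmatrix} \mu_{ij} & \nu_{ij} \\ \sigma_{ij} & \tau_{ij} \end{bmatrix} = \begin{bmatrix} u_{2j-1,2i-1} & u_{2j,2i-1} \\ u_{2j-1,2i} & u_{2j,2i} \end{bmatrix}.$$

The matrix $V$ can be computed in an identical manner, but the rotation

$$\begin{bmatrix} c_i^L & s_i^L \\ -s_i^L & c_i^L \end{bmatrix} \quad \text{is replaced by} \quad \begin{bmatrix} c_i^R & s_i^R \\ -s_i^R & c_i^R \end{bmatrix}.$$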

If the dimension $n$ is odd, we either modify the algorithm for processors $P_{i1}$ and $P_{1i}$ $(i = 1, \ldots, \lceil n/2 \rceil)$ in a manner analogous to that used in [1], or simply border $A$ by a zero row and column. The details of handling problems where $n$ is larger or smaller than the effective dimension of the array are presented in the next section.

Instead of broadcasting the parameter pairs $(c_i^L, s_i^L)$ and $(c_i^R, s_i^R)$, it may be preferable to broadcast only the corresponding tangent values $t_i^L$ and $t_i^R$ and let each off-diagonal processor $P_{ij}$ compute $(c_i^L, s_i^L)$ and $(c_j^R, s_j^R)$ from $t_i^L$ and $t_j^R$ (cf. (2.4)). Communication costs are thus reduced at the expense of requiring off-diagonal processors to compute two square roots per time step. This is not significant since the diagonal processors must compute three square roots per step in any case. In what follows a “rotation parameter” may mean either $t_i$ or the pair $(c_i, s_i)$ (superscripts omitted).

Let us show how we may avoid the idealization that the rotation parameters

are broadcast along processor rows and columns in constant time. We can

retain time $O(n)$ per sweep for our algorithm by merely transmitting rotation

parameters at constant speed between adjacent processors.

Let $\Delta_{ij} = |i - j|$ denote the distance of processor $P_{ij}$ from the diagonal.

The operation of processor $P_{ij}$ will be delayed by $\Delta_{ij}$ time units relative to the

operation of the diagonal processors, in order to allow time for the rotation

parameters to be propagated at unit speed along each row and column of the

processor array.

A processor cannot commence a rotation until data from earlier rotations

are available on all its input lines. Thus, processor $P_{ij}$ needs data from its four neighbors $P_{i \pm 1, j \pm 1}$ $(1 < i, j < n/2)$. For the other cases, see Figure 2. Since

$$|\Delta_{ij} - \Delta_{i \pm 1, j \pm 1}| \le 2,$$

it is sufficient for processor $P_{ij}$ to be idle for two time steps while waiting for the processors $P_{i \pm 1, j \pm 1}$ to complete their (possibly delayed) steps. Thus, the price paid to avoid broadcasting is that each processor is active for only one-third of the total computation. This is a special case of the general technique of Leiserson and Saxe [17], and is illustrated in Figure 3. A similar inefficiency occurs with many other systolic algorithms, see, e.g., [1] and [15]. The

fraction can be increased to almost unity if the rotation parameters are propa-

gated at greater than unit speed, or if multiple problems are interleaved.
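The bound on the difference in delays between diagonally adjacent processors is easy to confirm by enumeration. The sketch below assumes the delay of processor (i, j) is |i − j| time units and that neighbors are the (up to four) processors at (i ± 1, j ± 1) inside the array; the function names are hypothetical.

```python
def delay(i, j):
    """Delay of processor (i, j) relative to the diagonal processors."""
    return abs(i - j)

def max_neighbor_skew(half_n):
    """Largest delay difference between diagonally adjacent processors
    in a half_n-by-half_n array (1-based indices)."""
    worst = 0
    for i in range(1, half_n + 1):
        for j in range(1, half_n + 1):
            for di in (-1, 1):
                for dj in (-1, 1):
                    ni, nj = i + di, j + dj
                    if 1 <= ni <= half_n and 1 <= nj <= half_n:
                        worst = max(worst, abs(delay(i, j) - delay(ni, nj)))
    return worst

skew_n8 = max_neighbor_skew(4)     # the n = 8 array of Figure 2
skew_n16 = max_neighbor_skew(8)
```

A skew of at most two time units is what allows a processor to proceed after idling for two steps, which corresponds to the one-third activity fraction quoted above.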

A typical processor Py(1 j then set our @ as in Algorithm Interchange;

ifi 1) and (j > 1) then set our a as.in Algorithm Interchange;

if i 7, because the symmetrization step (3.2) will diagonalize the

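two-by-two matrix (see equation (3.4)). Thus, the sparsity structure of $A$ will be preserved during the iteration, and we will emerge with the factorization:

$$U^T \begin{bmatrix} A & 0 \end{bmatrix} \begin{bmatrix} V & 0 \\ 0 & I \end{bmatrix} = \mathrm{diag}(\sigma_1, \ldots, \sigma_n, 0, \ldots, 0),$$

from which we get

$$U^T A V = \mathrm{diag}(\sigma_1, \ldots, \sigma_n)$$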

as the desired SVD. A drawback with this approach is the expanded dimension of $A$, especially painful if $m \gg n$. On the other hand, no additional hardware is required so long as the array of Section 5 can handle m-by-m problems.

In contrast, the second approach to handling rectangular SVD problems is

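more efficient but entails the interfacing of an SVD array with a QR array. The idea is to compute first the QR factorization of $A$:

$$A = Q \begin{bmatrix} R \\ 0 \end{bmatrix},$$

where $R \in \mathbb{R}^{n \times n}$ is upper triangular, and then compute the square SVD:

$$W^T R V = \Sigma = \mathrm{diag}(\sigma_1, \ldots, \sigma_n).$$

Defining

$$U = Q \begin{bmatrix} W & 0 \\ 0 & I \end{bmatrix},$$

we get

$$U^T A V = \mathrm{diag}(\sigma_1, \ldots, \sigma_n).$$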
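The final identity can be checked in one line. The worked step below is a sketch that assumes the full form of the QR factorization, $A = Q \begin{bmatrix} R \\ 0 \end{bmatrix}$ with $Q \in \mathbb{R}^{m \times m}$ orthogonal, that $W$ and $V$ are the orthogonal factors from the square SVD of $R$, and that the identity block $I$ is $(m - n) \times (m - n)$; here the “diagonal” result is written as an $m \times n$ matrix with a zero block underneath.

```latex
U^T A V
  = \begin{bmatrix} W^T & 0 \\ 0 & I \end{bmatrix} Q^T \, Q \begin{bmatrix} R \\ 0 \end{bmatrix} V
  = \begin{bmatrix} W^T & 0 \\ 0 & I \end{bmatrix} \begin{bmatrix} R V \\ 0 \end{bmatrix}
  = \begin{bmatrix} W^T R V \\ 0 \end{bmatrix}
  = \begin{bmatrix} \Sigma \\ 0 \end{bmatrix},
\qquad \Sigma = \mathrm{diag}(\sigma_1, \ldots, \sigma_n).
```

Only the leading $n$ columns of $Q$ interact with $R$, which is why the SVD array needs to work on the $n \times n$ factor alone.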

Actually, in the conventional one-processor setting, it is advisable to compute

the QR factorization first anyway, especially if m >> n. See Chan [3].

We have not looked into the details of the interface between the QR and the

SVD arrays. It would be preferable to have a single array with enough gener-

ality to carry out both phases of the computation. For example, perhaps the

QR factorization could be obtained as A is ‘‘loaded’’ into the combination

QR-SVD array.

We conclude with some remarks about the handling of SVD problems

whose dimension differs from the effective dimension of the systolic array of

Section 5. To fix the discussion, suppose that A is an n-by-n matrix whose

SVD we want and that our array can handle SVD problems with maximum

dimension N.

If $n < N$, then it is natural to have the array compute the SVD of

$$\hat{A} = \begin{bmatrix} A & 0 \\ 0 & 0 \end{bmatrix} \in \mathbb{R}^{N \times N}:$$

$$\hat{U}^T \hat{A} \hat{V} = \mathrm{diag}(\sigma_1, \ldots, \sigma_n, 0, \ldots, 0).$$

Our method of computing this SVD (based on JSVD) ensures that

$$\hat{U} = \begin{bmatrix} U & 0 \\ 0 & I \end{bmatrix}, \qquad \hat{V} = \begin{bmatrix} V & 0 \\ 0 & I \end{bmatrix},$$

whence $U^T A V = \mathrm{diag}(\sigma_1, \ldots, \sigma_n)$. This follows because of (3.4) and (3.5). (Whenever a two-by-two SVD involves an index greater than $n$, the special form of the orthogonal update guarantees that $\hat{A}$'s block structure is preserved.) Let us point out that an SVD procedure need not produce a $\hat{U}$ and a $\hat{V}$ matrix with the above block structure in the case rank($\hat{A}$) = rank($A$)
