Topic 1 - Introduction to Computer Hardware Architecture

PC Architecture (TXW102)

Topic 1:

Introduction to Computer Hardware Architecture

© 2008 Lenovo

PC Architecture (TXW102) September 2008 1


Objectives: Computer Hardware Architecture

Upon completion of this topic, you will be able to:

1. Identify the types of computers and their key differentiating features

2. Define various PC architecture terminology including computer layers, controllers, and buses

3. Identify common industry standards and the objective of benchmarks used with computer systems

4. Identify two features to enhance computer security


Types and Features of Computers

Notebook
- Mobile
- Wireless
- Designs: Ultraportable (one spindle), Full function (two spindles), Desktop alternative (three spindles), Tablet
- Brands: Lenovo IdeaPad, Lenovo ThinkPad

Desktop
- Non-mobile
- Wired connection
- Designs: Mini Desktop, Ultra Small, Small, Desktop, Tower, PC Blades
- Brands: Lenovo IdeaCentre, Lenovo ThinkCentre

Server
- High security
- Data processing and storage
- Designs: Tower, Rack, 1U and 2U rack, Blades
- Brands: Lenovo ThinkServer


Types and Features of Computers

The three main types of computers (or PCs) are

• Notebooks

• Desktops

• Servers


Notebooks

Notebooks are optimized for traveling and for mobile users who need easy access to data. Notebooks can be categorized as ultraportable (around three pounds), full function (up to seven pounds), or a desktop alternative (desktop features in a larger notebook design). Lenovo uses the brand names IdeaPad or ThinkPad for its notebook systems.

Lenovo ThinkPad T400 and Lenovo IdeaPad U110

The tablet PC is another kind of PC. Tablet PCs are based on a Microsoft specification for ink-enabled touch screen computers (using Windows XP Tablet PC Edition or Windows Vista). The tablet PC comes in two form factors: slate (which has no keyboard attached because the tablet can be connected to a docking station) and convertible (includes integrated keyboard). Lenovo markets the ThinkPad X61 and X200 Tablet, which are convertibles.

Lenovo ThinkPad X200 Tablet


Desktops

Desktops are for users who work in one place and need access to data on the desktop or through a network. Lenovo uses the brand names IdeaCentre or ThinkCentre for its desktop systems.

Common desktop mechanical designs include the following:

• Ultra Small (0 slot x 2 bay or 1 slot x 2 bay)

• Small (2 slot x 3 bay or 4 slot x 3 bay)

• Desktop (4 slot x 4 bay or 3 slot x 3 bay)

• Tower (4 slot x 5 bay)

Ultra Small mechanical in Lenovo ThinkCentre M57 (1 slot x 2 bay)

Small mechanical in Lenovo ThinkCentre A62 (2 slot x 3 bay)

Desktop mechanical in Lenovo ThinkCentre M57 (4 slot x 3 bay)

Tower mechanical in Lenovo ThinkCentre A62 (4 slot x 4 bay)


Workstations

A workstation, such as a Unix workstation, RISC workstation, or engineering workstation, is a high-end desktop or deskside microcomputer designed for technical applications. Workstations are intended primarily to be used by one person at a time, although they can usually also be accessed remotely by other users when necessary.

Workstations usually offer higher performance than is normally seen on a personal computer, especially with respect to graphics, processing power, memory capacity, and multitasking ability.

Workstations are often optimized for displaying and manipulating complex data such as 3D mechanical designs, engineering simulation results, and mathematical plots. A workstation console consists of at least a high-resolution display, a keyboard, and a mouse; many workstations also support multiple displays and use a server-level processor. For design and advanced visualization tasks, specialized input hardware such as graphics tablets or a SpaceBall may be used.

Lenovo markets the ThinkStation family of workstations.

ThinkStation S10 (left) and ThinkStation D10 (right)

Servers

Servers are computers that provide services to other computers, called clients. Servers are typically kept in secure areas because so many users depend on them. They include file servers, print servers, terminal servers, Web servers, e-mail servers, database servers, and computation servers.

Server designs include

• Tower, which rests on the floor

• Rack-based, which must be installed in a rack

• Server blades, which have server circuitry on a single board that slides into an enclosure with other blades

Note: Rack servers vary in height, which is measured in U (1U is 1.75 inches). 1U servers are popular for Web sites because, for serving Web pages, it is better to spread the load across multiple servers (horizontal scalability) than to increase the processing power of a centralized server (vertical scalability).
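
The U measurement in the note above lends itself to simple arithmetic. The following is a minimal sketch, not part of the course material: the function names are hypothetical and the 42U rack height is an assumed example value.

```python
RACK_UNIT_INCHES = 1.75  # height of 1U, as stated in the note above


def chassis_height_inches(units: int) -> float:
    """Return the height in inches of a chassis that is `units` U tall."""
    return units * RACK_UNIT_INCHES


def servers_per_rack(rack_units: int, server_units: int) -> int:
    """Return how many whole servers of a given height fit in a rack."""
    return rack_units // server_units


if __name__ == "__main__":
    rack = 42  # assumed rack height in U; not a figure from this course
    for size in (1, 2):
        print(f"{size}U server: {chassis_height_inches(size)} in tall, "
              f"{servers_per_rack(rack, size)} per {rack}U rack")
```

Halving the server height doubles the number of servers per rack, which is why 1U systems suit workloads that scale horizontally.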

Lenovo markets the ThinkServer family of servers.


Types and Features of Computers:

Differentiating Computer Features

Notebook
- Size and weight
- Power mgmt/battery
- Screen type and size
- Integrated wireless
- Docking station or port replicator
- Number of spindles or bays
- Modular bay(s)
- Sales presentation capability
- Integrated wireless, Bluetooth
- Security chip
- ThinkVantage Technologies

Desktop
- Fastest processor
- Graphics performance
- 3D graphics adapters
- Systems management
- Removable storage (DVD±RW)
- Chipset
- Security chip
- ThinkVantage Technologies

Server
- Support many concurrent users (up to 1000s)
- Multiple processors
- Large memory capacity
- Large disk capacity (internal and external)
- Redundant components (disk, fans, power supplies, memory, etc.)
- Hot-swap components to maximize uptime (disk, fans, power supplies, memory, etc.)
- Hardware Failure Prediction to warn the admin of any impending failure


Differentiating Computer Features

Each type of computer has important characteristics that distinguish it from the others.

Key differentiating features of notebooks are size and weight, power management and battery, screen type and size, integrated wireless, docking station or port replicator, number of spindles or bays, modular bay(s), sales presentation capability, Bluetooth, infrared, security chip, and ThinkVantage Technologies.

Key differentiating features of desktops are fastest processor, graphics performance, 3D graphics adapters, systems management, removable storage (DVD±RW), chipset, security chip, and ThinkVantage Technologies.

Key differentiating features of servers are support of many concurrent users (up to 1000s), multiple processors, large memory capacity, large disk capacity (internal and external), redundant components (disk, fans, power supplies, memory, etc.), hot-swap components to maximize uptime (disk, fans, power supplies, memory, etc.), and Hardware Failure Prediction to warn the admin of any impending failure.


Types and Features of Computers:

Netbook vs. Notebook

| | Netbook | Notebook |
|---|---|---|
| | Device for Internet | Multi-purpose PC |
| Usage | Purpose built for Internet use; Web usage: learn, play, communicate, and view; no optical drive | Performance for multi-tasking, content creation, intense workloads, and Internet; entertainment, productivity, and rich Web |
| Size | Compact form factor (7-10" screen) | Range of form factors (> 10" screen) |
| Price | ~$250 to $450 | $450 and above |
| Intel brands | Intel Atom Processor | Intel Celeron, Pentium, Core 2 Processor brands |


Netbook vs. Notebook

The netbook is a smaller and lighter version of a notebook with less functionality.

Lenovo markets the IdeaPad S10 netbook.

Lenovo IdeaPad S10 netbook


Types and Features of Computers:

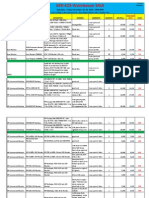

Architectural Choices

Table: client architectures rated per criterion as decided advantage, neutral, or major weakness. Source: Gartner (March 2007)

Criteria: Security, Ease of Manageability, Total Cost of Ownership, Capital Cost, Application Compatibility, Application Performance, Network Impact, Offline

Architectures (vendors):

• Traditional PC (Several)

• Server-based Computing (Citrix, MS)

• Blade PC (HP, ClearCube)

• Virtual Desktops From Servers (VMWare)

• Application Streaming (Softricity, AppStream)

• OS Streaming (Ardence, Wyse)


Architectural Choices

Many computing architectures are available because different requirements drive different architectures.


Types and Features of Computers:

Architecture Spectrums

Figure: spectrum of architectures, from left to right: PCs, Hosted VMs, Blade PCs, Streaming, Server-based Computing, Web

• Left end of the spectrum (PCs): More Complex, Higher Total Cost of Ownership, Highly Flexible

• Right end of the spectrum (Web): More Secure, Lower Total Cost of Ownership, Rigid Design

• Different architectures can coexist

Source: Gartner (March 2007)


Architecture Spectrums

The PC provides the greatest degree of flexibility for diversity of applications, types of management, and configuration options. Moving along the spectrum to other architectures such as hosted virtual machines, blade PCs, streaming, server-based computing, and Web-based computing, security increases and total cost of ownership falls, at the expense of a more rigid design with little flexibility.


Types and Features of Computers:

PC Blades

• PC is removed from user’s desk and replaced with a small User Port

• PC Blade is in a rack in secure, centralized location

• Lenovo resells ClearCube-branded PC Blades and management software

User Ports: The ClearCube User Port connects computer peripherals like the monitor, keyboard, mouse, speakers, or USB peripherals to PC Blades at the data center or telecom closet.

PC Blade: The PC Blade is each user's actual computer: a configurable, Intel-based PC Blade that delivers full functionality to the desktop.

Cage: The ClearCube Cage is a centralized chassis that houses up to eight PC Blades.

ClearCube Management Suite: The ClearCube Management Suite empowers administrators to manage the complete ClearCube infrastructure from any location. This powerful, remotely accessible suite includes a versatile set of features such as "hot spare" switching, move management, and automatic data backup.

Diagram labels: User Port (I/Port), Ethernet; User Port (C/Port), Direct Connection


PC Blades

PC Blades separate the guts of the PC from the physical desktop, putting processing power in data

centers and computer rooms. Employees then have only a monitor, keyboard and mouse on their

desks, along with a client appliance that is linked back to a blade server. PC blades offer a range of

benefits, including streamlined management and tighter security since all the hardware is

centralized. PC Blade configurations provide a dedicated blade to each user or a pool of blades that

can be dynamically allocated. In addition, spare blades can be used to provide hot backup to avoid

system outages.
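
The two allocation models and the hot-backup idea described above can be pictured with a small model. This is an illustrative sketch only: the class and method names are hypothetical and do not represent the ClearCube Management Suite or any vendor API.

```python
class BladePool:
    """Toy model of a pool of PC Blades with dynamic allocation and hot spares."""

    def __init__(self, blade_ids):
        self.free = list(blade_ids)  # blades not assigned to anyone
        self.assigned = {}           # user -> blade id

    def connect(self, user):
        """Give the user a blade, reusing the one they already hold."""
        if user not in self.assigned:
            if not self.free:
                raise RuntimeError("no free blade available")
            self.assigned[user] = self.free.pop(0)
        return self.assigned[user]

    def fail_over(self, user):
        """Move a user from a failed blade to a spare; the failed blade is retired."""
        if user not in self.assigned:
            raise KeyError(user)
        if not self.free:
            raise RuntimeError("no spare blade available")
        failed = self.assigned[user]
        self.assigned[user] = self.free.pop(0)
        return failed, self.assigned[user]


pool = BladePool(["blade-1", "blade-2", "blade-3"])
pool.connect("alice")
pool.connect("bob")
print(pool.fail_over("alice"))  # alice is switched to the remaining spare
```

A dedicated-blade configuration corresponds to calling `connect` once per user and never releasing the blade; the spare left in `free` is what makes the failover possible.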

PC Blade Advantages

• Centralized asset management – PC Blade hardware is centralized for easy access and asset

management.

• Mission critical applications – Blade infrastructure has high levels of redundancy; users can be

swapped to a functioning blade very quickly in case of hardware or software failure

• Reduced support costs – Hardware or software upgrades can be managed centrally in a fraction

of the time it would take to upgrade large numbers of dispersed PCs.

• Multiple locations – There is potential to support multiple locations with PC Blades by remotely

switching a user to a spare standby blade in the event of hardware failure (saving the cost of an

urgent engineer visit or keeping support staff on-site).

• Reduced costs for new users – It is lower cost to install and configure a new user with a PC

Blade than a desktop.


• Easy relocation – There are no significant costs when users move work location within a building.

• Improved security – The physical asset and intellectual property on the disk are centralized, e.g., it

is easier to steal a hard disk from a desktop than a PC Blade.

• Reduced user down time – Spare PC Blades can be configured to provide hot backup in case of

hardware failure.

• Improved appearance – In front office environments, the client’s user port has no moving parts,

generates no noise, produces little heat, and requires less space.

• Remote access – Users can access their own PC environment from multiple desks in the building

or from other remote locations with blade infrastructure installed

PC Blade Disadvantages

• Higher acquisition cost – The purchase price of a PC Blade and its infrastructure is higher than that of a standalone PC.

• No wireless mobility – Mobile users or users who need to work away from their desks are not supported.

• Lagging technology – PC Blade processors and technology may be six to 12 months behind desktop technology.

• Unsuitable for power graphics users.

• New infrastructure – Significant change to current PC deployment, maintenance, and support (skills, tools and processes).

• Mixed environments – Upgrades are more difficult to plan and manage when a customer has a mix of PC Blades and traditional desktops.

• No local optical drives – There are no local CD and DVD drives other than USB devices, which introduce security risks and asset control issues.

• User resistance – Advanced or experienced PC users may resist losing access to 'their' PC.

• Extra cost for redundancy – Extra cost for closet spare (with cooling) to enable redundancy.

• Technology lock in – Little option to cascade or sell blades to other users or customers.


Types and Features of Computers:

ClearCube PC Blade Products

• PC Blade
- PC Blade is located with other PC Blades in a rack in centralized location
- Intel processor, memory, disk, and graphics on PC Blade

• User Port
- Small client device that connects the user’s monitor, keyboard, mouse, speakers, and USB devices to their PC Blade
- No moving parts, generates no noise, and creates little heat
- Can support multiple monitors

Pictured: PC Blade (ClearCube R1300) and User Port (ClearCube C/Port)


ClearCube

ClearCube is a company that has offered PC Blades since 1997 and dominates the PC Blade

market. Lenovo resells ClearCube-branded PC Blades and management software.

See www.clearcube.com for more information.


Types and Features of Computers:

Lenovo IdeaPad Notebooks and IdeaCentre Desktops

Lenovo IdeaPad Notebooks

IdeaPad U110 IdeaPad Y530 IdeaPad Y730

Lenovo IdeaCentre Desktops

IdeaCentre K210 IdeaCentre Q200


Lenovo IdeaPad Notebooks and IdeaCentre Desktops

In 2008, Lenovo introduced the IdeaPad Family of notebooks and the IdeaCentre Family of

desktops. The following information shows the branding and positioning of the two product

lines.

Lenovo

New World Company

Best Engineered PCs

| Think Family (ThinkPad and ThinkCentre) | Idea Family (IdeaPad and IdeaCentre) |
|---|---|
| The Ultimate Business Tool | Engineered for People |
| Rock Solid | Cutting Edge Capabilities |
| Thoughtful Design | Trendsetting Design |
| Lowest TCO | Peace of Mind |

Visit lenovo.com for more information on the IdeaPad notebooks and IdeaCentre desktops.


Types and Features of Computers:

Lenovo ThinkPad, ThinkCentre, and ThinkVision

Lenovo Think Family

• ThinkVantage Technologies

• ThinkPlus Accessories and Services

• ThinkVantage Design

ThinkPad, ThinkCentre, and ThinkVision offerings will continue to differentiate Lenovo from our competitors with…

• Quality, service, and support expected from Lenovo

• Industrial design that simplifies and enhances usability

• Open standards-based products that work well together

• Lenovo innovation that delivers key benefits for customers


Lenovo ThinkPad, ThinkCentre, and ThinkVision

The Lenovo Think-branded family of offerings includes the following brands:

• ThinkPad – Notebook category

• ThinkCentre – Desktop category

• ThinkVision – Visuals category

• ThinkVantage Technologies – Solutions and offerings category

• ThinkPlus Accessories – Accessories and upgrades for Think products

• ThinkPlus Services – Services for Think offerings

An essential part of what makes a product a ThinkPad, ThinkCentre, or ThinkVision offering is

its industrial and graphic design. Lenovo calls this design approach “ThinkVantage Design.”

ThinkVantage Design is built upon the concept of synergistically joining form and function.

ThinkVantage Design provides value to the customer by providing meaningful innovation that

enhances the ownership experience. It also has its own “design DNA” based on the classic

ThinkPad design, which is its heritage.

Visit lenovo.com for more information on Lenovo brand offerings.


Types and Features of Computers:

ThinkVantage Technologies

• ImageUltra Builder – Consolidates multiple software images into one master image

• System Migration Assistant – Moves system settings and data easily from an old PC to a new PC

• Software Delivery Center – Automates delivery of application software updates to PCs

• Access Connections – Switches painlessly between settings for different wireless and wired networks

• System Information Center – Collects and tracks PC asset and security compliance information

• Client Security Solution – Secures users’ PCs, data, and network communications from unauthorized access

• Rescue and Recovery – Enables hassle-free recovery of data and system image

• System Update – Accesses, downloads, and installs the latest updates for Think systems

• Productivity Center – Provides users with access to self-help support tools and information with just one click


ThinkVantage Technologies

ThinkVantage Technologies are a select group of offerings from Lenovo designed to address emerging customer needs. Adding value to open industry standards, ThinkVantage Technologies help customers manage the cost of deploying end-user systems, implement new technologies such as wireless computing, and help ensure that these technologies can be implemented securely. While many of these offerings currently exist, some are being significantly enhanced and all of them have now been consolidated into a single family of offerings.

Visit lenovo.com/thinkvantage for more information.

Pictured: popup from ThinkVantage Productivity Center


Types and Features of Computers:

Benefits of ThinkVantage Technologies

ThinkVantage Technologies address the entire customer ownership experience from deployment to disposal.

Image Creation
• ImageUltra Builder
- Hardware Independent Imaging Technology
- Dynamic Operating Environment
- Software Delivery Assistant
• Image on Demand
• Imaging Technology Center

Network Deployment
• Remote Deployment Manager

Client Migration
• System Migration Assistant

Hassle Free Connection
• Access Connections

Secure Client Data
• Client Security Solution
• Password Manager

Secure Data Media
• Active Protection System

Backup and Recovery
• Rescue and Recovery

Critical Updates
• System Update
• Rescue and Recovery with Antidote Delivery Manager

End-user Self-help Portal
• Productivity Center
• Rescue and Recovery

Information and Asset Management
• System Information Center

Software on Demand
• Software Delivery Center

Hard Drive Data Destruction
• Secure Data Disposal


Benefits of ThinkVantage Technologies

Industry analysts state that the annualized cost of a PC represents less than 20 percent of the annual total cost of ownership. ThinkVantage Technologies address the remaining 80 percent or more to help reduce your total cost of ownership. ThinkVantage Technologies also help improve your business' productivity and efficiency throughout each system's life cycle as you deploy, connect, protect, support, and dispose of your company's PCs.

End-user productivity (value out of the box that also can provide key IT benefits)
• Access Connections
• Productivity Center
• Active Protection System
• Client Security Software
• Rescue and Recovery
• System Migration Assistant
• System utilities

Life-cycle Management (solutions for SMB and LE)
• System Information Center
• Software Distribution Center
• ImageUltra Builder
• Secure Data Disposal
• Remote Deployment Manager


| Life Cycle Phase | TVT | Purpose/Function of Tool |
|---|---|---|
| Deploy | ImageUltra Builder | Umbrella name for imaging technology that is focused on simplifying the complexity of creating and managing corporate images; ImageUltra Builder consists of the three components described below |
| | - Software Delivery Assistant (SDA) | Provides customized installation of software applications based on a user's unique work group assignment and/or needs |
| | - Dynamic Operating Environment (DOE) | Consolidates support for multiple operating systems and languages into one Super Image |
| | - Hardware Independent Imaging Technology (HIIT) | Provides hardware-independent images that will support multiple system types via system-specific drivers and applications pulled from the PC’s service partition (supported on ThinkPad and ThinkCentre systems only) |
| | System Migration Assistant (SMA) | An easy-to-use migration tool that automates the migration of both settings and data through a menu system and advanced scripting capability |
| | Remote Deployment Manager (RDM)* | Network-based imaging and system support tool that distributes images and updates system settings (includes PowerQuest Drive Image Pro Lite) |
| Connect | Access Connections | Manages all wireless and wired connectivity settings and allows easy switching between them |
| Protect | Client Security Solution (CSS) | Security software that provides authentication of end-user identity, encryption of data, and simplified password management |
| | Active Protection System (APS) | Prevents some hard-drive crashes on most new ThinkPad models by temporarily stopping the hard drive when a fall or similar event is detected; provides up to four times greater impact protection than systems without this feature |
| Support | Productivity Center | Provides one-button access to self-help support tools and information about a user’s system |
| | Rescue and Recovery | A help desk behind the button that allows a system to recover itself from OS corruption and even hard drive failures, fills the gap between traditional backup and restore programs and re-imaging, allows remote system recovery with or without user intervention, and automates the deployment of critical patches, even if a system will not boot |
| | System Information Center (SIC) | An electronic inventory management solution that tracks client PC hardware and software assets, provides ThinkVantage Technology usage information, and reports and measures security compliance |
| | Software Delivery Center (SDC) | Automates delivery of application software updates to PCs without end-user intervention or disruption |
| | Asset ID | A unique asset-tagging technology that allows data, events, actions, and responses to be read by or become interactive with other programs |
| | System Update | Accesses, downloads, and installs the latest updates for Think systems |
| | IBM Director Agent | An advanced CIM/WMI-based agent for systems and asset management that allows extensive upward integration into other enterprise management tools and databases |
| | IBM Director | A powerful systems management program with extensions targeted for improved system setup, remote manageability, and event monitoring of clients and servers |
| Dispose | Secure Data Disposal | An automated program that allows multiple levels of disk cleansing, which ensures systems are properly safeguarded during disposal; meets the DOD Level 5 and German standards for safe disposal |


| ThinkVantage Technology | Potential Savings* | Assumptions Used in Calculating Savings |
|---|---|---|
| ImageUltra Builder | $100 per unit | Deployed once per system versus typical cloned image management and loading process |
| System Migration Assistant | $70 per unit deployed | Deployed once per system versus manual processes |
| Remote Deployment Manager | $90 per system | Deployed once per system versus manual processes |
| Access Connections | $50 per wireless PC | Annual savings and only in notebook systems; assumes two help desk calls per user per year |
| Client Security Solution | $124-250+ per unit | $35-60 hardware replacement; $49-150 encryption software replacement; $40 support cost reduction; replaces comparable equipment (key fobs, etc.) |
| Active Protection System | $200 or more per occurrence | Only in notebook systems; $200 is the hardware replacement cost for a ThinkPad 30 GB hard drive |
| Rescue and Recovery | $180 per occurrence | Used for one incident in 13% of installed systems; average support time savings of 183 minutes |
| Secure Data Disposal | $45 per PC | Once per PC per life cycle |

* Potential savings are based on typical customer environments. Some figures represent costs that customers may redirect from labor-intensive areas to other areas of their business. Other figures are based on cost avoidance of competitive solutions purchased separately. All figures are calculated using the TVT and Wireless Calculators and data from Gartner Research and customers. Actual savings are not guaranteed and will vary by customer.
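
The arithmetic behind two of the rows above can be checked directly. This is a small sketch, not the TVT calculator mentioned in the footnote: the function names are hypothetical, and the fleet size of 1,000 systems is an assumed example value.

```python
def sum_ranges(ranges):
    """Add (low, high) dollar ranges component-wise."""
    return sum(lo for lo, _ in ranges), sum(hi for _, hi in ranges)


def expected_savings(per_occurrence, incident_percent, systems):
    """Expected savings across a fleet when only some systems have an incident."""
    return per_occurrence * incident_percent * systems / 100


# Client Security Solution components, taken from the table above:
# hardware replacement, encryption software replacement, support cost reduction
css_components = [(35, 60), (49, 150), (40, 40)]
css_low, css_high = sum_ranges(css_components)
print(css_low, css_high)

# Rescue and Recovery: $180 per occurrence, one incident in 13% of systems.
# The fleet size of 1,000 is an assumption made for this example.
print(expected_savings(180, 13, 1000))
```

The three Client Security Solution components add up to the $124 to $250 range quoted in the table, and the Rescue and Recovery figure shows how a per-occurrence saving translates into a fleet-wide estimate.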


PC Architecture:

Computer Layers

• Layered structure
- This structure allows for compatibility.
- Bypassing layers increases performance.

• BIOS (basic input/output system)
- Located in flash memory (sometimes called EEPROM)
- Supports plug-and-play
- Supports power management

• Device driver
- Software to control a piece of hardware

Diagram of layers: User; Applications; Middleware and API; Operating system; Device driver and BIOS; Firmware; Adapter and Hardware

Pictured: EEPROM BIOS


Computer Layers

A computer consists of several layers, each with interfaces for communicating with the layer next to it. A layered structure allows for compatibility; for example, the same shrink-wrapped operating system can work on millions of PCs from different vendors because it interfaces with industry-standard BIOS calls. The disadvantage of layers is that each one can slow performance. To increase performance, a layer could be bypassed; for example, an application could be written directly to the BIOS and device driver of a specific computer, which would gain performance but would work only on that specific computer.
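
The trade-off between compatibility and performance can be illustrated with a toy model. This is a minimal sketch under stated assumptions: all class and function names are hypothetical, and counting "hops" through layers is only a stand-in for real overhead.

```python
class Hardware:
    """Stands in for one specific machine's display hardware."""

    def __init__(self):
        self.screen = []

    def write_cell(self, ch):
        self.screen.append(ch)


class DeviceDriver:
    """Machine-specific code that knows how to drive this Hardware."""

    def __init__(self, hardware, trace):
        self.hardware = hardware
        self.trace = trace

    def put_char(self, ch):
        self.trace.append("driver")
        self.hardware.write_cell(ch)


class OperatingSystem:
    """Portable interface that applications are normally written against."""

    def __init__(self, driver, trace):
        self.driver = driver
        self.trace = trace

    def print_char(self, ch):
        self.trace.append("os")
        self.driver.put_char(ch)


def portable_app(os_layer, text):
    """Uses every layer, so it runs wherever an OperatingSystem is available."""
    for ch in text:
        os_layer.print_char(ch)


def machine_specific_app(driver, text):
    """Skips the OS layer: fewer hops, but tied to this machine's driver."""
    for ch in text:
        driver.put_char(ch)


trace = []
hw = Hardware()
driver = DeviceDriver(hw, trace)
portable_app(OperatingSystem(driver, trace), "hi")
hops_portable = len(trace)

trace.clear()
machine_specific_app(driver, "hi")
hops_bypass = len(trace)

print(hops_portable, hops_bypass)  # the bypass path crosses fewer layers
```

Both applications produce the same output on this machine; only the portable one would keep working if `DeviceDriver` were swapped for a different vendor's implementation behind the same `OperatingSystem` interface.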

Some of the different computer layers shown in the diagram above are explained below.

• Applications are the software programs with which a user typically interacts, such as those used for word processing (Microsoft Word), Web browsing (Internet Explorer), sending e-mail (Lotus Notes), and using spreadsheets (Microsoft Excel).

• Middleware is software that provides an additional level of abstraction to applications. It hides the complexity of code that is not directly related to the business objectives of the application. Writing applications against the basic APIs that the OS exposes can be very time consuming, and it can take a while before a programmer gets to the “business modules” of the application being developed. Middleware offers a higher-level interface than the OS does and implements all the “boring stuff,” so that developers can concentrate on the business logic. Examples include IBM DB2, Oracle, Microsoft SQL Server, Lotus Domino, Microsoft Internet Information Server, IBM WebSphere, and BEA WebLogic.


• The operating system is a set of programs that provides an environment in which applications can run, allowing them to easily take advantage of the processor and I/O devices, such as disks or adapters. Examples include Windows 2000, Windows XP, Windows Vista, Red Hat Linux, and AIX 5L.

• The Basic Input/Output System (BIOS) is a set of program instructions that activates system functions independently of hardware design (it is the layer between the physical hardware and the operating system) and allows for software compatibility. BIOS is typically located in flash memory (EEPROM) on the systemboard. When a PC is started, the BIOS runs a power-on self-test (POST) to test the system. It then prepares the computer for operation by searching for other BIOSes on the plug-in boards and setting up pointers (interrupt vectors) in memory to access those routines. It then loads the operating system and passes control to it. The BIOS accepts requests from the drivers as well as the application programs. The BIOS supports plug-and-play and power management. Although there are several BIOS vendors, there are few differences among their products.

Note: OS, BIOS, or driver updates sometimes must be performed before the OS or network drivers are loaded. To address this, a Preboot Execution Environment (PXE) allows the system to boot off the network. At boot, a PXE agent executes, and the PC gets an IP address from a DHCP server and then uses the BOOTP protocol to look for a PXE server. The PXE client is firmware implemented in BIOS (if LAN hardware is on the systemboard) or as a boot PROM (if the LAN hardware is an adapter). Programs, including those in the PXE environment, require system configuration and diagnostic information. Systems Management BIOS (SMBIOS) is a specification that defines how the BIOS makes the necessary information available to programs running under the OS and in the preboot environment.

• Firmware is usually the layer of software that is between the device driver and the adapter. It typically is on an EEPROM of an adapter card and can be upgraded with new releases. Firmware is similar to BIOS.

• Device drivers are software (which may be embedded in firmware) that controls or emulates devices attached to the computer, such as a printer, scanner, hard disk, monitor, or mouse. Device drivers are typically loaded low into the memory of PCs at boot time. A device driver expands an operating system's ability to work with peripherals and controls the software routines that make peripherals (such as a network card, a disk, or a printer) work. These routines may be part of another program (many applications include device drivers for printers), or they may be separate programs. Basic drivers come with the operating system, and additional drivers are normally installed for each peripheral added.


Layers of a PC: Illustration

To illustrate the different layers of a PC, below is a graphic that shows the series of processes

that occur when a user executes a single keystroke on a computer keyboard, and the

corresponding key to the numbered steps.

[Figure: keystroke path through the layers of a PC. Hardware: keyboard, cable, and keyboard port (steps 1 and 2) and video circuitry (step 7). BIOS: steps 3 and 5. Operating system: step 4. Application program: step 6.]

1. The user presses N on keyboard.

2. The code corresponding to pressed key is sent over the cable to the keyboard port on

the systemboard.

3. The keyboard BIOS routine accepts the code, translates it into the letter n, and passes

it to the operating system.

4. The operating system passes the keystroke to the application program and sends the n

to the video BIOS (or the device driver).

5. The video BIOS sends the n to the graphics circuitry.

6. The application program accepts the keystroke and instructs the operating system to

look for the next keystroke.

7. The n appears on the screen.
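The seven steps above can be traced with a small simulation. This is only an illustrative sketch: the function names are invented for the example, and the scan code value 0x31 for the N key is an assumption, not something stated in this course material.

```python
# Hypothetical scan-code table: 0x31 is assumed to be the code sent for the N key.
SCAN_CODE_TO_LETTER = {0x31: "n"}


def keyboard_hardware(scan_code, trace):
    """Steps 1 and 2: key press, then the code travels to the keyboard port."""
    trace.append("1. user presses key")
    trace.append("2. code 0x%02X sent over cable to keyboard port" % scan_code)
    return scan_code


def keyboard_bios(scan_code, trace):
    """Step 3: the keyboard BIOS routine translates the code into a letter."""
    letter = SCAN_CODE_TO_LETTER[scan_code]
    trace.append("3. keyboard BIOS translates code to '%s'" % letter)
    return letter


def video_bios(letter, trace):
    """Step 5: the video BIOS hands the letter to the graphics circuitry."""
    trace.append("5. video BIOS sends '%s' to graphics circuitry" % letter)
    return letter


def application_program(letter, trace):
    """Step 6: the application accepts the keystroke and asks for the next one."""
    trace.append("6. application accepts '%s' and requests next keystroke" % letter)


def operating_system(letter, trace):
    """Step 4: the OS forwards the keystroke to the application and video BIOS."""
    trace.append("4. operating system forwards '%s'" % letter)
    shown = video_bios(letter, trace)
    application_program(letter, trace)
    trace.append("7. '%s' appears on the screen" % shown)


def press_key(scan_code):
    """Run one keystroke through every layer and return the ordered trace."""
    trace = []
    code = keyboard_hardware(scan_code, trace)
    letter = keyboard_bios(code, trace)
    operating_system(letter, trace)
    return trace


for line in press_key(0x31):
    print(line)
```

Each function stands in for one layer, so the call chain mirrors how a request moves up from hardware through the BIOS and operating system and back down to the video hardware.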


PC Architecture:

Subsystems

Major internal subsystems of a PC:

• Processor (Core 2 Duo)

• L2 cache (2 MB)

• Memory (2 GB)

• Bus(es) (PCI, PCIe)

• Graphics controller (SVGA)

• Disk controller (SATA) and disk (250 GB)

• Slots (PCI Express)

[Figure: block diagram of the subsystems: processor with L1/L2 cache; MCH or GMCH host bridge (memory controller and optional graphics controller) with memory; PCI Express x16 slot and PCI Express slots; Direct Media Interface; I/O Controller Hub (ICH) with PCIe, PCI, SATA, IDE, and USB controllers; 4 SATA disks; USB 2.0; AC '97 codec or High Definition Audio; Low Pin Count interface to the Super I/O and firmware hub.]

Subsystems

Subsystems in a PC communicate with each other via buses. Each bus adheres to a particular architecture (set of rules), which makes it compatible with the numerous subsystems that adhere to the same architecture.

Most PCs are associated with the term Wintel, which refers to Microsoft Windows and Intel

chip technologies. PCIe stands for PCI Express.

The processor is the central component of a PC. Intel and AMD are the main vendors of processors used in PCs.

Data in the processor, caches, memory, buses, disk controller, and graphics controller is stored

electrically; so when electrical power is shut down, this data is lost. Data on the disk is stored

magnetically, so the data is saved even when electrical power is removed.


PC Architecture:

Controllers

• All major subsystems have controllers.

• Controllers are circuitry that controls the manner, method, and speed of access to a device.

• Controllers are part of the chipset.

Controller            Controls    Examples
L2 cache controller   L2 cache    2 MB
Memory controller     Memory      2 GB
Bus controller(s)     Data bus    PCI Express (PCIe)
Graphics controller   Monitor     ATI Radeon 9600
Disk controller       Disk        250 GB Serial ATA disk

Controllers

All major subsystems have controllers that define how data will be obtained and stored.

Sometimes a controller is a single chip with the data stored in separate physical circuitry. For

example, a memory controller controls memory, but the data is stored in different physical chips

called DIMMs.

Sometimes a single physical chip contains multiple controllers. For example, the I/O Controller

Hub (ICH) is a single physical chip which houses the PCI Express controller, PCI controller,

Serial ATA controller, EIDE controller, USB controller, and other controllers.

Controllers are normally included in the chipset of the computer.


PC Architecture:

Buses

Most transfers use three buses:

• Control bus

• Address bus

• Data bus

16-bit bus = 16 wires for on/off charges (data)

32-bit bus = 32 wires for on/off charges (data)

64-bit bus = 64 wires for on/off charges (data)

[Figure: processor, memory, and an I/O controller (serving disk, graphics, and LAN) connected by the control, address, and data buses.]

• Some architectures multiplex signals on the same bus (wires)

Buses

If two subsystems are on a bus, such as in the diagram with processor and memory, a data

transfer first involves sending the address on the address bus. Next, data is sent on the data bus.

If multiple subsystems exist on a bus, a control bus is needed in addition to the address and data

bus. The control bus is used to signal which subsystem will control the bus for the next transfer.


Address Bus

An address bus determines how much memory the processor or any subsystem can directly address. For example, a 32-bit address bus provides 2 to the power of 32, or about 4.3 billion, unique addresses, which is enough to address 4 GB of memory.

0 0 0 0 . . . 0 0 0 0 0

0 0 0 0 . . . 0 0 0 0 1

0 0 0 0 . . . 0 0 0 1 0

0 0 0 0 . . . 0 0 0 1 1

0 0 0 0 . . . 0 0 1 0 0

Memory Addressing Similar to Car Odometer

Before data is read or written by a processor, the address of that data is sent first. This address is

sent on a separate set of physical wires called the address bus. The data is then sent on a

different set of physical wires called the data bus.

A processor is designed to use a certain maximum quantity of address lines, and this quantity determines the amount of physical memory that the processor can address. Each address line carries one binary digit (0 or 1), so the maximum addressable memory is the number of unique values that can be formed with that many binary digits. Software can limit this maximum addressability; for example, DOS sets the processor to use 20 address lines because DOS addresses only 1 MB of memory.

Following are some processors and their addressability:

Address lines   Addressable memory   Examples
24              16 MB                486SLC
32              4 GB                 486DX2
36              64 GB                Pentium 4, Xeon
40              1 TB                 EM64T physical memory
44              16 TB                Itanium
48              256 TB               EM64T virtual memory
64              16 EB                IA-64 64-bit flat addressing

Sometimes an operating system limits addressability, so that it cannot utilize all the available physical memory.
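The relationship between address lines and addressable memory is a power of two, which a short calculation makes concrete. The sketch below is illustrative only; the function names are made up for this example, and it assumes binary units (1 KB = 1024 bytes) and one byte per address.

```python
UNITS = ["bytes", "KB", "MB", "GB", "TB", "PB", "EB"]


def addressable_bytes(address_lines):
    """Each address line carries one binary digit, so n lines give 2**n addresses."""
    return 2 ** address_lines


def human_readable(num_bytes):
    """Express a byte count in the largest binary unit (1 KB = 1024 bytes)."""
    value = num_bytes
    unit_index = 0
    while value >= 1024 and unit_index < len(UNITS) - 1:
        value //= 1024
        unit_index += 1
    return "%d %s" % (value, UNITS[unit_index])


for lines in (20, 24, 32, 36, 40, 48):
    print(lines, "address lines ->", human_readable(addressable_bytes(lines)))
```

For instance, 20 address lines give 1 MB (the DOS case mentioned above) and 32 lines give 4 GB.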


PC Architecture:

Bus Speeds

[Figure: bus speeds on the system block diagram. System bus (processor with L1/L2 cache to the MCH or GMCH host bridge): 400 to 1066 MHz. Memory bus: 200 to 800 MHz. PCI Express x16 slot and PCI Express slots: PCIe 2.5 GHz. Direct Media Interface (DMI) to the I/O Controller Hub (ICH): 100 MHz. PCI 2.0 slots: 33 MHz. Low Pin Count (LPC) interface to the Super I/O and firmware hub: 33 MHz. The ICH also connects 4 SATA disks and USB.]

• Each bus is clocked at a different rate.

• Bus speed is different from data transfer rate (MB/s).

• Newer buses are double data rate (same MHz doubles throughput).

• System bus and memory bus can be asynchronous or synchronous.


Bus Speeds

Each bus in a PC has a speed (measured in megahertz) and a data transfer rate.

The bus between the processor and the memory controller was originally called the frontside bus. The processor had two different buses: a backside bus to its integrated L2 cache and a frontside bus outside the processor to the memory controller. With the introduction of the Pentium 4 and follow-on processors, the frontside bus was renamed the system bus, although both terms were still used interchangeably. The name changed because the L2 cache was no longer isolated on a separate, independent bus to the degree that it was for earlier processors, such as the Pentium II and Pentium III.

The memory bus is clocked at 200 to 400 MHz, but most memory today is double data rate (DDR); this means data is transferred on both the rising and falling edges of the clock, which doubles the throughput relative to the base clock speed.

The system bus and the memory bus can be either synchronous or asynchronous, depending on the memory controller of the chipset. Some memory controllers support only synchronous system and memory bus speeds; some support either synchronous or asynchronous speeds. An example of synchronous system and memory bus speeds is a 400 MHz system bus with a 200 MHz memory bus and PC2-3200 DDR2 memory (400 MHz is an even multiple of 200 MHz). An asynchronous example is a 400 MHz system bus with a 266 MHz memory bus and PC2-4200 533 MHz DDR2 memory. DDR3 uses a 400 to 800 MHz memory bus.
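The DDR arithmetic and the synchronous/asynchronous distinction can be checked with a few lines of code. This is a rough sketch under stated assumptions: the function names are invented, a 64-bit memory bus is assumed, and treating "synchronous" as "the system bus speed is a whole multiple of the memory bus speed" is a simplification drawn from the two examples above, not a formal definition.

```python
def ddr_transfers_mt_s(memory_bus_mhz):
    """DDR moves data on both clock edges, so transfers/s are twice the clock."""
    return memory_bus_mhz * 2


def module_bandwidth_mb_s(memory_bus_mhz, bus_width_bits=64):
    """Peak bandwidth in MB/s: transfers per second times bytes per transfer."""
    return ddr_transfers_mt_s(memory_bus_mhz) * bus_width_bits // 8


def is_synchronous(system_bus_mhz, memory_bus_mhz):
    """Simplified test (assumption): whole-number ratio between the two buses."""
    return system_bus_mhz % memory_bus_mhz == 0


# 200 MHz memory bus: 400 million transfers/s and 3200 MB/s, hence "PC2-3200".
print(ddr_transfers_mt_s(200), module_bandwidth_mb_s(200))

# 266 MHz memory bus: about 533 million transfers/s, marketed as PC2-4200.
print(ddr_transfers_mt_s(266), module_bandwidth_mb_s(266))

print(is_synchronous(400, 200))  # the synchronous example from the text
print(is_synchronous(400, 266))  # the asynchronous example from the text
```

The module name encodes the peak bandwidth, which is why a 200 MHz DDR2 memory bus pairs with PC2-3200 modules.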


In 1996 and 1997, the PC industry standardized on the 66 MHz system bus. Migration to a 100 MHz system bus occurred in 1998, then to a 133 MHz bus in 2000. The Pentium 4 introduced a 400 MHz system bus in late 2000, although the clock was really 100 MHz with four transfers per clock (100 MHz × 4) to yield 400 MHz. Later Pentium 4 processors utilized an 800 MHz system bus (200 MHz × 4) followed by a 1066 MHz system bus (266 MHz × 4). The Core 2 Quad uses a 1066 MHz system bus (266 MHz × 4).

Data Transfer Rates

Data transfer rates (assuming that data is transferred on only one edge of the clock):

• 32-bit at 33 MHz is 132 MB/s (PCI bus)

• 32-bit at 66 MHz is 264 MB/s (PCI bus)

• 64-bit at 33 MHz is 264 MB/s (PCI bus)

• 64-bit at 66 MHz is 528 MB/s (PCI bus and system bus)

• 64-bit at 100 MHz is 800 MB/s (system bus)

• 64-bit at 200 MHz is 1.6 GB/s (backside bus to L2 cache; PC1600 DDR memory)

• 64-bit at 266 MHz is 2.1 GB/s (PC2100 DDR memory)

• 64-bit at 400 MHz is 3.2 GB/s (backside bus to full speed L2 cache, Pentium 4 system bus)
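Every figure in the list above follows from one formula: bus width in bytes times clock rate times transfers per clock. The sketch below is illustrative; the function name and the transfers_per_clock parameter are assumptions introduced for the example, and MB/s here means millions of bytes per second.

```python
def transfer_rate_mb_s(bus_width_bits, clock_mhz, transfers_per_clock=1):
    """Peak rate in MB/s = bytes per transfer * MHz * transfers per clock."""
    bytes_per_transfer = bus_width_bits // 8
    return bytes_per_transfer * clock_mhz * transfers_per_clock


# One transfer per clock edge, as the list above assumes.
for width, mhz in ((32, 33), (32, 66), (64, 33), (64, 66), (64, 100), (64, 400)):
    print("%d-bit at %d MHz: %d MB/s" % (width, mhz, transfer_rate_mb_s(width, mhz)))

# A 64-bit bus clocked at 100 MHz with four transfers per clock reaches the
# same peak rate as a 64-bit bus at 400 MHz with one transfer per clock.
print(transfer_rate_mb_s(64, 100, transfers_per_clock=4))
```

Separating the clock from the transfers per clock keeps the distinction between bus speed (MHz) and data transfer rate (MB/s) explicit.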


PC Architecture:

Cache

• Cache is a buffer between subsystems.

• Disk transfer could involve five cache locations.

[Figure: the five cache locations marked on the system block diagram: 1. L1 cache and 2. L2 cache in the processor; 3. memory attached to the MCH or GMCH host bridge; 4. the SCSI or EIDE controller attached to the I/O Controller Hub (ICH); 5. the disks.]

Cache

Cache is a storage place (buffer or bucket) that exists between two subsystems in order for data

to be accessed more quickly to increase performance. Performance is increased because the

cache subsystem usually has faster access technology and does not have to cross an additional

bus. Cache is typically used for reads, but it is increasingly being used for writes as well.

For example, getting information to the processor from the disk involves up to five cache

locations:

1. L1 cache in the processor (memory cache)

2. L2 cache (memory cache)

3. Software disk cache (in main memory)

4. Hardware disk cache (some disks may only use a FIFO buffer)

5. Disk buffer

For reads, one subsystem will usually request more data than what is immediately needed, and

that excess data is stored in the cache(s). During the next read, the cache(s) is searched for the

requested data, and if it is found, a read to the subsystem beyond the cache is not necessary.
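The read behavior described above (fetch more than is needed, then satisfy later reads from the cache) can be sketched as a small read-ahead cache. This is a simplified illustration, not how any particular disk or processor cache is built: the class name, the prefetch size of four blocks, and the stand-in slow_disk_read function are all assumptions for the example.

```python
class ReadCache:
    """Toy read-ahead cache sitting in front of a slower subsystem."""

    def __init__(self, backing_read, prefetch=4):
        self.backing_read = backing_read  # function that reads from the slow side
        self.prefetch = prefetch          # blocks fetched on each miss
        self.blocks = {}
        self.hits = 0
        self.misses = 0

    def read(self, block_number):
        if block_number in self.blocks:
            # Found in the cache: no read beyond the cache is necessary.
            self.hits += 1
            return self.blocks[block_number]
        # Miss: request more data than is immediately needed and keep the excess.
        self.misses += 1
        for n in range(block_number, block_number + self.prefetch):
            self.blocks[n] = self.backing_read(n)
        return self.blocks[block_number]


def slow_disk_read(block_number):
    """Stand-in for the slower subsystem beyond the cache."""
    return "data-%d" % block_number


cache = ReadCache(slow_disk_read, prefetch=4)
for block in range(5):
    cache.read(block)
print("hits:", cache.hits, "misses:", cache.misses)
```

Reading blocks 0 through 4 in order misses on block 0 (which also loads blocks 1 to 3), hits on blocks 1 to 3, and misses again on block 4.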


Industry Standards:

Restriction of Hazardous Substances (RoHS) Directive

• Restriction of hazardous substances in electrical and electronic equipment

• Started by the European Union

• Effective for shipments after July 1, 2006

• Lenovo PC products comply across all of its product lines worldwide

Restricted substances:

• Lead

• Mercury

• Cadmium

• Hexavalent chromium

• Polybrominated biphenyls

• Polybrominated diphenylethers

[Figure: map of Europe labeled with country codes]

Restriction of Hazardous Substances (RoHS) Directive

In February 2003, the European Union (EU) issued directive 2002/95/EC, which restricts the use of certain hazardous substances in electrical and electronic equipment placed on the EU market beginning July 1, 2006. The Restriction of Hazardous Substances (RoHS) Directive requires producers of electrical and electronic equipment to eliminate the use of six environmentally sensitive substances: lead, mercury, cadmium, hexavalent chromium, and the flame retardants polybrominated biphenyls (PBB) and polybrominated diphenylethers (PBDE). The purpose is to eliminate the potential risks associated with electronic waste, so this legislation affects the content and disposal requirements for electronic products.

Most IT hardware is included in scope of the directive: PCs, printers, servers, storage, and options.

Products (and their components) must comply.

Lenovo PC products comply with the RoHS directive across its product lines worldwide.

See www.rohs.gov.uk for more information.


A similar directive from the European Union is the Waste Electrical and Electronic Equipment (WEEE) Directive. WEEE encourages and sets criteria for the collection, treatment, recycling, and recovery of electrical and electronic waste. WEEE requires producers to ensure that equipment they put on the market in the EU after August 13, 2005 is marked with the crossed-out wheeled bin symbol, the producer’s name, and an indication that the equipment was put on the market after August 13, 2005. Lenovo PC products comply with the WEEE Directive requirements.

WEEE-Compliant Symbol

Example of RoHS Labeling on a PCI Express Adapter


Industry Standards:

ENERGY STAR 4.0 and 80 PLUS

• ENERGY STAR 4.0

- Voluntary labeling program by EPA to identify and promote energy-efficient products

- Systems receive certification and logo when energy saving requirements met

• 80 PLUS

- Power supply 80% or greater energy efficient

- Required to get ENERGY STAR 4.0 logo

- Alternative option for non-ENERGY STAR systems; more flexibility on configuration

• Select Lenovo notebooks, desktops, and workstations are ENERGY STAR 4.0-compliant

• All Lenovo ThinkVision monitors are ENERGY STAR 4.0-compliant

ENERGY STAR 4.0 Requirements:

• 80% efficient power supply

• Low idle power

[Figures: logo for ENERGY STAR; logo for 80 PLUS certified power supply]

ENERGY STAR 4.0 and 80 PLUS

In 1992 the US Environmental Protection Agency (EPA) evolved its voluntary program, called

ENERGY STAR, to cover computers. The ENERGY STAR program for computers has the goal of

generating awareness of energy saving capabilities, as well as differentiating the market for more

energy-efficient computers and accelerating the market penetration of more energy-efficient

technologies. On July 20, 2007, the EPA updated the ENERGY STAR computer specification to

Version 4.0.

Monitors can qualify for ENERGY STAR 4.1 Tier 1 or Tier 2 based on power requirements for

Sleep and Off modes.

There are two fundamental changes from the previous ENERGY STAR 3.0 program to ENERGY STAR 4.0:

1. Idle power under the operating system, rather than Standby power, is now measured and used as a metric to earn the ENERGY STAR 4.0 rating.

2. An 80% efficient power supply is a requirement (80 PLUS logo not required).

ENERGY STAR 3.0 was concerned with Standby power. In Standby, the number of storage devices,

processor cores, graphics power, memory etc. makes very little difference to the power used. These

devices are either turned off or are in a very low power state. However, in idle, all these devices are

drawing power.


The 80% efficient power supply is a principal requirement of ENERGY STAR 4.0 and a major innovation. When a power supply converts AC power from the wall to the various DC voltages that the computer needs, there is always a loss of power. The power loss varies with how busy the computer is. An 80% efficient power supply is guaranteed to lose no more than 20% of the AC power at 20%, 50%, and 100% loads. Currently, power supplies for desktop computers range from approximately 65% to 75% efficiency. A system can have an 80 PLUS power supply but not be ENERGY STAR 4.0-compliant. 80 PLUS power supplies are always auto-sensing.
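A quick calculation shows what the efficiency figures mean in watts. The sketch below is illustrative only: the function names and the 200 W DC load are assumptions chosen for the example, not measurements of any real system.

```python
def ac_input_watts(dc_output_watts, efficiency_percent):
    """AC power drawn from the wall to deliver a given DC load."""
    return dc_output_watts * 100 / efficiency_percent


def power_lost_watts(dc_output_watts, efficiency_percent):
    """Power lost in conversion: the difference between AC input and DC output."""
    return ac_input_watts(dc_output_watts, efficiency_percent) - dc_output_watts


# Hypothetical 200 W DC load at three efficiency levels.
for efficiency in (65, 75, 80):
    print(
        "%d%% efficient: draws %.1f W AC, loses %.1f W"
        % (efficiency, ac_input_watts(200, efficiency), power_lost_watts(200, efficiency))
    )
```

At 80% efficiency the hypothetical 200 W load draws 250 W from the wall, so 50 W (20% of the AC power) is lost; at lower efficiency the same load draws and loses more.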

See www.energystar.gov/ and www.80plus.org for more information.

ENERGY STAR 4.0 Classifications

2007 Limits

Category   Idle limit   Hardware configuration
C          95 W         Multi-core processor or multi-processor, and graphics controller with > 128 MB discrete memory, and two of the following three: ≥ 2 GB system memory; TV tuner and/or video capture; ≥ 2 disks
B          65 W         Else: multi-core processor or multi-processor, and ≥ 1 GB system memory
A          50 W         Else: any configuration not covered in Category C or Category B

Sleep limit: 4 W (4.7 W with WOL)

Standby limit: 2 W (2.7 W with WOL)

Hardware configuration determines category and resultant power limits.
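The if/else structure of the classification can be written out as a short function. This is a sketch of the rules as listed in the table above, not an official ENERGY STAR tool; the function and parameter names are invented for the example.

```python
IDLE_LIMIT_WATTS = {"A": 50, "B": 65, "C": 95}


def energy_star_category(
    multi_core_or_multi_processor,
    discrete_graphics_memory_mb,
    system_memory_gb,
    has_tv_tuner_or_video_capture,
    disk_count,
):
    """Return the category (A, B, or C) for a hardware configuration."""
    # Count how many of the three optional Category C features are present.
    extras = sum(
        [
            system_memory_gb >= 2,
            has_tv_tuner_or_video_capture,
            disk_count >= 2,
        ]
    )
    if multi_core_or_multi_processor and discrete_graphics_memory_mb > 128 and extras >= 2:
        return "C"
    if multi_core_or_multi_processor and system_memory_gb >= 1:
        return "B"
    return "A"


# Dual-core system, 256 MB discrete graphics, 2 GB memory, two disks.
category = energy_star_category(True, 256, 2, False, 2)
print(category, IDLE_LIMIT_WATTS[category])
```

The checks run from the most demanding category to the least, mirroring the Then/Else order of the table, so a system falls into Category A only when it matches neither of the other two.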


Industry Standards:

EPEAT

• Industry standard comparing desktops, notebooks, and monitors based on environmental attributes

• Bronze, Silver, or Gold performance tiers

• EPEAT products must be ENERGY STAR 4.0-compliant

• Select Lenovo notebooks, desktops, and monitors are EPEAT Gold-compliant

[Figure: Select Lenovo ThinkCentre A61e desktops are EPEAT Gold-compliant]

Electronic Product Environmental Assessment Tool (EPEAT)

EPEAT is a system to help purchasers in the public and private sectors evaluate, compare, and select desktop computers, notebooks, and monitors based on their environmental attributes. EPEAT also provides a clear and consistent set of performance criteria for the design of products, and provides an opportunity for manufacturers to secure market recognition for efforts to reduce the environmental impact of their products.

The EPEAT Registry on the EPEAT Web site includes products that have been declared by their

manufacturers to be in conformance with the environmental performance standard for electronic

products known as IEEE 1680-2006. EPEAT operates a verification program to assure the credibility

of the registry.

EPEAT evaluates electronic products according to three tiers of environmental performance: Bronze,

Silver and Gold. The complete set of performance criteria includes 23 required criteria and 28 optional

criteria in 8 categories: reduction/elimination of environmentally sensitive materials; material

selection; design for end of life; product longevity/life cycle extension; energy conservation; end of

life management; corporate performance; packaging.

All EPEAT Bronze, Silver, and Gold registered products must be ENERGY STAR 4.0-compliant.

See www.epeat.net for additional information.


Industry Standards:

Climate Savers

• Promotes technologies that improve the efficiency of a computer’s power delivery and reduce the energy consumed in an inactive state

• Lenovo is on the Board of Directors and one of the original 40 companies of the initiative

• Lenovo’s offerings are exceeding current targets of the Climate Savers challenge

[Figure: logo for Climate Savers]

Climate Savers

The goal of the Climate Savers initiative is to save energy and reduce greenhouse gas emissions by setting new targets for energy-efficient computers and components, and by promoting the adoption of energy-efficient computers and power management tools globally.

The typical desktop PC wastes more than half of the power it draws from a power outlet. The

majority of this unused energy is wasted as heat and never reaches the processor, memory, disks, or

other components. As a result, offices, homes, and data centers have increased demands on air

conditioning which in turn increases energy requirements and associated costs.

The Challenge starts with the 2007 ENERGY STAR requirements for desktops, laptops and

workstations (including monitors), and gradually increases the efficiency requirements over the next

three years, as follows:

• From July 2007 through June 2008, PCs must meet the ENERGY STAR requirements. This means 80 percent minimum efficiency for the power supply unit (PSU) at 20 percent, 50 percent, and 100 percent of rated output, a power factor of at least 0.9 at 100 percent of rated output, and meeting the maximum power requirements in standby, sleep, and idle modes.

• From July 2008 through June 2009 the standard increases to 85 percent minimum efficiency for

the PSU at 50 percent of rated output (and 82 percent minimum efficiency at 20 percent and 100

percent of rated output).

• From July 2009 through June 2010, the standard increases to 88 percent minimum efficiency for

the PSU at 50 percent of rated output (and 85 percent minimum efficiency at 20 percent and 100

percent of rated output).
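The three yearly PSU efficiency targets above lend themselves to a simple lookup. The sketch below is a minimal illustration: the period labels, function name, and sample measurements are invented for the example, and it checks PSU efficiency only (the power factor and standby, sleep, and idle limits are deliberately left out).

```python
# Minimum PSU efficiency (percent) at 20, 50, and 100 percent of rated output.
PSU_TARGETS = {
    "2007-2008": {20: 80, 50: 80, 100: 80},
    "2008-2009": {20: 82, 50: 85, 100: 82},
    "2009-2010": {20: 85, 50: 88, 100: 85},
}


def meets_psu_target(period, measured_efficiency):
    """True if the measured efficiency meets the minimum at every load point."""
    targets = PSU_TARGETS[period]
    return all(measured_efficiency[load] >= minimum for load, minimum in targets.items())


# Hypothetical measurements for one power supply.
sample_psu = {20: 83, 50: 86, 100: 82}
for period in PSU_TARGETS:
    print(period, meets_psu_target(period, sample_psu))
```

The hypothetical supply passes the first two periods but not the third, which shows how a product that qualifies today can fall out of compliance as the targets rise.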

See www.climatesaverscomputing.org for more information.


Industry Standards:

GREENGUARD

• Certification focused on acceptable indoor air standards

• GREENGUARD Environmental Institute

• Select Lenovo systems are GREENGUARD certified

[Figure: Lenovo ThinkPad X300 is GREENGUARD certified]

GREENGUARD

The GREENGUARD Environmental Institute (GEI) is an industry-independent, non-profit organization that oversees the GREENGUARD Certification Program. As an ANSI Accredited Standards Developer, GEI establishes acceptable indoor air standards for indoor products, environments, and buildings. GEI’s mission is to improve public health and quality of life through programs that improve indoor air. A GEI Advisory Board of independent volunteers, who are renowned experts in the areas of indoor air quality, public and environmental health, building design and construction, and public policy, provides guidance and leadership to GEI.

The GREENGUARD Certification Program is an industry-independent, third-party testing program for low-emitting products and materials.

Select Lenovo products are GREENGUARD certified.

See www.greenguard.org for more information.


Industry Standards:

Intel High Definition Audio (Intel HD Audio)

• Next-generation architecture (after AC ’97) for implementing audio, modem,

and communications functionality

• Immersive home-theater-quality sound experience including Dolby 7.1

audio capability

• Up to eight channels at 192 kHz with 32-bit quality

• Multi-streaming capabilities to send two or more different audio streams to

different places at the same time

• Supported with Intel ICH6 and later I/O Controller Hubs

- ICH6/ICH7 integrates both AC ’97 and HD Audio to facilitate transition

- ICH8/ICH9/ICH10 only integrates HD audio (not AC '97)

- Only AC ’97 or Intel HD Audio can be used at one time

[Figure: Intel HD Audio supports multiple audio streams at the same time, for example game audio in Dolby Digital alongside chat audio.]

Intel High Definition Audio (Intel HD Audio)

Intel High Definition (HD) Audio is an evolutionary technology that replaces AC ’97. This next

generation architecture for implementing audio, modem, and communications functionality was

developed to enhance the overall user PC audio experience and to improve stability. Intel HD

Audio facilitates exciting audio usage models while providing audio quality that can deliver

consumer electronics levels of audio experience. The Intel HD Audio specification v1.0 was

released in June 2004.

AC ’97                                               Intel High Definition Audio
Single stream (in and out)                           Up to 15 input and 15 output streams at one time and up to 16 channels per stream
6 channels with 20-bit output, 96 kHz stereo max     8 channels with 32-bit output, 192 kHz multi-channel
12 Mb/s max                                          48 Mb/s (SDO), 24 Mb/s (SDI)
Fixed bandwidth assignment                           Dynamic bandwidth assignment
AC ’97 DMAs: dedicated function assignment           DMAs: dynamic function assignment
Codec enumeration at boot time (BIOS)                Codec enumeration done by software (bus driver)
Codec configuration limitation                       No codec configuration limitation
12 MHz clock provided by primary codec               24 MHz clock provided by the ICH6
Driver software developed by audio codec supplier    OS native bus driver and Independent Hardware Vendor value-added function driver


Support for Intel HD Audio is found in the ICH6 and later I/O Controller Hubs. The ICH6 and ICH7 integrate both AC ’97 and Intel HD Audio to facilitate transition from the older AC ’97; however, only AC ’97 or Intel HD Audio can be used at one time. (Either requires an additional external codec chip; when Intel HD Audio was announced, the older AC ’97 chips cost less.) The ICH6/ICH7 Intel HD Audio digital link shares pins with the AC ’97 link. For input, the ICH6/ICH7 adds support for an array of microphones that can be used for enhanced communication capabilities and improved speech recognition. The ICH8 supports only HD Audio (not AC ’97).

Intel HD Audio has support for a multi-channel audio stream, a 32-bit sample depth, and a

sample rate up to 192 kHz.

Intel HD Audio delivers significant improvements over previous-generation integrated audio

and sound cards. Intel HD Audio hardware is capable of delivering the support and sound

quality for up to eight channels at 192 kHz/32-bit quality, while the AC ’97 specification can

only support six channels at 48 kHz/20-bit quality. In addition, by providing dedicated system

bandwidth for critical audio functions, Intel HD Audio is architected to prevent the occasional

glitches or pops that other audio solutions can have.

Dolby Laboratories selected Intel HD Audio to bring Dolby-quality surround sound

technologies to the PC, as part of the PC Logo Program that Dolby recently announced. The

combination of these technologies marks an important milestone in delivering quality digital

audio to consumers. Intel HD Audio will be able to support all the Dolby technologies,

including the latest Dolby Pro Logic IIx, which makes it possible to enjoy older stereo content

in 7.1-channel surround sound.

[Figure: Intel HD Audio Overview. OS audio and modem drivers use the UAA bus driver and the standardized register interface (UAA) to reach the Intel HD Audio controller in the ICH6, which connects over the Intel HD Audio Link to audio, modem, telephony, HDMI, and dock codecs. AC ’97 drivers use the AC ’97 registers and AC Link controller to reach the AC ’97 audio and modem codecs. Only Intel HD Audio or AC ’97 may be used at one time.]

Intel HD Audio also allows users to play back two different audio tracks simultaneously, such as a CD and a DVD, which cannot be done using current audio solutions. Intel HD Audio features multi-streaming capabilities that give users the ability to send two or more different audio streams to different places at the same time, from the same PC.


Microsoft has chosen Intel HD Audio as the main architecture for their new Unified Audio

Architecture (UAA), which provides one driver that will support all Intel HD Audio controllers

and codecs. While the Microsoft driver is expected to support basic Intel HD Audio functions,

codec vendors are expected to differentiate their solutions by offering enhanced Intel HD audio

solutions.

Intel HD Audio also enables enhanced voice capture through the use of array microphones,

giving users more accurate speech input. While other audio implementations have limited

support for simple array microphones, Intel HD Audio supports larger array microphones. By

increasing the size of the array microphone, users get incredibly clean input through better noise

cancellation and beam forming. This produces higher-quality input to voice recognition, Voice

over IP (VoIP), and other voice-driven activities.

Intel HD Audio also provides improvements that support better jack retasking. The computer

can sense when a device is plugged into an audio jack, determine what kind of device it is, and

change the port function if the device has been plugged into the wrong port. For example, if a

microphone is plugged into a speaker jack, the computer will recognize the error and can

change the jack to function as a microphone jack. This is an important step in getting audio to a

point where it “just works.” (Users won’t need to worry about getting the right device plugged

into the right audio jack.)

The Intel HD Audio controller supports up to three codecs (such as an audio codec or modem

codec). With three Serial Data In (SDI) and one Serial Data Out (SDO) signals, concurrent

codec transactions on multiple codecs are made possible.

The SDO connects to all codecs and provides a bandwidth of 48 Mb/s. Each of the three SDIs is typically connected to a codec and has a bandwidth of 24 Mb/s. In addition, the controller has eight non-dedicated, multipurpose DMA engines (four input and four output). This allows fuller utilization of the DMA engines, and better performance, than the dedicated-function DMA engines found in AC ’97. In addition, dynamic allocation of the DMA engines allows link bandwidth to be managed effectively and enables the support of simultaneous independent streams. This capability enables new usage models (e.g., listening to music while playing a multi-player game on the Internet).

[Figure: surround sound speaker layout (left, center, right, two surround speakers, and subwoofer) fed by Dolby Digital or DTS audio from DVD video, alongside CD audio.]

With Intel HD Audio, a DVD movie with 5.1 audio can be sent to a surround sound system in the living room, while you listen to digital music and surf the Web on the PC.


Intel HD Audio Codec On Desktop Systemboard


Industry Standards:

Intel Active Management Technology (Intel AMT)

• As part of Intel vPro technology, Intel AMT enables secure,

remote management of systems

• Offers robust features for asset management, remote

management, and security

• Desktop and notebook systems

Feature: Benefit

Out-of-band system access: Allows remote management of platforms regardless of system power or OS state

Remote troubleshooting and recovery: Significantly reduces desk-side visits, increasing the efficiency of IT technical staff

Proactive alerting: Decreases downtime and minimizes time-to-repair

Remote hardware and software asset tracking: Increases speed and accuracy over manual inventory tracking, reducing asset accounting costs

Third-party nonvolatile storage: Increases speed and accuracy over manual inventory tracking, reducing asset accounting costs

Proactive blocking and reactive containment of network threats: Keeps viruses and worms from infecting end-user PCs and spreading, increasing network uptime


Intel Active Management Technology (Intel AMT)

A major barrier to greater IT efficiency has been removed by Intel Active Management

Technology (Intel AMT), a feature on desktops (Intel Core 2 Processor with vPro Technology)

and notebooks (Intel Centrino with vPro Technology). Using built-in platform capabilities and

popular third-party management and security applications, Intel AMT allows IT to better

discover, heal, and protect their networked computing assets. Here's how:

• Discover: Intel AMT stores hardware and software information in non-volatile memory. With

built-in manageability, Intel AMT allows IT to discover the assets, even while PCs are

powered off. With Intel AMT, remote consoles do not rely on local software agents, helping

to avoid accidental data loss.

• Heal: The built-in manageability of Intel AMT provides out-of-band management capabilities

to allow IT to remotely heal systems after OS failures. Alerting and event logging help IT

detect problems quickly to reduce downtime.

• Protect: Intel AMT System Defense Feature protects your network from threats at the source

by proactively blocking incoming threats, reactively containing infected clients before they

impact the network, and proactively alerting IT when critical software agents are removed.

Intel AMT also helps to protect your network by making it easier to keep software and virus

protection consistent and up-to-date across the enterprise. Third-party software can store

version numbers or policy data in non-volatile memory for off-hours retrieval or updates.


Intel AMT requires the computer system to have an Intel AMT-enabled chipset, network hardware, and software, as well as a connection to a power source and a corporate network connection. On notebooks, Intel AMT may not be available, or certain capabilities may be limited, over a host OS-based VPN or when the system is connecting wirelessly, on battery power, sleeping, hibernating, or powered off. For more information, see www.intel.com/technology/manage/iamt.

Version        Year               Key Features

AMT 1.0        2005               Hardware inventory

AMT 2.1        2006               System defense; Wake on LAN; USB provisioning

AMT 2.5        2006               Notebook support

AMT 2.6/3.0    2007               Remote configuration

AMT 4.0        2008 (notebooks)   WS-MAN and DASH 1.0

AMT 5.0        2008 (desktops)    WS-MAN and DASH 1.0
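For readers who want to query the version history programmatically, the table above can be captured as a small data structure. The following Python sketch is only an illustration: the names `AMT_RELEASES` and `first_release_with` are hypothetical, and the data is limited to the rows listed above.

```python
# Version history transcribed from the table above: (version, year, key features).
AMT_RELEASES = [
    ("AMT 1.0", "2005", ["Hardware inventory"]),
    ("AMT 2.1", "2006", ["System defense", "Wake on LAN", "USB provisioning"]),
    ("AMT 2.5", "2006", ["Notebook support"]),
    ("AMT 2.6/3.0", "2007", ["Remote configuration"]),
    ("AMT 4.0", "2008 (notebooks)", ["WS-MAN and DASH 1.0"]),
    ("AMT 5.0", "2008 (desktops)", ["WS-MAN and DASH 1.0"]),
]


def first_release_with(feature):
    """Return the earliest listed version whose key features include `feature`.

    Matching is case-insensitive; returns None if the table does not list it.
    """
    wanted = feature.strip().lower()
    for version, _year, features in AMT_RELEASES:
        if any(wanted == f.lower() for f in features):
            return version
    return None


print(first_release_with("Wake on LAN"))
print(first_release_with("Remote configuration"))
```

A lookup that returns `None` means only that the feature is not named in this table, not that no AMT version supports it.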

Feature  DASH 1.0  DASH 1.1  AMT 2.6/3.0  AMT 4.0  AMT 5.0

Boot Control X X X X X

Power State Management X X X X X

Hardware Inventory X X X X X

Software Inventory X X X X X

Hardware Alerting X X X X X

Serial Over LAN X X X X

IDE Redirect X X X X

Non Volatile Memory X X X X

Agent Presence X X X

Remote Configuration X X X

Enhanced System Defense X X X

Audit Logs X X

Wireless Management in Sleep States X N/A

Microsoft NAP / Cisco SDN X X

Client Initiated Remote Access (wired) X X

Measured AMT X X

Enhanced System Defense X X

KVM X


Benchmarks

• Understand benchmark objective: either application throughput or subsystem performance

• Examples include:

- BAPCo SYSmark 2004 SE and SYSmark 2007 Preview

- MobileMark 2007

- 3DMark05 and 3DMark06

- SPEC CPU2000

[Image: Lenovo ThinkCentre Desktop]

PC performance doubles every two years.


Benchmarks

The following is a short list of benchmarks and the systems they measure.

• Overall performance:

– SYSmark 2004 SE - SYSmark includes office productivity and Internet content creation benchmark tests. The two scores are combined to produce an overall performance rating. Both SYSmark tests derive scores by using real-world applications to run a preset script of user-driven workloads and usage models developed by application experts.

The SYSmark 2004 SE Internet Content Creation test is organized as scenarios that are

designed to simulate an Internet content creator’s day. This benchmark incorporates such

applications as Adobe Photoshop 7.01, Discreet 3ds max 5.0, and Macromedia

Dreamweaver MX.

The SYSmark 2004 SE Office Productivity test follows the blueprint of the Internet Content Creation (ICC) test by mimicking the usage patterns of today’s desktop and mobile business users, including the concurrent execution of multiple programs. Applications such as Adobe Acrobat 5.0.5, McAfee VirusScan 7.0, and the Microsoft Office suite are used. Each SYSmark test measures the response time of the application to user input. Both scores are combined using a geometric mean to get an overall score.
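The geometric-mean combination described above can be sketched in a few lines of Python. The function name and the two sample scores are hypothetical, and the sketch does not reproduce BAPCo's calibration or rounding rules; it only shows how a geometric mean of two scores differs from a simple average.

```python
import math


def overall_rating(icc_score, op_score):
    """Geometric mean of the Internet Content Creation and Office Productivity scores."""
    return math.sqrt(icc_score * op_score)


# Hypothetical scores chosen for easy arithmetic.
icc = 200
op = 162

print(overall_rating(icc, op))   # geometric mean: 180.0
print((icc + op) / 2)            # arithmetic mean for comparison: 181.0
```

Because it multiplies the scores, the geometric mean rewards balanced results: a system that is very fast in one test but slow in the other scores lower than its arithmetic average would suggest.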


The SYSmark benchmarks are created by BAPCo (Business Applications Performance Corporation), a nonprofit corporation founded in May 1991 to create objective performance benchmarks that are representative of the typical business environment. For notebook systems, MobileMark 2005 is a benchmark created by BAPCo that measures performance and battery life at the same time using popular applications. Visit www.bapco.com for more information.

– SYSmark 2007 Preview – In 2007, BAPCo released SYSmark 2007 Preview. This

benchmark extends the SYSmark family to support Windows Vista. SYSmark 2007

Preview allows users to directly compare platforms based on Windows Vista to those based

on Windows XP Professional and Home.

– MobileMark 2007 – MobileMark 2007 is the latest version of BAPCo's notebook benchmark. It measures both battery life and performance while running on battery power, using real-world applications.

• Graphics performance

– 3DMark03, 3DMark05, 3DMark06, and 3DMarkMobile06 are benchmark tests that run

through different scenes using various DirectX or OpenGL calls to derive a score reflecting

the graphics hardware and driver performance. See www.futuremark.com/products/ for

more information.

• Battery life

– Business Winstone BatteryMark (BWS BatteryMark) 2004 measures the battery life of

notebook computers, providing users with a good idea of how long a notebook battery will

hold up under normal use. This benchmark uses the same workload as in Business

Winstone 2004.

Notable benchmark organizations

• In 1988, the Transaction Processing Performance Council (TPC) was formed to fulfill the need for transaction processing benchmarks that emulate the workloads found on database servers. The council includes representatives from a cross-section of 45 hardware and software companies that meet to establish benchmark content. A primary goal of the council is to provide objective and verifiable performance data to the industry. Visit www.tpc.org for more information.

• The Standard Performance Evaluation Corporation (SPEC) establishes, maintains, and endorses a standardized set of relevant benchmarks and metrics for performance evaluation. Visit www.specbench.org for more information.

– SPEC CPU2000 - Introduced in late 2000, SPEC CPU2000 is a workstation application-based benchmark program that can be used across several versions of Microsoft Windows NT, Windows 2000, and Unix. It consists of the two benchmark suites listed below. Both measure the real-world performance of a computer’s processor, memory architecture, and compiler. These replace CPUmark and FPUmark:

• SPECint2000 measures computation-intensive integer performance.

• SPECfp2000 measures computation-intensive floating-point performance.


Security Issues:

Trusted Platform Module (TPM)

• Security chip to enhance security of PC

• Select Lenovo ThinkPad and ThinkCentre

systems have a TPM

• Used with ThinkVantage Client Security Solution

ThinkPad security chip

ThinkCentre security chip


Trusted Platform Module