
Journal of Intelligent and Robotic Systems 28: 39–59, 2000.

© 2000 Kluwer Academic Publishers. Printed in the Netherlands.


Lossy Image Compression Using Wavelets

NICK D. PANAGIOTACOPULOS, KEN FRIESEN and SUKIT LERTSUNTIVIT

Department of Electrical Engineering, College of Engineering, California State University,

Long Beach, CA 90840, U.S.A.

(Received: 15 June 1998; accepted: 19 July 1999)

Abstract. In this paper, we report the results of applying transform-coding image data compression techniques based on Daubechies and Coifman wavelets. More specifically, the D2, D4, D8, D16, C6, and C12 wavelets were used. The results obtained with these wavelets were compared with those of the discrete cosine transform and clearly demonstrated the superiority of the wavelet-based techniques in compression ratio, image quality, and computational speed. Two quantization methods were used: non-uniform scalar quantization and pseudo-quantization. Both produced satisfactory results (compression ratios of 86–88% and acceptable image quality).

Key words: wavelet, wavelet function, multiresolution analysis (MRA), filter banks, lossy compression, scaling functions, dilation equation, pseudo-quantization.

1. Introduction

The aim of image compression is to reduce the size of an image with or without loss of information (lossy or lossless compression, respectively). One of the most commonly used lossy compression methods is transform coding, using one of the many image transforms available. In a previous paper [4], we examined the performance of the three most common transform methods: the Hadamard, the Walsh, and the discrete cosine transform [9]. The idea is to transform the image into a new domain where it is represented (approximated) by a set of decorrelated coefficients. Clearly, the larger the number of coefficients, the more accurate the approximation. To achieve (lossy) compression, however, the coefficients carrying less important information are removed, while those carrying the most important information are kept. The compressed image can then be reconstructed via the inverse transformation and inspected both qualitatively (visually) and quantitatively (signal-to-noise ratio). It was observed that choosing an appropriate transform (i.e., the best basis) alone yields a satisfactory compression ratio, but not one good enough for most practical applications.

To achieve higher compression ratios, the transformed image was subjected to scalar, non-uniform quantization (which removes unimportant coefficients), followed by a combined variable-length and run-length coding process. In summary, the compression process consists of three steps (transformation, quantization, and encoding), while decompression (reconstruction) is the


inverse process. In [4], we demonstrated that, of these three transform methods, the discrete cosine transform produced the best compression ratio, the best image quality (highest signal-to-noise ratio), and the smallest mean square error, followed by the Walsh and Hadamard transforms. In the same study [4], we examined the performance of two discrete wavelet transform methods, namely the classical Haar wavelet and the Daubechies (D4) wavelet, using the same quantization and encoding as for the other three methods. We found that the D4 wavelet outperformed the discrete cosine transform, yielding a better compression ratio, a higher signal-to-noise ratio, and higher computational speed, while the Haar wavelet gave a very high compression ratio but a low signal-to-noise ratio. Similar observations were made when higher-order Daubechies and Coifman wavelets were used.

2. Theoretical Basis

Image compression consists of two processes: compression (encoding) and decompression (decoding). The compression process requires three steps (transformation, quantization, and encoding), while decompression is the inverse process and requires decoding, dequantization, and inverse transformation.

Next, we discuss the theoretical foundation on which this research is based.

2.1. THE TRANSFORMATION

Let us consider an $N \times N$ image

$$f(x, y) \quad \text{in} \quad D = \{a \le x \le b,\; c \le y \le d\}$$

and a double sequence of square-integrable orthonormal functions

$$\{\phi_{m,n}(x, y)\} \quad \text{in } D, \quad m, n \in \mathbb{N},$$

where $\mathbb{N} = \{1, 2, \ldots\}$ is the set of natural numbers. Then we can approximate $f(x, y)$ by

$$f(x, y) \approx \sum_{m=1}^{N} \sum_{n=1}^{N} a_{m,n}\, \phi_{m,n}(x, y),$$

where

$$a_{m,n} = \int_a^b \int_c^d f(x, y)\, \phi_{m,n}(x, y)\, dy\, dx.$$

The above expression for $f(x, y)$ is known as the generalized Fourier series in 2D, and the $a_{m,n}$ are the generalized Fourier coefficients.

The functions $\phi_{m,n}(x, y)$ are known as basis functions in 2D and can be chosen appropriately (e.g., cosines or other orthonormal functions). Clearly, the image $f(x, y)$ is


now represented by the coefficients $a_{m,n}$, $m, n \in \{1, 2, \ldots, N\}$. If $N$ is large, the number of coefficients $a_{m,n}$ needed to represent the image is (impractically) large, but the representation of $f(x, y)$ is very accurate. In lossy image compression, however, we want to approximate $f(x, y)$ using fewer coefficients, as long as the quality of the compressed image remains acceptable. By an acceptable image we mean one that is visually indistinguishable from the original. That is, acceptability is a function of a user-specified threshold error $e$ (if $e = 0$, the compression is lossless). Therefore, we want to find an approximation whose error does not exceed the allowable level for good image quality. More precisely, the problem is: given the basis functions $\phi_{m,n}(x, y)$ and a desired error $e$, find a cruder approximation $f_e(x, y)$ of $f(x, y)$ using a smaller number of coefficients $a_{m,n}$, $m, n \in \{1, 2, \ldots, M\}$, where $M < N$. That is, we are looking for

$$f_e(x, y) = \sum_{m=1}^{M} \sum_{n=1}^{M} a_{m,n}\, \phi_{m,n}(x, y)$$

such that the $L^2$ norm satisfies

$$\| f(x, y) - f_e(x, y) \|_2 \le e \quad \text{for } M < N.$$

From the expressions for $f(x, y)$ and $f_e(x, y)$, and the orthonormality of the basis functions, we obtain

$$\| f(x, y) - f_e(x, y) \|_2^2 = \sum_{\substack{k, l = 1 \\ \max(k, l) > M}}^{N} a_{k,l}^2 .$$

This indicates that the square of the overall $L^2$ error is just the sum of the squares of all the coefficients we choose to leave out. We assume that the coefficients are arranged in descending order of magnitude (i.e., of information content), so that the retained coefficients $a_{m,n}$, $m, n \in \{1, 2, \ldots, M\}$, are the most significant ones.
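The error identity above can be checked numerically. The sketch below is an illustration only, not the authors' code: it assumes a separable orthonormal DCT-II basis (one of the cosine choices mentioned above) on a small 4 × 4 block, keeps the largest-magnitude coefficients, and compares the squared reconstruction error with the sum of squares of the discarded coefficients. All helper names (`dct_matrix`, `truncate`, etc.) are hypothetical.

```python
import math

def dct_matrix(n):
    """Orthonormal DCT-II matrix; row m holds the m-th cosine basis vector."""
    rows = []
    for m in range(n):
        scale = math.sqrt(1.0 / n) if m == 0 else math.sqrt(2.0 / n)
        rows.append([scale * math.cos(math.pi * (2 * x + 1) * m / (2 * n))
                     for x in range(n)])
    return rows

def matmul(a, b):
    return [[sum(a[i][k] * b[k][j] for k in range(len(b)))
             for j in range(len(b[0]))] for i in range(len(a))]

def transpose(a):
    return [list(row) for row in zip(*a)]

def forward(f, c):
    """Separable 2D transform A = C F C^T, i.e. a_{m,n} = sum_x sum_y phi_m(x) f(x,y) phi_n(y)."""
    return matmul(matmul(c, f), transpose(c))

def inverse(a, c):
    """C is orthonormal, so its inverse is its transpose: F = C^T A C."""
    return matmul(matmul(transpose(c), a), c)

def truncate(a, keep):
    """Zero all but the `keep` largest-magnitude coefficients.
    Returns the truncated array and the sum of squares of the discarded ones."""
    order = sorted((abs(v), i, j) for i, row in enumerate(a) for j, v in enumerate(row))
    out = [row[:] for row in a]
    discarded = 0.0
    for _, i, j in order[:len(order) - keep]:
        discarded += out[i][j] ** 2
        out[i][j] = 0.0
    return out, discarded

image = [[52, 55, 61, 66], [70, 61, 64, 73], [63, 59, 55, 90], [67, 61, 68, 104]]
c = dct_matrix(4)
coeffs = forward(image, c)
kept, discarded = truncate(coeffs, keep=6)
approx = inverse(kept, c)
err2 = sum((image[i][j] - approx[i][j]) ** 2 for i in range(4) for j in range(4))
print(round(err2, 6), round(discarded, 6))  # the two values agree
```

Because only orthonormality is used, the same check would hold for any orthonormal separable basis, including the wavelet bases discussed below.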

Furthermore, to make the computer implementation easier, let us assume that the functions $\phi_{m,n}(x, y)$ are separable for all $m, n \in \mathbb{N}$. That is,

$$\phi_{m,n}(x, y) = \phi_m(x)\, \phi_n(y) \quad \text{in } D, \quad m, n \in \mathbb{N}.$$

Then we can rewrite the expressions for $f(x, y)$ and $a_{m,n}$ as follows:

$$f(x, y) \approx \sum_{m=1}^{N} \left[ \sum_{n=1}^{N} a_{m,n}\, \phi_n(y) \right] \phi_m(x),$$

$$a_{m,n} = \int_a^b \left[ \int_c^d f(x, y)\, \phi_n(y)\, dy \right] \phi_m(x)\, dx.$$

In summary [3], compression is achieved by computing the coefficients $a_{m,n}$ of the image, sorting them in descending order of magnitude, and retaining only the $M < N$ leading ones, such that the discarded coefficients satisfy

$$\sum_{\substack{k, l = 1 \\ \max(k, l) > M}}^{N} a_{k,l}^2 \le e^2$$

for a given error $e$.
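As an illustration of this selection rule, the sketch below (a hypothetical helper, not taken from the paper) treats the coefficients as a flat list, sorts their squares in descending order, and returns the smallest number of leading coefficients whose discarded tail has a squared sum no larger than $e^2$.

```python
def coefficients_to_keep(coeffs, e):
    """Smallest K such that dropping all but the K largest-magnitude coefficients
    leaves a squared L2 error (sum of the discarded squares) of at most e**2."""
    squares = sorted((v * v for v in coeffs), reverse=True)
    tail = sum(squares)          # error energy if no coefficient is kept
    k = 0
    while tail > e * e and k < len(squares):
        tail -= squares[k]       # keep the next-largest coefficient
        k += 1
    return k

print(coefficients_to_keep([10.0, -4.0, 3.0, 0.5, -0.2], e=1.0))  # 3
```

Here the three largest coefficients are kept, and the discarded energy is $0.5^2 + 0.2^2 = 0.29 \le 1$.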


3. Filter Banks [2, 7, 8]

In the transformation section, we presented the general theoretical basis of the classical compression methods. Next, we discuss in detail an efficient version of this technique known as the multiresolution decomposition/reconstruction approach. The Haar (D2), Daubechies (D4, D6, D8, D10, D12, and D16), and Coifman (C6 and C12) wavelets act as multiple-branch filter banks on the original image, transforming it into a sequence of independent base images while preserving the decorrelation property of the DCT. The number of branches $n_b$ of the filter bank can be calculated by the formula

$$n_b = \log_2 N - \log_2(\text{order of filter}) + 1.$$

For example, for a 256 × 256 image the 2D D2 wavelet transform (WT) is an 8-branch filter bank and the 2D D4 WT is a 7-branch filter bank (the 2D D4 WT has 4 tap weights, while the 2D D2 WT has 2). Each branch of the filter bank downsamples its input image by a factor of 2, so the dimensions of the output subimage are halved. The filter bank stops when the dimensions of the output subimage equal half the number of tap weights of the filter.
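The branch-count formula and the stopping rule can be cross-checked with a short sketch. It assumes power-of-two image sizes and tap counts (as for D2, D4, D8, and D16); the formula does not directly apply to filters such as D6 or C6, whose lengths are not powers of two. The function names are hypothetical.

```python
import math

def num_branches(image_size, taps):
    """n_b = log2(N) - log2(taps) + 1, for power-of-two N and tap counts."""
    for v in (image_size, taps):
        if v < 1 or v & (v - 1):
            raise ValueError("this sketch assumes powers of two")
    return int(math.log2(image_size)) - int(math.log2(taps)) + 1

def branches_by_halving(image_size, taps):
    """Count branches directly: each branch halves the subimage, and the bank
    stops once the subimage dimension equals half the number of taps."""
    size, branches = image_size, 0
    while size > taps // 2:
        size //= 2
        branches += 1
    return branches

for name, taps in (("D2", 2), ("D4", 4), ("D8", 8), ("D16", 16)):
    print(name, num_branches(256, taps), branches_by_halving(256, taps))
```

For a 256 × 256 image this reproduces the 8 branches of D2 and the 7 branches of D4 quoted above.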

The original image contains superimposed signals in all frequency bands. The first branch of the filter bank separates the original image into high-frequency and low-frequency subimages, which are orthogonal and hence independent. The low-frequency subimage still contains superimposed signals in several frequency bands. The second branch of the filter bank separates the correlated low-frequency subimage output by the first branch into orthogonal low-frequency and high-frequency subimages. This successive decomposition of the correlated low-frequency output subimage of each branch continues through the last branch of the filter bank. The result is a sequence of independent subimages, each containing 2D signals in a distinct frequency range.
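The branch-by-branch splitting of the low-frequency output can be illustrated in one dimension. The sketch below assumes the orthonormal Haar (D2) filters and a signal length that is a power of two; each call to the hypothetical `haar_step` plays the role of one branch, and only its low-pass output is passed on to the next branch. Because the filters are orthonormal, the total energy of all outputs equals that of the input.

```python
import math

def haar_step(signal):
    """One filter-bank branch: orthonormal Haar low-pass and high-pass outputs,
    each downsampled by 2."""
    s = 1.0 / math.sqrt(2.0)
    half = len(signal) // 2
    low = [s * (signal[2 * i] + signal[2 * i + 1]) for i in range(half)]
    high = [s * (signal[2 * i] - signal[2 * i + 1]) for i in range(half)]
    return low, high

def haar_decompose(signal):
    """Feed the low-pass output of each branch into the next branch until a
    single low-pass sample remains. Returns (final low-pass, [detail bands])."""
    low, details = list(signal), []
    while len(low) > 1:
        low, high = haar_step(low)
        details.append(high)
    return low, details

x = [9.0, 7.0, 3.0, 5.0, 6.0, 10.0, 2.0, 6.0]
low, details = haar_decompose(x)
energy_in = sum(v * v for v in x)
energy_out = sum(v * v for v in low) + sum(v * v for band in details for v in band)
print(len(details), round(energy_in, 6), round(energy_out, 6))
```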

3.1. TWO-DIMENSIONAL MULTIRESOLUTION ANALYSIS (MRA) DECOMPOSITION

Both the Daubechies and the Coifman WT generate an MRA decomposition of the Hilbert space $L^2(\mathbb{R}^2)$, the space of finite-energy 2D signals. A signal $f(x, y)$ is contained in $L^2(\mathbb{R}^2)$ if the following condition holds:

$$\iint |f(x, y)|^2\, dx\, dy < \infty.$$

Any finite image is contained in $L^2(\mathbb{R}^2)$, since the above integral always exists for bounded functions defined on a finite 2D interval $[a, b] \times [c, d]$. A 2D wavelet constitutes an MRA decomposition of $L^2(\mathbb{R}^2)$ if it consists of a pair of basis functions for


$L^2(\mathbb{R}^2)$, $(\phi(x, y), \psi(x, y))$, that have special algebraic properties and, as a result, impose a special algebraic structure on the space $L^2(\mathbb{R}^2)$. The basis function $\phi(x, y)$ is called the scaling function and the basis function $\psi(x, y)$ is called the wavelet function. Before presenting a detailed description of the 2D MRA generated by the Daubechies and Coifman WT, we note that both $\phi(x, y)$ and $\psi(x, y)$ are separable functions of the variables $x$ and $y$. That is, $\phi(x, y) = \phi_1(x)\, \phi_2(y)$, where $\phi_1(x)$ and $\phi_2(y)$ are 1D scaling functions that generate 1D MRA decompositions of the space of 1D finite-energy signals, $L^2(\mathbb{R})$; and $\psi(x, y) = \psi_1(x)\, \psi_2(y)$, where $\psi_1(x)$ and $\psi_2(y)$ are the 1D wavelet functions corresponding to the scaling functions $\phi_1(x)$ and $\phi_2(y)$, respectively. The 2D MRA decomposition of $L^2(\mathbb{R}^2)$ is defined as the tensor product of two independent 1D MRA decompositions, one acting on the $x$-axis and the other on the $y$-axis. The key function generating an MRA decomposition is the scaling function $\phi_1(x)$. A scaling function is the solution of the dilation equation

$$\phi_1(x) = \sum_k a_k\, \phi_1(2x - k),$$

where the coefficients $a_k$ satisfy

$$\sum_k a_k\, a_{k-2l} = 2\,\delta(l),$$

with $\delta(\cdot)$ the Kronecker delta. This property makes the set of integer translates $\phi_1(x - k)$ of $\phi_1(x)$ orthonormal:

$$\langle \phi_1(x - k), \phi_1(x - l) \rangle = \delta(k - l).$$

As such, the integer translates of the scaling function form a basis for a closed subspace $V_0$. If $\phi_1(x)$ is scaled by a factor of 2, the integer translates of $\phi_1(2x)$, namely $\phi_1(2x - k)$, also form a basis for another closed subspace $V_1$ of $L^2(\mathbb{R})$, which contains $V_0$:

$$V_0 \subset V_1.$$

If $\phi_1(x)$ is scaled by the factor $2^2$, the integer translates of $\phi_1(2^2 x)$, namely $\phi_1(2^2 x - k)$, form a basis for another closed subspace $V_2$ of $L^2(\mathbb{R})$ that properly contains both $V_0$ and $V_1$:

$$V_0 \subset V_1 \subset V_2.$$

In general, the set of integer translates of $\phi_1(x)$ scaled by a positive power of 2, $2^j$, forms the basis for a closed subspace $V_j$ of $L^2(\mathbb{R})$ that properly contains all the lower-order subspaces:

$$V_0 \subset V_1 \subset \cdots \subset V_j.$$

If we continue scaling $\phi_1(x)$ by increasing powers of 2, a ladder of subspaces is generated whose union, once closed, is the whole space $L^2(\mathbb{R})$:

$$L^2(\mathbb{R}) = \operatorname{clos}\Bigl(\bigcup_{j \in \mathbb{Z}} V_j\Bigr).$$


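Hence, any finite-energy 1D signal $f(x)$ can be approximated by a series expansion in the basis functions of these component spaces, the approximation becoming exact as $j \to \infty$:

$$f(x) \approx \sum_k c_{jk}\, \phi_{jk}(x),$$

where the coefficient $c_{jk}$ is given by

$$c_{jk} = \int f(x)\, \phi_{jk}(x)\, dx, \qquad \phi_{jk}(x) = 2^{j/2}\, \phi_1(2^j x - k).$$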

Since $V_0$ is a closed subspace of a Hilbert space and is contained in $V_1$, another closed subspace, there must exist a closed subspace $W_0$, the orthogonal complement of $V_0$ in $V_1$, such that the following relationship between $V_0$, $W_0$, and $V_1$ holds:

$$V_1 = V_0 \oplus W_0.$$

That is, the subspace $V_1$ has as a basis the union of the bases of the two subspaces on the right-hand side, and these bases are mutually orthogonal. This relationship is known as the decomposition of subspace $V_1$. The subspace $W_0$ is generated by the integer translates $\psi_1(x - k)$ of the wavelet function $\psi_1(x)$ corresponding to the scaling function $\phi_1(x)$. Since $W_0$ is orthogonal to $V_0$, for all $j, k \in \mathbb{Z}$,

$$\langle \phi_1(x - j), \psi_1(x - k) \rangle = 0.$$

Since the integer translates $\phi_1(2x - j)$ are also a basis for the closed subspace $V_1$, which is contained in the closed subspace $V_2$, there must exist a closed subspace $W_1$, the orthogonal complement of $V_1$ in $V_2$, and $V_2$ is the direct sum of $V_1$ and $W_1$:

$$V_2 = V_1 \oplus W_1.$$

Substituting the decomposition of $V_1$ into this expression gives

$$V_2 = V_0 \oplus W_0 \oplus W_1.$$

Since the closed subspace $V_2$ is contained in the closed subspace $V_3$, there exists an orthogonal complement $W_2$ of $V_2$ such that $V_3 = V_2 \oplus W_2$. Then

$$V_3 = V_0 \oplus W_0 \oplus W_1 \oplus W_2.$$

The decomposition relation

$$V_j = V_{j-1} \oplus W_{j-1}$$

can be extended to all orders of the $V$ subspaces. If one substitutes the direct-sum decomposition of every $V_j$ into

$$L^2(\mathbb{R}) = \operatorname{clos}\Bigl(\bigcup_{j \in \mathbb{Z}} V_j\Bigr),$$


then $L^2(\mathbb{R})$ has an alternative subspace composition:

$$L^2(\mathbb{R}) = \operatorname{clos}\Bigl(\bigoplus_{j \in \mathbb{Z}} W_j\Bigr).$$

This is known as the direct-sum composition of the space $L^2(\mathbb{R})$. This expression is more refined than the composition in terms of $V$ spaces, since its generating subspaces are mutually orthogonal. This is the special algebraic structure that an MRA basis creates in the Hilbert space $L^2(\mathbb{R})$: it produces the two sets of generating subspaces defined in the two previous relations, the latter composed of mutually independent (orthogonal) subspaces. The WT in $L^2(\mathbb{R})$ is the transformation obtained from these two generating expressions; that is, the WT of any 1D finite-energy signal $f(x)$ is the conversion of its series expansion relative to the subspaces $V_j$, $j \in \mathbb{Z}$,

$$f(x) \approx \sum_k c_{jk}\, \phi_{jk}(x),$$

to a series expansion relative to the subspaces $W_j$, $j \in \mathbb{Z}$:

$$f(x) = \sum_{j \in \mathbb{Z}} \sum_k d_{jk}\, \psi_{jk}(x).$$

We now extend the definition of a 1D MRA decomposition to the 2D Hilbert space $L^2(\mathbb{R}^2)$ generated by separable wavelet functions. Since the scaling function $\phi(x, y)$ is defined by $\phi_1(x)\, \phi_2(y)$, where $\phi_1(x)$ and $\phi_2(y)$ are 1D scaling functions in $L^2(\mathbb{R})$, the 2D wavelet function $\psi(x, y)$ is defined as $\psi_1(x)\, \psi_2(y)$, where $\psi_1(x)$ and $\psi_2(y)$ are the 1D wavelet functions corresponding to the scaling functions $\phi_1(x)$ and $\phi_2(y)$, respectively. The subspace $V_0^{(2)}$ is defined as the product space of the two 1D subspaces, written $V_0^{(x)} \otimes V_0^{(y)}$. The subspace $V_0^{(x)}$ is generated by the basis $\phi_1(x - k)$, and $V_0^{(y)}$ by the basis $\phi_2(y - k)$. The space $W_0^{(2)}$ is the product $W_0^{(x)} \otimes W_0^{(y)}$, where $W_0^{(x)}$ is generated by the basis $\psi_1(x - k)$ and $W_0^{(y)}$ by the basis $\psi_2(y - k)$. The decomposition of the space $V_1^{(x)} \otimes V_1^{(y)}$ is given by

$$\begin{aligned}
V_1^{(x)} \otimes V_1^{(y)} &= \bigl(V_0^{(x)} \oplus W_0^{(x)}\bigr) \otimes \bigl(V_0^{(y)} \oplus W_0^{(y)}\bigr) \\
&= \bigl(V_0^{(x)} \otimes V_0^{(y)}\bigr) \oplus \bigl(V_0^{(x)} \otimes W_0^{(y)}\bigr) \oplus \bigl(W_0^{(x)} \otimes V_0^{(y)}\bigr) \oplus \bigl(W_0^{(x)} \otimes W_0^{(y)}\bigr).
\end{aligned}$$

These four 2D subspaces are mutually orthogonal. The subspace $V_0^{(x)} \otimes V_0^{(y)}$ contains only low-frequency components in both variables $x$ and $y$; the subspace $V_0^{(x)} \otimes W_0^{(y)}$ contains low-frequency components in $x$ and high-frequency components in $y$; the subspace $W_0^{(x)} \otimes V_0^{(y)}$ contains high-frequency components in $x$ and low-frequency components in $y$; and the subspace $W_0^{(x)} \otimes W_0^{(y)}$ contains high-frequency components in both $x$ and $y$.


3.1.1. Decomposition Procedure

In our case, we are wavelet transforming a 256 × 256 image. By its dimension, the original image is contained in the subspace $V_8^{(x)} \otimes V_8^{(y)}$ of $L^2(\mathbb{R}^2)$. The WT of this image is the transformation of $V_8^{(x)} \otimes V_8^{(y)}$ onto the most refined direct-sum subspace possible for the WT used. In the case of the 2D Haar WT, this transformation is

$$V_8^{(x)} \otimes V_8^{(y)} \to \bigl(V_0^{(x)} \otimes V_0^{(y)}\bigr) \oplus \bigoplus_{j=0}^{7} \Bigl[\bigl(V_j^{(x)} \otimes W_j^{(y)}\bigr) \oplus \bigl(W_j^{(x)} \otimes V_j^{(y)}\bigr) \oplus \bigl(W_j^{(x)} \otimes W_j^{(y)}\bigr)\Bigr],$$

since 0 is the stopping order for the 2D Haar WT. For the 2D D4 WT, the transformation is

$$V_8^{(x)} \otimes V_8^{(y)} \to \bigl(V_1^{(x)} \otimes V_1^{(y)}\bigr) \oplus \bigoplus_{j=1}^{7} \Bigl[\bigl(V_j^{(x)} \otimes W_j^{(y)}\bigr) \oplus \bigl(W_j^{(x)} \otimes V_j^{(y)}\bigr) \oplus \bigl(W_j^{(x)} \otimes W_j^{(y)}\bigr)\Bigr],$$

since 1 is the stopping order for the 2D D4 WT. As described above, the target subspace for either transformation is composed of mutually orthogonal subspaces.

Each of these decomposition procedures takes place in a series of levels: 8 levels for the 2D Haar WT and 7 levels for the 2D D4 WT. Since both wavelet operators are separable, each level in turn consists of two distinct steps:

(1) decomposition of the subspace $V_k^{(x)}$ onto the orthogonal sum $V_{k-1}^{(x)} \oplus W_{k-1}^{(x)}$, and

(2) decomposition of the subspace $V_k^{(y)}$ onto the orthogonal sum $V_{k-1}^{(y)} \oplus W_{k-1}^{(y)}$,

for $1 \le k \le 8$ (2D Haar WT) and $2 \le k \le 8$ (2D D4 WT).

The first step in each transformation level is accomplished by applying the 1D wavelet operator to each row of a subimage representing the subspace $V_k^{(x)}$. The second step is accomplished by applying the 1D wavelet operator to each column of the subimage output by the first step; this second step decomposes the subspace $V_k^{(y)}$. We illustrate the first two levels of the decomposition:

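Level 1. The first step of the first level operates on the subspace $V_8^{(x)}$ in the product subspace $V_8^{(x)} \otimes V_8^{(y)}$ and creates an intermediate subspace:

$$V_8^{(x)} \otimes V_8^{(y)} \to \bigl(V_7^{(x)} \oplus W_7^{(x)}\bigr) \otimes V_8^{(y)} = \bigl(V_7^{(x)} \otimes V_8^{(y)}\bigr) \oplus \bigl(W_7^{(x)} \otimes V_8^{(y)}\bigr).$$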

This decomposition produces two orthogonal subspaces, labelled L: $V_7^{(x)} \otimes V_8^{(y)}$ and H: $W_7^{(x)} \otimes V_8^{(y)}$. The labels L and H denote low and high frequencies, respectively (Figure 1).

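The second step of the level 1 decomposition operates on the subspace $V_8^{(y)}$ contained in the product subspace $\bigl(V_7^{(x)} \oplus W_7^{(x)}\bigr) \otimes V_8^{(y)}$, creating the orthogonal subspace

$$\begin{aligned}
\bigl(V_7^{(x)} \oplus W_7^{(x)}\bigr) \otimes V_8^{(y)} &\to \bigl(V_7^{(x)} \oplus W_7^{(x)}\bigr) \otimes \bigl(V_7^{(y)} \oplus W_7^{(y)}\bigr) \\
&= \bigl(V_7^{(x)} \otimes V_7^{(y)}\bigr) \oplus \bigl(W_7^{(x)} \otimes V_7^{(y)}\bigr) \oplus \bigl(V_7^{(x)} \otimes W_7^{(y)}\bigr) \oplus \bigl(W_7^{(x)} \otimes W_7^{(y)}\bigr).
\end{aligned}$$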


Figure 1. 2D MRA decomposition (level 1, x-decomposition).

Figure 2. 2D MRA decomposition (level 1, y-decomposition).

This resulting subspace is composed of mutually orthogonal subspaces containing either low-frequency components in both $x$ and $y$, high-frequency components in both $x$ and $y$, or mixtures: low-frequency components in $x$ and high-frequency components in $y$, or high-frequency components in $x$ and low-frequency components in $y$. This decomposition produces four subspaces with the following labels (Figure 2):

LL: $V_7^{(x)} \otimes V_7^{(y)}$, HH: $W_7^{(x)} \otimes W_7^{(y)}$, HL: $W_7^{(x)} \otimes V_7^{(y)}$, LH: $V_7^{(x)} \otimes W_7^{(y)}$.

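Level 2. In this level, we deal with the LL subspace ($V_7^{(x)} \otimes V_7^{(y)}$), which corresponds to the 128 × 128 image in the upper left-hand corner of the 256 × 256 decomposed image. For $V_7^{(x)}$, the decomposition is

$$V_7^{(x)} \otimes V_7^{(y)} \to \bigl(V_6^{(x)} \oplus W_6^{(x)}\bigr) \otimes V_7^{(y)} = \bigl(V_6^{(x)} \otimes V_7^{(y)}\bigr) \oplus \bigl(W_6^{(x)} \otimes V_7^{(y)}\bigr).$$

For $V_7^{(y)}$, we have

$$\begin{aligned}
\bigl(V_6^{(x)} \oplus W_6^{(x)}\bigr) \otimes V_7^{(y)} &\to \bigl(V_6^{(x)} \oplus W_6^{(x)}\bigr) \otimes \bigl(V_6^{(y)} \oplus W_6^{(y)}\bigr) \\
&= \bigl(V_6^{(x)} \otimes V_6^{(y)}\bigr) \oplus \bigl(W_6^{(x)} \otimes V_6^{(y)}\bigr) \oplus \bigl(V_6^{(x)} \otimes W_6^{(y)}\bigr) \oplus \bigl(W_6^{(x)} \otimes W_6^{(y)}\bigr).
\end{aligned}$$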

This decomposition produces four orthogonal subspaces, labelled (Figure 3):

LLLL: $V_6^{(x)} \otimes V_6^{(y)}$, LLLH: $V_6^{(x)} \otimes W_6^{(y)}$, LLHL: $W_6^{(x)} \otimes V_6^{(y)}$, LLHH: $W_6^{(x)} \otimes W_6^{(y)}$.


Figure 3. 2D MRA decomposition (level 2).
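A single decomposition level can be sketched as below. This is an illustration under stated assumptions, not the authors' implementation: it uses the D4 filters normalized to unit energy ($h_k = c_k/\sqrt{2}$, with $c_k$ as in Section 4.1), periodic extension at the image borders (how the borders were handled is not stated), and the labels used above, with the first letter referring to $x$ (rows) and the second to $y$ (columns). Applying the hypothetical `analyze_2d` again to its LL output gives level 2 (LLLL, LLLH, LLHL, LLHH).

```python
import math

S3, R2 = math.sqrt(3.0), math.sqrt(2.0)
# Orthonormal D4 low-pass filter h_k = c_k / sqrt(2).
H = [(1 + S3) / (4 * R2), (3 + S3) / (4 * R2), (3 - S3) / (4 * R2), (1 - S3) / (4 * R2)]
# High-pass filter from the alternating flip g_k = (-1)^k h_{3-k}.
G = [(-1) ** k * H[3 - k] for k in range(4)]

def analyze_1d(x):
    """One 1D branch with periodic extension: filter, then keep every 2nd sample."""
    n = len(x)
    low = [sum(H[k] * x[(2 * i + k) % n] for k in range(4)) for i in range(n // 2)]
    high = [sum(G[k] * x[(2 * i + k) % n] for k in range(4)) for i in range(n // 2)]
    return low, high

def transpose(m):
    return [list(r) for r in zip(*m)]

def analyze_2d(img):
    """One decomposition level: x-step on every row, then y-step on every column.
    Returns the quarter-size subimages (LL, LH, HL, HH); first letter = x, second = y."""
    rows = [analyze_1d(r) for r in img]          # step 1: x-decomposition
    def y_step(block):                           # step 2: y-decomposition
        cols = [analyze_1d(col) for col in transpose(block)]
        return transpose([c[0] for c in cols]), transpose([c[1] for c in cols])
    ll, lh = y_step([r[0] for r in rows])        # low-pass in x
    hl, hh = y_step([r[1] for r in rows])        # high-pass in x
    return ll, lh, hl, hh

def energy(block):
    return sum(v * v for row in block for v in row)

img = [[float((3 * i + 5 * j) % 7) for j in range(8)] for i in range(8)]
ll, lh, hl, hh = analyze_2d(img)
print(len(ll), len(ll[0]), energy(img), energy(ll) + energy(lh) + energy(hl) + energy(hh))
```

Because each step is orthogonal, the four subimages together carry exactly the energy of the input image, which is the numerical counterpart of the mutually orthogonal subspaces above.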

3.1.2. Two-dimensional MRA Reconstruction

Image reconstruction is the inverse of image decomposition. The reconstruction process is the inverse WT of the subspace

$$\bigl(V_\lambda^{(x)} \otimes V_\lambda^{(y)}\bigr) \oplus \bigoplus_{j=\lambda}^{7} \Bigl[\bigl(V_j^{(x)} \otimes W_j^{(y)}\bigr) \oplus \bigl(W_j^{(x)} \otimes V_j^{(y)}\bigr) \oplus \bigl(W_j^{(x)} \otimes W_j^{(y)}\bigr)\Bigr]$$

onto the subspace $V_8^{(x)} \otimes V_8^{(y)}$, in which all the component 2D signals are superimposed over all frequency ranges. Here $\lambda = 0$ for the 2D Haar WT and $\lambda = 1$ for the 2D D4 WT; longer filters stop at a higher order, as set by the filter length in Section 3. Since image reconstruction is the inverse of image decomposition, all the steps of the decomposition process are inverted, and the levels are traversed from the coarsest to the finest. The x-decomposition step $V_k^{(x)} \to V_{k-1}^{(x)} \oplus W_{k-1}^{(x)}$ becomes $V_{k-1}^{(x)} \oplus W_{k-1}^{(x)} \to V_k^{(x)}$, and the y-decomposition step $V_k^{(y)} \to V_{k-1}^{(y)} \oplus W_{k-1}^{(y)}$ becomes $V_{k-1}^{(y)} \oplus W_{k-1}^{(y)} \to V_k^{(y)}$. The order of the two-step procedure in each level is also inverted: (1) y-reconstruction, then (2) x-reconstruction.
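The inverse step can be sketched in the same setting (D4 filters normalized to unit energy, periodic borders; all function names are hypothetical). Because one analysis level is an orthogonal transformation, its inverse applies the transposed filters: the sketch first undoes the y-step on the columns and then the x-step on the rows, as described above, and a round trip recovers the image up to rounding error.

```python
import math

S3, R2 = math.sqrt(3.0), math.sqrt(2.0)
H = [(1 + S3) / (4 * R2), (3 + S3) / (4 * R2), (3 - S3) / (4 * R2), (1 - S3) / (4 * R2)]
G = [(-1) ** k * H[3 - k] for k in range(4)]

def analyze_1d(x):
    """Periodic D4 analysis step: filter, then keep every second sample."""
    n = len(x)
    low = [sum(H[k] * x[(2 * i + k) % n] for k in range(4)) for i in range(n // 2)]
    high = [sum(G[k] * x[(2 * i + k) % n] for k in range(4)) for i in range(n // 2)]
    return low, high

def synthesize_1d(low, high):
    """Inverse step: the analysis matrix is orthogonal, so apply its transpose,
    i.e. upsample and add the filtered low- and high-pass contributions."""
    n = 2 * len(low)
    x = [0.0] * n
    for i in range(len(low)):
        for k in range(4):
            x[(2 * i + k) % n] += H[k] * low[i] + G[k] * high[i]
    return x

def transpose(m):
    return [list(r) for r in zip(*m)]

def analyze_2d(img):
    rows = [analyze_1d(r) for r in img]                      # x-step
    def y_step(block):                                       # y-step
        cols = [analyze_1d(col) for col in transpose(block)]
        return transpose([c[0] for c in cols]), transpose([c[1] for c in cols])
    ll, lh = y_step([r[0] for r in rows])
    hl, hh = y_step([r[1] for r in rows])
    return ll, lh, hl, hh

def synthesize_2d(ll, lh, hl, hh):
    """Inverted order: (1) y-reconstruction on the columns, (2) x-reconstruction on the rows."""
    def y_inverse(low, high):
        cols = [synthesize_1d(lo, hi) for lo, hi in zip(transpose(low), transpose(high))]
        return transpose(cols)
    low_x = y_inverse(ll, lh)     # rows that are low-pass in x
    high_x = y_inverse(hl, hh)    # rows that are high-pass in x
    return [synthesize_1d(lo, hi) for lo, hi in zip(low_x, high_x)]

img = [[float((3 * i + 5 * j) % 7) for j in range(8)] for i in range(8)]
rec = synthesize_2d(*analyze_2d(img))
print(max(abs(rec[i][j] - img[i][j]) for i in range(8) for j in range(8)))
```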

4. Generation of Daubechies and Coifman Wavelet Coefficients

4.1. DAUBECHIES WAVELETS [1, 2, 8]

The coefficients of the Daubechies filter bank of order $N$ must satisfy the $N$th-order dilation equation for the scaling function, which is the basis for the subspace $V_0$:

$$\phi(x) = \sum_{k=0}^{N-1} c_k\, \phi(2x - k),$$

and must simultaneously satisfy the orthogonality requirements for the scaling function:

$$\sum_{k=0}^{N-1} c_k\, c_{k-2m} = 2\,\delta(m).$$


These two requirements can be satisfied simultaneously by solving the matrix equation

$$\Phi = M \Phi$$

for an $(N-2) \times (N-2)$ matrix $M$ operating on the $(N-2)$-dimensional vector $\Phi = [\phi(1), \phi(2), \ldots, \phi(N-2)]^T$, the vector of Daubechies scaling-function values at the interior integer points $1, \ldots, N-2$ of $[0, N-1]$, which is the support interval of the Daubechies scaling function of order $N$. The vector $\Phi$ is an eigenvector of $M$ with eigenvalue 1. Therefore, expressing each element of $\Phi$ as a linear combination through the matrix product reproduces the dilation equation for $\phi(x)$ at the integers, while the entries of $M$ must in addition satisfy

$$\sum_{k=0}^{N-1} c_k\, c_{k-2m} = 2\,\delta(m).$$

The entries of $M$ are $[c_{2j-k}]$, $1 \le j, k \le N-2$, where $j$ is the row index and $k$ is the column index. In the case $N = 4$, the $2 \times 2$ matrix $M$ consists of the 4th-order scaling-function coefficients arranged by the index $2j - k$, with row index $1 \le j \le 2$ and column index $1 \le k \le 2$:

$$M = \begin{bmatrix} c_1 & c_0 \\ c_3 & c_2 \end{bmatrix}, \qquad \Phi = [\phi(1), \phi(2)]^T.$$

Solving these conditions yields the sequence

$$c_0 = \frac{1 + \sqrt{3}}{4}, \quad c_1 = \frac{3 + \sqrt{3}}{4}, \quad c_2 = \frac{3 - \sqrt{3}}{4}, \quad c_3 = \frac{1 - \sqrt{3}}{4}.$$

These elements of $M$ are the coefficients of the D4 scaling function, which satisfy both requirements. Since the preceding condition makes the integer translates of $\phi(x)$ orthonormal, the coefficients of the Daubechies wavelet function can be generated by applying the conversion formula, valid for all orthogonal wavelets, that converts the scaling-function sequence $\{c_k\}$ into the coefficient sequence $\{d_k\}$ of the dilation equation for the wavelet function $\psi(x)$:

$$\psi(x) = \sum_{k=0}^{N-1} d_k\, \phi(2x - k).$$

The conversion formula is $d_k = (-1)^k c_{N-1-k}$ (the alternating flip $d_k = (-1)^k c_{1-k}$, shifted so that $k$ runs from 0 to $N-1$). For the case $N = 4$, the wavelet coefficient sequence is $d_0 = c_3$, $d_1 = -c_2$, $d_2 = c_1$, and $d_3 = -c_0$.
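The D4 relations above can be checked numerically. The sketch below uses hypothetical helper code: it builds $c_k$ from the closed forms, forms $M$ with entries $c_{2j-k}$, computes the eigenvector of $M$ for eigenvalue 1 under the normalization $\phi(1) + \phi(2) = 1$ (an assumption used only to fix the scale), and verifies the orthogonality condition and the conversion $d_k = (-1)^k c_{N-1-k}$.

```python
import math

S3 = math.sqrt(3.0)
c = [(1 + S3) / 4, (3 + S3) / 4, (3 - S3) / 4, (1 - S3) / 4]
N = len(c)

def shifted_sum(a, b, m):
    """sum_k a_k b_{k-2m}, with coefficients outside 0..N-1 taken as zero."""
    return sum(a[k] * b[k - 2 * m] for k in range(len(a)) if 0 <= k - 2 * m < len(b))

# Matrix M with entries c_{2j-k}, 1 <= j, k <= 2, i.e. [[c1, c0], [c3, c2]].
M = [[c[2 * j - k] for k in (1, 2)] for j in (1, 2)]

# Eigenvector of M for eigenvalue 1: row 1 gives (c1 - 1) phi1 + c0 phi2 = 0.
ratio = (1 - c[1]) / c[0]            # phi(2) / phi(1)
phi1 = 1 / (1 + ratio)               # assumed normalization phi(1) + phi(2) = 1
phi = [phi1, phi1 * ratio]
M_phi = [M[0][0] * phi[0] + M[0][1] * phi[1], M[1][0] * phi[0] + M[1][1] * phi[1]]

# Wavelet coefficients from the conversion formula d_k = (-1)^k c_{N-1-k}.
d = [(-1) ** k * c[N - 1 - k] for k in range(N)]

print("sum c_k =", sum(c))
print("orthogonality:", [shifted_sum(c, c, m) for m in (0, 1)])   # ~ [2, 0]
print("phi(1), phi(2) =", phi, " M*phi =", M_phi)
print("d =", d)
```

Under this normalization the eigenvector comes out as $\phi(1) = (1+\sqrt{3})/2$ and $\phi(2) = (1-\sqrt{3})/2$.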


4.2. COIFMAN WAVELETS [2]

The necessary and sufficient condition for a function to generate MRA orthogonal wavelets is that the Fourier transform $\hat{\phi}$ of the scaling function

$$\phi(x) = \sum_{k=0}^{N-1} c_k\, \phi(2x - k)$$

satisfy the following equation in the frequency domain:

$$\hat{\phi}(2\xi) = m_0(\xi)\, \hat{\phi}(\xi),$$

where

$$m_0(\xi) = \frac{1}{2} \sum_k c_k\, e^{-ik\xi},$$

and $\{c_k\}$ is the coefficient sequence of the dilation equation. The function $m_0(\xi)$ must also satisfy the additional requirement for the orthogonality of the scaling function $\phi(x) = \sum_{k \in \mathbb{Z}} c_k\, \phi(2x - k)$, which is met if it satisfies the condition

$$|m_0(\xi)|^2 + |m_0(\xi + \pi)|^2 = 1 \quad \text{for all } \xi \in \mathbb{R}.$$
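The orthogonality condition on $m_0(\xi)$ can be tested on a grid of frequencies. In the sketch below (hypothetical helper names), the D4 coefficients are those of Section 4.1; the C6 coefficients are an assumption not taken from the paper: the standard closed-form 6-tap Coifman ("coif1") filter, rescaled so that $\sum_k c_k = 2$ to match the dilation equation used here. A non-orthogonal filter (the linear B-spline, $c = [1/2, 1, 1/2]$) is included for contrast.

```python
import cmath
import math

S3, S7 = math.sqrt(3.0), math.sqrt(7.0)
# D4 coefficients as in Section 4.1 (sum c_k = 2).
D4 = [(1 + S3) / 4, (3 + S3) / 4, (3 - S3) / 4, (1 - S3) / 4]
# Assumed C6 (6-tap Coifman) coefficients: closed-form coif1 filter rescaled to sum c_k = 2.
C6 = [v / 16 for v in (S7 - 3, 1 - S7, 14 - 2 * S7, 14 + 2 * S7, 5 + S7, 1 - S7)]

def m0(c, xi):
    """Low-pass filter m_0(xi) = (1/2) sum_k c_k exp(-i k xi)."""
    return 0.5 * sum(ck * cmath.exp(-1j * k * xi) for k, ck in enumerate(c))

def orthogonality_residual(c, samples=256):
    """Largest deviation of |m0(xi)|^2 + |m0(xi + pi)|^2 from 1 on a grid over [0, 2*pi)."""
    worst = 0.0
    for s in range(samples):
        xi = 2 * math.pi * s / samples
        total = abs(m0(c, xi)) ** 2 + abs(m0(c, xi + math.pi)) ** 2
        worst = max(worst, abs(total - 1.0))
    return worst

for name, coeffs in (("D4", D4), ("C6", C6), ("B-spline", [0.5, 1.0, 0.5])):
    print(name, abs(m0(coeffs, 0.0)), orthogonality_residual(coeffs))
```

Both wavelet filters satisfy $m_0(0) = 1$ and the orthogonality identity to rounding error, while the B-spline filter violates it (its residual reaches 0.5 at $\xi = \pi/2$).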

This property of the low-pass filter $m_0(\xi)$ is not restricted to Coifman wavelets; it must hold for the low-pass filter in the Fourier transform of the dilation equation for the scaling function of every MRA orthogonal wavelet. The Coifman wavelet differs from other MRA wavelets in that its low-pass filter $m_0(\xi)$ not only meets these requirements but also has the added property

$$\left.\frac{d^k}{d\xi^k}\,\hat{\phi}(2\xi)\right|_{\xi=0} = \left.\frac{d^k}{d\xi^k}\bigl[m_0(\xi)\,\hat{\phi}(\xi)\bigr]\right|_{\xi=0} = 0 \quad \text{for } k = 1, \ldots, L.$$

This produces vanishing moments up to order $L$ for the scaling function as well as for the wavelet function, i.e.,

$$\int x^k \phi(x)\, dx = 0 \ \ (1 \le k \le L), \qquad \int x^k \psi(x)\, dx = 0 \ \ (0 \le k \le L).$$

The vanishing moments of order $L$ of the scaling function (equivalently, the vanishing derivatives of $\hat{\phi}$ at the origin) ensure that the functional approximations by both the Coifman wavelet and the Coifman scaling function are highly accurate, since the coefficients of the scaling-function series expansion of $f(x)$ and those of the wavelet expansion of $f(x)$ are given by

$$\langle f, \phi \rangle = \int f(x)\, \phi(x)\, dx \quad \text{and} \quad \langle f, \psi \rangle = \int f(x)\, \psi(x)\, dx.$$


If $f(x)$ in both integrals is replaced by its Taylor series expansion, one obtains for the coefficients of the scaling-function series expansion

$$\langle f, \phi \rangle = \sum_{k=0}^{\infty} \frac{f^{(k)}(0)}{k!} \int x^k \phi(x)\, dx$$

and for the coefficients of the wavelet series expansion

$$\langle f, \psi \rangle = \sum_{k=0}^{\infty} \frac{f^{(k)}(0)}{k!} \int x^k \psi(x)\, dx.$$

If $\phi(x)$ and $\psi(x)$ both have vanishing moments of order $L$, then, apart from the term $f(0)$ contributed by $\int \phi(x)\, dx = 1$ in the scaling coefficient, the coefficients of both series expansions receive nonzero contributions only from the terms of the Taylor series of $f(x)$ of order higher than $L$.

This means that the wavelet coefficients in a finite series approximation of $f(x)$ depend only on the higher-order terms of the Taylor series of $f(x)$ and therefore converge rapidly. All orthogonal wavelets with continuous derivatives through order $L$ have vanishing moments of order $L$; however, only the Coifman wavelet has this property for the scaling function as well. Consequently, the series approximations of the low-frequency components of a signal converge as rapidly as those of the higher-frequency components, which have wavelet function expansions.
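The vanishing-moment conditions can be probed from the coefficients alone. In the hedged sketch below (hypothetical helper names; the C6 values are assumed to be the closed-form coif1 filter rescaled to $\sum_k c_k = 2$, not taken from the paper), the discrete sums $\sum_k (-1)^k k^p c_k$ vanish for $p = 0, 1$ for both D4 and C6, reflecting two vanishing wavelet moments. The first moment of the scaling function, $\int x\,\phi(x)\,dx = \tfrac{1}{2}\sum_k k\,c_k$ (which follows from the dilation equation with $\int \phi = 1$), is exactly 3 for C6, so the translate $\phi(x + 3)$ has a vanishing first moment; for D4 it equals $(3 - \sqrt{3})/2$, so no integer translate of the D4 scaling function has one.

```python
import math

S3, S7 = math.sqrt(3.0), math.sqrt(7.0)
D4 = [(1 + S3) / 4, (3 + S3) / 4, (3 - S3) / 4, (1 - S3) / 4]
# Assumed C6 (coif1) coefficients, rescaled so that sum c_k = 2.
C6 = [v / 16 for v in (S7 - 3, 1 - S7, 14 - 2 * S7, 14 + 2 * S7, 5 + S7, 1 - S7)]

def wavelet_discrete_moment(c, p):
    """sum_k (-1)^k k^p c_k; zero for p = 0..L exactly when psi has
    vanishing moments of orders 0..L."""
    return sum((-1) ** k * k ** p * ck for k, ck in enumerate(c))

def scaling_first_moment(c):
    """Integral of x * phi(x) dx, fixed by the dilation equation at (1/2) sum_k k c_k."""
    return 0.5 * sum(k * ck for k, ck in enumerate(c))

for name, coeffs in (("D4", D4), ("C6", C6)):
    print(name,
          [round(wavelet_discrete_moment(coeffs, p), 12) for p in range(3)],
          round(scaling_first_moment(coeffs), 12))
```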

EXAMPLE. Figure 4 shows the original image and its histogram. Figure 5 shows the transformed image for all levels, obtained by applying the D16 transform to the original image. Here we can observe the leptokurtosis (sharply peaked shape) of this histogram in comparison with the histogram of the original image.

Figure 4. Original 512 × 512 image of Aliki and Casey and its histogram.


Figure 5. D16 transformed image and its histogram.

5. Image Quantization

Image quantization is the conversion of the elements of the transformed image into a data representation that requires less storage than the original image. Quantization is achieved by the following two-step process: (1) normalization and (2) integer conversion. Since all the transform methods concentrate most of the image energy in the low-frequency regions (Figure 3), most of the data in the high-frequency regions have values that are negligible with respect to those of the low-frequency regions. These negligible values represent details of the original image of such fine resolution that the human visual system cannot distinguish between the perfectly reconstructed image and a reconstructed image in which only transformed values of significant magnitude were retained.

Normalization maps all negligible values to magnitudes below 1, so that they become 0 after integer conversion, while reducing the magnitude of the next level of lower high frequencies and preserving the magnitude of the lowest-frequency components (see LLLL in Figure 3). Normalization is achieved by dividing each element of the transformed image by a value known as a quantizer. It is important to select a quantizer that reduces all negligible values to 0.

Figure 6. Quantized image and its histogram.

The second step in quantization is the conversion of these normalized values to integers, which is achieved by the operation

$$f_q(x, y) = \operatorname{int}\left[\frac{f(x, y)}{q(x, y)}\right],$$

where $f(x, y)$ is the transformed coefficient, $q(x, y)$ is the quantizer, and $f_q(x, y)$ is the normalized value converted to an integer. Integer conversion turns the normalized data into a set of integer values that can be encoded so as to minimize their storage requirements. The quantizer must be adapted to the different frequency regions of the transformed image: in the low-frequency region it must have a relatively low value, and in the high-frequency region a relatively high value. This adaptation is accomplished by non-uniform quantization, which uses two parameters: (1) the shifting size and (2) the step size. The first parameter adjusts the value of the quantizer, for a given step size, to the different regions of the transformed image. The second parameter determines the range of quantization. The minimum shifting size of 1, together with a step size of 1, allows the quantizer to be adjusted for each element of the transformed image. In this research, we examined the effect of changes in the shifting and step sizes on the storage requirements. A highly localized quantization (small shifting size) concentrates the nonzero elements mainly in the low-frequency region (upper left-hand corner), but also increases the detail lost in quantization. A less localized quantization (larger shifting size) concentrates the nonzero elements less, but reduces the detail lost in quantization. In this study, the step size is fixed at 1, while shifting sizes from 4 to 128 are used.
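The exact rule that maps a coefficient's position to its quantizer value is not given here, so the following is only a sketch under a hypothetical rule: the quantizer starts at 1 in the upper left-hand (low-frequency) corner and grows by the step size for every `shift` pixels of the distance $x + y$ from that corner. Python's `int()` truncates toward zero, matching the integer conversion $f_q = \operatorname{int}[f/q]$. With this rule, a smaller shifting size makes the quantizer grow faster, so more high-frequency coefficients become 0, as described above.

```python
def quantizer(x, y, shift, step=1):
    """Hypothetical non-uniform quantizer q(x, y): 1 at the low-frequency corner,
    increased by `step` for every `shift` pixels of distance x + y."""
    return 1 + step * ((x + y) // shift)

def quantize(coeffs, shift, step=1):
    """f_q(x, y) = int(f(x, y) / q(x, y)); int() truncates toward zero."""
    return [[int(v / quantizer(x, y, shift, step)) for y, v in enumerate(row)]
            for x, row in enumerate(coeffs)]

def dequantize(fq, shift, step=1):
    """Approximate inverse used at decompression: multiply back by q(x, y)."""
    return [[v * quantizer(x, y, shift, step) for y, v in enumerate(row)]
            for x, row in enumerate(fq)]

def count_zeros(block):
    return sum(v == 0 for row in block for v in row)

# A synthetic 16 x 16 "transformed image" whose magnitudes decay away from the corner.
coeffs = [[20.0 / (1 + x + y) for y in range(16)] for x in range(16)]
for shift in (4, 128):
    print(shift, count_zeros(quantize(coeffs, shift)))
```

The zero count for a shifting size of 4 is much larger than for 128, illustrating the trade-off between concentration of nonzero elements and detail loss.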

Continuing the previous example, Figure 6 shows the effect of quantization on the transformed image. Here we can observe the shift of the histogram toward the left.

6. Partial Decomposition and Pseudo-quantization

If we use an MRA wavelet of any order, decompose an image through only 2 levels, and use only the low-pass coefficients to reconstruct it, the reconstructed image is extremely close to the original (very low MSE and very high SNR). Thus, by saving only a quarter of the coefficients in a 2-level decomposition, we can reproduce the original image with a very small error. This, however, gives a compression ratio of only 4:1, which is far too low to be of practical use. If, on the other hand, we complete all the levels of decomposition and retain only the low-pass coefficients for reconstruction, we achieve a phenomenal compression ratio, but too much detail is lost to produce an acceptable reconstruction.


The high MSE and low SNR that result from reconstructing the image from only a few low-pass coefficients show that, as one proceeds through all levels of decomposition, the high-pass components become increasingly important. A question therefore arises: can one perform a partial decomposition, save only a relatively small number of low-pass coefficients, discard all of the high-pass coefficients at the initial levels of decomposition, retain only the relatively few high-pass coefficients at the lower levels that contain significant information, and then apply a coding scheme (see Section 7) to reduce the storage of the retained coefficients, thereby achieving both excellent compression ratios and high-quality image reconstruction?

We experimented with this procedure using all the wavelets under consideration, decomposing each image into 3 levels. For the 512 × 512 image, this meant that the subimage containing the low-pass coefficients was the 64 × 64 block in the upper left-hand corner; for the 384 × 384 image, it was the 48 × 48 block in the upper left-hand corner. We then computed the mean and the standard deviation (SD) of the remaining portion of the decomposed image and thresholded this high-pass region at 1 SD: any coefficient in the region whose magnitude exceeded 1 SD was retained, while all the others were zeroed out. Next, we applied the same coding procedure to encode the low-pass coefficients and the nonzero thresholded high-pass coefficients. The reconstruction consisted of decoding the nonzero coefficients and applying a 3-level inverse transform.
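The partial decomposition and 1-SD thresholding described above can be sketched as follows. This is not the authors' code: for brevity it substitutes the simplest Daubechies filter, the orthonormal Haar (D2) wavelet, written directly in NumPy, and omits the entropy coding step. The helper names are hypothetical, the image is assumed square with a side divisible by 2**levels, and the threshold is read as "keep |c| > 1 SD of the high-pass region".

```python
import numpy as np

def haar_step(a):
    """One level of the orthonormal 2-D Haar (D2) transform.

    The upper-left quadrant of the result holds the low-pass coefficients;
    the other three quadrants hold the high-pass (detail) coefficients.
    """
    a = np.asarray(a, dtype=float)
    s = np.sqrt(2.0)
    a = np.hstack([(a[:, 0::2] + a[:, 1::2]) / s,   # filter rows: low-pass
                   (a[:, 0::2] - a[:, 1::2]) / s])  # filter rows: high-pass
    return np.vstack([(a[0::2] + a[1::2]) / s,      # filter columns: low-pass
                      (a[0::2] - a[1::2]) / s])     # filter columns: high-pass

def inverse_haar_step(c):
    """Undo haar_step: invert the column filtering, then the row filtering."""
    c = np.asarray(c, dtype=float)
    s, h, w = np.sqrt(2.0), c.shape[0] // 2, c.shape[1] // 2
    a = np.empty_like(c)
    a[0::2], a[1::2] = (c[:h] + c[h:]) / s, (c[:h] - c[h:]) / s
    out = np.empty_like(a)
    out[:, 0::2], out[:, 1::2] = (a[:, :w] + a[:, w:]) / s, (a[:, :w] - a[:, w:]) / s
    return out

def decompose(img, levels):
    """Multi-level decomposition that re-splits the low-pass corner each level."""
    c = np.asarray(img, dtype=float).copy()
    n = c.shape[0]
    for _ in range(levels):
        c[:n, :n] = haar_step(c[:n, :n])
        n //= 2
    return c

def reconstruct(c, levels):
    """Inverse of decompose, starting from the deepest (smallest) level."""
    c = c.copy()
    n = c.shape[0] >> (levels - 1)
    for _ in range(levels):
        c[:n, :n] = inverse_haar_step(c[:n, :n])
        n *= 2
    return c

def pseudo_quantize(c, levels):
    """Keep the low-pass corner; zero high-pass coefficients with |value| <= 1 SD."""
    n_low = c.shape[0] >> levels              # 512 -> 64, 384 -> 48 for 3 levels
    high = np.ones(c.shape, dtype=bool)
    high[:n_low, :n_low] = False              # mask of the high-pass region
    sd = c[high].std()
    out = c.copy()
    out[high & (np.abs(c) <= sd)] = 0.0
    return out
```

For `levels=3`, the retained low-pass corner is `512 >> 3 = 64` pixels square for the 512 × 512 image and `384 >> 3 = 48` for the 384 × 384 image, matching the subimage sizes given above. A reconstruction is then `reconstruct(pseudo_quantize(decompose(img, 3), 3), 3)`.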

7. Image Encoding [5, 6]

Image encoding is the final step in the image compression process, in which the integer values of the quantized image are converted to binary symbols that require, on average, less storage than the integer representation. Typically, zero and positive integers up to 255 require only 1 byte of storage, while all other integer values require at least 2 bytes. Binary symbols are sequences of 0s and 1s, each requiring only a single bit of storage; thus, the number of bits needed to store a binary symbol equals the number of 0s and 1s that compose it. The object of encoding is to assign short binary symbols to integer values with a relatively high probability (frequency of occurrence) and longer binary symbols to integer values with a relatively low probability.

There are a number of different encoding schemes available. This study deals with the variable-length coding (VLC) and run-length coding (RLC) methods. The VLC method assigns to each integer value a binary symbol whose length is a function of that value's probability. The RLC method condenses runs of 0 values into special symbols called tokens, whose binary representation requires less storage on average than assigning a single bit to each of the consecutive 0s. The VLC method is applied to the nonzero values, while the RLC method is applied to the 0 values. In this study, a combination of the two methods was used, taking advantage of the fact that quantization concentrates the nonzero integer values in a small region in the upper left-hand corner of the image.

Figure 7. Reconstructed image and its histogram.

In the case of the VLC method, a histogram analysis was first performed on the quantized image to determine the probability of every integer value. The elements with the highest probabilities were assigned the shortest binary symbols; the elements with the third, fourth, fifth, and sixth highest probabilities were assigned the binary symbols 00, 01, 10, and 11, and so on, with longer binary symbols assigned to the values with the lowest probabilities. Because the average number of bits per binary symbol remained close to the entropy of the encoded image, the long binary symbols assigned to low-probability integers did not significantly increase the average storage requirement. The RLC method, in turn, reduces the number of 0s. If, for example, the integer sequence 1 3 2 4 0 0 0 0 0 0 0 0 0 3 4 5 occurs, the token (0 : 9) is assigned to the 9 consecutive 0s, and the resulting representation is 1 3 2 4 (0 : 9) 3 4 5. The token (0 : 9) is then converted to a binary symbol using the VLC method. Once the quantized integers and the tokens for the runs of 0s have been encoded, the data can be stored in a binary stream in which each binary symbol is distinguished from its adjacent symbols by a special prefix code. The quantized image can be recovered without loss by reversing this coding operation.
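The combined RLC/VLC scheme can be illustrated with a short Python sketch. It uses Huffman coding as the variable-length code; this is a standard choice swapped in for illustration and not necessarily the symbol assignment the authors used. Because Huffman codes are prefix-free, the sketch needs no separate separator code. Runs of zeros become hypothetical `(0, run_length)` tuples that are then coded like any other symbol.

```python
import heapq
from collections import Counter
from itertools import count

def run_length_zeros(values):
    """RLC: replace each run of consecutive 0s with a (0, run_length) token."""
    out, run = [], 0
    for v in values:
        if v == 0:
            run += 1
            continue
        if run:
            out.append((0, run))
            run = 0
        out.append(v)
    if run:
        out.append((0, run))
    return out

def huffman_code(symbols):
    """VLC: build a prefix-free code in which frequent symbols get short codes."""
    freq = Counter(symbols)
    if len(freq) == 1:                      # degenerate case: one distinct symbol
        return {next(iter(freq)): "0"}
    tiebreak = count()                      # unique tie-breaker, so dicts are never compared
    heap = [(f, next(tiebreak), {s: ""}) for s, f in freq.items()]
    heapq.heapify(heap)
    while len(heap) > 1:
        f1, _, c1 = heapq.heappop(heap)     # the two least frequent subtrees
        f2, _, c2 = heapq.heappop(heap)
        merged = {s: "0" + c for s, c in c1.items()}
        merged.update({s: "1" + c for s, c in c2.items()})
        heapq.heappush(heap, (f1 + f2, next(tiebreak), merged))
    return heap[0][2]

def encode(values):
    """Run-length code the zeros, then Huffman-code all tokens into a bit string."""
    tokens = run_length_zeros(values)
    code = huffman_code(tokens)
    return "".join(code[t] for t in tokens), code

def decode(bits, code):
    """Reverse the coding: read prefix-free codewords and expand zero-run tokens."""
    inverse = {c: s for s, c in code.items()}
    out, buf = [], ""
    for b in bits:
        buf += b
        if buf in inverse:
            sym = inverse[buf]
            out.extend([0] * sym[1] if isinstance(sym, tuple) else [sym])
            buf = ""
    return out
```

Applied to the example sequence above, `run_length_zeros` yields `[1, 3, 2, 4, (0, 9), 3, 4, 5]`, and `decode(*encode(seq))` returns the original sequence, i.e., the coding is lossless.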

Continuation from the previous example: Figure 7 shows the decompressed

(reconstructed) image using the entire sequence of decoding, dequantization, and

inverse transformation. We observe here that the histogram of the reconstructed

image closely resembles the histogram of the original image.

8. Image Comparison Methods [7-9]

The fidelity of an image after reconstruction is an important aspect of image compression. Lossless compression methods produce images that are an exact replica of the original image. Lossy compression methods, which remove visual redundancies, produce decompressed images that have lost visual information during the compression process. One measure of reconstructed-image fidelity is the mean square error (MSE) between the reconstructed image $f_r(x, y)$ and the original image $f(x, y)$, that is, the average of $(f(x, y) - f_r(x, y))^2$ over all $M \times N$ pixels. The smaller the MSE, the closer the reconstructed image is to the original image. The signal-to-noise ratio (SNR) of the reconstructed image is also an important index; it is computed using

$$\mathrm{SNR} = \frac{\sum_{y=0}^{M-1} \sum_{x=0}^{N-1} f(x, y)^2}{\sum_{y=0}^{M-1} \sum_{x=0}^{N-1} \bigl(f(x, y) - f_r(x, y)\bigr)^2}.$$

The closer the reconstructed image is to the original image, the higher the SNR. However, the main difficulty with using the MSE as a measure of image quality is that in many instances its values do not match the quality perceived by the human visual system. In addition to the MSE and SNR, the compression ratio (CR) is computed. The CR measures the size of the compressed image relative to that of the original image; expressed as a percentage, it is

$$\mathrm{CR}\,(\%) = \frac{\text{size of original image (bytes)} - \text{size of compressed image (bytes)}}{\text{size of original image (bytes)}} \times 100.$$

There are several statistical measures that give quantitative information about an

image. These statistical measures include the mean value, the maximum value, the

minimum value, the entropy, and the standard deviation.
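The measures defined in this section can be sketched in Python as follows. This is a minimal illustration, not the authors' code. It assumes the images are NumPy arrays, reports the SNR as the plain power ratio of the formula above (not in decibels, which is consistent with the magnitudes in Tables I-III), and computes the entropy as the first-order (histogram) entropy in bits per pixel. The function names are hypothetical. `compression_ratio` also returns the "original/compressed" ratio, the "x : 1" form listed in the tables.

```python
import numpy as np

def mse(f, fr):
    """Mean square error between the original f and the reconstruction fr."""
    f, fr = np.asarray(f, dtype=float), np.asarray(fr, dtype=float)
    return float(np.mean((f - fr) ** 2))

def snr(f, fr):
    """SNR as a plain power ratio: sum f^2 / sum (f - fr)^2 (not in dB)."""
    f, fr = np.asarray(f, dtype=float), np.asarray(fr, dtype=float)
    return float(np.sum(f ** 2) / np.sum((f - fr) ** 2))

def compression_ratio(original_bytes, compressed_bytes):
    """Return (ratio, percent): original/compressed and the CR (%) formula."""
    ratio = original_bytes / compressed_bytes
    percent = (original_bytes - compressed_bytes) / original_bytes * 100
    return ratio, percent

def entropy(img):
    """First-order (histogram) entropy of an image, in bits per pixel."""
    _, counts = np.unique(np.asarray(img).ravel(), return_counts=True)
    p = counts / counts.sum()
    return float(-np.sum(p * np.log2(p)))
```

Assuming an 8-bit grayscale original (one byte per pixel), `compression_ratio(512 * 512, 34345)` gives about 7.63 : 1 and 86.90%, which matches the DCT row of Table I.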

9. Results

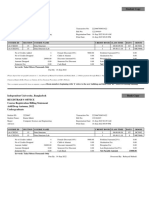

Tables I-III summarize the results obtained from all the image compression measures used in this study. The entropy of the 512 × 512 input image was 7.316, while that of the 384 × 384 image was 7.564. The shifting/step ratio used in the non-uniform scalar quantization was 4/1.

Table I. Comparison of the compressed results for the 512 × 512 image

| WT | Entropy of the transformed image | Compressed size (bytes) | CR | CR (%) | Entropy of the inverse image | MSE | SNR |
|---|---|---|---|---|---|---|---|
| DCT | 0.24 | 34345 | 7.63 : 1 | 86.90 | 7.48 | 61.21 | 220.85 |
| D2 | 0.17 | 34093 | 7.69 : 1 | 87.00 | 7.65 | 95.61 | 141.38 |
| D4 | 0.32 | 31818 | 8.24 : 1 | 87.86 | 7.66 | 65.80 | 205.43 |
| D8 | 0.35 | 31321 | 8.37 : 1 | 88.05 | 7.67 | 57.30 | 235.91 |
| D16 | 0.80 | 31816 | 8.24 : 1 | 87.87 | 7.68 | 55.23 | 244.77 |


Table II. Comparison of the compressed results for the 512 × 512 image with pseudo-quantization

| WT | Entropy of the transformed image | Compressed size (bytes) | CR | CR (%) | Entropy of the inverse image | MSE | SNR |
|---|---|---|---|---|---|---|---|
| D2-SQ | 2.34 | 37041 | 7.07 : 1 | 85.87 | 7.57 | 178.55 | 75.71 |
| D4-SQ | 2.10 | 36515 | 7.18 : 1 | 86.07 | 7.63 | 125.91 | 107.36 |
| D8-SQ | 1.57 | 36444 | 7.19 : 1 | 86.10 | 7.63 | 107.62 | 125.60 |
| D16-SQ | 1.76 | 36652 | 7.15 : 1 | 86.02 | 7.64 | 103.27 | 130.89 |

Table III. Comparison of the compressed results for the 384 × 384 image

| WT | Entropy of the transformed image | Compressed size (bytes) | CR | CR (%) | Entropy of the inverse image | MSE | SNR |
|---|---|---|---|---|---|---|---|
| D6 | 0.5416 | 20912 | 7.05 : 1 | 85.82 | 7.67 | 57.59 | 232.05 |
| D12 | 0.5750 | 20910 | 7.05 : 1 | 85.82 | 7.68 | 52.59 | 254.11 |
| C6 | 0.4153 | 21035 | 7.01 : 1 | 85.74 | 7.67 | 59.39 | 225.01 |
| C12 | 0.5174 | 20637 | 7.15 : 1 | 86.01 | 7.67 | 58.02 | 230.34 |

10. Discussion and Conclusion

10.1. EVALUATION OF THE COMPLETE DECOMPOSITION, QUANTIZATION, AND ENCODING

The evaluation of the DCT and the wavelet transforms was based on (1) compression ratio, (2) quality of the reconstructed image (MSE and SNR), and (3), to a lesser extent, computational speed. As to compression ratio, D2, D4, D8, and D16 all achieved a better compression ratio than the DCT; with respect to CR, therefore, the Daubechies wavelets outperformed the DCT. More specifically, D8 and D16 had the best compression ratios (about 88%).

Regarding the quality of the reconstructed image, D2 and D4 had higher MSE values than the DCT: 95.61 for D2 and 65.80 for D4, versus 61.21 for the DCT. The difference between the DCT and D4 is small (+4.59), whereas the difference between the DCT and D2 is considerable (+34.40); in fact, the MSE for D2 indicates a low-quality reconstruction. D8 and D16 had lower (better) MSE values than the DCT, by 3.91 and 5.98, respectively. Both of these values are in the range of good-quality reconstruction.
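The differences quoted above can be checked directly against Table I. The short script below only recomputes them, together with the transform that has the highest CR (%), from the tabulated values; no new data are involved.

```python
# MSE and CR (%) for the 512 x 512 image, copied from Table I
mse_table = {"DCT": 61.21, "D2": 95.61, "D4": 65.80, "D8": 57.30, "D16": 55.23}
cr_pct = {"DCT": 86.90, "D2": 87.00, "D4": 87.86, "D8": 88.05, "D16": 87.87}

# Positive difference = higher (worse) MSE than the DCT; negative = lower (better)
diff_vs_dct = {wt: round(v - mse_table["DCT"], 2)
               for wt, v in mse_table.items() if wt != "DCT"}
best_cr = max(cr_pct, key=cr_pct.get)  # transform with the highest CR (%)

print(diff_vs_dct)
print("best CR:", best_cr)
```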

Concerning the transforms applied to the 384 × 384 image, the comparative results for D6, D12, C6, and C12 in compression ratio, MSE, and SNR follow the same pattern as those for the transforms applied to the 512 × 512 image. Just as D16 did for the 512 × 512 image, D12 had the best performance, with both the lowest MSE and the highest SNR, while C12 had the best compression ratio. However, in all three categories the results were so similar that none of the transforms could be categorically listed as superior. Because the Coifman scaling function has additional vanishing moments (of order L), the Coifman wavelets were expected to have a decided advantage over D6 and D12, but D6 and D12 performed as well as the Coifman wavelets.

10.2. EVALUATION OF THE PARTIAL DECOMPOSITION AND PSEUDO-QUANTIZATION

The compression ratios of the four wavelets applied in this study were comparable to those obtained when the complete decomposition, quantization, and encoding were applied. Limiting the decomposition to 3 levels, thresholding the high-pass portions of the 3-level transformed image at 1 SD, and encoding together produced compression ratios above 85%. However, by the more important criteria of low MSE and high SNR, this method produced decidedly poorer results: the MSE ranged from 103.27 (D16) to 178.55 (D2), and the SNR ranged from 75.71 (D2) to 130.89 (D16). These values are not in the range of acceptable quality. The decision to zero out all high-pass coefficients with a magnitude of less than 1 SD probably caused us to lose significant detail. Had quantization been applied to the coefficients in the high-pass regions instead of simple thresholding, the MSE and SNR values could conceivably have been much better.

An interesting outcome of this study emerged from tabulating the image entropy at each step of the compression and reconstruction process. As Tables I-III show, the Daubechies wavelets had higher entropy levels than the DCT at almost every stage of compression and reconstruction (the exception being the transformed-image entropy of D2 in Table I). A transform that keeps entropy low while still yielding low MSE and high SNR is an important asset in data compression applications. Regarding computational time, the code for all Daubechies wavelets executed considerably faster than the code for the DCT, by at least several orders of magnitude.

References

1. Chui, C. K.: An Introduction to Wavelets, Academic Press, New York, 1992.

2. Daubechies, I.: Ten Lectures on Wavelets, SIAM, Philadelphia, PA, 1992.

3. DeVore, R., Jawerth, B., and Lucier, B.: Image compression through wavelet transform coding, IEEE Trans. Inform. Theory (1992), 719-746.

4. Friesen, K., Panagiotacopulos, N., Lertsuntivit, S., and Lee, J.: Wavelet image compression: A comparative study, in: Advanced and Intelligent Systems: Concept, Tools, and Applications, Kluwer Academic Publishers, Dordrecht, 1998, pp. 265-276.

5. Jain, A. K.: Fundamentals of Digital Image Processing, Prentice-Hall, Englewood Cliffs, NJ, 1989.

6. Nelson, M. and Gailly, J. L.: The Data Compression Book, M&T Books, New York, 1996.


7. Vetterli, M. and Kovačević, J.: Wavelets and Subband Coding, Prentice-Hall, Englewood Cliffs, NJ, 1995.

8. Walter, G. G.: Wavelets and Other Orthogonal Systems with Applications, CRC Press, Boca Raton, FL, 1994.

9. Weeks, A. R.: Fundamentals of Electronic Image Processing, SPIE Press, Bellingham, 1996.
