Manual Testing Activity

Uploaded by

ஸ்ரீதர் ரெட்டி

In our project, we receive the requirements as use cases and business rules documents.

Once we receive the requirements, we identify the testable requirements (the requirements we need to test in the current release) in an Excel sheet and review them. After the client review, the client signs off the requirements phase. We then export the requirements into the Requirements tab of QC and start preparing test cases for the testable requirements in an Excel sheet. We review the test cases, and after the client review we consolidate all of them into a single Excel sheet and export it to the Test Plan tab of QC.

In the Test Plan tab we map each test case to the requirement it was designed from, and we generate the Traceability Matrix Report (TMR) to verify that every requirement is covered by test cases. Once the TMR shows 100% coverage, the client signs off the test case phase.

Next comes test data preparation (test data is the data or values we use to test the application). When the test data is ready, we move to execution. We execute the test cases in the Test Lab tab of QC. The test cases in the Test Plan tab are listed in alphabetical order, so we pull them into the Test Lab tab in a sequential, executable order; we call this the test lab setup.

We then wait for the application. When the development team has built the application and deployed it into the testing environment, we start executing the test cases in the Test Lab tab. During execution we compare the actual result of the application with the expected result. If the actual result matches the expected result, we mark the status of the step as PASS.
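The traceability check described above (every requirement mapped to at least one test case, with 100% coverage before sign-off) can be sketched in a few lines. This is only an illustration, not how QC computes its report: the requirement IDs, test case IDs, and function names below are hypothetical.

```python
def traceability_matrix(requirements, test_cases):
    """Map each requirement ID to the test case IDs that cover it.

    requirements: list of requirement IDs (hypothetical IDs in this sketch)
    test_cases:   dict of test case ID -> list of requirement IDs it was designed from
    """
    matrix = {req_id: [] for req_id in requirements}
    for tc_id, covered_reqs in test_cases.items():
        for req_id in covered_reqs:
            if req_id in matrix:
                matrix[req_id].append(tc_id)
    return matrix


def coverage_percent(matrix):
    """Percentage of requirements that have at least one mapped test case."""
    if not matrix:
        return 0.0
    covered = sum(1 for tcs in matrix.values() if tcs)
    return 100.0 * covered / len(matrix)


def uncovered(matrix):
    """Requirement IDs with no mapped test case."""
    return [req_id for req_id, tcs in matrix.items() if not tcs]


# Hypothetical sample data: REQ-3 has no test case yet.
requirements = ["REQ-1", "REQ-2", "REQ-3"]
test_cases = {"TC-01": ["REQ-1"], "TC-02": ["REQ-1", "REQ-2"]}

matrix = traceability_matrix(requirements, test_cases)
print(uncovered(matrix))         # requirements that still need test cases
print(coverage_percent(matrix))  # sign-off would need this to reach 100.0
```

Listing the uncovered requirements alongside the percentage shows which test cases are still missing before the test case phase can be signed off.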
If the actual result does not match the expected result, we mark the status of the step as FAIL. We call the failed step a DEFECT and report it to the development team directly from the failed step of the test case in the Test Lab tab of QC. Once the developer has accepted and fixed the defect, we retest the fix in the testing environment to check that it works. If it works in the testing environment, we do not close the defect yet; we change its status to UAT migrated-in.

Once we have executed all the test cases in the testing environment and all the defects are UAT migrated-in, the application is deployed into the UAT environment. There we execute all the test cases again and retest the migrated defects. If a migrated defect also works in the UAT environment, we close it.

When all the defects are closed in the UAT environment, we perform regression testing to verify that the fixes have not affected other parts of the application. After regression testing we move to test closure: the project manager signs off, and the technical team deploys the application into the production environment.
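The defect flow described above can be pictured as a small state machine. The sketch below is an assumption-laden illustration, not QC's actual workflow: only "UAT migrated-in" and closing are named as statuses in the text, so the `NEW`, `ACCEPTED`, and `FIXED` names, the event strings, and the function names are hypothetical. It models only the path where each retest succeeds, because the text does not say what happens when a retest fails.

```python
from enum import Enum


class DefectStatus(Enum):
    NEW = "New"                          # assumed name for a freshly reported defect
    ACCEPTED = "Accepted"                # developer accepted the defect
    FIXED = "Fixed"                      # developer fixed the defect
    UAT_MIGRATED_IN = "UAT migrated-in"  # retest passed in the testing environment
    CLOSED = "Closed"                    # retest passed in the UAT environment


# (current status, event) -> next status; event names are hypothetical.
TRANSITIONS = {
    (DefectStatus.NEW, "developer_accepts"): DefectStatus.ACCEPTED,
    (DefectStatus.ACCEPTED, "developer_fixes"): DefectStatus.FIXED,
    (DefectStatus.FIXED, "retest_passes_in_test_env"): DefectStatus.UAT_MIGRATED_IN,
    (DefectStatus.UAT_MIGRATED_IN, "retest_passes_in_uat_env"): DefectStatus.CLOSED,
}


def advance(status, event):
    """Return the next status, or raise ValueError if the step is not allowed."""
    try:
        return TRANSITIONS[(status, event)]
    except KeyError:
        raise ValueError(
            f"event {event!r} is not allowed from status {status.value!r}"
        ) from None


def ready_for_uat(statuses):
    """True when every defect is UAT migrated-in, the condition for UAT deployment."""
    return all(s is DefectStatus.UAT_MIGRATED_IN for s in statuses)


# Walk one defect through the whole flow.
status = DefectStatus.NEW
for event in (
    "developer_accepts",
    "developer_fixes",
    "retest_passes_in_test_env",
    "retest_passes_in_uat_env",
):
    status = advance(status, event)
print(status.value)
```

Keeping the allowed steps in one table makes the key rule explicit: a defect fixed in the testing environment cannot be closed directly, it has to pass through UAT migrated-in and be retested in UAT first.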

You might also like

- Framework in SeleniumDocument3 pagesFramework in Seleniumஸ்ரீதர் ரெட்டி0% (1)

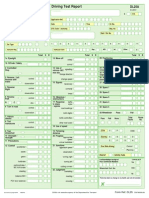

- dl25 Driving Test Report Form PDFDocument7 pagesdl25 Driving Test Report Form PDFஸ்ரீதர் ரெட்டி0% (1)

- InsuranceDocument142 pagesInsuranceஸ்ரீதர் ரெட்டிNo ratings yet

- End-To-End Process of Manual TestingDocument2 pagesEnd-To-End Process of Manual Testingஸ்ரீதர் ரெட்டிNo ratings yet

- Manual Testing Help Ebook by Software Testing HelpDocument22 pagesManual Testing Help Ebook by Software Testing Helpvarshash24No ratings yet

- Manual Testing Real TimeDocument18 pagesManual Testing Real TimePrasad Ch80% (5)

- Branch Statement Path CoverageDocument3 pagesBranch Statement Path CoverageazahirNo ratings yet

- G53 QAT10 PDF 6 UpDocument4 pagesG53 QAT10 PDF 6 UpRaviTeja MuluguNo ratings yet

- Harvard Resume GuideDocument18 pagesHarvard Resume GuidePrashant TalwarNo ratings yet

- The Subtle Art of Not Giving a F*ck: A Counterintuitive Approach to Living a Good LifeFrom EverandThe Subtle Art of Not Giving a F*ck: A Counterintuitive Approach to Living a Good LifeRating: 4 out of 5 stars4/5 (5783)

- The Yellow House: A Memoir (2019 National Book Award Winner)From EverandThe Yellow House: A Memoir (2019 National Book Award Winner)Rating: 4 out of 5 stars4/5 (98)

- Never Split the Difference: Negotiating As If Your Life Depended On ItFrom EverandNever Split the Difference: Negotiating As If Your Life Depended On ItRating: 4.5 out of 5 stars4.5/5 (838)

- Shoe Dog: A Memoir by the Creator of NikeFrom EverandShoe Dog: A Memoir by the Creator of NikeRating: 4.5 out of 5 stars4.5/5 (537)

- The Emperor of All Maladies: A Biography of CancerFrom EverandThe Emperor of All Maladies: A Biography of CancerRating: 4.5 out of 5 stars4.5/5 (271)

- Hidden Figures: The American Dream and the Untold Story of the Black Women Mathematicians Who Helped Win the Space RaceFrom EverandHidden Figures: The American Dream and the Untold Story of the Black Women Mathematicians Who Helped Win the Space RaceRating: 4 out of 5 stars4/5 (890)

- The Little Book of Hygge: Danish Secrets to Happy LivingFrom EverandThe Little Book of Hygge: Danish Secrets to Happy LivingRating: 3.5 out of 5 stars3.5/5 (399)

- Team of Rivals: The Political Genius of Abraham LincolnFrom EverandTeam of Rivals: The Political Genius of Abraham LincolnRating: 4.5 out of 5 stars4.5/5 (234)

- Grit: The Power of Passion and PerseveranceFrom EverandGrit: The Power of Passion and PerseveranceRating: 4 out of 5 stars4/5 (587)

- Devil in the Grove: Thurgood Marshall, the Groveland Boys, and the Dawn of a New AmericaFrom EverandDevil in the Grove: Thurgood Marshall, the Groveland Boys, and the Dawn of a New AmericaRating: 4.5 out of 5 stars4.5/5 (265)

- A Heartbreaking Work Of Staggering Genius: A Memoir Based on a True StoryFrom EverandA Heartbreaking Work Of Staggering Genius: A Memoir Based on a True StoryRating: 3.5 out of 5 stars3.5/5 (231)

- On Fire: The (Burning) Case for a Green New DealFrom EverandOn Fire: The (Burning) Case for a Green New DealRating: 4 out of 5 stars4/5 (72)

- Elon Musk: Tesla, SpaceX, and the Quest for a Fantastic FutureFrom EverandElon Musk: Tesla, SpaceX, and the Quest for a Fantastic FutureRating: 4.5 out of 5 stars4.5/5 (474)

- The Hard Thing About Hard Things: Building a Business When There Are No Easy AnswersFrom EverandThe Hard Thing About Hard Things: Building a Business When There Are No Easy AnswersRating: 4.5 out of 5 stars4.5/5 (344)

- The Unwinding: An Inner History of the New AmericaFrom EverandThe Unwinding: An Inner History of the New AmericaRating: 4 out of 5 stars4/5 (45)

- The World Is Flat 3.0: A Brief History of the Twenty-first CenturyFrom EverandThe World Is Flat 3.0: A Brief History of the Twenty-first CenturyRating: 3.5 out of 5 stars3.5/5 (2219)

- The Gifts of Imperfection: Let Go of Who You Think You're Supposed to Be and Embrace Who You AreFrom EverandThe Gifts of Imperfection: Let Go of Who You Think You're Supposed to Be and Embrace Who You AreRating: 4 out of 5 stars4/5 (1090)

- The Sympathizer: A Novel (Pulitzer Prize for Fiction)From EverandThe Sympathizer: A Novel (Pulitzer Prize for Fiction)Rating: 4.5 out of 5 stars4.5/5 (119)

- Her Body and Other Parties: StoriesFrom EverandHer Body and Other Parties: StoriesRating: 4 out of 5 stars4/5 (821)

- Topographic Map of Blooming GroveDocument1 pageTopographic Map of Blooming GroveHistoricalMapsNo ratings yet

- UntitledDocument21 pagesUntitledRoberto RamosNo ratings yet

- Roxas Avenue, Isabela City, Basilan Province AY: 2018-2019: Claret College of IsabelaDocument2 pagesRoxas Avenue, Isabela City, Basilan Province AY: 2018-2019: Claret College of IsabelaJennilyn omnosNo ratings yet

- Unit 01 Family Life Lesson 1 Getting Started - 2Document39 pagesUnit 01 Family Life Lesson 1 Getting Started - 2Minh Đức NghiêmNo ratings yet

- Physics MCQ Solid State PhysicsDocument15 pagesPhysics MCQ Solid State PhysicsRams Chander88% (25)

- Defender 90 110 Workshop Manual 5 WiringDocument112 pagesDefender 90 110 Workshop Manual 5 WiringChris Woodhouse50% (2)

- The Ideal Structure of ZZ (Alwis)Document8 pagesThe Ideal Structure of ZZ (Alwis)yacp16761No ratings yet

- Unit Test Nervous System 14.1Document4 pagesUnit Test Nervous System 14.1ArnelNo ratings yet

- JC Metcalfe - The Power of WeaknessDocument3 pagesJC Metcalfe - The Power of Weaknesschopin23No ratings yet

- 1 CAT O&M Manual G3500 Engine 0Document126 pages1 CAT O&M Manual G3500 Engine 0Hassan100% (1)

- MATH6113 - PPT5 - W5 - R0 - Applications of IntegralsDocument58 pagesMATH6113 - PPT5 - W5 - R0 - Applications of IntegralsYudho KusumoNo ratings yet

- Chapter 1 Critical Thin...Document7 pagesChapter 1 Critical Thin...sameh06No ratings yet

- Financial Reporting Statement Analysis Project Report: Name of The Company: Tata SteelDocument35 pagesFinancial Reporting Statement Analysis Project Report: Name of The Company: Tata SteelRagava KarthiNo ratings yet

- SID-2AF User Manual English V3.04Document39 pagesSID-2AF User Manual English V3.04om_zahidNo ratings yet

- Double Burden of Malnutrition 2017Document31 pagesDouble Burden of Malnutrition 2017Gîrneţ AlinaNo ratings yet

- Community Development A Critical Approach PDFDocument2 pagesCommunity Development A Critical Approach PDFNatasha50% (2)

- Intro - S4HANA - Using - Global - Bike - Slides - MM - en - v3.3 MODDocument45 pagesIntro - S4HANA - Using - Global - Bike - Slides - MM - en - v3.3 MODMrThedjalexNo ratings yet

- Ubc 2015 May Sharpe JillianDocument65 pagesUbc 2015 May Sharpe JillianherzogNo ratings yet

- Interview Question SalesforceDocument10 pagesInterview Question SalesforcesomNo ratings yet

- Metal Oxides Semiconductor CeramicsDocument14 pagesMetal Oxides Semiconductor Ceramicsumarasad1100% (1)

- Automated Crime Reporting SystemDocument101 pagesAutomated Crime Reporting SystemDeepak Kumar60% (10)

- NetworkingDocument1 pageNetworkingSherly YuvitaNo ratings yet

- Understanding The Self Metacognitive Reading Report 1Document2 pagesUnderstanding The Self Metacognitive Reading Report 1Ako Lang toNo ratings yet

- Sr. No. Name Nationality Profession Book Discovery Speciality 1 2 3 4 5 6 Unit 6Document3 pagesSr. No. Name Nationality Profession Book Discovery Speciality 1 2 3 4 5 6 Unit 6Dashrath KarkiNo ratings yet

- LAU Paleoart Workbook - 2023Document16 pagesLAU Paleoart Workbook - 2023samuelaguilar990No ratings yet

- SFA160Document5 pagesSFA160scamalNo ratings yet

- MEC332-MA 3rd Sem - Development EconomicsDocument9 pagesMEC332-MA 3rd Sem - Development EconomicsRITUPARNA KASHYAP 2239239No ratings yet

- Title Page Title: Carbamazepine Versus Levetiracetam in Epilepsy Due To Neurocysticercosis Authors: Akhil P SanthoshDocument16 pagesTitle Page Title: Carbamazepine Versus Levetiracetam in Epilepsy Due To Neurocysticercosis Authors: Akhil P SanthoshPrateek Kumar PandaNo ratings yet

- Pahang JUJ 2012 SPM ChemistryDocument285 pagesPahang JUJ 2012 SPM ChemistryJeyShida100% (1)

- Assessing Student Learning OutcomesDocument20 pagesAssessing Student Learning Outcomesapi-619738021No ratings yet