2007 Fall Extension Conference

Evaluating Training Programs

The Four Levels

Dr. Myron A. Eighmy

Based on the work of Dr. Donald L. Kirkpatrick, University of Wisconsin-Madison

Objectives

Upon completion of this presentation you will be able to:

State why evaluation of programs is critical to you and your organization.

Apply Kirkpatrick's four levels of evaluation to your programs.

Use guidelines for developing evaluations.

Implement various forms and approaches to evaluation.

Why Evaluate?

Determine the effectiveness of the program design

How the program was received by the participants

How learners fared on assessment of their learning

Determine what instructional strategies work

presentation mode

presentation methods

learning activities

desired level of learning

Program improvement

Why Evaluate?

Should the program be continued?

How do you justify your existence?

How do you determine the return on investment for the program?

human capital

individual competence

social/economic benefit

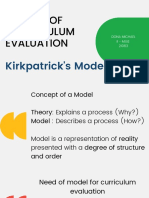

Four Levels of Evaluation

Kirkpatrick

During program evaluation

Level One: Reaction

Level Two: Learning

Post-program evaluation

Level Three: Behavior

Level Four: Results

Reaction Level

A customer satisfaction measure

Were the participants pleased with the program?

Perception of whether they learned anything

Likelihood of applying the content

Effectiveness of particular strategies

Effectiveness of the packaging of the course

Examples of Level One

Your Opinion, Please

In a word, how would you describe this workshop?

Intent: Solicit feedback about the course. Can also assess whether respondents transposed the numeric scales.

Example of Level One

Using a number, how would you describe this program? (circle a number)

1 (Terrible)   2   3 (Average)   4   5 (Outstanding)

Intent: Provides quantitative feedback to determine average responses (descriptive data). Watch scale sets!

Example of Level One

How much did you know about this subject before taking this workshop?

1 (Nothing)   2   3 (Some)   4   5 (A lot)

How much do you know about this subject after participating in this workshop?

1 (Nothing)   2   3 (Some)   4   5 (A lot)

Intent: The question does not assess actual learning; it assesses perceived learning.

Example Level One

How likely are you to use some or all of the skills taught in this workshop in your work/community/family?

1 (Not Likely)   2   3 (Likely)   4   5 (Very Likely)

Intent: Determine learners' perceived relevance of the material. May correlate with the satisfaction learners feel.

Example of Level One

The best part of this program was ...

The one thing that could be improved most ...

Intent: Gather qualitative feedback on the course and help prioritize work in a revision. Develop themes on exercises, pace of course, etc.

Guidelines for Evaluating Reaction

Decide what you want to find out.

Design a form that will quantify reactions.

Encourage written comments.

Get 100% immediate response.

Get honest responses.

If desirable, get delayed reactions.

Determine acceptable standards.

Measure future reactions against the standard.
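As a rough illustration of quantifying reactions and measuring them against a standard, the sketch below averages 1-5 ratings per question and flags whether each mean meets a target. The question names, the ratings, and the 4.0 standard are all hypothetical; the slides do not prescribe any particular value.

```python
from statistics import mean

# Hypothetical ratings on the 1-5 scale (1 = Terrible, 5 = Outstanding).
ratings = {
    "overall_program": [5, 4, 4, 3, 5],
    "likelihood_of_use": [4, 3, 3, 4, 2],
}

# Assumed acceptable standard; choose one that fits your own program.
ACCEPTABLE_STANDARD = 4.0


def summarize(ratings, standard):
    """Return {question: (mean rating, whether the mean meets the standard)}."""
    summary = {}
    for question, scores in ratings.items():
        avg = mean(scores)
        summary[question] = (round(avg, 2), avg >= standard)
    return summary


summary = summarize(ratings, ACCEPTABLE_STANDARD)
for question, (avg, meets) in summary.items():
    print(f"{question}: mean={avg} meets_standard={meets}")
```

Running the same summary after each offering gives a simple way to compare future reactions with the standard you set.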

Learning Level

What did the participants learn in the program?

The extent to which participants change attitudes, increase knowledge, and/or increase skill.

What exactly did the participant learn and not learn?

Pretest and posttest

Learning Level

Requires developing specific learning objectives to be evaluated.

Learning measures should be objective and quantifiable.

Paper-and-pencil tests, performance on skills tests, simulations, role-plays, case studies, etc.

Level Two Examples

Develop a written exam based on the desired learning objectives.

Use the exam as a pretest.

Provide participants with a worksheet/activity sheet that will allow for tracking during the session.

Emphasize and repeat key learning points during the session.

Use the pretest exam as a posttest exam.

Compute the posttest-pretest gain on the exam.
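The gain computation in the last step can be sketched as follows. The participant IDs and scores are made up for illustration, and the helper names `gains` and `mean_gain` are hypothetical; only participants who took both exams are included.

```python
# Hypothetical pretest/posttest scores (percent correct) keyed by participant ID.
pretest = {"p1": 40, "p2": 55, "p3": 70}
posttest = {"p1": 75, "p2": 80, "p3": 85}


def gains(pre, post):
    """Posttest minus pretest for each participant who took both exams."""
    return {pid: post[pid] - pre[pid] for pid in pre if pid in post}


def mean_gain(pre, post):
    """Average gain across participants with both scores."""
    per_person = gains(pre, post)
    return sum(per_person.values()) / len(per_person)


print(gains(pretest, posttest))
print(mean_gain(pretest, posttest))
```

Looking at per-participant gains alongside the average helps show who did not learn, not just how the group fared overall.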

What makes a good test?

The only valid test questions emerge from the objectives.

Consider writing main objectives and supporting objectives.

Test questions usually test supporting objectives.

Ask more than one question on each objective.

Level Two Strategies

Consider using scenarios, case studies, sample project evaluations, etc., rather than test questions. Develop a rubric of desired responses.

Develop between 3 and 10 questions or scenarios for each main objective.

Level Two Strategies

Provide instructor feedback during the learning activities.

Requires the instructor to actively monitor participants' discussion, practice activities, and engagement. Provide learners feedback.

Ask participants open-ended questions (congruent with the learning objectives) during activities to test participant understanding.

Example

Which of the following should be considered when evaluating at the Reaction Level? (more than one answer possible)

___ Evaluate only the lesson content

___ Obtain both subjective and objective responses

___ Get 100% response from participants

___ Honest responses are important

___ Only the course instructor should review results.

Example

Match the following to the choices below

___ Reaction Level

___ Learning Level

A. Changes in performance at work

B. Participant satisfaction

C. Organizational Improvement

D. What the participant learned in class

Scenario Example

An instructor would like to know the effectiveness of the course design and how much a participant has learned in a seminar. The instructor would like to achieve at least Level Two evaluation.

What techniques could the instructor use to achieve Level Two evaluation?

Should the instructor also consider doing a Level One evaluation? Why or why not?

Rubric for Scenario Question

Directions to instructor: Use the following topic checklist to determine the completeness of the participant's response:

___ Learner demonstrated an accurate understanding of what Level Two is: learning level.

___ Learner provided at least two specific examples: pretest-posttest, performance rubrics, scenarios, case studies, hands-on practice.

___ Learner demonstrated an accurate understanding of what Level One evaluation is: reaction level.

___ Learner provided at least three specific examples of why Level One is valuable: assess satisfaction, learning activities, course packaging, learning strategies, likelihood of applying learning.

Behavior Level

How the training affects performance.

The extent to which change in behavior occurred.

Was the learning transferred from the classroom to the real world?

Transfer - Transfer - Transfer

Conditions Necessary to Change

The person must:

have a desire to change.

know what to do and how to do it.

work in the right climate.

be rewarded for changing.

Types of Climates

Preventing: forbidden to use the learning.

Discouraging: changes in the current way of doing things are not desired.

Neutral: learning is ignored.

Encouraging: receptive to applying new learning.

Requiring: change in behavior is mandated.

Guidelines for Evaluating Behavior

Measure on a before/after basis

Allow time for behavior change (adaptation) to take place

Survey or interview one or more people who are in the best position to see change:

The participant/learner

The supervisor/mentor

Subordinates or peers

Others familiar with the participant's actions

Guidelines for Evaluating Behavior

Get 100% response or a sample?

Depends on size of group. The more the better.

Repeat at appropriate times

Remember that other factors can influence behavior over time.

Use a control group if practical

Consider cost vs. benefits of the evaluation
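One way to combine the before/after and control-group guidelines is to subtract the control group's average change from the trained group's average change, so that change from other factors is partly netted out. The sketch below assumes hypothetical performance scores and hypothetical helper names; it is an illustration, not a method prescribed by the slides.

```python
from statistics import mean

# Hypothetical performance scores measured before and after the program.
trained = {"before": [60, 65, 70], "after": [75, 80, 85]}
control = {"before": [62, 66, 70], "after": [65, 68, 74]}


def average_change(group):
    """Mean 'after' score minus mean 'before' score for one group."""
    return mean(group["after"]) - mean(group["before"])


def change_beyond_control(trained_group, control_group):
    """Change in the trained group not matched by the control group."""
    return average_change(trained_group) - average_change(control_group)


print(average_change(trained))                  # change in trained group
print(average_change(control))                  # change from other factors
print(change_beyond_control(trained, control))  # change beyond the control group
```

With small groups, treat the result as evidence rather than proof; the groups may differ in ways the comparison does not capture.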

Level Three Examples

Observation

Survey or Interview

Participant and/or others

Performance benchmarks

Before and after

Control group

Evidence or Portfolio

Survey or Patterned Interview

1. Explain purpose of the survey/interview.

2. Review program objectives and content.

3. Ask the program participant to what extent performance was improved as a result of the program. ___ Large extent ___ Some extent ___ Not at all

If "Large extent" or "Some extent," ask to please explain.

4. If "Not at all," indicate why not:

___ Program content wasn't practical

___ No opportunity to use what I learned

___ My supervisor prevented or discouraged me from changing

___ Other higher priorities

___ Other reason (please explain)

5. Ask, "In the future, to what extent do you plan to change your behavior?"

___ Large extent ___ Some extent ___ Not at all

Ask to please explain:

Evidence and Portfolio

Thank you for participating. I am very interested in how the evaluation skills you have learned are used in your work.

Please send me a copy of at least one of the following:

a Level Three evaluation that you have designed.

a copy of Level Two evaluations that use more than one method of evaluating participant learning.

a copy of a Level One evaluation that you have modified, and tell me how it influenced program improvement.

(indicate if you would like my critique on any of the evaluations)

If I do not hear from you before January 30, I will give you a call. No pressure; I would just love to learn what you are doing.

Results Level

Impact of education and training on the organization or community.

The final results that occurred as a result of training.

The ROI for training.
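The slides do not give a formula for ROI. A common definition is net benefit divided by cost, expressed as a percentage; the sketch below assumes that definition, and the dollar figures and the function name `roi_percent` are hypothetical.

```python
def roi_percent(benefits, costs):
    """Return on investment as a percentage: (benefits - costs) / costs * 100.

    Assumes the common net-benefit definition of ROI; both arguments must be
    in the same monetary unit.
    """
    if costs <= 0:
        raise ValueError("costs must be positive")
    return (benefits - costs) / costs * 100


# Hypothetical example: a program costing 10,000 that yields 15,000 in benefits.
print(roi_percent(15000, 10000))
```

The hard part in practice is estimating the benefits attributable to the training, which is where the before/after and control-group guidelines come in.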

Examples of Level Four

How did the training save costs?

Did work output increase?

Was there a change in the quality of work?

Did the social condition improve?

Did the individual create an impact on the community?

Is there evidence that the organization or community has changed?

Guidelines for Evaluating Results

Measure before and after

Allow time for change to take place

Repeat at appropriate times

Use a control group if practical

Consider cost vs. benefits of doing Level Four

Remember, other factors can affect results

Be satisfied with Evidence if Proof is not possible.
