
Principles of Econometrics, 4th Edition

Chapter 2

The Simple Linear Regression Model:

Specification and Estimation

Walter R. Paczkowski

Rutgers University

Chapter Contents

2.1 An Economic Model
2.2 An Econometric Model
2.3 Estimating the Regression Parameters
2.4 Assessing the Least Squares Estimators
2.5 The Gauss-Markov Theorem
2.6 The Probability Distributions of the Least Squares Estimators
2.7 Estimating the Variance of the Error Term

2.1 An Economic Model

The simple regression function is written as

$$E(y|x) = \mu_{y|x} = \beta_1 + \beta_2 x \qquad \text{(Eq. 2.1)}$$

where $\beta_1$ is the intercept and $\beta_2$ is the slope.

It is called simple regression not because it is easy, but because there is only one explanatory variable on the right-hand side of the equation.

Figure 2.2 The economic model: a linear relationship between average per person food expenditure and income

The slope of the regression line can be written as:

$$\beta_2 = \frac{\Delta E(y|x)}{\Delta x} = \frac{dE(y|x)}{dx} \qquad \text{(Eq. 2.2)}$$

where $\Delta$ denotes "change in" and $dE(y|x)/dx$ denotes the derivative of the expected value of y given an x value.

2.2 An Econometric Model

Figure 2.3 The probability density function for y at two levels of income

2.2.1 Introducing the Error Term

The random error term is defined as

$$e = y - E(y|x) = y - \beta_1 - \beta_2 x \qquad \text{(Eq. 2.3)}$$

Rearranging gives

$$y = \beta_1 + \beta_2 x + e \qquad \text{(Eq. 2.4)}$$

where y is the dependent variable and x is the independent variable.

The expected value of the error term, given x, is

$$E(e|x) = E(y|x) - \beta_1 - \beta_2 x = 0$$

The mean value of the error term, given x, is zero.

Figure 2.4 Probability density functions for e and y

ASSUMPTIONS OF THE SIMPLE LINEAR REGRESSION MODEL - II

Assumption SR1: The value of y, for each value of x, is:

$$y = \beta_1 + \beta_2 x + e$$

Assumption SR2: The expected value of the random error e is:

$$E(e) = 0$$

This is equivalent to assuming that

$$E(y) = \beta_1 + \beta_2 x$$

Assumption SR3: The variance of the random error e is:

$$\operatorname{var}(e) = \sigma^2 = \operatorname{var}(y|x)$$

The random variables y|x and e have the same variance because they differ only by a constant.

Assumption SR4: The covariance between any pair of random errors, $e_i$ and $e_j$, is:

$$\operatorname{cov}(e_i, e_j) = \operatorname{cov}(y_i|x_i,\ y_j|x_j) = 0$$

The stronger version of this assumption is that the random errors e are statistically independent, in which case the values of the dependent variable y are also statistically independent.

Assumption SR5: The variable x is not random, and must take at least two different values.

Assumption SR6 (optional): The values of e are normally distributed about their mean,

$$e \sim N(0, \sigma^2)$$

if the values of y are normally distributed, and vice versa.
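To make assumptions SR1-SR6 concrete, the following is a minimal sketch of how one sample could be generated from a model that satisfies them. The function name `simulate_sample`, the parameter values (80, 10, 50) and the x values are hypothetical and chosen only for illustration; they are not the food expenditure data.

```python
import random


def simulate_sample(x_values, beta1, beta2, sigma, rng):
    """Draw y_i = beta1 + beta2 * x_i + e_i for fixed x values.

    Each e_i is an independent N(0, sigma^2) draw, so the errors have
    mean zero (SR2), constant variance (SR3), zero covariance (SR4)
    and are normally distributed (SR6).
    """
    return [beta1 + beta2 * x + rng.gauss(0.0, sigma) for x in x_values]


# Hypothetical, non-random x values taking more than one value (SR5).
x_values = [5.0, 10.0, 15.0, 20.0, 25.0, 30.0]
rng = random.Random(12345)  # fixed seed so the draw is reproducible
y_values = simulate_sample(x_values, beta1=80.0, beta2=10.0, sigma=50.0, rng=rng)

for x, y in zip(x_values, y_values):
    print(f"x = {x:5.1f}   y = {y:8.2f}")
```

Setting `sigma` to zero removes the error term, so every simulated y lies exactly on the regression line.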

Figure 2.5 The relationship among y, e and the true regression line

2.3 Estimating the Regression Parameters

Table 2.1 Food Expenditure and Income Data

Figure 2.6 Data for food expenditure example

2.3.1 The Least Squares Principle

The fitted regression line is:

$$\hat{y}_i = b_1 + b_2 x_i \qquad \text{(Eq. 2.5)}$$

The least squares residual is:

$$\hat{e}_i = y_i - \hat{y}_i = y_i - b_1 - b_2 x_i \qquad \text{(Eq. 2.6)}$$

Figure 2.7 The relationship among y, $\hat{e}$ and the fitted regression line

Suppose we have another fitted line:

$$\hat{y}_i^* = b_1^* + b_2^* x_i$$

The least squares line has the smaller sum of squared residuals:

$$\text{if } SSE = \sum_{i=1}^{N} \hat{e}_i^2 \text{ and } SSE^* = \sum_{i=1}^{N} \hat{e}_i^{*2} \text{ then } SSE < SSE^*$$

Least squares estimates for the unknown parameters $\beta_1$ and $\beta_2$ are obtained by minimizing the sum of squares function:

$$S(\beta_1, \beta_2) = \sum_{i=1}^{N} (y_i - \beta_1 - \beta_2 x_i)^2$$
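The minimization step can be sketched as follows. This is the standard calculus argument, written here as an illustration rather than taken from the slides: set both partial derivatives of $S$ to zero and solve the resulting two equations (the normal equations) for $b_1$ and $b_2$.

```latex
\begin{aligned}
\frac{\partial S}{\partial \beta_1} &= -2 \sum_{i=1}^{N} (y_i - \beta_1 - \beta_2 x_i) = 0
  &&\Rightarrow\quad N b_1 + \Big(\sum x_i\Big) b_2 = \sum y_i \\
\frac{\partial S}{\partial \beta_2} &= -2 \sum_{i=1}^{N} x_i (y_i - \beta_1 - \beta_2 x_i) = 0
  &&\Rightarrow\quad \Big(\sum x_i\Big) b_1 + \Big(\sum x_i^2\Big) b_2 = \sum x_i y_i
\end{aligned}
```

Dividing the first normal equation by $N$ gives $b_1 = \bar{y} - b_2 \bar{x}$. Substituting this into the second gives $b_2 = \sum (x_i - \bar{x})(y_i - \bar{y}) / \sum (x_i - \bar{x})^2$, which requires x to take at least two different values so that the denominator is not zero.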

THE LEAST SQUARES ESTIMATORS

$$b_2 = \frac{\sum (x_i - \bar{x})(y_i - \bar{y})}{\sum (x_i - \bar{x})^2} \qquad \text{(Eq. 2.7)}$$

$$b_1 = \bar{y} - b_2 \bar{x} \qquad \text{(Eq. 2.8)}$$
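The two estimator formulas translate directly into code. The sketch below uses a small made-up data set (not the food expenditure data) and hypothetical function names `least_squares` and `sse`; it also checks the least squares principle by comparing the sum of squared residuals of the fitted line with that of an arbitrary alternative line.

```python
def least_squares(x, y):
    """Return (b1, b2) computed from Eq. 2.8 and Eq. 2.7."""
    n = len(x)
    x_bar = sum(x) / n
    y_bar = sum(y) / n
    sxy = sum((xi - x_bar) * (yi - y_bar) for xi, yi in zip(x, y))
    sxx = sum((xi - x_bar) ** 2 for xi in x)  # zero if x never varies
    b2 = sxy / sxx
    b1 = y_bar - b2 * x_bar
    return b1, b2


def sse(b1, b2, x, y):
    """Sum of squared residuals for the line y = b1 + b2 * x."""
    return sum((yi - b1 - b2 * xi) ** 2 for xi, yi in zip(x, y))


# Hypothetical illustration data.
x = [1.0, 2.0, 3.0, 4.0, 5.0]
y = [2.1, 3.9, 6.2, 7.8, 10.1]

b1, b2 = least_squares(x, y)
sse_ls = sse(b1, b2, x, y)
sse_other = sse(b1 + 0.5, b2 - 0.1, x, y)  # some other candidate line

print(f"b1 = {b1:.4f}, b2 = {b2:.4f}")
print(f"SSE (least squares) = {sse_ls:.4f}, SSE* (other line) = {sse_other:.4f}")
```

Any other choice of intercept and slope gives a larger sum of squared residuals than the least squares line on the same data.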

2.3.2 Estimates for the Food Expenditure Function

$$b_2 = \frac{\sum (x_i - \bar{x})(y_i - \bar{y})}{\sum (x_i - \bar{x})^2} = \frac{18671.2684}{1828.7876} = 10.2096$$

$$b_1 = \bar{y} - b_2 \bar{x} = 283.5735 - (10.2096)(19.6048) = 83.4160$$

A convenient way to report the values for $b_1$ and $b_2$ is to write out the estimated or fitted regression line:

$$\hat{y}_i = 83.42 + 10.21 x_i$$
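The arithmetic for the food expenditure estimates can be reproduced from the four summary numbers reported above. This is only a check of the calculation; the variable names are hypothetical. Because the summary numbers are themselves rounded, the result is expected to agree with the reported estimates to about two decimal places rather than exactly.

```python
# Summary statistics as reported for the food expenditure data.
sum_xy_dev = 18671.2684  # sum of (x_i - x_bar) * (y_i - y_bar)
sum_xx_dev = 1828.7876   # sum of (x_i - x_bar) ** 2
y_bar = 283.5735         # mean weekly food expenditure
x_bar = 19.6048          # mean weekly income, in units of $100

b2 = sum_xy_dev / sum_xx_dev  # slope estimate, Eq. 2.7
b1 = y_bar - b2 * x_bar       # intercept estimate, Eq. 2.8

print(f"b2 = {b2:.2f}")
print(f"b1 = {b1:.2f}")
```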

Figure 2.8 The fitted regression line

2.3.3 Interpreting the Estimates

The value $b_2 = 10.21$ is an estimate of $\beta_2$, the amount by which weekly expenditure on food per household increases when household weekly income increases by $100. Thus, we estimate that if income goes up by $100, expected weekly expenditure on food will increase by approximately $10.21.

Strictly speaking, the intercept estimate $b_1 = 83.42$ is an estimate of the weekly expenditure on food for a household with zero income.

2.3.3a Elasticities

Income elasticity is a useful way to characterize the responsiveness of consumer expenditure to changes in income. The elasticity of a variable y with respect to another variable x is:

$$\varepsilon = \frac{\text{percentage change in } y}{\text{percentage change in } x} = \frac{\Delta y / y}{\Delta x / x} = \frac{\Delta y}{\Delta x} \cdot \frac{x}{y}$$

In the linear economic model given by Eq. 2.1 we have shown that

$$\beta_2 = \frac{\Delta E(y)}{\Delta x}$$

The elasticity of mean expenditure with respect to income is:

$$\varepsilon = \frac{\Delta E(y) / E(y)}{\Delta x / x} = \frac{\Delta E(y)}{\Delta x} \cdot \frac{x}{E(y)} = \beta_2 \cdot \frac{x}{E(y)} \qquad \text{(Eq. 2.9)}$$

A frequently used alternative is to calculate the elasticity at the point of the means because it is a representative point on the regression line.

$$\hat{\varepsilon} = b_2 \frac{\bar{x}}{\bar{y}} = 10.21 \times \frac{19.60}{283.57} = 0.71$$
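As a quick check of the elasticity calculation, the sketch below evaluates Eq. 2.9 at the point of the means using the rounded values shown above. The helper name `elasticity` is hypothetical.

```python
def elasticity(slope, x, y):
    """Elasticity of y with respect to x at the point (x, y) on a line with the given slope."""
    return slope * x / y


b2 = 10.21      # estimated slope
x_bar = 19.60   # mean weekly income, in units of $100
y_bar = 283.57  # mean weekly food expenditure

eps_hat = elasticity(b2, x_bar, y_bar)
print(f"elasticity at the means = {eps_hat:.2f}")
```

Because the elasticity in a linear model depends on the point at which it is evaluated, calling `elasticity` with a different (x, y) pair on the fitted line would give a different value.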

2.3.3b Prediction

Suppose that we wanted to predict weekly food expenditure for a household with a weekly income of $2000. This prediction is carried out by substituting x = 20 into our estimated equation to obtain:

$$\hat{y}_i = 83.42 + 10.21 x_i = 83.42 + 10.21(20) = 287.61$$

We predict that a household with a weekly income of $2000 will spend $287.61 per week on food.
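The prediction step is a single substitution into the fitted line. The sketch below uses the estimates to four decimal places (83.4160 and 10.2096, as reported in Section 2.3.2) and a hypothetical helper `predict`; income is measured in units of $100, so a weekly income of $2000 corresponds to x = 20.

```python
def predict(b1, b2, x):
    """Predicted value of y on the fitted line at the given x."""
    return b1 + b2 * x


b1 = 83.4160  # intercept estimate
b2 = 10.2096  # slope estimate

income_in_hundreds = 2000 / 100  # x is weekly income in units of $100
y_hat = predict(b1, b2, income_in_hundreds)
print(f"predicted weekly food expenditure = {y_hat:.2f}")
```

Using the two-decimal coefficients 83.42 and 10.21 instead gives 287.62, so the reported 287.61 appears to rest on the less-rounded estimates.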

2.3.3c Computer Output

Figure 2.9 EViews Regression Output

2.3.4 Other Economic Models

The simple regression model can be applied to estimate the parameters of many relationships in economics, business, and the social sciences.

The applications of regression analysis are fascinating and useful.

2.4 Assessing the Least Squares Fit

We call $b_1$ and $b_2$ the least squares estimators. We can investigate the properties of the estimators $b_1$ and $b_2$, which are called their sampling properties, and deal with the following important questions:

1. If the least squares estimators are random variables, then what are their expected values, variances, covariances, and probability distributions?
2. How do the least squares estimators compare with other procedures that might be used, and how can we compare alternative estimators?

2.4.1 The Estimator $b_2$

Please ignore if not comfortable with the maths below.

The estimator $b_2$ can be rewritten as:

$$b_2 = \sum_{i=1}^{N} w_i y_i \qquad \text{(Eq. 2.10)}$$

where

$$w_i = \frac{x_i - \bar{x}}{\sum (x_i - \bar{x})^2} \qquad \text{(Eq. 2.11)}$$

It can also be written as:

$$b_2 = \beta_2 + \sum w_i e_i \qquad \text{(Eq. 2.12)}$$
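The step from the weighted-sum form to $b_2 = \beta_2 + \sum w_i e_i$ rests on two properties of the weights: $\sum w_i = 0$ and $\sum w_i x_i = 1$. Substituting $y_i = \beta_1 + \beta_2 x_i + e_i$ into $\sum w_i y_i$ then leaves only $\beta_2 + \sum w_i e_i$. The sketch below checks both properties numerically on a small made-up data set; `ls_weights` is a hypothetical helper name.

```python
def ls_weights(x):
    """Weights w_i = (x_i - x_bar) / sum((x_j - x_bar)^2) from Eq. 2.11."""
    n = len(x)
    x_bar = sum(x) / n
    sxx = sum((xi - x_bar) ** 2 for xi in x)
    return [(xi - x_bar) / sxx for xi in x]


# Hypothetical illustration data.
x = [1.0, 2.0, 3.0, 4.0, 5.0]
y = [2.1, 3.9, 6.2, 7.8, 10.1]

w = ls_weights(x)
sum_w = sum(w)                                  # should be 0
sum_wx = sum(wi * xi for wi, xi in zip(w, x))   # should be 1
b2 = sum(wi * yi for wi, yi in zip(w, y))       # Eq. 2.10

print(f"sum of weights = {sum_w:.6f}")
print(f"sum of w_i * x_i = {sum_wx:.6f}")
print(f"b2 as a weighted sum of y = {b2:.4f}")
```

The weighted sum gives the same slope as the ratio formula in Eq. 2.7 applied to the same data, which is what makes $b_2$ a linear estimator.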

2.4.2 The Expected Values of $b_1$ and $b_2$

We will show that if our model assumptions hold, then $E(b_2) = \beta_2$, which means that the estimator is unbiased. We can find the expected value of $b_2$ using the fact that the expected value of a sum is the sum of the expected values:

$$\begin{aligned}
E(b_2) &= E\Big(\beta_2 + \sum w_i e_i\Big) = E(\beta_2 + w_1 e_1 + w_2 e_2 + \cdots + w_N e_N) \\
&= E(\beta_2) + E(w_1 e_1) + E(w_2 e_2) + \cdots + E(w_N e_N) \\
&= E(\beta_2) + \sum E(w_i e_i) \\
&= \beta_2 + \sum w_i E(e_i) \\
&= \beta_2
\end{aligned} \qquad \text{(Eq. 2.13)}$$

using $E(w_i e_i) = w_i E(e_i)$ and $E(e_i) = 0$.

The property of unbiasedness is about the average values of $b_1$ and $b_2$ if many samples of the same size are drawn from the same population.

If we took the averages of estimates from many samples, these averages would approach the true parameter values $\beta_1$ and $\beta_2$.

Unbiasedness does not say that an estimate from any one sample is close to the true parameter value, and thus we cannot say that an estimate is unbiased.

We can say that the least squares estimation procedure (or the least squares estimator) is unbiased.

2.4.3 Repeated Sampling

Table 2.2 Estimates from 10 Samples
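Repeated sampling can be imitated with a small Monte Carlo experiment. The sketch below is an illustration under assumed values, not a reproduction of Table 2.2: the true parameters (80 and 10), the error standard deviation (90), the x values 1 to 40 and the function names are all hypothetical. It draws many samples with the same fixed x values, estimates the slope in each, and compares the average and variance of the estimates with $\beta_2$ and $\sigma^2 / \sum (x_i - \bar{x})^2$.

```python
import random
import statistics


def slope_estimate(x, y):
    """Least squares slope b2 from Eq. 2.7."""
    n = len(x)
    x_bar = sum(x) / n
    y_bar = sum(y) / n
    sxy = sum((xi - x_bar) * (yi - y_bar) for xi, yi in zip(x, y))
    sxx = sum((xi - x_bar) ** 2 for xi in x)
    return sxy / sxx


def monte_carlo_slopes(x, beta1, beta2, sigma, n_samples, seed):
    """Slope estimates from n_samples samples that share the same fixed x."""
    rng = random.Random(seed)
    slopes = []
    for _ in range(n_samples):
        y = [beta1 + beta2 * xi + rng.gauss(0.0, sigma) for xi in x]
        slopes.append(slope_estimate(x, y))
    return slopes


# Hypothetical design: N = 40 fixed x values and assumed true parameters.
x = [float(v) for v in range(1, 41)]
beta2_true, sigma = 10.0, 90.0
slopes = monte_carlo_slopes(x, 80.0, beta2_true, sigma, n_samples=2000, seed=2024)

x_bar = sum(x) / len(x)
theoretical_var = sigma ** 2 / sum((xi - x_bar) ** 2 for xi in x)

mean_slope = statistics.mean(slopes)
var_slope = statistics.pvariance(slopes)
print(f"average of b2 over samples = {mean_slope:.3f} (true value {beta2_true})")
print(f"variance of b2 over samples = {var_slope:.3f} (formula {theoretical_var:.3f})")
```

Individual estimates differ from sample to sample, which is why unbiasedness is a statement about the estimator and not about any single estimate.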

Figure 2.10 Two possible probability density functions for $b_2$

The variance of $b_2$ is defined as

$$\operatorname{var}(b_2) = E[b_2 - E(b_2)]^2$$

2.4.4 The Variances and Covariances of $b_1$ and $b_2$

If the regression model assumptions SR1-SR5 are correct (assumption SR6 is not required), then the variances and covariance of $b_1$ and $b_2$ are:

$$\operatorname{var}(b_1) = \sigma^2 \left[ \frac{\sum x_i^2}{N \sum (x_i - \bar{x})^2} \right] \qquad \text{(Eq. 2.14)}$$

$$\operatorname{var}(b_2) = \frac{\sigma^2}{\sum (x_i - \bar{x})^2} \qquad \text{(Eq. 2.15)}$$

$$\operatorname{cov}(b_1, b_2) = \sigma^2 \left[ \frac{-\bar{x}}{\sum (x_i - \bar{x})^2} \right] \qquad \text{(Eq. 2.16)}$$

MAJOR POINTS ABOUT THE VARIANCES AND COVARIANCES OF $b_1$ AND $b_2$

1. The larger the variance term $\sigma^2$, the greater the uncertainty there is in the statistical model, and the larger the variances and covariance of the least squares estimators.
2. The larger the sum of squares, $\sum (x_i - \bar{x})^2$, the smaller the variances of the least squares estimators and the more precisely we can estimate the unknown parameters.
3. The larger the sample size N, the smaller the variances and covariance of the least squares estimators.
4. The larger the term $\sum x_i^2$, the larger the variance of the least squares estimator $b_1$.
5. The absolute magnitude of the covariance increases the larger in magnitude is the sample mean $\bar{x}$, and the covariance has a sign opposite to that of $\bar{x}$.
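The effect of variation in x on precision can be seen by evaluating Eq. 2.14 to Eq. 2.16 for two sets of x values. The sketch below is illustrative only: the function name `ls_variances`, the two x designs and the choice $\sigma^2 = 1$ are assumptions made for the example.

```python
def ls_variances(x, sigma2):
    """Return (var(b1), var(b2), cov(b1, b2)) from Eq. 2.14, 2.15 and 2.16."""
    n = len(x)
    x_bar = sum(x) / n
    sxx = sum((xi - x_bar) ** 2 for xi in x)
    var_b1 = sigma2 * sum(xi ** 2 for xi in x) / (n * sxx)
    var_b2 = sigma2 / sxx
    cov_b1_b2 = sigma2 * (-x_bar) / sxx
    return var_b1, var_b2, cov_b1_b2


# Two hypothetical designs: the second spreads the x values twice as far apart.
narrow = [1.0, 2.0, 3.0, 4.0, 5.0]
wide = [2.0 * v for v in narrow]

var_b1_n, var_b2_n, cov_n = ls_variances(narrow, sigma2=1.0)
var_b1_w, var_b2_w, cov_w = ls_variances(wide, sigma2=1.0)

print(f"narrow x: var(b2) = {var_b2_n:.4f}, cov(b1, b2) = {cov_n:.4f}")
print(f"wide x:   var(b2) = {var_b2_w:.4f}, cov(b1, b2) = {cov_w:.4f}")
```

Doubling the spread of x multiplies $\sum (x_i - \bar{x})^2$ by four, so the variance of $b_2$ falls to a quarter of its value. The covariance is negative in both designs because the sample mean of x is positive.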

Figure 2.11 The influence of variation in the explanatory variable x on precision of estimation (a) Low x variation, low precision (b) High x variation, high precision

2.5 The Gauss-Markov Theorem

GAUSS-MARKOV THEOREM

Under the assumptions SR1-SR5 of the linear regression model, the estimators $b_1$ and $b_2$ have the smallest variance of all linear and unbiased estimators of $\beta_1$ and $\beta_2$. They are the Best Linear Unbiased Estimators (BLUE) of $\beta_1$ and $\beta_2$.

MAJOR POINTS ABOUT THE GAUSS-MARKOV THEOREM

1. The estimators $b_1$ and $b_2$ are best when compared to similar estimators, those which are linear and unbiased. The Theorem does not say that $b_1$ and $b_2$ are the best of all possible estimators.
2. The estimators $b_1$ and $b_2$ are best within their class because they have the minimum variance. When comparing two linear and unbiased estimators, we always want to use the one with the smaller variance, since that estimation rule gives us the higher probability of obtaining an estimate that is close to the true parameter value.
3. In order for the Gauss-Markov Theorem to hold, assumptions SR1-SR5 must be true. If any of these assumptions are not true, then $b_1$ and $b_2$ are not the best linear unbiased estimators of $\beta_1$ and $\beta_2$.
4. The Gauss-Markov Theorem does not depend on the assumption of normality (assumption SR6).
5. In the simple linear regression model, if we want to use a linear and unbiased estimator, then we have to do no more searching. The estimators $b_1$ and $b_2$ are the ones to use. This explains why we are studying these estimators and why they are so widely used in research, not only in economics but in all social and physical sciences as well.
6. The Gauss-Markov theorem applies to the least squares estimators. It does not apply to the least squares estimates from a single sample.

2.6 The Probability Distributions of the Least Squares Estimators

If we make the normality assumption (assumption SR6 about the error term) then the least squares estimators are normally distributed:

$$b_1 \sim N\left( \beta_1,\ \frac{\sigma^2 \sum x_i^2}{N \sum (x_i - \bar{x})^2} \right) \qquad \text{(Eq. 2.17)}$$

$$b_2 \sim N\left( \beta_2,\ \frac{\sigma^2}{\sum (x_i - \bar{x})^2} \right) \qquad \text{(Eq. 2.18)}$$

A CENTRAL LIMIT THEOREM

If assumptions SR1-SR5 hold, and if the sample size N is sufficiently large, then the least squares estimators have a distribution that approximates the normal distributions shown in Eq. 2.17 and Eq. 2.18.
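One way to see the central limit theorem at work is to simulate with errors that are clearly not normal. The sketch below is an illustration under assumed values: errors are drawn from a uniform distribution on (-a, a), whose variance is $a^2/3$, the x values are 1 to N, and the function names and parameter values are hypothetical. If $b_2$ is approximately normal with the variance in Eq. 2.18, about 95% of the standardized estimates should fall within 1.96 of zero.

```python
import math
import random


def slope_estimate(x, y):
    """Least squares slope b2 from Eq. 2.7."""
    n = len(x)
    x_bar = sum(x) / n
    y_bar = sum(y) / n
    sxy = sum((xi - x_bar) * (yi - y_bar) for xi, yi in zip(x, y))
    sxx = sum((xi - x_bar) ** 2 for xi in x)
    return sxy / sxx


def share_within_1_96(n_obs, n_samples, half_width, seed):
    """Share of standardized slope estimates with absolute value at most 1.96."""
    rng = random.Random(seed)
    beta1, beta2 = 80.0, 10.0            # assumed true parameters
    x = [float(i) for i in range(1, n_obs + 1)]
    x_bar = sum(x) / n_obs
    sxx = sum((xi - x_bar) ** 2 for xi in x)
    sigma2 = half_width ** 2 / 3.0       # variance of Uniform(-a, a)
    sd_b2 = math.sqrt(sigma2 / sxx)      # square root of Eq. 2.15
    inside = 0
    for _ in range(n_samples):
        y = [beta1 + beta2 * xi + rng.uniform(-half_width, half_width) for xi in x]
        z = (slope_estimate(x, y) - beta2) / sd_b2
        if abs(z) <= 1.96:
            inside += 1
    return inside / n_samples


share = share_within_1_96(n_obs=40, n_samples=2000, half_width=150.0, seed=7)
print(f"share of standardized b2 within +/-1.96: {share:.3f}")
```

The exact share varies with the seed and the number of samples, so the printed value should be read as an approximation.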

2.7 Estimating the Variance of the Error Term

The variance of the random error $e_i$ is:

$$\operatorname{var}(e_i) = \sigma^2 = E[e_i - E(e_i)]^2 = E(e_i^2)$$

if the assumption $E(e_i) = 0$ is correct.

Since the expectation is an average value we might consider estimating $\sigma^2$ as the average of the squared errors:

$$\hat{\sigma}^2 = \frac{\sum e_i^2}{N}$$

where the error terms are

$$e_i = y_i - \beta_1 - \beta_2 x_i$$

The least squares residuals are obtained by replacing the unknown parameters by their least squares estimates:

$$\hat{e}_i = y_i - \hat{y}_i = y_i - b_1 - b_2 x_i$$

$$\hat{\sigma}^2 = \frac{\sum \hat{e}_i^2}{N}$$

There is a simple modification that produces an unbiased estimator, and that is:

$$\hat{\sigma}^2 = \frac{\sum \hat{e}_i^2}{N - 2} \qquad \text{(Eq. 2.19)}$$

so that:

$$E(\hat{\sigma}^2) = \sigma^2$$
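The unbiased variance estimator in Eq. 2.19 can be computed in two steps: form the least squares residuals, then divide their sum of squares by N - 2. The sketch below does this for a small made-up data set; the function name `sigma2_hat` and the data are hypothetical.

```python
def sigma2_hat(x, y):
    """Return (estimate of sigma^2 from Eq. 2.19, list of least squares residuals)."""
    n = len(x)
    x_bar = sum(x) / n
    y_bar = sum(y) / n
    sxx = sum((xi - x_bar) ** 2 for xi in x)
    b2 = sum((xi - x_bar) * (yi - y_bar) for xi, yi in zip(x, y)) / sxx
    b1 = y_bar - b2 * x_bar
    residuals = [yi - b1 - b2 * xi for xi, yi in zip(x, y)]
    # Divide by N - 2 because two parameters (b1 and b2) were estimated.
    return sum(r ** 2 for r in residuals) / (n - 2), residuals


# Hypothetical illustration data.
x = [1.0, 2.0, 3.0, 4.0, 5.0]
y = [2.1, 3.9, 6.2, 7.8, 10.1]

s2, residuals = sigma2_hat(x, y)
print(f"sum of residuals = {sum(residuals):.6f}")
print(f"sigma^2 estimate = {s2:.6f}")
```

The residuals sum to zero (up to floating point error) whenever the fitted line includes an intercept, which is a useful sanity check on the calculation.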

2.7.1 Estimating the Variance and Covariance of the Least Squares Estimators

Replace the unknown error variance $\sigma^2$ in Eq. 2.14 to Eq. 2.16 by $\hat{\sigma}^2$ to obtain:

$$\widehat{\operatorname{var}}(b_1) = \hat{\sigma}^2 \left[ \frac{\sum x_i^2}{N \sum (x_i - \bar{x})^2} \right] \qquad \text{(Eq. 2.20)}$$

$$\widehat{\operatorname{var}}(b_2) = \frac{\hat{\sigma}^2}{\sum (x_i - \bar{x})^2} \qquad \text{(Eq. 2.21)}$$

$$\widehat{\operatorname{cov}}(b_1, b_2) = \hat{\sigma}^2 \left[ \frac{-\bar{x}}{\sum (x_i - \bar{x})^2} \right] \qquad \text{(Eq. 2.22)}$$

The square roots of the estimated variances are the standard errors of $b_1$ and $b_2$:

$$\operatorname{se}(b_1) = \sqrt{\widehat{\operatorname{var}}(b_1)} \qquad \text{(Eq. 2.23)}$$

$$\operatorname{se}(b_2) = \sqrt{\widehat{\operatorname{var}}(b_2)} \qquad \text{(Eq. 2.24)}$$
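Putting Eq. 2.19 to Eq. 2.24 together, the following sketch computes the estimated variances, covariance and standard errors from raw data. It is a minimal illustration on made-up data; the function name `ls_standard_errors` and the dictionary keys are hypothetical choices.

```python
import math


def ls_standard_errors(x, y):
    """Estimated variances, covariance and standard errors of b1 and b2."""
    n = len(x)
    x_bar = sum(x) / n
    y_bar = sum(y) / n
    sxx = sum((xi - x_bar) ** 2 for xi in x)
    b2 = sum((xi - x_bar) * (yi - y_bar) for xi, yi in zip(x, y)) / sxx
    b1 = y_bar - b2 * x_bar
    s2 = sum((yi - b1 - b2 * xi) ** 2 for xi, yi in zip(x, y)) / (n - 2)  # Eq. 2.19
    var_b1 = s2 * sum(xi ** 2 for xi in x) / (n * sxx)                    # Eq. 2.20
    var_b2 = s2 / sxx                                                     # Eq. 2.21
    cov_b1_b2 = s2 * (-x_bar) / sxx                                       # Eq. 2.22
    return {
        "b1": b1, "b2": b2, "sigma2_hat": s2,
        "var_b1": var_b1, "var_b2": var_b2, "cov_b1_b2": cov_b1_b2,
        "se_b1": math.sqrt(var_b1),                                       # Eq. 2.23
        "se_b2": math.sqrt(var_b2),                                       # Eq. 2.24
    }


# Hypothetical illustration data.
x = [1.0, 2.0, 3.0, 4.0, 5.0]
y = [2.1, 3.9, 6.2, 7.8, 10.1]

result = ls_standard_errors(x, y)
for name, value in result.items():
    print(f"{name:>10} = {value:.6f}")
```

Statistical packages report these quantities directly, so a routine like this is mainly useful for checking one's understanding of the formulas.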

2.7.2 Calculations for the Food Expenditure Data

Table 2.3 Least Squares Residuals

$$\hat{\sigma}^2 = \frac{\sum \hat{e}_i^2}{N - 2} = \frac{304505.2}{38} = 8013.29$$

The estimated variances and covariances for a regression are arrayed in a rectangular array, or matrix, with variances on the diagonal and covariances in the off-diagonal positions.

$$\begin{bmatrix} \widehat{\operatorname{var}}(b_1) & \widehat{\operatorname{cov}}(b_1, b_2) \\ \widehat{\operatorname{cov}}(b_1, b_2) & \widehat{\operatorname{var}}(b_2) \end{bmatrix}$$

For the food expenditure data the estimated covariance matrix is:

            C            Income
C           1884.442     -85.90316
Income      -85.90316    4.381752
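The numbers reported for the food expenditure data can be cross-checked against one another. The sketch below uses only values that appear in this chapter: the sum of squared residuals 304505.2 with N - 2 = 38, the sum of squares $\sum (x_i - \bar{x})^2 = 1828.7876$ from Section 2.3.2, and the diagonal of the estimated covariance matrix. The variable names are hypothetical.

```python
import math

sum_sq_residuals = 304505.2  # sum of squared least squares residuals
degrees_of_freedom = 38      # N - 2 with N = 40 observations
sum_xx_dev = 1828.7876       # sum of (x_i - x_bar) ** 2

sigma2_hat = sum_sq_residuals / degrees_of_freedom  # Eq. 2.19
var_b2 = sigma2_hat / sum_xx_dev                    # Eq. 2.21

# Standard errors from the diagonal of the reported covariance matrix.
se_b1 = math.sqrt(1884.442)  # Eq. 2.23
se_b2 = math.sqrt(4.381752)  # Eq. 2.24

print(f"sigma^2 estimate = {sigma2_hat:.2f}")
print(f"estimated var(b2) from Eq. 2.21 = {var_b2:.4f}")
print(f"se(b1) = {se_b1:.2f}, se(b2) = {se_b2:.3f}")
```

The value of `var_b2` computed from Eq. 2.21 should agree closely with the Income entry on the diagonal of the matrix, which confirms that the reported figures are mutually consistent.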

2.7.3 Interpreting the Standard Errors

The standard errors of $b_1$ and $b_2$ are measures of the sampling variability of the least squares estimates $b_1$ and $b_2$ in repeated samples.

The estimators are random variables. As such, they have probability distributions, means, and variances.

In particular, if assumption SR6 holds, and the random error terms $e_i$ are normally distributed, then:

$$b_2 \sim N\left( \beta_2,\ \operatorname{var}(b_2) = \frac{\sigma^2}{\sum (x_i - \bar{x})^2} \right)$$

The estimator variance, $\operatorname{var}(b_2)$, or its square root $\sigma_{b_2} = \sqrt{\operatorname{var}(b_2)}$, which we might call the true standard deviation of $b_2$, measures the sampling variation of the estimates $b_2$.

The bigger $\sigma_{b_2}$ is, the more variation in the least squares estimates $b_2$ we see from sample to sample. If $\sigma_{b_2}$ is large then the estimates might change a great deal from sample to sample.

If $\sigma_{b_2}$ is small relative to the parameter $\beta_2$, we know that the least squares estimate will fall near $\beta_2$ with high probability.

The question we address with the standard error is "How much variation about their means do the estimates exhibit from sample to sample?"

We estimate $\sigma^2$, and then estimate $\sigma_{b_2}$ using:

$$\operatorname{se}(b_2) = \sqrt{\widehat{\operatorname{var}}(b_2)} = \sqrt{\frac{\hat{\sigma}^2}{\sum (x_i - \bar{x})^2}}$$

The standard error of $b_2$ is thus an estimate of what the standard deviation of many estimates $b_2$ would be in a very large number of samples, and is an indicator of the width of the pdf of $b_2$ shown in Figure 2.12.

Figure 2.12 The probability density function of the least squares estimator $b_2$.

Key Words

assumptions
asymptotic
B.L.U.E.
biased estimator
degrees of freedom
dependent variable
deviation from the mean form
econometric model
economic model
elasticity
Gauss-Markov Theorem
heteroskedastic
homoskedastic
independent variable
least squares estimates
least squares estimators
least squares principle
least squares residuals
linear estimator
prediction
random error term
regression model
regression parameters
repeated sampling
sampling precision
sampling properties
scatter diagram
simple linear regression function
specification error
unbiased estimator

You might also like

- A Heartbreaking Work Of Staggering Genius: A Memoir Based on a True StoryFrom EverandA Heartbreaking Work Of Staggering Genius: A Memoir Based on a True StoryRating: 3.5 out of 5 stars3.5/5 (231)

- The Sympathizer: A Novel (Pulitzer Prize for Fiction)From EverandThe Sympathizer: A Novel (Pulitzer Prize for Fiction)Rating: 4.5 out of 5 stars4.5/5 (119)

- Never Split the Difference: Negotiating As If Your Life Depended On ItFrom EverandNever Split the Difference: Negotiating As If Your Life Depended On ItRating: 4.5 out of 5 stars4.5/5 (838)

- Devil in the Grove: Thurgood Marshall, the Groveland Boys, and the Dawn of a New AmericaFrom EverandDevil in the Grove: Thurgood Marshall, the Groveland Boys, and the Dawn of a New AmericaRating: 4.5 out of 5 stars4.5/5 (265)

- The Little Book of Hygge: Danish Secrets to Happy LivingFrom EverandThe Little Book of Hygge: Danish Secrets to Happy LivingRating: 3.5 out of 5 stars3.5/5 (399)

- Grit: The Power of Passion and PerseveranceFrom EverandGrit: The Power of Passion and PerseveranceRating: 4 out of 5 stars4/5 (587)

- The World Is Flat 3.0: A Brief History of the Twenty-first CenturyFrom EverandThe World Is Flat 3.0: A Brief History of the Twenty-first CenturyRating: 3.5 out of 5 stars3.5/5 (2219)

- The Subtle Art of Not Giving a F*ck: A Counterintuitive Approach to Living a Good LifeFrom EverandThe Subtle Art of Not Giving a F*ck: A Counterintuitive Approach to Living a Good LifeRating: 4 out of 5 stars4/5 (5794)

- Team of Rivals: The Political Genius of Abraham LincolnFrom EverandTeam of Rivals: The Political Genius of Abraham LincolnRating: 4.5 out of 5 stars4.5/5 (234)

- Shoe Dog: A Memoir by the Creator of NikeFrom EverandShoe Dog: A Memoir by the Creator of NikeRating: 4.5 out of 5 stars4.5/5 (537)

- The Emperor of All Maladies: A Biography of CancerFrom EverandThe Emperor of All Maladies: A Biography of CancerRating: 4.5 out of 5 stars4.5/5 (271)

- The Gifts of Imperfection: Let Go of Who You Think You're Supposed to Be and Embrace Who You AreFrom EverandThe Gifts of Imperfection: Let Go of Who You Think You're Supposed to Be and Embrace Who You AreRating: 4 out of 5 stars4/5 (1090)

- Her Body and Other Parties: StoriesFrom EverandHer Body and Other Parties: StoriesRating: 4 out of 5 stars4/5 (821)

- The Hard Thing About Hard Things: Building a Business When There Are No Easy AnswersFrom EverandThe Hard Thing About Hard Things: Building a Business When There Are No Easy AnswersRating: 4.5 out of 5 stars4.5/5 (344)

- Hidden Figures: The American Dream and the Untold Story of the Black Women Mathematicians Who Helped Win the Space RaceFrom EverandHidden Figures: The American Dream and the Untold Story of the Black Women Mathematicians Who Helped Win the Space RaceRating: 4 out of 5 stars4/5 (890)

- Elon Musk: Tesla, SpaceX, and the Quest for a Fantastic FutureFrom EverandElon Musk: Tesla, SpaceX, and the Quest for a Fantastic FutureRating: 4.5 out of 5 stars4.5/5 (474)

- The Unwinding: An Inner History of the New AmericaFrom EverandThe Unwinding: An Inner History of the New AmericaRating: 4 out of 5 stars4/5 (45)

- The Yellow House: A Memoir (2019 National Book Award Winner)From EverandThe Yellow House: A Memoir (2019 National Book Award Winner)Rating: 4 out of 5 stars4/5 (98)

- Isaac Asimov - "Nightfall"Document20 pagesIsaac Asimov - "Nightfall"Aditya Sharma100% (1)

- On Fire: The (Burning) Case for a Green New DealFrom EverandOn Fire: The (Burning) Case for a Green New DealRating: 4 out of 5 stars4/5 (73)

- Tchaikovsky Piano Concerto 1Document2 pagesTchaikovsky Piano Concerto 1arno9bear100% (2)

- Sine and Cosine Exam QuestionsDocument8 pagesSine and Cosine Exam QuestionsGamer Shabs100% (1)

- Method Statement Pressure TestingDocument15 pagesMethod Statement Pressure TestingAkmaldeen AhamedNo ratings yet

- Essentials of Report Writing - Application in BusinessDocument28 pagesEssentials of Report Writing - Application in BusinessMahmudur Rahman75% (4)

- Boat DesignDocument8 pagesBoat DesignporkovanNo ratings yet

- RPH Sains DLP Y3 2018Document29 pagesRPH Sains DLP Y3 2018Sukhveer Kaur0% (1)

- Homeroom Guidance Grade 12 Quarter - Module 4 Decisive PersonDocument4 pagesHomeroom Guidance Grade 12 Quarter - Module 4 Decisive PersonMhiaBuenafe86% (36)

- Louisville Kentucky USA RamadanDocument1 pageLouisville Kentucky USA Ramadansm1234@att.netNo ratings yet

- Al Raheeq Ul MakhtoomDocument225 pagesAl Raheeq Ul MakhtoomMahmood AhmedNo ratings yet

- Chapter 1 - IntroductionDocument11 pagesChapter 1 - IntroductionshawktNo ratings yet

- Asyad Holding Company Balance Sheets and Income Statements 2007-2010Document9 pagesAsyad Holding Company Balance Sheets and Income Statements 2007-2010shawktNo ratings yet

- Test Bank Chapter1Document4 pagesTest Bank Chapter1shawktNo ratings yet

- Reasons for Conducting Qualitative ResearchDocument12 pagesReasons for Conducting Qualitative ResearchMa. Rhona Faye MedesNo ratings yet

- European Heart Journal Supplements Pathophysiology ArticleDocument8 pagesEuropean Heart Journal Supplements Pathophysiology Articleal jaynNo ratings yet

- Megneto TherapyDocument15 pagesMegneto TherapyedcanalNo ratings yet

- Hics 203-Organization Assignment ListDocument2 pagesHics 203-Organization Assignment ListslusafNo ratings yet

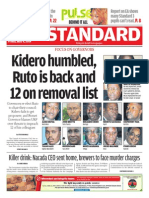

- The Standard 09.05.2014Document96 pagesThe Standard 09.05.2014Zachary Monroe100% (1)

- IPIECA - IOGP - The Global Distribution and Assessment of Major Oil Spill Response ResourcesDocument40 pagesIPIECA - IOGP - The Global Distribution and Assessment of Major Oil Spill Response ResourcesОлегNo ratings yet

- Kamera Basler Aca2500-20gmDocument20 pagesKamera Basler Aca2500-20gmJan KubalaNo ratings yet

- 45 - Altivar 61 Plus Variable Speed DrivesDocument130 pages45 - Altivar 61 Plus Variable Speed Drivesabdul aziz alfiNo ratings yet

- Ted Hughes's Crow - An Alternative Theological ParadigmDocument16 pagesTed Hughes's Crow - An Alternative Theological Paradigmsa46851No ratings yet

- 8510C - 15, - 50, - 100 Piezoresistive Pressure Transducer: Features DescriptionDocument3 pages8510C - 15, - 50, - 100 Piezoresistive Pressure Transducer: Features Descriptionedward3600No ratings yet

- Test Engleza 8Document6 pagesTest Engleza 8Adriana SanduNo ratings yet

- Supply Chain Management of VodafoneDocument8 pagesSupply Chain Management of VodafoneAnamika MisraNo ratings yet

- Nistha Tamrakar Chicago Newa VIIDocument2 pagesNistha Tamrakar Chicago Newa VIIKeshar Man Tamrakar (केशरमान ताम्राकार )No ratings yet

- JE Creation Using F0911MBFDocument10 pagesJE Creation Using F0911MBFShekar RoyalNo ratings yet

- REVISION OF INTEREST RATES ON DEPOSITS IN SANGAREDDYDocument3 pagesREVISION OF INTEREST RATES ON DEPOSITS IN SANGAREDDYSRINIVASARAO JONNALANo ratings yet

- ListDocument4 pagesListgeralda pierrelusNo ratings yet

- Report On Indian Airlines Industry On Social Media, Mar 2015Document9 pagesReport On Indian Airlines Industry On Social Media, Mar 2015Vang LianNo ratings yet

- Electronic Throttle ControlDocument67 pagesElectronic Throttle Controlmkisa70100% (1)

- Liebert PEX+: High Efficiency. Modular-Type Precision Air Conditioning UnitDocument19 pagesLiebert PEX+: High Efficiency. Modular-Type Precision Air Conditioning Unitjuan guerreroNo ratings yet

- Ôn tập và kiểm tra học kì Tiếng anh 6 ĐÁP ÁNDocument143 pagesÔn tập và kiểm tra học kì Tiếng anh 6 ĐÁP ÁNThùy TinaNo ratings yet

- MRI Week3 - Signal - Processing - TheoryDocument43 pagesMRI Week3 - Signal - Processing - TheoryaboladeNo ratings yet

- Mechanical Function of The HeartDocument28 pagesMechanical Function of The HeartKarmilahNNo ratings yet